当前位置:网站首页>[NLP] pre training model - gpt1

[NLP] pre training model - gpt1

2022-07-01 13:46:00 【Coriander Chrysanthemum】

background

I don't say much nonsense , First put out the links of three papers :GPT1:Improving Language Understanding by Generative Pre-Training、GPT2:Language Models are Unsupervised Multitask Learners、GPT3:Language Models are Few-Shot Learners. Teacher Li Mu is also B There is an introduction on the station GPT Video of model :GPT,GPT-2,GPT-3 Intensive reading 【 Intensive reading 】.

First of all, let's tidy up Transformer The time period after the emergence of some language models :Transformer:2017/06,GPT1:2018/06,BERT:2018/10,GTP2:2019/02,GPT3:2020/05. From the time sequence of the emergence of the model , It's really competitive .

GPT1

We know that NLP There are many tasks in the field , Such as Q & A , semantic similarity , Text classification, etc . Although a large number of unmarked text corpora are very rich , But there is little labeled data for learning these specific tasks , Then it is more difficult to carry out model training .GTP1 This paper is about , The language model is tested on different unlabeled text corpora Generative pre training , Then for each specific task Differential fine tuning , You can get good results on these tasks . Compared with the previous method ,GPT1 Use task aware input transformations during tuning , To achieve effective conversion , At the same time, make minimal changes to the model architecture . Here we can think of word2vec, Although it is also a pre training model , But we will still construct a neural network according to the task type , and GPT1 There is no need to .GPT1 The effectiveness of this approach is illustrated by its effectiveness on a wide range of benchmarks for natural language understanding .GTP1 In general, the task agnostic model is better than the differentiated training model using the architecture specially made for each task , In the study of 12 One of the tasks is 9 Three have significantly improved the technical level .

Before that , Of course, the most successful pre training model is word2vec. In this paper, we put forward 2 Major issues ,1. How to choose what kind of loss function in a given unlabeled corpus ? Of course, there will also be some tasks at this time, such as language models , Machine translation, etc , But there is no loss function that performs well in all tasks ;2. How to express the learned text Effectively transfer to downstream tasks ?

GPT1 Our approach is to use semi supervised on a large number of unlabeled corpus (semi-supervised) The method of learning a language model , Then fine tune the downstream tasks . So far , What we can think of better in the language model is RNN and Transformer That's it . Compared with RNN,Transformer The characteristics learned are more robust , The text explains , Compared with alternatives such as circular Networks , This model selection provides us with more structured memory , Used to deal with long-term dependencies in text , Thus, robust transmission performance is produced in different tasks (This model choice provides us with a more structured memory for handling long-term dependencies intext, compared to alternatives like recurrent networks, resulting in robust transfer performance acrossdiverse tasks. ) And it uses a task related input representation when migrating (During transfer, we utilize task-specific input adaptations derived from traversal-styleapproaches, which process structured text input as a single contiguous sequence of tokens)

Model structure

Model training includes two stages . The first stage is to learn high-capacity language models on a large corpus . Next is the fine-tuning stage , At this stage , Adjust the model to suit the discrimination task with marked data .

Unsupervised pre training

Existing unsupervised corpus data U = { u 1 , ⋯ , u n } {\mathcal U} = \{ u_1,\cdots,u_n \} U={ u1,⋯,un}, A standard language model is used to minimize the following likelihood function :

L 1 ( U ) = ∑ ⋅ i log P ( u i ∣ u i − k , … , u i − 1 ; Θ ) L_{1}(\mathcal{U})=\sum_{\cdot_{i}} \log P\left(u_{i} \mid u_{i-k}, \ldots, u_{i-1} ; \Theta\right) L1(U)=⋅i∑logP(ui∣ui−k,…,ui−1;Θ)

among Θ \Theta Θ Namely GPT1 Model , Then before use k k k individual token To predict number one i i i individual token Probability , That's the window size , It's a super parameter . So from the first 0 From the beginning to the end, all the results are added up to get the objective function L 1 L_1 L1, choice log The function is also to avoid the multiplication of probabilities until the final value is gone . In other words, the model can maximize the probability of corpus information .

In this article, we use multiple layers Transformer decoder. stay Transformer Of decoder Due to the existence of mask, when extracting features, you can only see the content in front of the current character , The following content is used to calculate the attention mechanism 0.

In the process of forecasting , Add a given context U = ( u k , ⋯ , u − 1 ) U=(u_{_k},\cdots,u_{-1}) U=(uk,⋯,u−1) It's context token Vector , n n n Express transformer decoder The number of layers , W e W_e We yes token embedding matrix , W p W_p Wp Is the position vector embedding matrix . Then the prediction context is U U U The process of the next word of is as follows :

h 0 = U W e + W p h l = t r a n s f o r m e r _ b l o c k ( h l − 1 ) , ∀ i ∈ [ 1 , n ] P ( u ) = s o f t m a x ( h n W e T ) h_0 = UW_e+W_p \\ h_l = transformer\_block(h_{l-1}),\forall { i\in[1, n]}\\ P(u)=softmax(h_nW_e^{T}) h0=UWe+Wphl=transformer_block(hl−1),∀i∈[1,n]P(u)=softmax(hnWeT)

Fine tuning based on supervision (fine-tuning)

Use L 1 L_1 L1 After training a model with the objective function formula , We can use the parameters of this model supervised Task . This model after training is what we call the pre training model . Suppose we have marked corpus C C C, Each sample is a sequence x 1 , ⋯ , x m x^1,\cdots,x^m x1,⋯,xm, The corresponding label is y y y. After the input data passes through our pre training model, we get a h l m h_l^m hlm. This h l m h_l^m hlm It is the input of a linear output layer added according to the task , The predicted result is y y y. The following formula :

P ( y ∣ x 1 , … , x m ) = softmax ( h l m W y ) P\left(y \mid x^{1}, \ldots, x^{m}\right)=\operatorname{softmax}\left(h_{l}^{m} W_{y}\right) P(y∣x1,…,xm)=softmax(hlmWy)

The loss function of the fine-tuning model is as follows :

L 2 ( C ) = ∑ ( x , y ) log P ( y ∣ x 1 , … , x m ) L_{2}(\mathcal{C})=\sum_{(x, y)} \log P\left(y \mid x^{1}, \ldots, x^{m}\right) L2(C)=(x,y)∑logP(y∣x1,…,xm)

The paper also says , If you add a sequence to predict the loss of the next word in the sequence , namely L 1 L_1 L1, Add to the loss function , It works better , That is, we have the following loss function :

L 3 ( C ) = L 2 ( C ) + λ ∗ L 1 ( C ) L_{3}(\mathcal{C})=L_{2}(\mathcal{C})+\lambda * L_{1}(\mathcal{C}) L3(C)=L2(C)+λ∗L1(C)

among λ \lambda λ It's a super parameter .

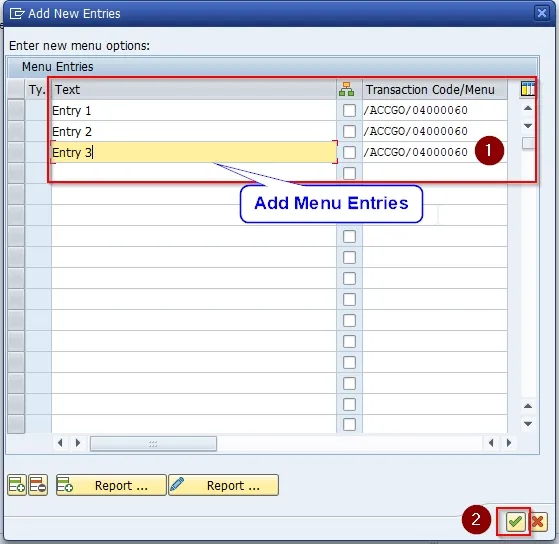

in general , Only additional parameters are needed in the trimmer W y W_y Wy. So how to NLP Task input is represented and processed using a pre training model ? Look at this picture first :

Take the text categorization task as an example , Add the specified delimiter, Then send it to Transformer, Next to that is Linear that will do . The second task is Entailment( contains ), That is what we introduced before NIU Mission . Other tasks are to splice according to the above figure .

in general , By constructing the input of different types of pre training models , To complete multiple NLP Mission , The pre training model itself will not change .

边栏推荐

- 当你真的学会DataBinding后,你会发现“这玩意真香”!

- 8 popular recommended style layout

- Camp division of common PLC programming software

- Summary of interview questions (1) HTTPS man in the middle attack, the principle of concurrenthashmap, serialVersionUID constant, redis single thread,

- 玩转MongoDB—搭建MongoDB集群

- 单工,半双工,全双工区别以及TDD和FDD区别

- Learning to use livedata and ViewModel will make it easier for you to write business

- Analysis report on the development trend and prospect scale of silicon intermediary industry in the world and China Ⓩ 2022 ~ 2027

- Global and Chinese styrene acrylic lotion polymer development trend and prospect scale prediction report Ⓒ 2022 ~ 2028

- 面试题目总结(1) https中间人攻击,ConcurrentHashMap的原理 ,serialVersionUID常量,redis单线程,

猜你喜欢

Understand the window query function of tdengine in one article

Liu Dui (fire line safety) - risk discovery in cloudy environment

Learning to use livedata and ViewModel will make it easier for you to write business

![[machine learning] VAE variational self encoder learning notes](/img/38/3eb8d9078b2dcbe780430abb15edcb.png)

[machine learning] VAE variational self encoder learning notes

介绍一种对 SAP GUI 里的收藏夹事务码管理工具增强的实现方案

玩转gRPC—不同编程语言间通信

Fiori 应用通过 Adaptation Project 的增强方式分享

What is the future development direction of people with ordinary education, appearance and family background? The career planning after 00 has been made clear

刘对(火线安全)-多云环境的风险发现

清华章毓晋老师新书:2D视觉系统和图像技术(文末送5本)

随机推荐

Investment analysis and prospect prediction report of global and Chinese dimethyl sulfoxide industry Ⓦ 2022 ~ 2028

Interpretation of R & D effectiveness measurement framework

介绍一种对 SAP GUI 里的收藏夹事务码管理工具增强的实现方案

Yan Rong looks at how to formulate a multi cloud strategy in the era of hybrid cloud

uni-app实现广告滚动条

B站被骂上了热搜。。

Self cultivation of open source programmers who contributed tens of millions of lines of code to shardingsphere and later became CEO

Applet - applet chart Library (F2 chart Library)

Flow management technology

Report on the 14th five year plan and future development trend of China's integrated circuit packaging industry Ⓓ 2022 ~ 2028

Google Earth engine (GEE) - Global Human Settlements grid data 1975-1990-2000-2014 (p2016)

Benefiting from the Internet, the scientific and technological performance of overseas exchange volume has returned to high growth

玩转gRPC—不同编程语言间通信

JVM有哪些类加载机制?

Report on the "14th five year plan" and scale prospect prediction of China's laser processing equipment manufacturing industry Ⓢ 2022 ~ 2028

Global and Chinese n-butanol acetic acid market development trend and prospect forecast report Ⓧ 2022 ~ 2028

C语言基础知识

Liu Dui (fire line safety) - risk discovery in cloudy environment

spark源码阅读总纲

Investment analysis and prospect prediction report of global and Chinese p-nitrotoluene industry Ⓙ 2022 ~ 2027