OpenGL Chapter 7 basic lighting

2022-06-11 03:34:00 【Carefree young heart】

The basic lighting here uses the Phong lighting model.

The Phong lighting model consists of three components: ambient, diffuse, and specular lighting.

Before we begin, one point is worth emphasizing: in the first half of this chapter, lighting is computed with fixed (untransformed) normals, and everything runs in world coordinates.

Later we will rewrite our vertex shader and fragment shader to work in view coordinates.

The advantage of view coordinates is that the position of the camera (the observer) is easy to obtain: it is always at (0, 0, 0) in view space.

Let's briefly review the coordinate spaces involved: local (object) space, world space, view space, clip space, and screen space.

1 Ambient light (Ambient)

Adding ambient lighting to a scene is very simple.

We multiply the color of the light by a small constant ambient factor, multiply that by the color of the object, and use the result as the fragment's color.
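The ambient step is a single multiply-and-tint. The sketch below ports that arithmetic to plain C++ so it can be checked on the CPU; `Vec3`, `scale`, `mul`, and `ambientColor` are hypothetical helpers standing in for GLSL's `vec3` operators, not part of the original project.

```cpp
// Minimal 3-component type standing in for GLSL vec3 / glm::vec3 (hypothetical helper).
struct Vec3 {
    float x, y, z;
};

// Scalar * vector, like `ambientStrength * lightColor` in GLSL.
Vec3 scale(float s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }

// Component-wise product, like `vec3 * vec3` in GLSL.
Vec3 mul(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }

// Ambient term: scale the light color by a small constant, then tint it
// with the object's color. This is the fragment color when only ambient
// lighting is active.
Vec3 ambientColor(Vec3 lightColor, Vec3 objectColor, float ambientStrength) {
    Vec3 ambient = scale(ambientStrength, lightColor);
    return mul(ambient, objectColor);
}
```

With the values used later in this article (white light, object color (1, 0.5, 0.5), strength 0.1), this yields (0.1, 0.05, 0.05): a dim version of the object's color.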

2 Diffuse reflection (Diffuse)

We know that the smaller the angle between two unit vectors, the closer their dot product is to 1.

When the angle between the two vectors is 90 degrees, the dot product becomes 0. The same applies to the angle θ between the surface normal and the light direction: the larger θ is, the less the light should contribute to the fragment's color.

So we need the normal direction and a direction vector pointing toward the light source. (In graphics, the "light direction" is taken as the vector from each fragment's world-space position to the light source; it is the opposite of the direction light physically travels, but it is convenient for computation.)

Taking the dot product of these two unit vectors gives us the diffuse factor.
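The diffuse factor described above can be sketched on the CPU as follows. It assumes a hypothetical `Vec3` type and helper functions mirroring GLSL's `normalize`, `dot`, and `max`; the clamp to 0 is what stops surfaces facing away from the light from receiving negative light.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-in for GLSL vec3.
struct Vec3 {
    float x, y, z;
};

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Scale v to unit length (assumes v is not the zero vector).
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Diffuse factor, mirroring the fragment shader:
//   lightDir = normalize(lightPos - fragPos)   (fragment -> light)
//   diff     = max(dot(normal, lightDir), 0)   (cosine of the angle, clamped)
float diffuseFactor(Vec3 normal, Vec3 fragPos, Vec3 lightPos) {
    Vec3 n = normalize(normal);
    Vec3 lightDir = normalize(sub(lightPos, fragPos));
    return std::max(dot(n, lightDir), 0.0f);
}
```

For a normal (0, 0, 1), a light straight above the fragment gives 1, a light at 45 degrees gives about 0.707, and a light in the surface plane or behind it gives 0.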

3 Specular lighting (Specular)

We calculate the reflection vector by reflecting the direction of the incident light about the normal vector.

Then we compute the angle between this reflection vector and the view direction: the smaller the angle between them, the stronger the specular contribution. As a result, when we look at the surface from the direction in which it reflects the incoming light, we see a small highlight.

The view vector is the extra variable we need for specular lighting; we can compute it from the observer's world-space position and the fragment's position. We then compute the specular intensity, multiply it by the color of the light source, and add it to the ambient and diffuse lighting.
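The specular steps can be sketched the same way. `reflect` below follows the formula GLSL documents for its built-in, I - 2 * dot(N, I) * N with a unit-length N; `Vec3`, `specularFactor`, and the other helpers are hypothetical names for this illustration. The shininess exponent is a parameter so that 32 can be compared with larger values such as 256.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-in for GLSL vec3.
struct Vec3 {
    float x, y, z;
};

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(float s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Same formula as GLSL reflect(I, N): I - 2 * dot(N, I) * N, N unit length.
Vec3 reflect(Vec3 incident, Vec3 n) {
    return sub(incident, scale(2.0f * dot(n, incident), n));
}

// Phong specular factor: pow(max(dot(viewDir, reflectDir), 0), shininess).
float specularFactor(Vec3 normal, Vec3 fragPos, Vec3 lightPos, Vec3 viewPos,
                     float shininess) {
    Vec3 n = normalize(normal);
    Vec3 lightDir = normalize(sub(lightPos, fragPos));   // fragment -> light
    Vec3 viewDir = normalize(sub(viewPos, fragPos));     // fragment -> camera
    // reflect() wants the incident ray (light -> fragment), hence -lightDir.
    Vec3 reflectDir = reflect(scale(-1.0f, lightDir), n);
    return std::pow(std::max(dot(viewDir, reflectDir), 0.0f), shininess);
}
```

When the camera sits exactly on the reflected ray the factor is 1; moving the camera off the ray makes it fall, and it falls much faster with shininess 256 than with 32, which is why a higher shininess gives a smaller highlight.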

Note that so far we work in world coordinates rather than in view space.

We chose to do the lighting calculations in world space, but most people prefer to do them in view space.

The advantage of view space is that the observer is always at (0, 0, 0), so you get the observer's position for free.

However, if learning is the goal, I find lighting in world space more intuitive.

If you still want to calculate lighting in view space, you need to transform all the relevant vectors with the view matrix (and don't forget to update the normal matrix as well).
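The view-space advice above can be checked with a small CPU-side sketch. It assumes a hypothetical simplified view transform (translate by -eye, then rotate about Y; a lookAt matrix is likewise a rotation plus a translation) and hypothetical names `Vec3`, `RigidView`, and `diffuseTerm`. It shows that the camera lands at the origin and that dot-product lighting terms are unchanged when positions and normals are transformed consistently.

```cpp
#include <cmath>

// Hypothetical stand-in for GLSL vec3.
struct Vec3 {
    float x, y, z;
};

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Rotate v about the Y axis by `angle` radians.
Vec3 rotateY(Vec3 v, float angle) {
    float c = std::cos(angle), s = std::sin(angle);
    return {c * v.x + s * v.z, v.y, -s * v.x + c * v.z};
}

// Hypothetical rigid view transform: move the camera to the origin, then rotate.
struct RigidView {
    Vec3 eye;          // camera position in world space
    float yawRadians;  // camera orientation (rotation about Y)

    // Positions (w = 1): translated and rotated.
    Vec3 point(Vec3 p) const { return rotateY(sub(p, eye), yawRadians); }
    // Directions and normals (w = 0): rotated only. For a pure rotation R,
    // the normal matrix transpose(inverse(R)) equals R itself.
    Vec3 direction(Vec3 d) const { return rotateY(d, yawRadians); }
};

// Unclamped diffuse cosine: depends only on a dot product of unit vectors.
float diffuseTerm(Vec3 normal, Vec3 fragPos, Vec3 lightPos) {
    return dot(normalize(normal), normalize(sub(lightPos, fragPos)));
}
```

Because rotations and translations preserve angles between vectors, the world-space and view-space results agree; in view space the direction to the camera is simply the negated fragment position.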

//cubeShader.fs

#version 330 core

out vec4 FragColor;

uniform vec3 objectColor;// The color of the object

uniform vec3 lightColor;// The color of the light source

uniform vec3 lightPos;// You need the position of the light source to calculate the diffuse reflection

uniform vec3 viewPos;// Camera position in world space, needed for the specular term

in vec3 Normal;

in vec3 FragPos;

void main()

{

//ambient

float ambientStrength = 0.1;// Ambient light intensity coefficient

vec3 ambient = ambientStrength * lightColor;// The ambient light = Ambient light intensity * The color of the light source

// diffuse

vec3 norm = normalize(Normal);// Use the unit normal: interpolation across the triangle can change the normal's length

vec3 lightDir = normalize(lightPos - FragPos);// Direction from the fragment toward the light. By convention in graphics the light direction points from the surface to the light; it has no physical meaning but simplifies the math

float diff = max(dot(norm, lightDir), 0.0);// max() keeps the result non-negative when the light is behind the surface

vec3 diffuse = diff * lightColor;// Finally, multiply by the color of the light source to get the diffuse effect

// Specular reflection

vec3 viewDir = normalize(viewPos - FragPos);// Direction from the fragment toward the camera

vec3 reflectDir = reflect(-lightDir, norm);// lightDir points from the fragment to the light, but reflect() expects the incident direction (from the light toward the fragment), so we negate it; the normal is the second argument

float specularStrength = 0.5;// Specular intensity

float spec = pow(max(dot(viewDir, reflectDir), 0.0), 32);// Cosine of the angle between the view direction and the reflection vector, raised to the 32nd power (32 = 2^5; a higher power such as 2^8 = 256 is also common)

// The exponent 32 is the shininess of the highlight. The more reflective an object is, the better it reflects light, the less it scatters, and the smaller the highlight.

vec3 specular = specularStrength * spec * lightColor;// specular = specular strength * specular factor * light color

vec3 result = (ambient + diffuse + specular) * objectColor;// final color = (ambient + diffuse + specular) * the object's own color

FragColor = vec4(result, 1.0);

}

//cubeShader.vs

#version 330 core

layout (location = 0) in vec3 aPos;

layout (location = 1) in vec3 aNormal;// Tell the GPU that the attribute at location 1 is the normal vector

out vec3 Normal;

out vec3 FragPos;

uniform vec3 viewPos;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0);

FragPos = vec3(model * vec4(aPos, 1.0));// Fragment position in world space: the vertex position after the model transform

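Normal = aNormal;// Passed through unchanged: only correct while the model matrix has no rotation or non-uniform scaling (see the normal matrix below)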

}

//lightShader.fs

#version 330 core

out vec4 FragColor;

void main()

{

FragColor = vec4(1.0);

}

//lightShader.vs

#version 330 core

layout (location = 0) in vec3 aPos;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0);

}

#include "Shader.h"

#define STB_IMAGE_IMPLEMENTATION

#include "stb_image.h"

#include <glad/glad.h>

#include <GLFW/glfw3.h>

#include <glm/glm.hpp>

#include <glm/gtc/matrix_transform.hpp>

#include <glm/gtc/type_ptr.hpp>

#include <iostream>

void mouse_callback(GLFWwindow* window, double xpos, double ypos);

void scroll_callback(GLFWwindow* window, double xoffset, double yoffset);

void processInput(GLFWwindow* window);

// settings

const unsigned int SCR_WIDTH = 800; // Define the size of screen space

const unsigned int SCR_HEIGHT = 600;

glm::vec3 cameraPos = glm::vec3(0.0f, 0.0f, 3.0f);

glm::vec3 cameraFront = glm::vec3(0.0f, 0.0f, -1.0f);

glm::vec3 cameraUp = glm::vec3(0.0f, 1.0f, 0.0f);

bool firstMouse = true;

float yaw = -90.0f; // Yaw is initialized to -90.0 degree , Because the yaw is 0.0 Will result in a direction vector pointing to the right , So we initially rotated a little to the left .

float pitch = 0.0f; // Initialize pitch angle

float lastX = 800.0f / 2.0;// Start the cursor at the center of the screen (half the screen size)

float lastY = 600.0 / 2.0;

float fov = 45.0f;// Initial field angle

// timing

float deltaTime = 0.0f; // time between current frame and last frame

float lastFrame = 0.0f;

//glm::vec3 lightPos(1.2f, 1.0f, 2.0f);


float vertices[] = {

// positions          // normals

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

-0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

-0.5f, 0.5f, 0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, -0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, 0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, 0.5f, -1.0f, 0.0f, 0.0f,

0.5f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f,

0.5f, 0.5f, -0.5f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, -0.5f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, -0.5f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, 0.5f, 1.0f, 0.0f, 0.0f,

0.5f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f,

0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f,

0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f,

0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f,

-0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f,

-0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f,

-0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f

};

// World-space positions (translations) for the cubes; this program only uses cubePositions[0] and cubePositions[2]

glm::vec3 cubePositions[] = {

glm::vec3(0.0f, 0.0f, 0.0f),

glm::vec3(2.0f, 5.0f, -15.0f),

glm::vec3(-1.5f, -2.2f, -2.5f),

glm::vec3(-3.8f, -2.0f, -12.3f),

glm::vec3(2.4f, -0.4f, -3.5f),

glm::vec3(-1.7f, 3.0f, -7.5f),

glm::vec3(1.3f, -2.0f, -2.5f),

glm::vec3(1.5f, 2.0f, -2.5f),

glm::vec3(1.5f, 0.2f, -1.5f),

glm::vec3(-1.3f, 1.0f, -1.5f)

};

int main()

{

//Glfw: Initialization and configuration

// ------------------------------

glfwInit();

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

#ifdef __APPLE__

glfwWindowHint(GLFW_OPENGL_FORWARD_COMPAT, GL_TRUE);

#endif

//glfw Window creation

// --------------------

GLFWwindow* window = glfwCreateWindow(SCR_WIDTH, SCR_HEIGHT, "LearnOpenGL", NULL, NULL);// Use the size of the defined screen space

if (window == NULL)

{

std::cout << "Failed to create GLFW window" << std::endl;

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

glfwSetCursorPosCallback(window, mouse_callback);

glfwSetScrollCallback(window, scroll_callback);

// Tell GLFW to hide the cursor and capture it.

glfwSetInputMode(window, GLFW_CURSOR, GLFW_CURSOR_DISABLED);

// Load all OpenGL function pointers

// ---------------------------------------

if (!gladLoadGLLoader((GLADloadproc)glfwGetProcAddress))

{

std::cout << "Failed to initialize GLAD" << std::endl;

return -1;

}

// Configure global opengl state

// -----------------------------

glEnable(GL_DEPTH_TEST);

// Build and compile our shader Program

// ------------------------------------

Shader ourShader("shaderSampler.vs", "shaderSampler.fs");

Shader modelShader("cubeShader.vs", "cubeShader.fs");

Shader lightShader("lightShader.vs", "lightShader.fs");

// Set up vertex data (and buffers) and configure vertex attributes

// ------------------------------------------------------------------

unsigned int VBO, VAO, VAO2;

glGenVertexArrays(1, &VAO);

glGenBuffers(1, &VBO);

glBindBuffer(GL_ARRAY_BUFFER, VBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);

glBindVertexArray(VAO);// The light cube uses VAO (positions only)

// Location properties

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)0);// The lamp only needs the first three floats (the position) of each vertex; the 6-float stride skips the normal

glEnableVertexAttribArray(0);

// The lit cube also needs normals, which its vertex shader reads via layout (location = 1) in vec3 aNormal;

glGenVertexArrays(1, &VAO2);// The lit cube uses VAO2 (positions and normals)

glBindVertexArray(VAO2);

glBindBuffer(GL_ARRAY_BUFFER, VBO);

// Location properties

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)0);

glEnableVertexAttribArray(0);

// Normal attributes

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)(3 * sizeof(float)));

glEnableVertexAttribArray(1);

// Load and create a texture

// -------------------------

unsigned int texture1, texture2;

// texture 1

// ---------

glGenTextures(1, &texture1);

glBindTexture(GL_TEXTURE_2D, texture1);

// Set the texture wrapping Parameters

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

// Set the texture filtering Parameters

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

// Load the image, create the texture, and generate mipmaps

int width, height, nrChannels;

stbi_set_flip_vertically_on_load(true); // Tell stb_image.h to flip loaded textures along the y-axis.

// The flip is needed because image data starts at the top-left corner, while OpenGL's texture coordinate (0,0) is at the bottom-left.

unsigned char* data = stbi_load("container.jpg", &width, &height, &nrChannels, 0);

if (data)

{

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, data);

glGenerateMipmap(GL_TEXTURE_2D);

}

else

{

std::cout << "Failed to load texture" << std::endl;

}

stbi_image_free(data);

// texture 2

// ---------

glGenTextures(1, &texture2);

glBindTexture(GL_TEXTURE_2D, texture2);

// Set the texture wrapping Parameters

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

// Set the texture filtering Parameters

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

// load image, create texture and generate mipmaps

//data = stbi_load(("aotu.jpg"), &width, &height, &nrChannels, 0);

//data = stbi_load(("shanshui.jpg"), &width, &height, &nrChannels, 0);

data = stbi_load(("awesomeface.png"), &width, &height, &nrChannels, 0);

if (data)

{

// Images with an alpha (A) channel, such as this PNG, must be uploaded as GL_RGBA; otherwise the texture will not display correctly

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, data);

glGenerateMipmap(GL_TEXTURE_2D);

}

else

{

std::cout << "Failed to load texture" << std::endl;

}

stbi_image_free(data);

// Tell OpenGL which texture unit each sampler belongs to (only needs to be done once)

// -------------------------------------------------------------------------------------------

ourShader.use();

ourShader.setInt("texture1", 0);

ourShader.setInt("texture2", 1);

// ourShader.setVec3("lightPos", lightPos);

// Render loop

// -----------

while (!glfwWindowShouldClose(window))

{

float currentFrame = glfwGetTime();

deltaTime = currentFrame - lastFrame;

lastFrame = currentFrame;

// input

processInput(window);

// Rendering

// ------

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT); // Clear the previous frame's color buffer and depth buffer

// bind textures on corresponding texture units

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, texture1);

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, texture2);

// Activate shader

float angle = 20.0f * 0 * (float)glfwGetTime();// Time-based rotation angle; the * 0 currently disables the rotation

glm::mat4 projection = glm::mat4(1.0f);

glm::mat4 view = glm::mat4(1.0f);

glm::mat4 model = glm::mat4(1.0f);

glm::vec3 lightPos = glm::vec3(cubePositions[2]);// Light source location

ourShader.use();

projection = glm::perspective(glm::radians(fov), (float)SCR_WIDTH / (float)SCR_HEIGHT, 0.1f, 100.0f);// Projection matrix. Parameters: field of view, aspect ratio, near and far plane distances

view = glm::lookAt(cameraPos, cameraPos + cameraFront, cameraUp);// lookAt matrix. Parameters: camera position, target position, up vector

model = glm::translate(model, cubePositions[0]);// Move the cube to its world-space position

model = glm::rotate(model, glm::radians(angle), glm::vec3(1.0f, 0.3f, 0.5f));

lightShader.use();

lightPos.x = 1.0f + sin(glfwGetTime()) * 2.0f;

lightPos.y = sin(glfwGetTime() / 2.0f) * 1.0f;

model = glm::mat4(1.0f);

model = glm::translate(model, lightPos);// Move the lamp cube to the (animated) light position

angle = 20.0f * 2 * (float)glfwGetTime();// Time-based angle; the rotation below is multiplied by 0, so it has no effect

model = glm::rotate(model, glm::radians(angle) * 0, glm::vec3(1.0f, 0.3f, 0.5f));

lightShader.setMat4("model", model);

lightShader.setMat4("projection", projection);

lightShader.setMat4("view", view);

glBindVertexArray(VAO);

glDrawArrays(GL_TRIANGLES, 0, 36);

modelShader.use();

model = glm::mat4(1.0f);

model = glm::translate(model, cubePositions[0]);// Move the cube to its world-space position

angle = 20.0f * 1 * (float)glfwGetTime();// Time-based angle (disabled below by * 0)

model = glm::rotate(model, glm::radians(angle) * 0, glm::vec3(1.0f, 0.5f, 0.5f));// glm::rotate(matrix, angle in radians, rotation axis). Rotation is disabled here; enabling it would also require transforming the normals

modelShader.setMat4("model", model);// Set the model transformation matrix

modelShader.setMat4("projection", projection);// Set the projection change matrix

modelShader.setMat4("view", view);// Set the view change matrix

modelShader.setVec3("objectColor", 1.0f, 0.5f, 0.5f);// Set the color of the object

modelShader.setVec3("lightColor", 1.0f, 1.0f, 1.0f);// Set the color of the light source (white)

modelShader.setVec3("lightPos", lightPos);// Set the light source position

modelShader.setVec3("viewPos", cameraPos);// Set the position of the camera to the viewing position

glBindVertexArray(VAO2);

glDrawArrays(GL_TRIANGLES, 0, 36);

glfwSwapBuffers(window);

glfwPollEvents();

}

glDeleteVertexArrays(1, &VAO);
glDeleteVertexArrays(1, &VAO2);

glDeleteBuffers(1, &VBO);

glfwTerminate();

return 0;

}

void processInput(GLFWwindow* window)

{

if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)

glfwSetWindowShouldClose(window, true);

float cameraSpeed = 5.5f * deltaTime; // adjust accordingly

if (glfwGetKey(window, GLFW_KEY_W) == GLFW_PRESS)

cameraPos += cameraSpeed * cameraFront;

if (glfwGetKey(window, GLFW_KEY_S) == GLFW_PRESS)

cameraPos -= cameraSpeed * cameraFront;

if (glfwGetKey(window, GLFW_KEY_A) == GLFW_PRESS)

cameraPos -= glm::normalize(glm::cross(cameraFront, cameraUp)) * cameraSpeed;

if (glfwGetKey(window, GLFW_KEY_D) == GLFW_PRESS)

cameraPos += glm::normalize(glm::cross(cameraFront, cameraUp)) * cameraSpeed;

if (glfwGetKey(window, GLFW_KEY_SPACE) == GLFW_PRESS)// The space bar moves the camera up along the Y axis

cameraPos += cameraUp * cameraSpeed;

//cameraPos.y = 0.0f;

// Uncommenting the line above pins the camera's Y coordinate to 0, turning this into an FPS-style camera that can only move on the XZ plane

}

void mouse_callback(GLFWwindow* window, double xposIn, double yposIn)

{

float xpos = static_cast<float>(xposIn);

float ypos = static_cast<float>(yposIn);

// firstMouse is initially true.
// On the very first callback we store the current cursor position as lastX/lastY.
// Without this, the first offset would be measured from the screen center to
// wherever the cursor entered the window, which makes the camera jump.

if (firstMouse)

{

lastX = xpos;

lastY = ypos;

firstMouse = false;

}

// Then, in the mouse callback function, we calculate the offset of the mouse position between the current frame and the previous frame :

float xoffset = xpos - lastX;

float yoffset = lastY - ypos; // reversed, since screen y-coordinates increase from top to bottom

lastX = xpos;

lastY = ypos;

float sensitivity = 0.1f; // mouse sensitivity; adjust to taste

xoffset *= sensitivity;

yoffset *= sensitivity;

yaw += xoffset;

pitch += yoffset;

// Clamp the pitch so the view cannot flip over the vertical

if (pitch > 89.0f)

pitch = 89.0f;

if (pitch < -89.0f)

pitch = -89.0f;

// Pitch: rotating the direction up or down, out of the xz plane.
// The y component becomes sin(pitch), and the part of the vector that remains in the
// xz plane shrinks to length cos(pitch), so x and z are both scaled by cos(pitch):
//direction.x = cos(glm::radians(pitch));
//direction.y = sin(glm::radians(pitch)); // Note that we first convert the angle to radians
//direction.z = cos(glm::radians(pitch));
//
// Yaw: turning left or right within the xz plane. Only x and z change:
//direction.x = cos(glm::radians(yaw));
//direction.y stays unchanged
//direction.z = sin(glm::radians(yaw));
//
// The formula below combines both steps: the yaw direction in the xz plane
// is scaled by cos(pitch), and the y component is sin(pitch)

glm::vec3 front;

front.x = cos(glm::radians(yaw)) * cos(glm::radians(pitch));

front.y = sin(glm::radians(pitch));

front.z = sin(glm::radians(yaw)) * cos(glm::radians(pitch));

cameraFront = glm::normalize(front);

}

// glfw: whenever the mouse scroll wheel scrolls, this callback is called

// ----------------------------------------------------------------------

void scroll_callback(GLFWwindow* window, double xoffset, double yoffset)

{

//yoffset is the amount the scroll wheel moved vertically

if (fov >= 1.0f && fov <= 45.0f)

fov -= (float)yoffset;

// Clamp the field of view to the range [1, 45] degrees

if (fov < 1.0f)

fov = 1.0f;

if (fov > 45.0f)

fov = 45.0f;

}
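The yaw/pitch derivation commented inside `mouse_callback` can be checked in isolation. The sketch below, using a hypothetical `Vec3` and hypothetical helper names, shows why x and z are multiplied by cos(pitch): tilting the vector up by `pitch` leaves a horizontal part of length cos(pitch), and yaw only decides which way that horizontal part points in the xz plane.

```cpp
#include <cmath>

// Hypothetical stand-in for glm::vec3.
struct Vec3 {
    float x, y, z;
};

// Degrees to radians (what glm::radians does).
float toRadians(float degrees) { return degrees * 3.14159265358979f / 180.0f; }

// Same formula as mouse_callback. Pitch tilts the vector out of the xz plane:
// y = sin(pitch), and the horizontal part shrinks to length cos(pitch).
// Yaw then chooses the direction of that horizontal part: (cos(yaw), sin(yaw)).
Vec3 frontFromAngles(float yawDeg, float pitchDeg) {
    float yaw = toRadians(yawDeg);
    float pitch = toRadians(pitchDeg);
    float horizontal = std::cos(pitch); // length of the projection onto the xz plane
    return {std::cos(yaw) * horizontal, std::sin(pitch), std::sin(yaw) * horizontal};
}

// Clamp pitch as the callback does, so the view cannot flip over the vertical.
float clampPitch(float pitchDeg) {
    if (pitchDeg > 89.0f) return 89.0f;
    if (pitchDeg < -89.0f) return -89.0f;
    return pitchDeg;
}
```

With yaw = -90 and pitch = 0 this returns (0, 0, -1), matching the initial `cameraFront`. For any angles the result already has unit length, since cos²(pitch)(cos²(yaw) + sin²(yaw)) + sin²(pitch) = 1; the `glm::normalize` in the callback only guards against rounding.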

If everything runs correctly, the light source moves over time and the lighting on the cube changes with it.

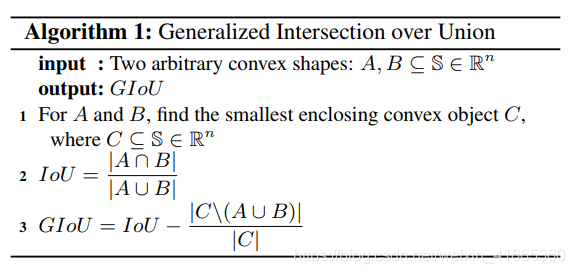

Now let's introduce the normal matrix.

What is the normal matrix, and why do we need it?

For an object that is scaled uniformly, the scaling only changes the length of its normals, not their direction, so the effect is easily removed by normalizing them.

For non-uniform scaling, however, the transformed normals are no longer perpendicular to their surfaces, which breaks the lighting.

[Figure: after non-uniform scaling, a normal transformed by the model matrix is no longer perpendicular to the surface.]

The same problem arises in view coordinates: multiplying a normal by the view * model matrix can change its direction incorrectly, so we need a normal matrix to cancel the effect of non-uniform transformations.

[Figure: the desired result, where the normal stays perpendicular to the surface, next to the actual result of non-uniform scaling.]

In other words, we want the transformed normal to always remain perpendicular to the transformed tangent.

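Now suppose the tangent vector is transformed by the matrix $M$, so the transformed tangent is $T' = MT$. Likewise, suppose we have a correct normal transformation matrix $G$, so the transformed normal is $N' = GN$. After the transformation, the dot product of $N'$ and $T'$ must still equal zero, which gives the equation:

$$N' \cdot T' = (GN) \cdot (MT) = 0$$

A dot product of two vectors is equivalent to a matrix product in which the first vector is transposed (a column vector is an $n \times 1$ matrix, so its transpose is a $1 \times n$ row matrix):

$$(GN) \cdot (MT) = (GN)^{T}(MT) = 0$$

Since the transpose of a product equals the product of the transposes in reverse order, $(GN)^{T} = N^{T}G^{T}$, and we get:

$$N^{T}G^{T}MT = 0$$

We already know that $N^{T}T = N \cdot T = 0$ for the original vectors, so the equation holds whenever $G^{T}M = I$, where $I$ is the identity matrix:

$$N^{T}G^{T}MT = N^{T}IT = N^{T}T = 0$$

Solving $G^{T}M = I$ gives $G^{T} = M^{-1}$, that is:

$$G = \left(M^{-1}\right)^{T}$$

In the vertex shader, with $M$ being the view * model matrix, this becomes: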

Normal = mat3(transpose(inverse(view * model))) * aNormal;
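The result G = transpose(inverse(M)) can be verified numerically. The sketch below uses hypothetical `Vec3`/`Mat3` types and a cofactor-based `inverse` (not a library API). It applies a shear plus non-uniform scale M to a tangent T = (1, -1, 0) and its normal N = (1, 1, 0): transforming N with M itself breaks perpendicularity, while `transpose(inverse(M))` preserves it.

```cpp
// Hypothetical stand-ins for GLSL vec3 / mat3 (row-major: m[row][col]).
struct Vec3 {
    float x, y, z;
};
struct Mat3 {
    float m[3][3];
};

// Matrix * column vector.
Vec3 mul(const Mat3& a, Vec3 v) {
    return {a.m[0][0] * v.x + a.m[0][1] * v.y + a.m[0][2] * v.z,
            a.m[1][0] * v.x + a.m[1][1] * v.y + a.m[1][2] * v.z,
            a.m[2][0] * v.x + a.m[2][1] * v.y + a.m[2][2] * v.z};
}

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Mat3 transpose(const Mat3& a) {
    Mat3 t{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            t.m[i][j] = a.m[j][i];
    return t;
}

// Inverse via cofactors: inverse = transpose(cofactors) / det. Assumes det != 0.
Mat3 inverse(const Mat3& a) {
    const auto& m = a.m;
    float c[3][3];
    c[0][0] = m[1][1] * m[2][2] - m[1][2] * m[2][1];
    c[0][1] = m[1][2] * m[2][0] - m[1][0] * m[2][2];
    c[0][2] = m[1][0] * m[2][1] - m[1][1] * m[2][0];
    c[1][0] = m[0][2] * m[2][1] - m[0][1] * m[2][2];
    c[1][1] = m[0][0] * m[2][2] - m[0][2] * m[2][0];
    c[1][2] = m[0][1] * m[2][0] - m[0][0] * m[2][1];
    c[2][0] = m[0][1] * m[1][2] - m[0][2] * m[1][1];
    c[2][1] = m[0][2] * m[1][0] - m[0][0] * m[1][2];
    c[2][2] = m[0][0] * m[1][1] - m[0][1] * m[1][0];
    float det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
    Mat3 inv{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            inv.m[i][j] = c[j][i] / det; // adjugate = transposed cofactor matrix
    return inv;
}

// Normal matrix G = transpose(inverse(M)), as in the vertex shader.
Mat3 normalMatrix(const Mat3& model) { return transpose(inverse(model)); }
```

In GLSL, calling `inverse` per vertex is relatively expensive; a common alternative is to compute the normal matrix once per object on the CPU, for example `glm::transpose(glm::inverse(glm::mat3(view * model)))`, and pass it to the shader as a uniform.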

With the normal matrix in place, we can now compute basic lighting in view coordinates:

//cubeShader.fs

#version 330 core

out vec4 FragColor;

in vec3 FragPos;

in vec3 Normal;

in vec3 LightPos; // The position of the light source in view coordinates

uniform vec3 lightColor;// Light color, set in the main program

uniform vec3 objectColor;// Object color, set in the main program

void main()

{

// ambient Ambient light

float ambientStrength = 0.1;

vec3 ambient = ambientStrength * lightColor; // Ambient strength times the light color

// diffuse Diffuse reflection

vec3 norm = normalize(Normal);// The unit vector of the normal

vec3 lightDir = normalize(LightPos - FragPos);// Direction from the fragment toward the light (a convention that simplifies the math; light physically travels the other way)

float diff = max(dot(norm, lightDir), 0.0);// Cosine of the angle between the normal and the light direction: the smaller the angle, the brighter the surface (think of the sun directly overhead at noon). max() clamps negative cosines, which have no meaning here

vec3 diffuse = diff * lightColor;

// specular Specular highlights

float specularStrength = 0.5;

vec3 viewDir = normalize(-FragPos); // The camera sits at (0,0,0) in view space, so 0 - FragPos = -FragPos is the direction from the fragment to the camera

vec3 reflectDir = reflect(-lightDir, norm); // reflect() expects the incident direction, pointing from the light toward the fragment

// so we negate lightDir, which points from the fragment to the light

float spec = pow(max(dot(viewDir, reflectDir), 0.0), 32);// Dot product of the view direction and the reflected light, raised to the power 32. The exponent 32 is the shininess of the highlight: the more reflective the object, the less it scatters light and the smaller the highlight

vec3 specular = specularStrength * spec * lightColor;

vec3 result = (ambient + diffuse + specular) * objectColor;

FragColor = vec4(result, 1.0);

}

//cubeShader.vs

#version 330 core

layout (location = 0) in vec3 aPos;// Set the first attribute to vertex position

layout (location = 1) in vec3 aNormal;// Set the second attribute to normal

out vec3 FragPos;// Fragment position in view space

out vec3 Normal;// Output the normal vector after the normal matrix changes

out vec3 LightPos;// Output the position of a light source in view coordinates

uniform vec3 lightPos; // World-space light position, set with modelShader.setVec3("lightPos", lightPos);

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0);

FragPos = vec3(view * model * vec4(aPos, 1.0));// The position of the object in view coordinates

Normal = mat3(transpose(inverse(view * model))) * aNormal;// View coordinates The normal after the normal matrix changes

LightPos = vec3(view * vec4(lightPos, 1.0)); // The position of the light source in view coordinates

}
