当前位置:网站首页>Three ways to operate tables in Apache iceberg

Three ways to operate tables in Apache iceberg

2020-11-09 07:35:00 【osc_tjee7s】

stay Apache Iceberg There are many ways to create tables in , Among them is the use of Catalog How or how to implement org.apache.iceberg.Tables Interface . Let's briefly introduce how to use ..

If you want to know in time Spark、Hadoop perhaps HBase Related articles , Welcome to WeChat official account. : iteblog_hadoop

List of articles

Use Hive catalog

You can tell by the name ,Hive catalog It's through connections Hive Of MetaStore, hold Iceberg The table is stored in it , Its implementation class is org.apache.iceberg.hive.HiveCatalog, Here is the passage sparkContext Medium hadoopConfiguration To get HiveCatalog The way :

import org.apache.iceberg.hive.HiveCatalog;

Catalog catalog = new HiveCatalog(spark.sparkContext().hadoopConfiguration());Catalog The interface defines the method of operation table , such as createTable, loadTable, renameTable, as well as dropTable. If you want to create a table , We need to define TableIdentifier, Tabular Schema And partition information , as follows :

import org.apache.iceberg.Table;

import org.apache.iceberg.catalog.TableIdentifier;

import org.apache.iceberg.PartitionSpec;

import org.apache.iceberg.Schema;

TableIdentifier name = TableIdentifier.of("default", "iteblog");

Schema schema = new Schema(

Types.NestedField.required(1, "id", Types.IntegerType.get()),

Types.NestedField.optional(2, "name", Types.StringType.get()),

Types.NestedField.required(3, "age", Types.IntegerType.get()),

Types.NestedField.optional(4, "ts", Types.TimestampType.withZone())

);

PartitionSpec spec = PartitionSpec.builderFor(schema).year("ts").bucket("id", 2).build();

Table table = catalog.createTable(name, schema, spec);Use Hadoop catalog

Hadoop catalog Do not rely on Hive MetaStore To store metadata , Its use HDFS Or a similar file system to store metadata . Be careful , File systems need to support atomic renaming operations , So the local file system (local FS)、 Object storage (S3、OSS etc. ) To store Apache Iceberg Metadata is not secure . Here's how to get HadoopCatalog Example :

import org.apache.hadoop.conf.Configuration;

import org.apache.iceberg.hadoop.HadoopCatalog;

Configuration conf = new Configuration();

String warehousePath = "hdfs://www.iteblog.com:8020/warehouse_path";

HadoopCatalog catalog = new HadoopCatalog(conf, warehousePath);and Hive catalog equally ,HadoopCatalog Also realize Catalog Interface , So it also implements various operations of the table , Include createTable, loadTable, as well as dropTable. Here's how to use HadoopCatalog To create Iceberg Example :

import org.apache.iceberg.Table;

import org.apache.iceberg.catalog.TableIdentifier;

TableIdentifier name = TableIdentifier.of("logging", "logs");

Table table = catalog.createTable(name, schema, spec);Use Hadoop tables

Iceberg It also supports storing in HDFS Table in table of contents . and Hadoop catalog equally , File systems need to support atomic renaming operations , So the local file system (local FS)、 Object storage (S3、OSS etc. ) To store Apache Iceberg Metadata is not secure . Tables stored in this way do not support various operations of the table , For example, it doesn't support renameTable. Here's how to get HadoopTables Example :

import org.apache.hadoop.conf.Configuration;

import org.apache.iceberg.hadoop.HadoopTables;

import org.apache.iceberg.Table;

Configuration conf = new Configuration():

HadoopTables tables = new HadoopTables(conf);

Table table = tables.create(schema, spec, table_location);stay Spark in , It supports HiveCatalog、HadoopCatalog as well as HadoopTables Way to create 、 Load table . If the incoming table is not a path , select HiveCatalog, otherwise Spark It will be inferred that the table is stored in HDFS Upper .

Of course ,Apache Iceberg The storage place of table metadata is pluggable , So we can customize the way metadata is stored , such as AWS Just one for the community issue, Its handle Apache Iceberg Metadata in is stored in glue Inside , See #1633、#1608.

In addition to this blog post , It's all original !Please add : Reprinted from Past memory (https://www.iteblog.com/)

Link to this article : 【Apache Iceberg There are three ways to operate the table 】(https://www.iteblog.com/archives/9886.html)

版权声明

本文为[osc_tjee7s]所创,转载请带上原文链接,感谢

边栏推荐

- 14.Kubenetes简介

- 平台商业化能力的另一种表现形式SAAS

- Teacher Liang's small class

- A bunch of code forgot to indent? Shortcut teach you carefree!

- How does pipedrive support quality publishing with 50 + deployments per day?

- When we talk about data quality, what are we talking about?

- Investigation of solutions to rabbitmq cleft brain problem

- 链表

- Factory pattern pattern pattern (simple factory, factory method, abstract factory pattern)

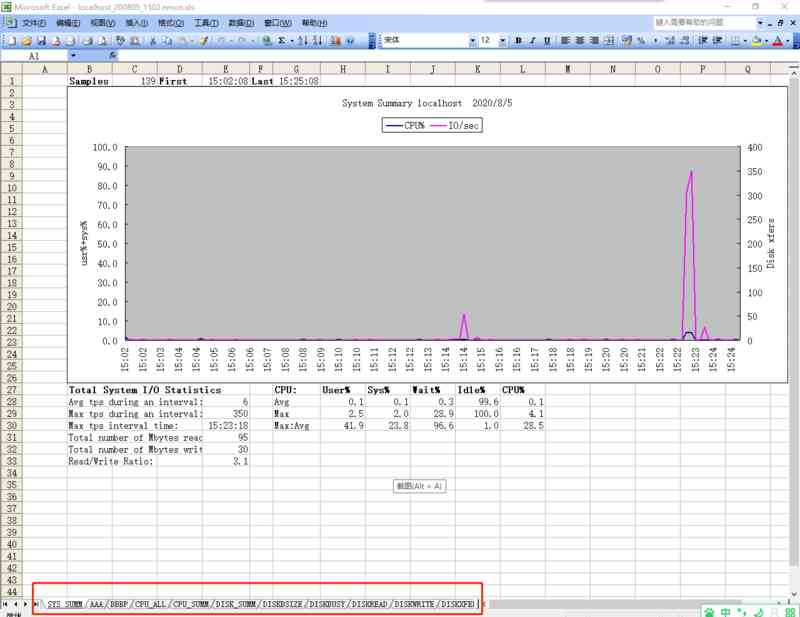

- 服务器性能监控神器nmon使用介绍

猜你喜欢

C + + adjacency matrix

2020,Android开发者打破寒冬的利器是什么?

Sublime text3 插件ColorPicker(调色板)不能使用快捷键的解决方法

B. protocal has 7000eth assets in one week!

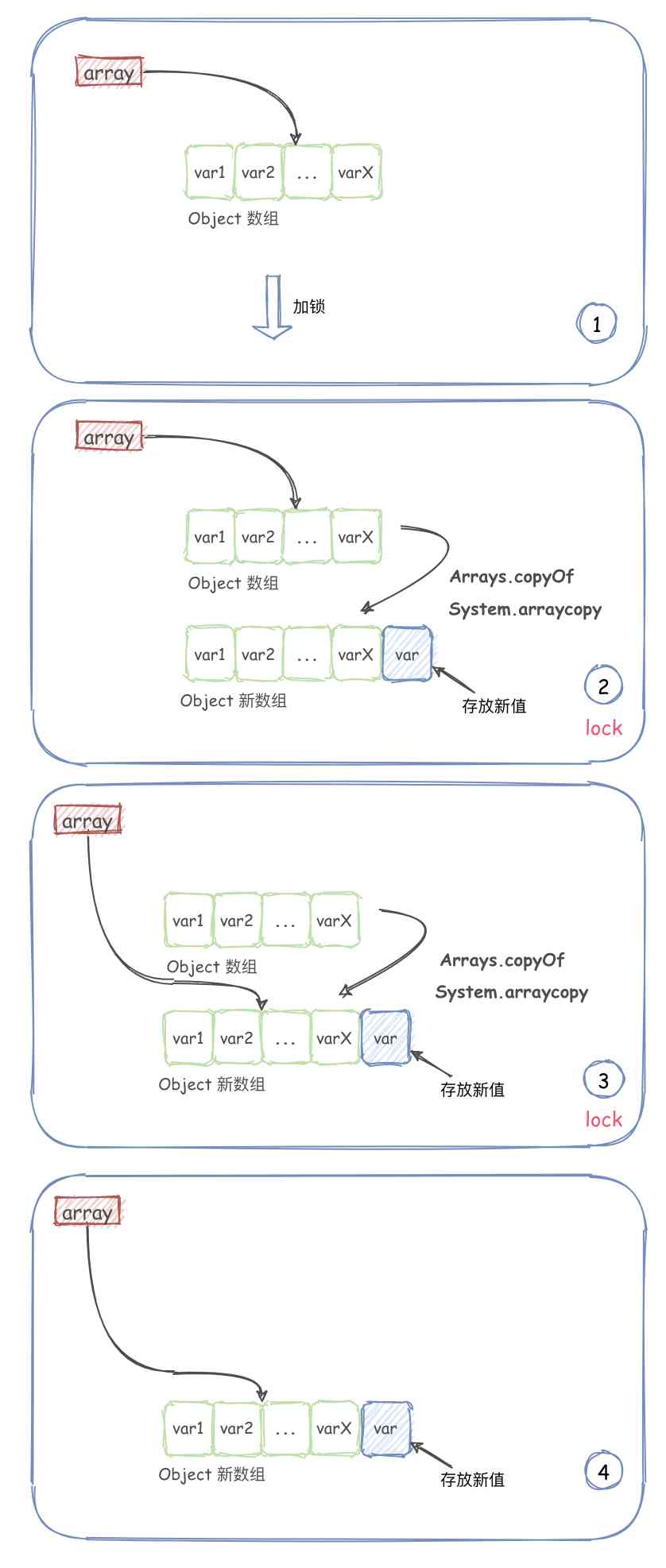

Copy on write collection -- copyonwritearraylist

Platform in architecture

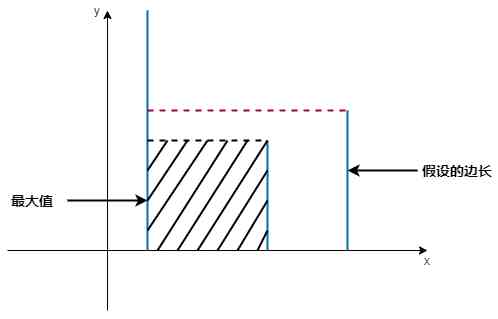

Leetcode-11: container with the most water

Introduction to nmon

2. Introduction to computer hardware

操作系统之bios

随机推荐

Teacher Liang's small class

Several rolling captions based on LabVIEW

CSP-S 2020 游记

Linked blocking queue based on linked list

salesforce零基础学习(九十八)Salesforce Connect & External Object

APP 莫名崩溃,开始以为是 Header 中 name 大小写的锅,最后发现原来是容器的错!

首次开通csdn,这篇文章送给过去的自己和正在发生的你

leetcode之反转字符串中的元音字母

This program cannot be started because msvcp120.dll is missing from your computer. Try to install the program to fix the problem

《MFC dialog中加入OpenGL窗体》

Introduction to nmon

几行代码轻松实现跨系统传递 traceId,再也不用担心对不上日志了!

Bifrost 之 文件队列(一)

Depth first search and breadth first search

2.计算机硬件简介

c++11-17 模板核心知识(二)—— 类模板

Pipedrive如何在每天部署50+次的情况下支持质量发布?

老大问我:“建表为啥还设置个自增 id ?用流水号当主键不正好么?”

Apache Iceberg 中三种操作表的方式

使用递增计数器的线程同步工具 —— 信号量,它的原理是什么样子的?