There is a very common branch of dynamic programming —— State compression dynamic planning , Many people are afraid of state compression , But it's not that hard , I hope this article can take you to learn this classic application .

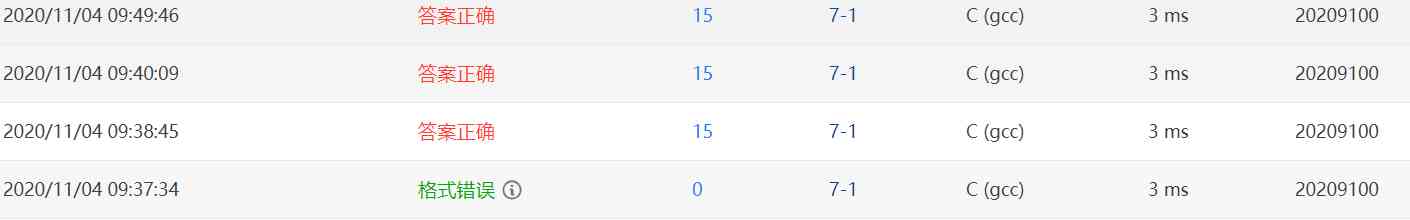

Binary represents state

When explaining the problem of multiple backpacks , We've talked about binary representation to solve multiple knapsacks . Using the properties of binary , Split multiple items into a few items , Transformed into a simple zero one backpack to solve . Today's state compression is also inseparable from binary , But I personally feel that today's binary applications are easier to understand .

Many binary applications are inseparable from aggregate The concept , We all know that in computers , All data is stored in binary form . Generally one \(int\) Plastic surgery is 4 Bytes , That is to say 32 position bit, We go through this 32 position bit On 0 and 1 How big can a combination of 21 Billion different numbers . If we put this 32 position bit Think of it as a collection , that Each number should correspond to a state of the set , And the states of each number are different .

For example, in the picture above , We listed 5 Binary bits , We set two of them to 1, The rest of the settings are 0. We calculate , You can get 6 This number , that 6 It means (00110) This state . Numbers and states are one-to-one , Because every integer converted to binary is unique .

That is to say, an integer can be converted into a binary number , It can represent a state of a collection , The two correspond one by one . This is very important , It's the basis of all the derivation .

State shift

The binary representation of an integer can represent the state of a binary set , Since it is a state, it can be transferred . On this basis , We can come to another very important conclusion —— We can use the addition and subtraction of integers to represent the transition between states .

Let's use the example just now , In the picture above, we list 5 Binary bits , Suppose we use this 5 Binary bits indicate 5 A little ball , The numbers of these balls are 0 To 4. thus , Just now 6 It can be considered as taking 1 Number and 2 The state of two little balls .

If we take it again at this time 3 Little ball No , So the state of the set changes , We use a picture to show :

The pen of the fans in the picture above represents the decision-making , For example, we took 3 Number one is a decision , Under the influence of this decision , The state of the collection has shifted . The number represented by the transferred set is 14, It's made up of previous sets 6 Plus the changes brought about by the transfer , That's what you get . It just means taking 3 The decision of the number one ball , So we're going to chain up the whole process .

To sum up , We use binary 0 and 1 Represents the state of a binary set . The state in which an article exists or does not exist . Because of binary 0 and 1 Can be transformed into a \(int\) Integers , In other words, we use integers to represent the state of a set . thus , We can The addition and subtraction of integers is used to represent the change of set state .

This is the essence of state compression , So called compression , In fact, it means that a set is compressed into an integer , Because integers can be used as subscripts for arrays , This operation will facilitate our coding .

There are a lot of tricks about bit operations , Link to the original text :Here

Travel agent problem

After understanding the meaning of state compression , Let's look at a classic example , That is to say, the problem of famous travel agents .

The background of the traveling salesman problem is interesting , It is said that there is a businessman who wants to Travel all over And Trade . There are a number of One way passage Connected to a , The merchant set out from one place , I want to take the shortest journey around all the areas , What's the shortest distance for a tour ? In this question , Let's assume that the businessman from 0 Set out , Finally, I still return to my position 0.

Let's take a look at the picture below to get a sense of :

Let's say that our businessmen from 0 Set out , to want to Return to... After a week's tour 0, So what's the shortest distance it needs to go through ?

This picture is still relatively simple , If in In extreme cases, there is a connection between all points When , For every point , The next position it can choose is \(n-1\) Kind of . Then there are a total of alternative routes \(n!\) Kind of , This is a very big value , Obviously we can't accept . That's why we say that the problem of traveling agents is a problem of \(NP-Hard\) problem .

NP problem

Now that we're here NP problem [1], Talk to you briefly NP The definition of the problem .

Many beginners of algorithms are very confused about these concepts , It's true , These concepts all sound similar , It's easy to get dizzy . Let's start with the simplest , First of all P problem .

P The problem can be regarded as a solved problem , The definition of this solution is that it can be done In the time complexity of polynomials solve . So called polynomials , That is to say , there k It's a constant . There are many functions opposite to polynomials , For example, exponential function 、 Factorials and so on .

\(NP\) The problem is not P The opposite of the problem , there N It can't be understood as No, It's like \(noSQL\) It's not \(SQL\) It means the same thing .\(NP\) The problem is that you can The problem of verifying solutions in polynomials .

For example, given a sequence of sorts, we can judge whether it is ordered or not , It's very simple , We just need to traverse it . Another example is factorization of large integers , It's hard for us to do factorization , But let's judge whether the solution of factorization is correct or not is much simpler , We can just multiply them and compare them with the original formula .

obviously all P The problem is NP problem , Now that we can find solutions in polynomials , So we can also verify whether the solution is correct in polynomials . But is the reverse true , The problem of whether the solution can be verified in polynomial time , It can also be solved in polynomial time by some algorithm ? We haven't thought of algorithm yet , Or the solution doesn't exist at the beginning ?

The above question is famous NP=P The question of whether it is true , The problem is still a mystery , Some people believe in the establishment of , Some people don't believe , It's also considered one of the biggest problems of the 21st century .

In order to prove the problem , Scientists have come up with another way , It's about making rules for problems . for instance , Like solving equations , It's very simple for us to solve the equation of one variable and one degree , But it's a little more difficult to solve the bivariate equation . If we come up with a way to understand the equation of 2 yuan degree , So it can also be used to solve the equation of one variable and one degree , Because we just need to make another unknown equal to 0 It's a one-dimensional equation .

Empathy , We can also put NP Problems are transformed , Make it more difficult , Increasing to the limit becomes an ultimate problem . Because the ultimate question is all NP The problem translates into , As long as we come up with algorithms to solve the ultimate problem , that , be-all NP All the problems are solved . For example, if we come up with an idea to understand N The algorithm of the meta equation , Then all the problems of solving the equations are solved . The problem after this transformation is called NP Problem completely , It's also called NPC problem .

Let's take a look at a classic NPC problem , That is, the logic circuit problem .

Here is a logic circuit , Suppose we know that its output is \(True\) , We also know the structure of the circuit , So can we be sure that we can find a combination of inputs , So the final output is \(True\) Do you ?

It's obviously a \(NP\) problem , Because we can directly put the solution into the circuit to calculate , Then we can verify whether the solution is correct , But it's hard to get an answer . After rigorous proof , all NP All problems can be transformed into , That is to say, if we find a solution, we can solve this problem in polynomial , Then we've solved all the problems \(NP\) problem .

Last , One more \(NP-Hard\) problem ,\(NP-Hard\) The problem is that all \(NP\) The problem can be transformed into , however It's not... In itself NP problem , That is to say, we can't judge whether its solution is correct in polynomial time .

For example, the problem of traveling agents mentioned just now is one of \(NP-Hard\) problem , Because even if we give a solution , We also There is no way to quickly determine whether a given solution is correct , You have to go through all the situations before . The complexity of our verification is beyond the scope of polynomials , So it doesn't belong to \(NP\) problem , Than \(NP\) The problem is more difficult , So it's a \(NP-Hard\) problem .

State compression solution

That's it \(NP\) problem , Let's go back to the algorithm itself .

Since we are going to use the idea of dynamic planning to solve this problem , Just Can't get out of state and decision making . As mentioned above, we can use binary to represent the state of a set with an integer , It's easy to think of this state as a state of dynamic planning , But it's not true .

There are no restrictions on the transfer between simple sets , For example, in the previous examples, we have taken 1 Ball and 2 Ball No , You can take the rest of the ball in the back , But the problem with the traveling agent is different , Suppose we've been to 0 and 1 Two places , We are in the position 1, We can't use 2 and 5 The connection between the two places updates the status of , Because we can only from 1 We're on the way out . That is to say, we There are limits to the decisions that can be taken .

So we can't just take the state of the set as the State , To ensure the correct order of movement between locations , We also need to add one dimension , That is, the current position . therefore The real state is the state of the location we've traversed before , Add the current location , The combination of the two .

The state is established , The decision is simple , Where the current location can go and has not been before , All can make up a decision .

We talked about that before , In dynamic planning problems , Complexity is equal to the number of states multiplied by the number of decisions , The number of States is , The number of decisions is n, So the overall complexity is . Although the figure still seems to be exaggerated , But still more than n! Many small .

Let's take an example of , If \(n=10,n!=3628800\),, The difference between the two is more than 30 times . With n The increase of , The gap between the two will be even greater .

Last , Let's implement the following algorithm :

import math

if __name__ == "__main__":

inf = 1 << 31

# Adjacency matrix stores edge weight

d = [[inf for _ in range(10)] for _ in range(10)]

# Test data

edges = [[0, 1, 3], [1, 2, 5], [2, 3, 5], [3, 4, 3], [4, 0, 7], [4, 1, 6], [0, 3, 4], [2, 0, 4]]

for u, v, l in edges:

d[u][v] = l

# Initialize to a value approximately infinite

dp = [[inf for _ in range(5)] for _ in range((1 << 5))]

dp[0][0] = 0

# Traversal state

for s in range(1, (1 << 5)):

for u in range(5):

# Traversal decision

for v in range(5):

# It must be demanded that this point has not been

if (s >> v) & 1 == 0:

continue

dp[s][v] = min(dp[s][v], dp[s - (1 << v)][u] + d[u][v])

print(dp[(1 << 5) - 1][0])

stay ACM In the code style of the competition , We usually use u Indicates the starting point of the edge ,v Indicates the end of the edge . So the first kind of triple loop above is to traverse all States , The next two loops enumerate the start and end points , That's all the sides . We traverse the last moving edge before the current state , That is to say, the current point is v, The previous point is u, So the previous state was \(s-2^v\) , The cost of making a decision is \(d[u][v]\), That is from u To v Distance of .

If you have read the previous article , It will be found that this is an inverse dynamic planning . We enumerate the current state and all sources of the current state , So as to find the optimal solution of the current state . If you are not familiar with this concept , You can check other articles under dynamic planning .

There are two details in this code , The first detail is We didn't do u The legal judgment of , It's possible that we u It's illegal , For example, we only have 2 and 3 Two points , But we enumerate from 4 To 5 The strategy of . This is no problem , Because we started by setting all States to infinity , Only the legal state is not infinite , Because we hope that the smaller the final result, the better , Illegal status will not be used to update .

The second detail is a little more subtle , That's what we set during initialization \(dp[0][0] = 0\) . This means that we started with empty sets , Not from 0 Point start . because 0 The number of states that the point has traversed is 1, Of course, we can also set it to 0 Already visited , from 0 PM , In this case, each point cannot be accessed repeatedly , So in the end we can't go back to 0 Dot , To get the right results we need to add back 0 Point of consumption needed .

The first point is the basis of the second point , If we all judge when enumerating strategies u Is the point legal , Then there is no way to implement this algorithm , because For an empty set , All points are unanswered , It's all illegal , We can't find a visiting u As a starting point for decision making .

It doesn't matter if you don't understand the above , I'll attach a slightly simpler way :

# We from 0 The point has been traversed

dp[1][0] = 0

for s in range(2, (1 << 5)):

for u in range(5):

# Strict restrictions u Must have traversed

if (s >> u) & 1 == 0:

continue

for v in range(5):

if (s >> v) & 1 == 0:

continue

dp[s][v] = min(dp[s][v], dp[s - (1 << v)][u] + d[u][v])

ans = inf

# Finally add back to 0 Distance of points

for i in range(5):

ans = min(ans, dp[(1 << 5) - 1][i] + d[i][0])

print(ans)

In this way , We From the State 1 Start , That is to say, we put 0 The position of the number is taken as the current point , And it's been traversed , So the sign is 1. The problem is that we can't go back to 0 了 , Because a point can only go once , So at the end of the day, we need to find back 0 The optimal path of a point .

\((1 << n) - 1\) The value of is from \(0\) To \(n-1\) All binary bits are \(1\) Value , It means \(n\) All positions have been traversed . Then we traverse all back to \(0\) Point of departure , Find the nearest one . Compared with the above method , This is easier to understand , But write a few more lines of code , But it's easier to understand . I suggest that if it is difficult to understand the first code directly , You can understand the second paragraph first , Then I want to understand why the first code also works .

summary

I don't know how many people have successfully seen this , Dynamic planning is not simple , It's normal to find it difficult to understand the first time you learn . But it's the kind of entry that feels especially difficult before , however Once you think about it, it's a very simple question . And you can see from the amount of code , I used thousands of words to describe the algorithm , It's only a dozen lines .

Dynamic programming algorithms are always like this , The code is not long. , But every line is the essence . At this point , It's really cost-effective .

Okay , Today's article is all about , If you think you've got something , Please feel free to order recommend Well , Your help is very important to me .

Reference resources

State compression techniques : Dimension reduction of dynamic programming