Host memory

In the system by CPU Memory accessed , There are two types : Pageable memory (pageable memory, In general applications, it is used by default ) And page locked memory (page-locked perhaps pinned).

Pageable memory is through the operating system api(malloc(), new()) Allocated memory space ; Page locked memory is never allocated to low-speed virtual memory , Can be guaranteed to exist in physical memory , And can pass through DMA Speed up communication with the device .

For hardware to use DMA, The operating system allows page locking of host memory , And because of performance ,CUDA It includes developers using these operating system tools API. Page locked and mapped to cuda Direct access to locked memory allows for the following :

- Faster transmission performance ;

- Asynchronous replication operations ( The memory copy returns control to the caller before the necessary copy ends ;GPU Copy operation and cpu Parallel execution );

- Mapped lock page memory can be cuda Kernel direct access

Host side lock page memory

Use pinned memory There are many benefits : Can achieve a higher host - Data transmission bandwidth on the device side , If the page locks memory to write-commbined Method allocation , Higher bandwidth ; Some devices support DMA function , Use... While executing kernel functions pinned memory Communication between the host and the device ; On some devices ,pinned memory You can also use zero-copy Function mapping to device address space , from GPU Direct access , In this way, there is no data transfer between main memory and video memory .

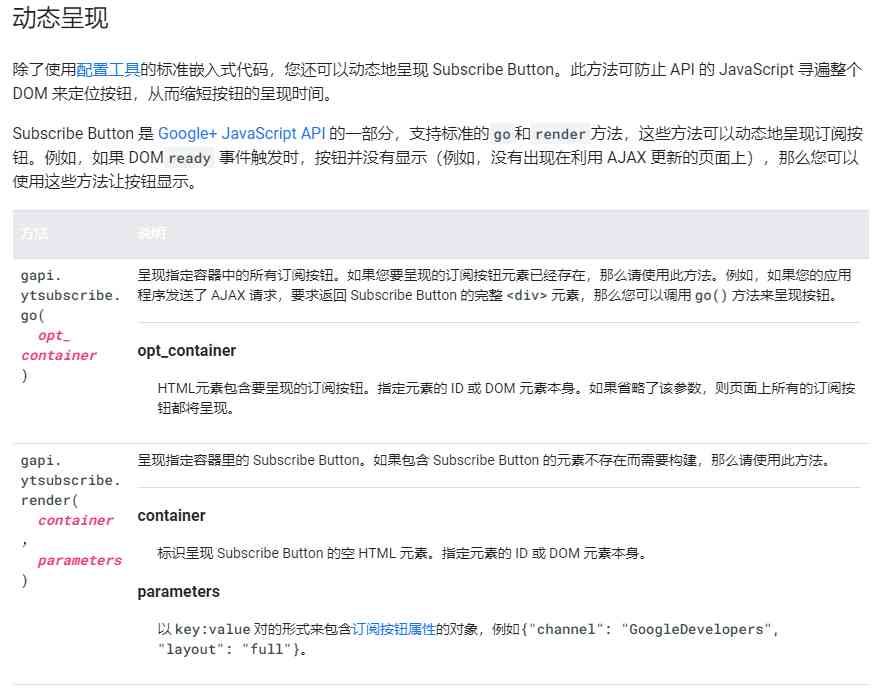

Memory allocation page lock

adopt cudaHostAlloc() and cudaFreeHost() To distribute and release pinned memory.

portable memory/ Shared lock page memory

In the use of cudaHostAlloc When allocating page locked memory , add cudaHostAllocPortable sign , Can make multiple CPU Threads lock memory by sharing a page , So as to achieve cpu Communication between threads . By default ,pinned memory Which is from cpu Thread allocation , It's just that CPU Only threads can access this space . And by portable memory You can make the control different GPU Several CPU Threads share the same block pinned memory, Reduce CPU Data transmission and communication between threads .

write-combined memory/ Write combined with lock page memory

When CPU When processing data in a block of memory , It will cache the data in this memory to CPU Of L1、L2 Cache in , And you need to monitor the data changes in this memory , To ensure cache consistency .

In general , This mechanism can reduce CPU Access to memory , But in “CPU The production data ,GPU Consumption data ” In the model ,CPU Just write this memory data . There is no need to maintain cache consistency at this point , Monitoring memory can degrade performance .

adopt write-combined memory, You don't have to use CPU Of L1、L2 Cache To a piece of pinned memory Buffer the data in the , And will be Cache Resources are left to other programs .

write-combined memory stay PCI-e Will not be transmitted from the bus during transmission CPU The surveillance interrupted , The host side can be - The transmission bandwidth on the device side is increased by as much as 40%.

Calling cudaHostAlloc() When combined with cudaHostAllocWriteCombined sign , You can put a piece of pinned memory Declare as write-combined memory.

Due to write-combined memory There is no cache for access to ,CPU from write-combined memory When reading data, the speed will decrease . Because it's best to just CPU End write only data is stored in write-combined memory in .

mapped memory/ Mapping lock page memory

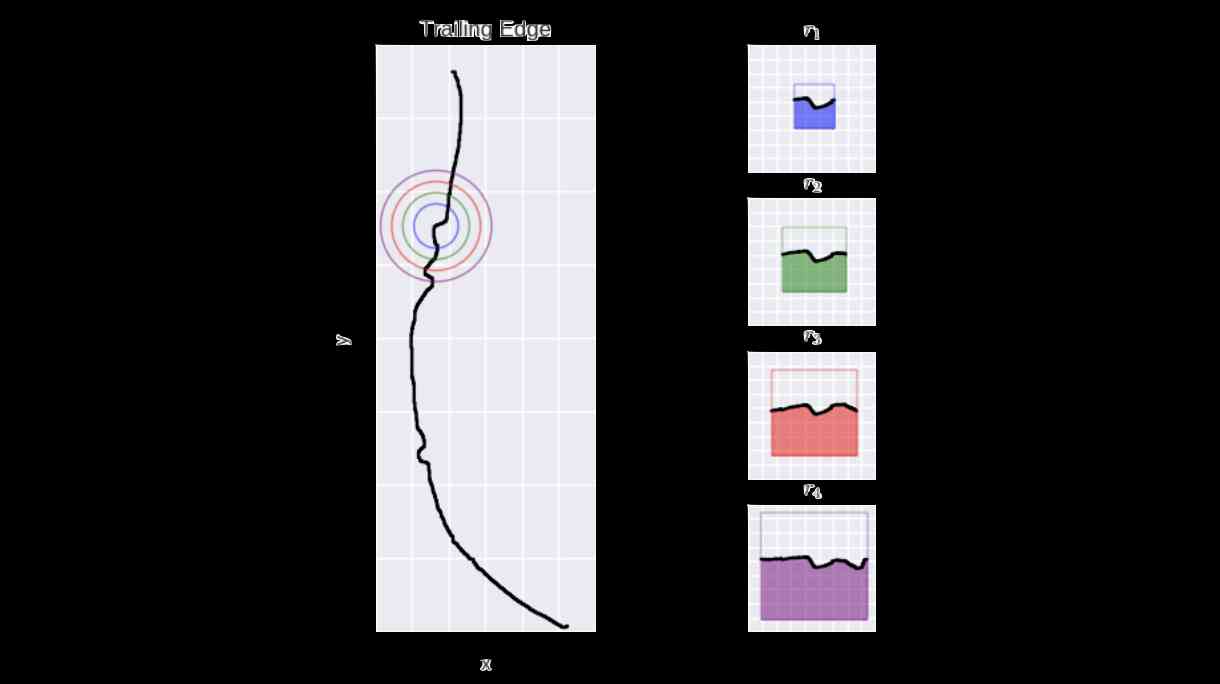

mapped memory Have two addresses : Host address ( Memory address ) And device address ( Video memory address ), Can be in kernel Direct access in mapped memory Data in , Instead of copying data between memory and video memory , namely zero-copy function . If the kernel program only needs to mapped memory Do a little reading and writing , This reduces the time it takes to allocate video memory and copy data .

mapped memory The pointer on the host side can be cudaHostAlloc() Function to obtain ; Its device side pointer can be passed through cudaHostGetDevicePointer() get , from kernel Access page lock memory in , The device side pointer needs to be passed in as a parameter .

Not all devices support memory mapping , adopt cudaGetDeviceProperties() Function return cudaMapHostMemory attribute , You can know whether the current device supports mapped memory. If the device provides support , You can call cudaHostAlloc() When combined with cudaHostAllocMapped sign , take pinned memory Mapping to device address space .

because mapped memory Can be in CPU End sum GPU End access , So it has to be synchronized CPU and GPU Sequence consistency of operations on the same block of memory . Streams and events can be used to prevent post read writing , Read after writing , And mistakes like writing after writing .

Yes mapped memory The visit should satisfy with global memory Same merge access requirements , For best performance .

Be careful :

- In execution cuda Before the operation , First call

cudaSetDeviceFlags()( AddcudaDeviceMapHostsign ) Lock page memory mapping . otherwise , callcudaHostGetDevicePointer()The function returns an error . - A block of multiple host side thread operations portable memory It's also mapped memory when , Every host thread must call cudaHostGetDevicePointer() Get this piece of pinned memory Device end pointer of . here , In this block, there is a device side pointer in each host thread .

Register lock page memory

Lock page memory registration separates memory allocation from page locking and host memory mapping . You can operate on an assigned virtual address range , And page lock it . then , Map it to GPU, just as cudaHostAlloc() You can map memory to cuda Address space may become shareable ( all GPU Accessible ).

function cuMemHostRegister()/cudaHostRegister() and cuMemHostUnregister()/cudaHostUnregister() Register the host memory as lock page memory and remove the registration function respectively .

The registered memory range must be page aligned ; Whether it's base address or size , Must be divisible by operating system page size .

Be careful :

When UVA( The same virtual addressing ) It works , All lock page memory allocation is mapped and sharable . The exception to this rule is write combined memory and registered memory . For both , The device pointer may be different from the host pointer , The application needs to use cudaHostGetDevicePointer()/cuMemHostGetDevicePointer() Query device pointer .