当前位置:网站首页>Flink deployment mode and runtime architecture (session mode, single job mode, application mode, jobmanager, taskmanager, yarn mode deployment and runtime architecture)

Flink deployment mode and runtime architecture (session mode, single job mode, application mode, jobmanager, taskmanager, yarn mode deployment and runtime architecture)

2022-06-11 12:10:00 【But don't ask about your future】

List of articles

1. Deployment mode ( Abstract concept )

1.1 Conversational mode (Session Mode)

Conversational mode , You need to start a cluster first , Keep a conversation , In this session, submit the job through the client . All resources have been determined when the cluster starts , Therefore, all submitted jobs compete for resources in the cluster .

The life cycle of the cluster is beyond the job , Release resources when the job is over , The cluster is still running . Of course : Because resources are shared , So there are not enough resources , Submitting a new job will fail . in addition , The same TaskManager There may be a lot of jobs running on , If one of them fails, it leads to TaskManager Downtime , Then all homework will be affected

Conversational mode Suitable for single small scale 、 Large number of jobs with short execution time

1.2 Single operation mode (Per-Job Mode)

The single operation mode is strictly one-to-one , The cluster is only born for this job . Run the application by the client , Then start the cluster , The assignment is submitted to JobManager, And then distribute it to TaskManager perform . When the job is finished , The cluster will shut down , All resources will be released . Each assignment has its own JobManager management , Occupy exclusive resources , Even in case of failure , its TaskManager Downtime will not affect other jobs .

The single operation mode is more stable in the production environment , yes Preferred mode for practical application

notes :

Flink Single job mode cannot be run directly by itself , Some resource management frameworks are needed to start the cluster , Such as YARN

1.3 Application mode (Application Mode)

The application code executes on the client , Then the client submits it to JobManager, The client needs to occupy a large amount of network bandwidth to download dependencies and send binary data to JobManager; And the same client is used to submit the job , It will increase the resource consumption of the node where the client is located .

The solution is , Submit the application directly to JobManger Up operation . Launch a separate for each submitted application JobManager, That is to create a cluster . This JobManager Only exist to execute this application , After execution JobManager It's closed

1.4 summary

In conversation mode , The life cycle of the cluster is independent of the life cycle of any job running on the cluster , And all jobs submitted share resources . The single job mode creates a cluster for each submitted job , It brings better resource isolation , At this time, the life cycle of the cluster is bound to the life cycle of the job . Last , Application mode creates a session cluster for each application , stay JobManager Call the application directly on main() Method

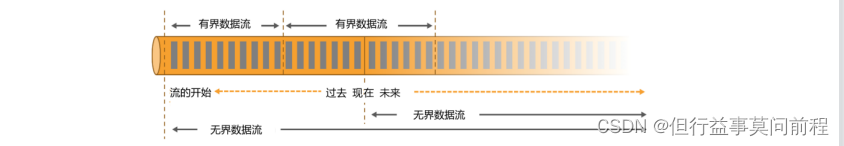

2. System architecture

2.1 The whole structure

Flink In the runtime architecture of , The most important thing is the two components : Job manager (JobManger) And task manager (TaskManager). For a job submitted for execution ,JobManager In the true sense “ managers ”(Master), Responsible for managing and scheduling , Therefore, without considering high availability, there can only be one ; and TaskManager yes “ Worker ”(Worker、Slave), Responsible for performing tasks and processing data , So there can be one or more .

notes :

attached mode (default): The yarn-session.sh client submits the Flink cluster to YARN, but the client keeps running, tracking the state of the cluster. If the cluster fails, the client will show the error. If the client gets terminated, it will signal the cluster to shut down as well.

detached mode (-d or --detached): The yarn-session.sh client submits the Flink cluster to YARN, then the client returns. Another invocation of the client, or YARN tools is needed to stop the Flink cluster.

The client is not part of the processing system , It is only responsible for job submission . say concretely , It's the calling program main Method , Convert the code to “ Data flow diagram ”(Dataflow Graph), And finally generate the job diagram (JobGraph), Send it to JobManager. After submission , The execution of the task has nothing to do with the client ; You can choose to disconnect from JobManager The connection of , You can also keep connected . When you command to submit a job , Plus -d Parameters , It means separation mode (detached mode), That is, disconnect

2.1.1 Job manager (JobManager)

JobManger contain 3 Different components :

1. JobMaster

JobMaster yes JobManager The core component of , Responsible for handling individual operations (Job). therefore JobMaster And concrete Job It's one-to-one , Multiple Job It can run in one at the same time Flink In the cluster , Every Job All have their own JobMaster. When the job is submitted ,JobMaster The application to be executed will be received first , Include :Jar package , Data flow diagram (dataflow graph), And working drawings (JobGraph).JobMaster Will be able to JobGraph Convert to a physical level data flow diagram , This picture is called “ Execution diagram ”(ExecutionGraph), It contains all tasks that can be executed concurrently . JobMaster To the explorer (ResourceManager) Request , Apply for the necessary resources to perform the task . Once it gets enough resources , The execution diagrams are distributed to the... That actually run them TaskManager On . And in the process of running ,JobMaster Will be responsible for all operations that need central coordination , Check points, for example (checkpoints) The coordination of

2. Explorer (ResourceManager)

ResourceManager Mainly responsible for resource allocation and management , stay Flink Only one in the cluster . So-called “ resources ”, Mainly refers to TaskManager Task slot (task slots). The task slot is Flink The provisioning unit in the cluster , Contains a set of... That the machine uses to perform calculations CPU And memory resources . Every task (Task) All need to be assigned to a slot On the implementation . Pay attention to Flink Built in ResourceManager And other resource management platforms ( such as YARN) Of ResourceManager Differentiate .Flink Of ResourceManager, There are different specific implementations for different environments and resource management platforms . stay Standalone When the deployment , because TaskManager It started separately ( No, Per-Job Pattern ), therefore ResourceManager Only available TaskManager Task slot , You cannot start a new server alone TaskManager. When there is a resource management platform , Not subject to this restriction . When a new job requests resources ,ResourceManager There will be free slots TaskManager Assigned to JobMaster. If ResourceManager Not enough task slots , It can also initiate a session to the resource provider platform , Request to provide startup TaskManager Process container . in addition ,ResourceManager Also responsible for stopping idle TaskManager, Free computing resources

3. The dispenser (Dispatcher)

Dispatcher Mainly responsible for providing a REST Interface , Used to submit applications , And be responsible for starting a new job for each newly submitted job JobMaster Components .Dispatcher It will also activate a Web UI, Information used to easily display and monitor job execution .Dispatcher Not required in the architecture , It may be ignored in different deployment modes .

2.1.2 Task manager (TaskManager)

TaskManager yes Flink The process of work in , The specific calculation of data flow is done by it , So it's also called “Worker”.Flink There must be at least one in the cluster TaskManager; Of course, due to the consideration of Distributed Computing , There are usually more than one TaskManager function , every last TaskManager Both contain a certain number of task slots (task slots).Slot Is the smallest unit of resource scheduling ,slot The number of is limited TaskManager Number of tasks that can be processed in parallel . After starting ,TaskManager Will register it with the Explorer slots; After receiving the instruction from the resource manager ,TaskManager One or more slots will be provided to JobMaster call ,JobMaster You can assign tasks to perform . In the process of execution ,TaskManager Can buffer data , You can also run the same application with other TaskManager Exchange data

2.2 High level abstract perspective

(1) In general , By the client (App) Provided through the distributor REST Interface , Submit the assignment to JobManager

(2) Started by distributor JobMaster, And put the homework ( contain JobGraph) Submit to JobMaster

(3)JobMaster take JobGraph Resolve to executable ExecutionGraph, Get the amount of resources needed , Then request resources from the resource manager (slots).

(4) The resource manager determines whether there are enough available resources ; without , Start a new TaskManager

(5)TaskManager After starting , towards ResourceManager Register your own available task slots (slots)

(6) Explorer notification TaskManager Provide... For new jobs slots

(7)TaskManager Connect to the corresponding JobMaster, Provide slots

(8)JobMaster Distribute the tasks to be performed to TaskManager

(9)TaskManager Perform tasks , Data can be exchanged with each other

3. Independent mode (Standalone)

3.1 Concept

Independent mode (Standalone) It's deployment Flink The most basic and simplest way : All that is needed Flink Components , Are just one running on the operating system JVM process .

Independent mode does not depend on any external resource management platform ; If resources are insufficient , Or something goes wrong , There is no guarantee of automatic expansion or reallocation of resources , It has to be handled manually . Therefore, the independent mode is generally only used in the scenario with very few development tests or jobs .

3.2 Session mode deployment 、 Single job mode deployment ( I won't support it )、 Application mode deployment

flink 1.15 Independent mode deployment

4. YARN Pattern deployment and running architecture

4.1 Concept

YARN The process of deployment is : The client puts Flink The application is submitted to Yarn Of ResourceManager, Yarn Of ResourceManager Will send to Yarn Of NodeManager Apply for containers . On these containers ,Flink Will deploy JobManager and TaskManager Example , To start the cluster .Flink Will run according to JobManger Required for homework on Slot Quantity dynamic allocation TaskManager resources

yarn Deployment mode

Check hadoop Environmental Science

echo $HADOOP_CLASSPATH

4.2 Conversational mode

4.2.1 Deploy

Start cluster :

(1) start-up hadoop colony (HDFS, YARN).

(2) Execute script commands to YARN Cluster application resources , To start a YARN conversation , start-up Flink colony

bin/yarn-session.sh -d -nm cz -qu hello

Can be interpreted with parameters :

-d: Separation mode ,Flink YARN The client runs in the background

-jm(–jobManagerMemory): To configure JobManager Memory required , Default unit MB

-nm(–name): Configure in YARN UI The task name displayed on the interface

-qu(–queue): Appoint YARN Team name

-tm(–taskManager): Configure each TaskManager Memory used

notes :

Flink1.11.0 Version no longer uses -n Parameters and -s Parameters are specified separately TaskManager Quantity and sum slot Number ,YARN It will be dynamically allocated according to the demand TaskManager and slot

YARN Session After startup, a will be given web UI Address and a YARN application ID

Above initiated yarn Pattern flink colony :

web UI by http://hadoop104:42947

Stop the cluster echo "stop" | ./bin/yarn-session.sh -id application_1654053140138_0001

Force to stop yarn application -kill application_1654053140138_0001

Submit the assignment :

(1) adopt Web UI Submit the assignment

(2) Submit the job through the command line

bin/flink run [OPTIONS] <jar-file> <arguments>

4.2.2 Runtime schema

(1) Client pass REST Interface , Submit the job to the distributor

(2) The distributor starts JobMaster, And put the homework ( contain JobGraph) Submit to JobMaster

(3)JobMaster Request resources from the resource manager (slots)

(4) Explorer to YARN Resource manager request for container resources

(5)YARN Start a new TaskManager Containers

(6)TaskManager After starting , towards Flink The resource manager registers its own available task slots

(7) Explorer notification TaskManager Provide... For new jobs slots

(8)TaskManager Connect to the corresponding JobMaster, Provide slots

(9)JobMaster Distribute the tasks to be performed to TaskManager, Perform tasks

4.3 Single operation mode

4.3.1 Deploy

stay YARN Environment , With an external platform for resource scheduling , So you can go directly to YARN Submit a separate assignment , To start a Flink colony

(1) Execute the command and submit the job

bin/flink run -d -t yarn-per-job -creview.part2.StreamWordCount libexec/FlinkTutorial-1.0-SNAPSHOT-jar-with-dependencies.jar

(2) stay YARN Of ResourceManager View the implementation status in the interface

Click on Tracking URL

(3) Use the command line to view or cancel jobs

bin/flink list -t yarn-per-job -Dyarn.application.id=application_XXXX_YY

bin/flink cancel -t yarn-per-job -Dyarn.application.id=application_XXXX_YY <jobId>

4.3.2 Runtime schema

(1) The client submits the job to YARN Explorer for , In this step, we will also Flink Of Jar Upload packages and configurations to HDFS, For subsequent startup Flink Containers for related components

(2)YARN Resource manager allocation for Container resources , start-up Flink JobManager, And submit the assignment to JobMaster. Omit here Dispatcher Components

(3)JobMaster Request resources from the resource manager (slots)

(4) Explorer to YARN Resource manager request for container resources

(5)YARN Start a new TaskManager Containers

(6)TaskManager After starting , towards Flink The resource manager registers its own available task slots

(7) Explorer notification TaskManager Provide... For new jobs slots

(8)TaskManager Connect to the corresponding JobMaster, Provide slots

(9)JobMaster Distribute the tasks to be performed to TaskManager, Perform tasks

4.4 Application mode

4.4.1 Deploy

(1) Execute the command and submit the job

bin/flink run-application -t yarn-application -c review.part2.StreamWordCount libexec/FlinkTutorial-1.0-SNAPSHOT-jar-with-dependencies.jar

(2) View or cancel jobs on the command line

bin/flink list -t yarn-application -Dyarn.application.id=application_XXXX_YY

$ bin/flink cancel -t yarn-application

-Dyarn.application.id=application_XXXX_YY <jobId>

(3) It can be done by yarn.provided.lib.dirs The configuration option specifies the location , take jar Upload to remote

4.4.2 Runtime schema

The application mode is very similar to the submission process of single job mode , Just the initial submission to YARN The of the resource manager is no longer a specific job , It's the whole application . An application may contain multiple jobs , These assignments will be in Flink Start the corresponding in the cluster JobMaster

4.5 High availability

Standalone In the pattern , Start multiple... At the same time JobManager, One for leader, For the other standby, When leader Hang up , One of the others will become leader. and YARN High availability is to start only one Jobmanager, When this Jobmanager After hanging up , YARN Will start another , So it's actually used YARN The number of retries to achieve high availability

(1) stay yarn-site.xml Middle configuration

<property>

<name>yarn.resourcemanager.am.max-attempts</name>

<value>4</value>

<description>

The maximum number of application master execution attempts.

</description>

</property>

(2) stay flink-conf.yaml Middle configuration .

yarn.application-attempts: 3

high-availability: zookeeper

high-availability.storageDir: hdfs Catalog

high-availability.zookeeper.quorum: hadoop102:2181,hadoop103:2181,hadoop104:2181

high-availability.zookeeper.path.root: /flink-yarn

(3) start-up yarn-session

(4) Kill JobManager, Check the resurrection

Be careful :

yarn-site.xml The configuration in is JobManager Maximum number of restarts , flink-conf.xml The number of times in should be less than this value

ps: Reference books pdf:

link : Baidu SkyDrive

Extraction code :1256

边栏推荐

- You call this shit MQ?

- arguments. Callee implement function recursive call

- CVPR 2022 | 文本引导的实体级别图像操作ManiTrans

- Objectinputstream read file object objectoutputstream write file object

- Zero after factorial (C language)

- PS does not display text cursor, text box, and does not highlight after selection

- Qt中radioButton使用

- Software project management 7.1 Basic concept of project schedule

- Elk - x-pack set user password

- Apple mobileone: the mobile terminal only needs 1ms of high-performance backbone

猜你喜欢

阶乘后的零(C语言)

YARN 切换ResourceManager(Failed to connect to server:8032 retries get failed due to exceeded maximum)

flink Spark 和 Flink对比

纯数据业务的机器打电话进来时回落到了2G/3G

Use cache to reduce network requests

(推荐)splunk 多少数量search head 才合适

Intl.numberformat set number format

Elk - x-pack set user password

解决swagger文档接口404的问题

flink 物理分区( 随机分区、 轮询分区、重缩放分区、 广播、 全局分区、自定义分区 )

随机推荐

JS to realize the rotation chart (riding light). Pictures can be switched left and right. Moving the mouse will stop the rotation

Hamiltonian graph

Full Permutation (recursion, backtracking)

Pan domain SSL certificate, sectigo cheap wildcard certificate popularization plan

The wonderful use of XOR (C language)

(解决)Splunk 之 kv-store down 问题

Use cache to reduce network requests

flink 时间语义、水位线(Watermark)、生成水位线、水位线的传递

Where is it safer to open an account for soda ash futures? How is the deposit calculated?

微信授权获取手机号码

JMeter 学习心得

Android 11+ configuring sqlserver2014+

Command symbols commonly used by programmers

2020-07 study notes sorting

[JUC supplementary] immutable object, shared meta mode, final principle

采用快慢指针法来解决有关数组的问题(C语言)

Sulley fuzzer learning

[Chapter II Relationship between genes and chromosomes] summary of biological knowledge - Biology in grade one of senior high school

01_ Description object_ Class diagram

吊打面试官,涨姿势