当前位置:网站首页>(解决)Splunk 之 kv-store down 问题

(解决)Splunk 之 kv-store down 问题

2022-06-11 12:01:00 【shenghuiping2001】

先说问题:in seach head server:

splunk show kvstore-status, 显示有search head node 是down 和recovering:

abc.com:8191

configVersion : 95

hostAndPort : bbb.com:8191

lastHeartbeat : Mon May 23 17:17:15 2022

lastHeartbeatRecv : Mon May 23 17:17:16 2022

lastHeartbeatRecvSec : 1653351436.052

lastHeartbeatSec : 1653351435.069

optimeDate : Fri Dec 10 17:41:20 2021

optimeDateSec : 1639186880

pingMs : 25

replicationStatus : Recovering

uptime : 1270601

aaa.com:8191

configVersion : -1

hostAndPort : aaa.com:8191

lastHeartbeat : Mon May 23 17:17:15 2022

lastHeartbeatRecv : ZERO_TIME

lastHeartbeatRecvSec : 0

lastHeartbeatSec : 1653351435.48

optimeDate : ZERO_TIME

optimeDateSec : 0

pingMs : 0

replicationStatus : Down

uptime : 0

说明一下: 下面一台机器:aaa.com, 是属于另外一个集群,后来加到现在的search head 集群:检查一下心跳:

2022-05-24T00:26:12.756Z I REPL_HB [replexec-9724] Error in heartbeat (requestId: 1148968128) to ccc.com:8191, response status: InvalidReplicaSetConfig: replica set IDs do not

参考文档:

Resync the KV store - Splunk Documentation

When we went to one of the above SH members and ran kvstore command, it only showed only 3 members indicating that these 3 members belonged to the previous SH cluster.

These 3 SH members were part of other SH cluster and it seemed that the following command were not performed when they were removed from then SH cluster.

splunk clean kvstore --cluster (注意: only down 的node 才执行这个操作)

首先: we stopped all these 3 SH mebers showing "Down" status and then performed the following step on the one SH member(bbb.com) and the SH member was joined to the current cluster sucessfully.

(1) Stop the search head that has the stale KV store member.

(2) Run the command splunk clean kvstore --cluster <<<==== This step is the additional step for search heads showing "Down" status

(3) Run the command splunk clean kvstore --local

(4) Restart the search head. This triggers the initial synchronization from other KV store members.

(5) Run the command splunk show kvstore-status to verify synchronization.

接下来的操作:

(1) Please perform the above same step for other 2 SH members(aaa.com and ccc.com)

(2) And perform the following steps for the SH members showing "Recovering" status.

(1) Stop the search head that has the stale KV store member.

(2) Run the command splunk clean kvstore --local

(3) Restart the search head. This triggers the initial synchronization from other KV store members.

(4) Run the command splunk show kvstore-status to verify synchronization.

最后检查一下:

splunk show kvstore-status, 发现all is fine.

去/opt/spunk/var/log/splunk/ 下面看log: mong.log, 这个就是kv-store 的log

参考文档:1: clean kv-store:

Remove a cluster member - Splunk Documentation

2: backup kv-store: Back up and restore KV store - Splunk Documentation

边栏推荐

猜你喜欢

2022 | framework for Android interview -- Analysis of the core principles of binder, handler, WMS and AMS!

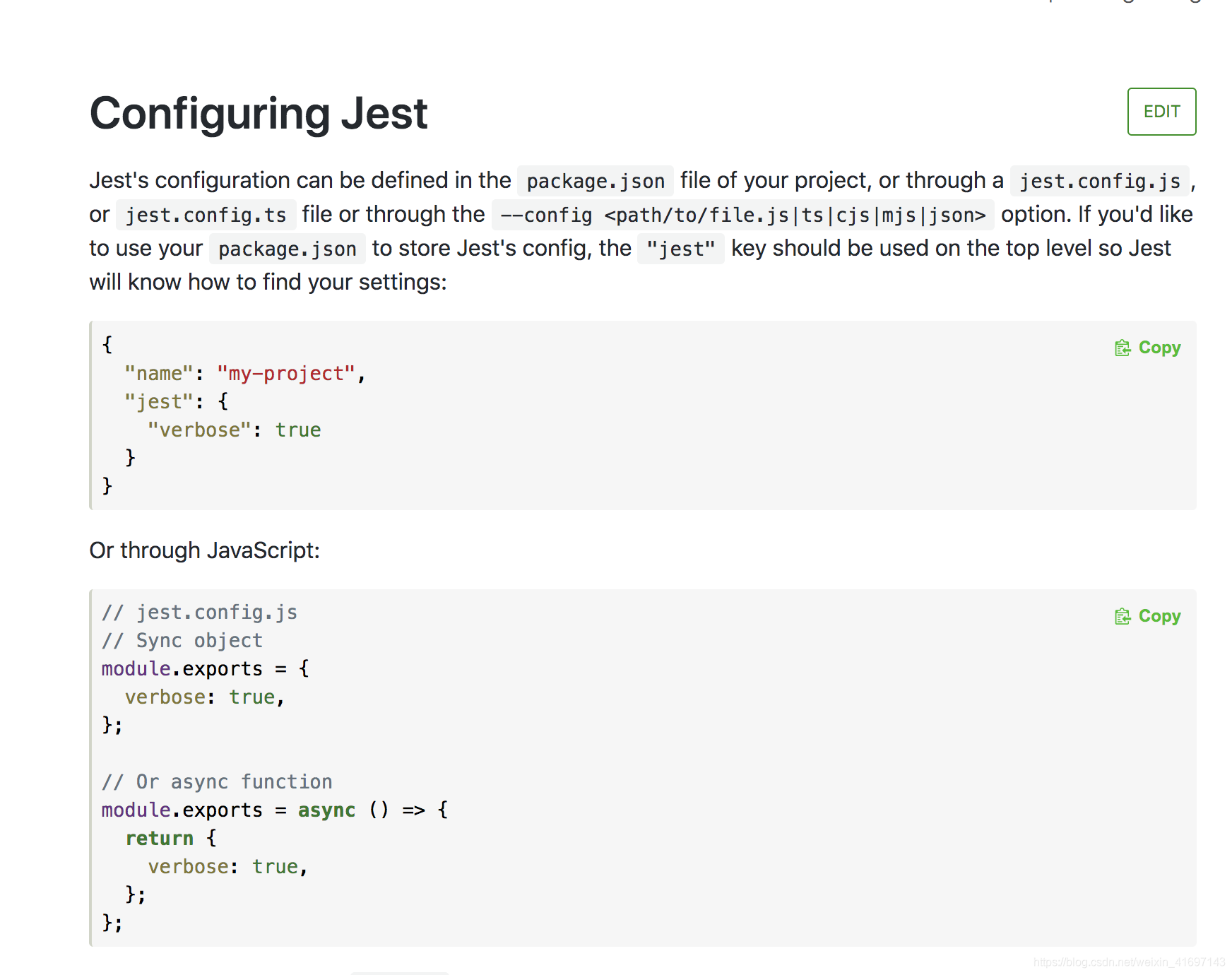

JEST 单元测试说明 config.json

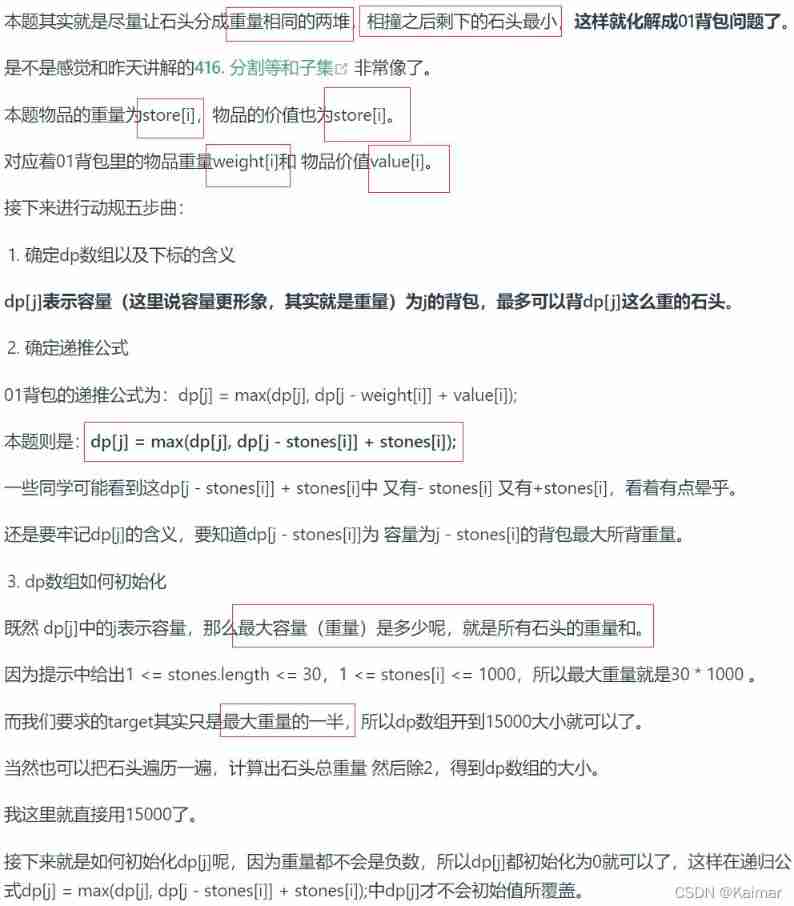

【LeetCode】1049. Weight of the last stone II (wrong question 2)

Web development model selection, who graduated from web development

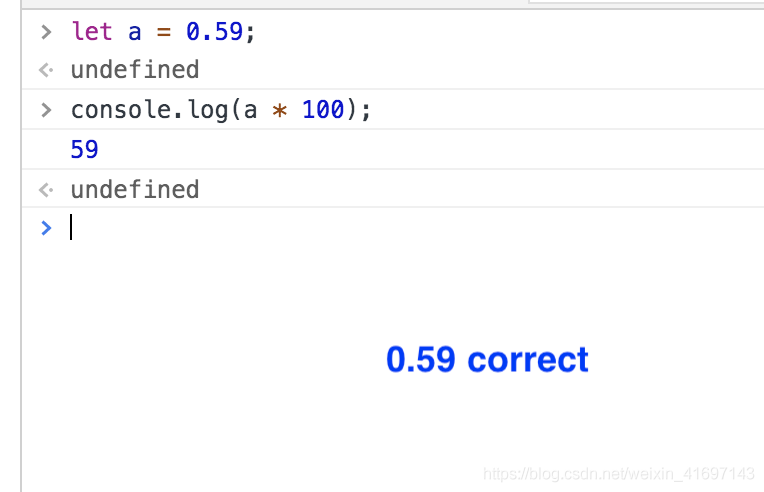

JS 加法乘法错误解决 number-precision

mysql 导入宝塔中数据库data为0000-00-00,enum为null出错

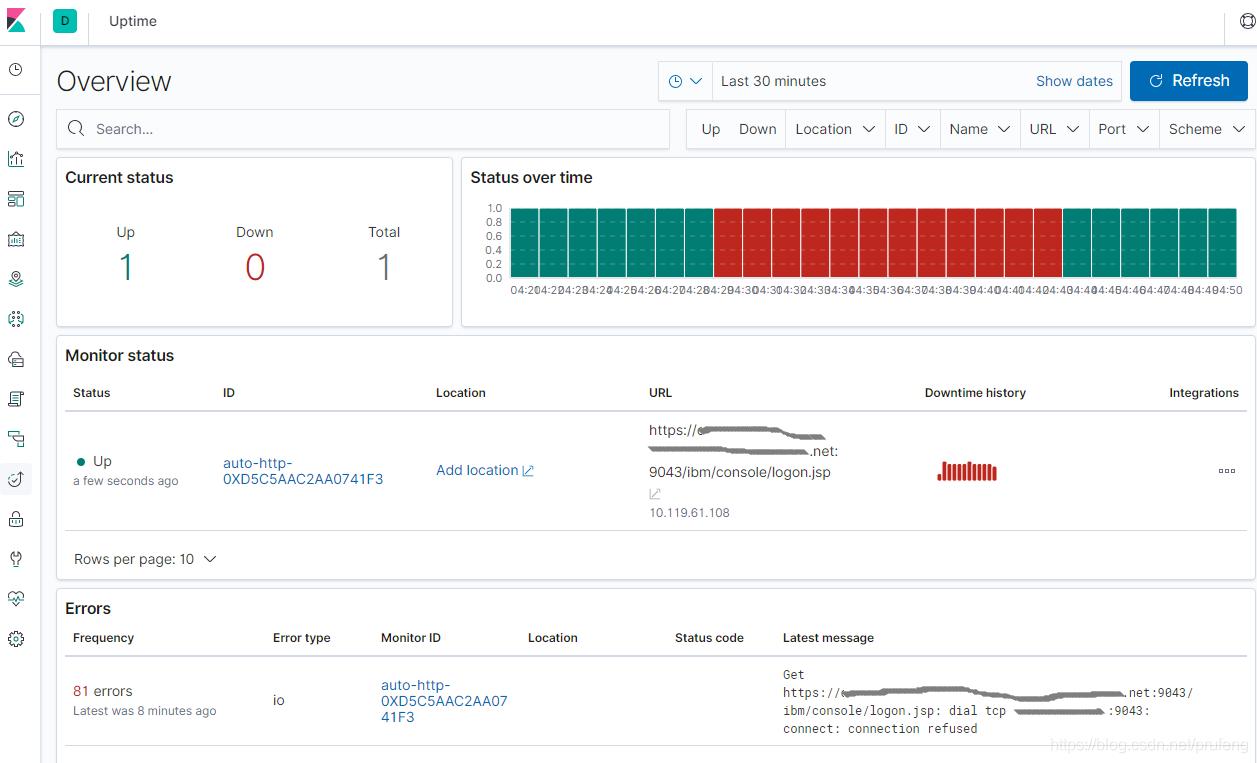

Elk - hearthbeat implements service monitoring

纯数据业务的机器打电话进来时回落到了2G/3G

Iframe value transfer

![[file upload vulnerability 05] server suffix detection and bypass experiment (based on upload-labs-3 shooting range)](/img/f5/52bc5e01bb0607b6ecab828fb70c93.jpg)

[file upload vulnerability 05] server suffix detection and bypass experiment (based on upload-labs-3 shooting range)

随机推荐

微信web开发者,如何学习web开发

Objectinputstream read file object objectoutputstream write file object

Interview experience of Xiaomi Android development post~

安全工程师发现PS主机重大漏洞 用光盘能在系统中执行任意代码

Dominating set, independent set, covering set

centos安装mysql5.7

JS to realize the rotation chart (riding light). Pictures can be switched left and right. Moving the mouse will stop the rotation

纯数据业务的机器打电话进来时回落到了2G/3G

C# 将OFD转为PDF

Use of Chinese input method input event composition

Live app source code, and the status bar and navigation bar are set to transparent status

How should ordinary people choose annuity insurance products?

Merge two ordered arrays (C language)

什么是Gerber文件?PCB电路板Gerber文件简介

反射真的很耗时吗,反射 10 万次,耗时多久。

Template engine - thymeleaf

Use of RadioButton in QT

Zhouhongyi's speech at the China Network Security Annual Conference: 360 secure brain builds a data security system

Hang up the interviewer

The role of Gerber file in PCB manufacturing