Gold-medal solution to the Kaggle patent matching competition: post-competition summary

2022-06-25 02:56:00 【To great】

Competition overview

In the patent matching dataset, contestants must judge the similarity of two phrases, an anchor and a target, and output their similarity under a given context as a score in the range 0-1. Our team ID is xlyhq; we ranked 13th on the A leaderboard and 12th on the B leaderboard. Many thanks to my four teammates, @heng zheng, @pythonlan, @leolu1998 and @syzong, for all their hard work. In the end we were lucky enough to win a gold medal.

Our core ideas are similar to those of the other top teams, so here we mainly share how our competition went, the concrete results of the related experiments, and some interesting attempts.

Text processing

The main fields in the dataset are anchor, target and context; in addition, extra text can be concatenated to the input. During the competition we mainly tried the following concatenation schemes:

- v1: test['anchor'] + '[SEP]' + test['target'] + '[SEP]' + test['context_text']

- v2: test['anchor'] + '[SEP]' + test['target'] + '[SEP]' + test['context'] + '[SEP]' + test['context_text'], which additionally concatenates the context code itself, such as A47

- v3: test['text'] = test['anchor'] + '[SEP]' + test['target'] + '[SEP]' + test['context'] + '[SEP]' + test['context_text'], with more text in context_text: the titles of the subclasses under a code such as A47 (for example A47B and A47C) are concatenated as well

import re

import pandas as pd
from tqdm import tqdm

# Top-level CPC section letter -> section title
context_mapping = {
    "A": "Human Necessities",
    "B": "Operations and Transport",
    "C": "Chemistry and Metallurgy",
    "D": "Textiles",
    "E": "Fixed Constructions",
    "F": "Mechanical Engineering",
    "G": "Physics",
    "H": "Electricity",
    "Y": "Emerging Cross-Sectional Technologies",
}

titles = pd.read_csv('./input/cpc-codes/titles.csv')

def process(text):
    # Strip bracketed annotations: (...), {...} and [...]
    return re.sub(u"\\(.*?\\)|\\{.*?}|\\[.*?]", "", text)

def get_context(cpc_code):
    # Keep titles whose code has at most 4 characters and contains cpc_code,
    # e.g. A47 itself plus subclasses such as A47B and A47C
    cpc_data = titles[(titles['code'].map(len) <= 4) & (titles['code'].str.contains(cpc_code))]
    texts = cpc_data['title'].values.tolist()
    texts = [process(text) for text in texts]
    # Prepend the section title, then join everything with ";"
    return ";".join([context_mapping[cpc_code[0]]] + texts)

def get_cpc_texts():
    # train is the competition training DataFrame, loaded elsewhere
    cpc_texts = dict()
    for code in tqdm(train['context'].unique()):
        cpc_texts[code] = get_context(code)
    return cpc_texts

cpc_texts = get_cpc_texts()

This concatenation scheme brings a large improvement, but the text becomes longer: the maximum length has to be set to 300, which slows down training.

- v4: the core concatenation scheme: test['text'] = test['text'] + '[SEP]' + test['target_info']

# Concatenate target info: all targets that share the same anchor and context

test['text'] = test['anchor'] + '[SEP]' + test['target'] + '[SEP]' + test['context_text']

target_info = test.groupby(['anchor', 'context'])['target'].agg(list).reset_index()

target_info['target'] = target_info['target'].apply(lambda x: list(set(x)))

target_info['target_info'] = target_info['target'].apply(lambda x: ', '.join(x))

target_info['target_info'].apply(lambda x: len(x.split(', '))).describe()

del target_info['target']

test=test.merge(target_info,on=['anchor','context'],how='left')

test['text'] = test['text'] + '[SEP]' + test['target_info']

test.head()

This concatenation scheme greatly improved both the CV and LB scores. Comparing v3 and v4 shows that concatenating higher-quality text helps the model: v3 carries a lot of redundant information, whereas v4 adds a lot of key entity-level information.
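To make the v4 input concrete, here is a minimal sketch on a toy DataFrame. The rows and the context_text value are made up for illustration, and sorted() replaces the original list(set(x)) only so that the order of targets is deterministic.

```python
import pandas as pd

# Toy rows (made up for illustration); the real data has many more targets.
test = pd.DataFrame({
    "anchor": ["abatement", "abatement", "abatement"],
    "target": ["noise reduction", "pollution control", "forest region"],
    "context": ["A47", "A47", "A47"],
    "context_text": ["Human Necessities; furniture"] * 3,  # hypothetical text
})

test["text"] = test["anchor"] + "[SEP]" + test["target"] + "[SEP]" + test["context_text"]

# Collect every distinct target that shares the same (anchor, context) pair.
# sorted() is an assumption added here to make the order deterministic.
target_info = (
    test.groupby(["anchor", "context"])["target"]
    .agg(lambda targets: ", ".join(sorted(set(targets))))
    .rename("target_info")
    .reset_index()
)

test = test.merge(target_info, on=["anchor", "context"], how="left")
test["text"] = test["text"] + "[SEP]" + test["target_info"]
print(test.loc[0, "text"])
```

Each row now sees all sibling targets of its anchor within the same context, which is the entity-level information the comparison above refers to.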

Data splitting

During the competition we tried different ways of splitting the data, including:

- StratifiedGroupKFold: with this split the gap between CV and LB is small, and the score is slightly better

- StratifiedKFold: offline CV is relatively high

- Others: KFold and GroupKFold gave poor results

Loss function

The main loss functions we considered are:

- BCE: nn.BCEWithLogitsLoss(reduction="mean")

- MSE: nn.MSELoss()

- Mixture loss: MSECorrLoss

import torch.nn as nn

class CorrLoss(nn.Module):
    """
    Use 1 - correlation coefficient between the output of the network
    and the target as the loss.

    input (o, t):
        o: tensor of size (batch_size, 1), output of the network
        t: tensor of size (batch_size, 1), target value
    output (corr):
        corr: tensor of size (1)
    """
    def __init__(self):
        super(CorrLoss, self).__init__()

    def forward(self, o, t):
        assert(o.size() == t.size())
        # compute z-scores for o and t
        o_m = o.mean(dim = 0)
        o_s = o.std(dim = 0)
        o_z = (o - o_m)/o_s

        t_m = t.mean(dim =0)
        t_s = t.std(dim = 0)
        t_z = (t - t_m)/t_s

        # compute the correlation between o and t
        tmp = o_z * t_z
        corr = tmp.mean(dim = 0)
        return 1 - corr

class MSECorrLoss(nn.Module):
    def __init__(self, p = 1.5):
        super(MSECorrLoss, self).__init__()
        self.p = p  # weight of the correlation term
        self.mseLoss = nn.MSELoss()
        self.corrLoss = CorrLoss()

    def forward(self, o, t):
        mse = self.mseLoss(o, t)
        corr = self.corrLoss(o, t)
        loss = mse + self.p * corr
        return loss

In our experiments this mixture loss performed slightly better than BCE.
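Written out, the mixture loss implemented above is the following, where $n$ is the batch size and $p$ is the weight of the correlation term (1.5 by default in the code). The second line assumes the default behaviour of the tensor std method, which uses the unbiased $n-1$ estimator. Under that assumption the correlation term is the Pearson coefficient $r$ scaled by $(n-1)/n$, so the two differ slightly for small batches.

```latex
\[
\mathcal{L}(o, t)
  = \underbrace{\frac{1}{n}\sum_{i=1}^{n}(o_i - t_i)^2}_{\text{MSE}}
  + p\,\bigl(1 - c(o, t)\bigr),
\qquad
c(o, t) = \frac{1}{n}\sum_{i=1}^{n}
  \frac{(o_i - \bar{o})(t_i - \bar{t})}{s_o\, s_t}
\]
\[
s_o^2 = \frac{1}{n-1}\sum_{i=1}^{n}(o_i - \bar{o})^2
\;\Longrightarrow\;
c(o, t) = \frac{n-1}{n}\, r(o, t)
\]
```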

Model design

To increase the diversity of the models, we chose variants from different model families, namely the following five models:

- Deberta-v3-large

- Bert-for-patents

- Roberta-large

- Ernie-en-2.0-Large

- Electra-large-discriminator

The CV scores (five per-fold scores followed by the overall CV) are as follows:

deberta-v3-large: [0.8494, 0.8455, 0.8523, 0.8458, 0.8658], cv 0.85176

bertforpatents: [0.8393, 0.8403, 0.8457, 0.8402, 0.8564], cv 0.8444

roberta-large: [0.8183, 0.8172, 0.8203, 0.8193, 0.8398], cv 0.8233

ernie-large: [0.8276, 0.8277, 0.8251, 0.8296, 0.8466], cv 0.8310

electra-large: [0.8429, 0.8309, 0.8259, 0.8416, 0.846], cv 0.8376

Training optimization

Based on experience from previous competitions, we mainly used the following training optimizations:

- Adversarial training: we tried FGM, which improved model training

import torch

class FGM():
    def __init__(self, model):
        self.model = model
        self.backup = {}

    def attack(self, epsilon=1., emb_name='word_embeddings'):
        # emb_name must match the name of the embedding parameter in your model
        for name, param in self.model.named_parameters():
            if param.requires_grad and emb_name in name:
                # Save the clean weights, then step along the normalised gradient
                self.backup[name] = param.data.clone()
                norm = torch.norm(param.grad)
                if norm != 0 and not torch.isnan(norm):
                    r_at = epsilon * param.grad / norm
                    param.data.add_(r_at)

    def restore(self, emb_name='word_embeddings'):
        # emb_name must be the same value that was passed to attack()
        for name, param in self.model.named_parameters():
            if param.requires_grad and emb_name in name:
                assert name in self.backup
                param.data = self.backup[name]
        self.backup = {}

- Model generalization: we added multi-dropout

- EMA, which improved model training

class EMA():
    def __init__(self, model, decay):
        self.model = model
        self.decay = decay
        self.shadow = {}
        self.backup = {}

    def register(self):
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                self.shadow[name] = param.data.clone()

    def update(self):
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                assert name in self.shadow
                new_average = (1.0 - self.decay) * param.data + self.decay * self.shadow[name]
                self.shadow[name] = new_average.clone()

    def apply_shadow(self):
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                assert name in self.shadow
                self.backup[name] = param.data
                param.data = self.shadow[name]

    def restore(self):
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                assert name in self.backup
                param.data = self.backup[name]
        self.backup = {}

# Initialization
ema = EMA(model, 0.999)
ema.register()

# During training: after each parameter update, also update the shadow weights
def train():
    optimizer.step()
    ema.update()

# Before evaluation, apply the shadow weights; afterwards, restore the original parameters
def evaluate():
    ema.apply_shadow()
    # evaluate
    ema.restore()
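The post does not show its multi-dropout code, so the following is only a sketch of the common multi-sample dropout pattern. It applies several dropout masks to the same pooled features, shares one linear layer, and averages the predictions. The class name, the dropout rates and the hidden size are all assumptions for illustration.

```python
import torch
import torch.nn as nn


class MultiSampleDropoutHead(nn.Module):
    """Regression head that averages the outputs of several dropout masks."""

    def __init__(self, hidden_size, dropout_rates=(0.1, 0.2, 0.3, 0.4, 0.5)):
        super().__init__()
        # Hypothetical rates; the original post does not list the ones it used.
        self.dropouts = nn.ModuleList([nn.Dropout(p) for p in dropout_rates])
        self.fc = nn.Linear(hidden_size, 1)

    def forward(self, features):
        # Apply each dropout mask to the same features, reuse one linear
        # layer, and average the resulting predictions.
        outputs = [self.fc(dropout(features)) for dropout in self.dropouts]
        return torch.stack(outputs, dim=0).mean(dim=0)


head = MultiSampleDropoutHead(hidden_size=16)
pooled = torch.randn(4, 16)  # stand-in for the encoder's pooled output
preds = head(pooled)         # shape: (4, 1)
```

In eval mode dropout is the identity, so the head reduces to a single linear layer and adds no inference cost.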

Attempts that did not help:

- AWP

- PGD

Model fusion

Guided by the offline cross-validation scores and the online leaderboard feedback, we fused the models by simple averaging and by weighted averaging; the code below ends with the weighted version.

from sklearn.preprocessing import MinMaxScaler

MMscaler = MinMaxScaler()

predictions1 = MMscaler.fit_transform(submission['predictions1'].values.reshape(-1,1)).reshape(-1)

predictions2 = MMscaler.fit_transform(submission['predictions2'].values.reshape(-1,1)).reshape(-1)

predictions3 = MMscaler.fit_transform(submission['predictions3'].values.reshape(-1,1)).reshape(-1)

predictions4 = MMscaler.fit_transform(submission['predictions4'].values.reshape(-1,1)).reshape(-1)

predictions5 = MMscaler.fit_transform(submission['predictions5'].values.reshape(-1,1)).reshape(-1)

# final_predictions=(predictions1+predictions2)/2

# final_predictions=(predictions1+predictions2+predictions3+predictions4+predictions5)/5

# 5:2:1:1:1

final_predictions=0.5*predictions1+0.2*predictions2+0.1*predictions3+0.1*predictions4+0.1*predictions5
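The same scale-then-weight idea can be sketched with NumPy alone. The helper names and the toy predictions below are hypothetical. The point is that each model's output is first brought to a common [0, 1] range, so that a model with a wider output range does not dominate the weighted sum.

```python
import numpy as np


def min_max_scale(values):
    """Rescale a 1-D array to the range [0, 1]."""
    values = np.asarray(values, dtype=float)
    span = values.max() - values.min()
    if span == 0:
        return np.zeros_like(values)
    return (values - values.min()) / span


def weighted_blend(predictions, weights):
    """Min-max scale each model's predictions, then take a weighted sum."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalise so the weights sum to 1
    scaled = np.stack([min_max_scale(p) for p in predictions])
    return weights @ scaled


# Made-up predictions from two hypothetical models on four test rows.
model_a = np.array([0.10, 0.40, 0.70, 0.90])
model_b = np.array([2.0, 3.0, 5.0, 6.0])  # same ranking, different scale
blend = weighted_blend([model_a, model_b], weights=[5, 2])
```

Passing weights as a ratio such as 5:2 mirrors the 5:2:1:1:1 comment in the code above; the helper normalises them internally.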

Other attempts

- Two-stage

Early on we fine-tuned many different pre-trained models, so we had a fairly large number of features. We tried stacking text statistical features and model predictions with tree models, and at the time this fusion worked well. Part of the code follows.

# ====================================================
# predictions1
# ====================================================
import gc

import pandas as pd
import torch
from torch.utils.data import DataLoader
from transformers import AutoTokenizer

# CFG, TestDataset, CustomModel, inference_fn, device, train, test and titles
# are defined elsewhere in the notebook.

def get_fold_pred(CFG, path, model):
    CFG.path = path
    CFG.model = model
    CFG.config_path = CFG.path + "config.pth"
    CFG.tokenizer = AutoTokenizer.from_pretrained(CFG.path)
    test_dataset = TestDataset(CFG, test)
    test_loader = DataLoader(test_dataset,
                             batch_size=CFG.batch_size,
                             shuffle=False,
                             num_workers=CFG.num_workers, pin_memory=True, drop_last=False)
    predictions = []
    # Run inference with the best checkpoint of every fold
    for fold in CFG.trn_fold:
        model = CustomModel(CFG, config_path=CFG.config_path, pretrained=False)
        state = torch.load(CFG.path + f"{CFG.model.split('/')[-1]}_fold{fold}_best.pth",
                           map_location=torch.device('cpu'))
        model.load_state_dict(state['model'])
        prediction = inference_fn(test_loader, model, device)
        predictions.append(prediction.flatten())
        del model, state, prediction
        gc.collect()
        torch.cuda.empty_cache()
    # predictions1 = np.mean(predictions, axis=0)
    # fea_df = pd.DataFrame(predictions).T
    # fea_df.columns = [f"{CFG.model.split('/')[-1]}_fold{fold}" for fold in CFG.trn_fold]
    # del test_dataset, test_loader
    return predictions

model_paths = [
    "../input/albert-xxlarge-v2/albert-xxlarge-v2/",
    "../input/bert-large-cased-cv5/bert-large-cased/",
    "../input/deberta-base-cv5/deberta-base/",
    "../input/deberta-v3-base-cv5/deberta-v3-base/",
    "../input/deberta-v3-small/deberta-v3-small/",
    "../input/distilroberta-base/distilroberta-base/",
    "../input/roberta-large/roberta-large/",
    "../input/xlm-roberta-base/xlm-roberta-base/",
    "../input/xlmrobertalarge-cv5/xlm-roberta-large/",
]

print("train.shape, test.shape", train.shape, test.shape)
print("titles.shape", titles.shape)

# for model_path in model_paths:
#     with open(f'{model_path}/oof_df.pkl', "rb") as fh:
#         oof = pickle.load(fh)[['id', 'fold', 'pred']]
#     # oof = pd.read_pickle(f'{model_path}/oof_df.pkl')[['id', 'fold', 'pred']]
#     oof[f"{model_path.split('/')[1]}"] = oof['pred']
#     train = train.merge(oof[['id', f"{model_path.split('/')[1]}"]], how='left', on='id')

# Out-of-fold predictions of the first-stage models become training features
oof_res=pd.read_csv('../input/train-res/train_oof.csv')
train = train.merge(oof_res, how='left', on='id')

# model key -> [checkpoint directory, model name]; per-fold test predictions are appended below
model_infos = {
    'albert-xxlarge-v2': ['../input/albert-xxlarge-v2/albert-xxlarge-v2/', "albert-xxlarge-v2"],
    'bert-large-cased': ['../input/bert-large-cased-cv5/bert-large-cased/', "bert-large-cased"],
    'deberta-base': ['../input/deberta-base-cv5/deberta-base/', "deberta-base"],
    'deberta-v3-base': ['../input/deberta-v3-base-cv5/deberta-v3-base/', "deberta-v3-base"],
    'deberta-v3-small': ['../input/deberta-v3-small/deberta-v3-small/', "deberta-v3-small"],
    'distilroberta-base': ['../input/distilroberta-base/distilroberta-base/', "distilroberta-base"],
    'roberta-large': ['../input/roberta-large/roberta-large/', "roberta-large"],
    'xlm-roberta-base': ['../input/xlm-roberta-base/xlm-roberta-base/', "xlm-roberta-base"],
    'xlm-roberta-large': ['../input/xlmrobertalarge-cv5/xlm-roberta-large/', "xlm-roberta-large"],
}

for model, path_info in model_infos.items():
    print(model)
    model_path, model_name = path_info[0], path_info[1]
    fea_df = get_fold_pred(CFG, model_path, model_name)
    model_infos[model].append(fea_df)
    del model_path, model_name

del oof_res

Training code:

import lightgbm as lgb
import numpy as np
import pandas as pd
from catboost import CatBoostRegressor
from scipy.stats import pearsonr
from xgboost import XGBRegressor

# params, xgb_params, train_features, importances, uspppm_model_infos and the
# oof_reg_preds*, merge_pred, sub_preds, sub_reg_preds arrays are defined elsewhere.

for fold_ in range(5):
    print("Fold:", fold_)
    trn_ = train[train['fold'] != fold_].index
    val_ = train[train['fold'] == fold_].index
    # print(train.iloc[val_].sort_values('id'))
    trn_x, trn_y = train[train_features].iloc[trn_], train['score'].iloc[trn_]
    val_x, val_y = train[train_features].iloc[val_], train['score'].iloc[val_]
    # train_folds = folds[folds['fold'] != fold].reset_index(drop=True)
    # valid_folds = folds[folds['fold'] == fold].reset_index(drop=True)

    reg = lgb.LGBMRegressor(**params,n_estimators=1100)
    xgb = XGBRegressor(**xgb_params, n_estimators=1000)
    cat = CatBoostRegressor(iterations=1000,learning_rate=0.03,
                            depth=10,
                            eval_metric='RMSE',
                            random_seed = 42,
                            bagging_temperature = 0.2,
                            od_type='Iter',
                            metric_period = 50,
                            od_wait=20)

    # All three models are trained on log1p(score)
    print("-"* 20 + "LightGBM Training" + "-"* 20)
    reg.fit(trn_x, np.log1p(trn_y),eval_set=[(val_x, np.log1p(val_y))],early_stopping_rounds=50,verbose=100,eval_metric='rmse')
    print("-"* 20 + "XGboost Training" + "-"* 20)
    xgb.fit(trn_x, np.log1p(trn_y),eval_set=[(val_x, np.log1p(val_y))],early_stopping_rounds=50,eval_metric='rmse',verbose=100)
    print("-"* 20 + "Catboost Training" + "-"* 20)
    cat.fit(trn_x, np.log1p(trn_y), eval_set=[(val_x, np.log1p(val_y))],early_stopping_rounds=50,use_best_model=True,verbose=100)

    # Record LightGBM gain importances for this fold
    imp_df = pd.DataFrame()
    imp_df['feature'] = train_features
    imp_df['gain_reg'] = reg.booster_.feature_importance(importance_type='gain')
    imp_df['fold'] = fold_ + 1
    importances = pd.concat([importances, imp_df], axis=0, sort=False)

    # Use the matching fold's first-stage test predictions as test features
    for model, values in model_infos.items():
        test[model] = values[2][fold_]
    for model, values in uspppm_model_infos.items():
        test[f"uspppm_{model}"] = values[2][fold_]

    # for f in tqdm(amount_feas, desc="amount_feas basic aggregation features"):
    #     for cate in category_fea:
    #         if f != cate:
    #             test['{}_{}_medi'.format(cate, f)] = test.groupby(cate)[f].transform('median')
    #             test['{}_{}_mean'.format(cate, f)] = test.groupby(cate)[f].transform('mean')
    #             test['{}_{}_max'.format(cate, f)] = test.groupby(cate)[f].transform('max')
    #             test['{}_{}_min'.format(cate, f)] = test.groupby(cate)[f].transform('min')
    #             test['{}_{}_std'.format(cate, f)] = test.groupby(cate)[f].transform('std')

    # LightGBM
    oof_reg_preds[val_] = reg.predict(val_x, num_iteration=reg.best_iteration_)
    # oof_reg_preds[oof_reg_preds < 0] = 0
    lgb_preds = reg.predict(test[train_features], num_iteration=reg.best_iteration_)
    # lgb_preds[lgb_preds < 0] = 0

    # XGBoost
    oof_reg_preds1[val_] = xgb.predict(val_x)
    oof_reg_preds1[oof_reg_preds1 < 0] = 0
    xgb_preds = xgb.predict(test[train_features])
    # xgb_preds[xgb_preds < 0] = 0

    # CatBoost
    oof_reg_preds2[val_] = cat.predict(val_x)
    oof_reg_preds2[oof_reg_preds2 < 0] = 0
    cat_preds = cat.predict(test[train_features])
    cat_preds[cat_preds < 0] = 0  # the original masked with xgb_preds here, which looks like a typo

    # Merge all predictions
    merge_pred[val_] = oof_reg_preds[val_] * 0.4 + oof_reg_preds1[val_] * 0.3 +oof_reg_preds2[val_] * 0.3
    # sub_reg_preds += np.expm1(_preds) / len(folds)
    sub_preds += (lgb_preds / 5) * 0.6 + (xgb_preds / 5) * 0.2 + (cat_preds / 5) * 0.2  # 5-fold test-set predictions blended from the three models
    sub_reg_preds+=lgb_preds / 5  # 5-fold test-set predictions from LightGBM alone

print("lgb",pearsonr(train['score'], np.expm1(oof_reg_preds))[0]) # lgb
print("xgb",pearsonr(train['score'], np.expm1(oof_reg_preds1))[0]) # xgb
print("cat",pearsonr(train['score'], np.expm1(oof_reg_preds2))[0]) # cat
print("xgb lgb cat",pearsonr(train['score'], np.expm1(merge_pred))[0]) # xgb lgb cat

边栏推荐

- 把 Oracle 数据库从 Windows 系统迁移到 Linux Oracle Rac 集群环境(1)——迁移数据到节点1

- Can automate - 10k, can automate - 20K, do you understand automated testing?

- Tell you about mvcc sequel

- Difference between left join on and join on

- 用向量表示两个坐标系的变换

- vie的刷新机制

- Processon producer process (customized)

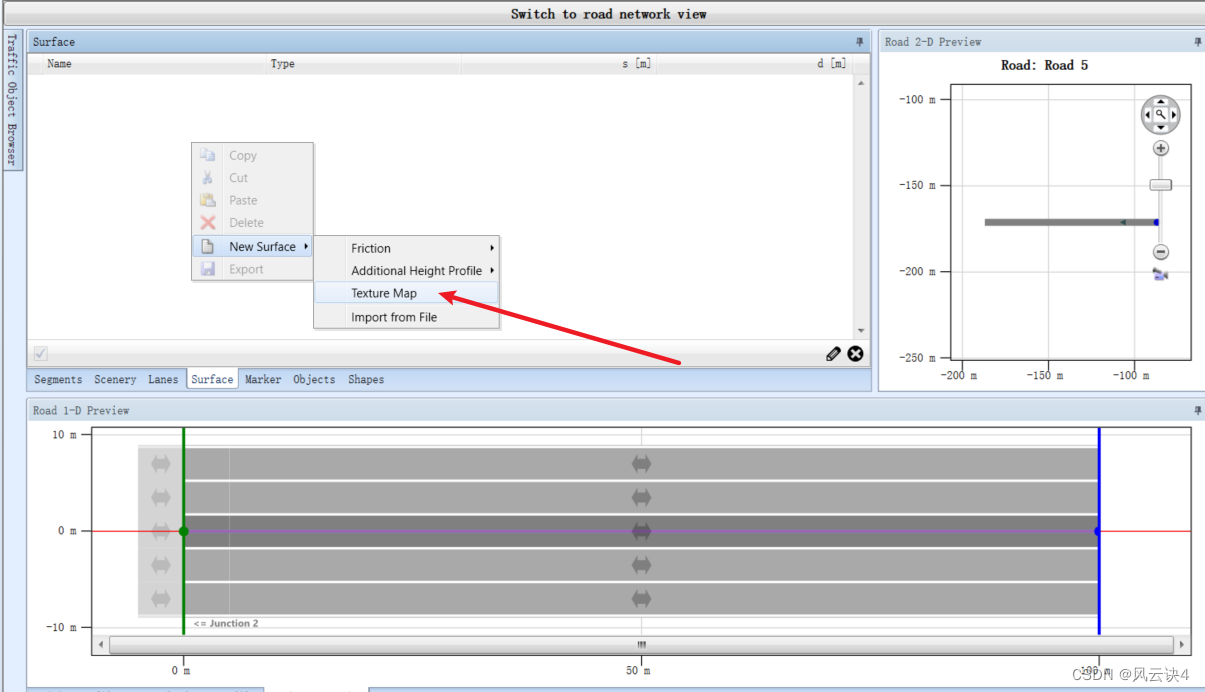

- DSPACE的性能渲染问题

- Detailed explanation of cache (for the postgraduate entrance examination of XD)

- Migrate Oracle database from windows system to Linux Oracle RAC cluster environment (2) -- convert database to cluster mode

猜你喜欢

Leetcode 210: curriculum II (topological sorting)

Copilot免费时代结束!学生党和热门开源项目维护者可白嫖

Difference between left join on and join on

好用的字典-defaultdict

Refresh mechanism of vie

DSPACE设置斑马线和道路箭头

计网 | 【四 网络层】知识点及例题

使用ShaderGraph制作边缘融合粒子Shader的启示

![Network planning | [four network layers] knowledge points and examples](/img/c3/d7f382409e99eeee4dcf4f50f1a259.png)

Network planning | [four network layers] knowledge points and examples

Expressing the transformation of two coordinate systems with vectors

随机推荐

ACM. HJ70 矩阵乘法计算量估算 ●●

爱

计算机三级(数据库)备考题目知识点总结

Pytorch learning notes (VII) ------------------ vision transformer

小米路由R4A千兆版安装breed+OpenWRT教程(全脚本无需硬改)

UnityShader入门精要——PBS基于物理的渲染

Jetson nano from introduction to practice (cases: opencv configuration, face detection, QR code detection)

AI clothing generation helps you complete the last step of clothing design

ACM. HJ75 公共子串计算 ●●

Insurance can also be bought together? Four risks that individuals can pool enough people to buy Medical Insurance in groups

消息称一加将很快更新TWS耳塞、智能手表和手环产品线

记一次beego通过go get命令后找不到bee.exe的坑

Leecode learning notes - the shortest path for a robot to reach its destination

把 Oracle 数据库从 Windows 系统迁移到 Linux Oracle Rac 集群环境(1)——迁移数据到节点1

Once beego failed to find bee after passing the go get command Exe's pit

Introduction to CUDA Programming minimalist tutorial

How to uninstall CUDA

Of the seven levels of software testers, it is said that only 1% can achieve level 7

Groovy之高级用法

doak-cms 文章管理系统 推荐