PyTorch CNN image recognition and classification model training framework

2022-06-30 05:00:00 【Jason_ Chen__】

Preface

PyTorch CNN image training framework

I. Image dataset preprocessing

II. Model training

1. Prepare transforms.Compose

from torchvision import transforms

# transforms.Scale is deprecated; transforms.Resize is its replacement
data_transforms = transforms.Compose([
    transforms.Resize(64),
    transforms.CenterCrop(48),
    transforms.ToTensor(),
    transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
])
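To make the effect of `transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])` concrete, the sketch below reproduces its per-channel arithmetic, `(x - mean) / std`, in plain Python. The helper name `normalize_value` is made up for illustration, and it assumes `ToTensor` has already scaled 8-bit pixels to the range [0, 1].

```python
def normalize_value(x, mean=0.5, std=0.5):
    """Per-channel arithmetic applied by Normalize: (x - mean) / std."""
    return (x - mean) / std


# ToTensor maps 8-bit pixel values 0..255 to floats in [0, 1]
for pixel in (0, 128, 255):
    scaled = pixel / 255.0
    print(pixel, round(normalize_value(scaled), 3))
```

With a mean and standard deviation of 0.5, the darkest pixel maps to -1 and the brightest to 1, so the network sees inputs centered around zero.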

2. Prepare a generic image dataset

from torchvision import datasets

# Absolute path to the image folder: os.path.join(dataset root, subdirectory)
# data_transforms is the transforms.Compose([...]) pipeline built in step 1
ImageFolder = datasets.ImageFolder('{absolute path to the image folder}', data_transforms)
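`datasets.ImageFolder` expects one subfolder per class and assigns integer labels by sorting the subfolder names. The stdlib-only sketch below imitates that rule so you can check which index each class will receive. The folder names are hypothetical (the article does not list its own), and `class_to_index` is an illustrative helper, not a torchvision function.

```python
import os
import tempfile


def class_to_index(root):
    """Imitate ImageFolder's labeling: sorted subfolder names -> 0, 1, 2, ..."""
    classes = sorted(
        entry for entry in os.listdir(root)
        if os.path.isdir(os.path.join(root, entry))
    )
    return {name: idx for idx, name in enumerate(classes)}


# Hypothetical layout: one subfolder per class under the dataset root
with tempfile.TemporaryDirectory() as root:
    for name in ("3open", "0none", "2smile", "1pout"):
        os.makedirs(os.path.join(root, name))
    mapping = class_to_index(root)
    print(mapping)
```

With torchvision itself, the same mapping is exposed as the dataset's `class_to_idx` attribute. Because the order is alphabetical, it is worth checking that the indices match the label order the prediction code assumes.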

3. Load the generic image dataset

import torch

torch.utils.data.DataLoader(
    ImageFolder,  # the Dataset created with ImageFolder
    batch_size=128,  # number of images loaded per batch; limited by GPU memory
    shuffle=True,  # True for the training set, False otherwise
    num_workers=0  # number of worker subprocesses for data loading (0 loads in the main process)
)

4. Number of images in the generic image dataset

dataset_sizes = len(ImageFolder)
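The dataset size and the batch size together determine how many batches one epoch contains. A minimal sketch of that arithmetic follows; `batches_per_epoch` is an illustrative helper and the dataset size of 1000 is a made-up figure.

```python
import math


def batches_per_epoch(dataset_size, batch_size, drop_last=False):
    """Number of batches a DataLoader yields for one pass over the dataset."""
    if drop_last:
        return dataset_size // batch_size
    return math.ceil(dataset_size / batch_size)


# Hypothetical dataset of 1000 images with the batch size of 128 used above
print(batches_per_epoch(1000, 128))
print(batches_per_epoch(1000, 128, drop_last=True))
```

The last batch is smaller than `batch_size` unless the dataset size is an exact multiple of it or `drop_last=True` is set.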

5. Define the training model

import torch.nn as nn
import torch.nn.functional as F

class simpleconv3(nn.Module):
    def __init__(self):
        super(simpleconv3, self).__init__()
        # ----------------- first layer -------------------
        # Conv2d arguments: input channels, output channels (number of kernels),
        # kernel size, stride
        self.conv1 = nn.Conv2d(3, 12, 3, 2)
        # Batch normalization; num_features is the channel count C of an (N, C, H, W) input
        self.bn1 = nn.BatchNorm2d(12)
        # ----------------- second layer -------------------
        self.conv2 = nn.Conv2d(12, 24, 3, 2)
        self.bn2 = nn.BatchNorm2d(24)
        # ----------------- third layer -------------------
        self.conv3 = nn.Conv2d(24, 48, 3, 2)
        self.bn3 = nn.BatchNorm2d(48)
        # For a 48 x 48 input, the feature map after the three convolutions is 48 x 5 x 5
        self.fc1 = nn.Linear(48 * 5 * 5, 1200)
        self.fc2 = nn.Linear(1200, 128)
        self.fc3 = nn.Linear(128, 4)
        # Why split this into three fully connected layers? I do not understand it yet;
        # I hope someone more experienced can explain

    def forward(self, x):
        # F.relu() applies the ReLU activation (a non-linearity); it does not produce probabilities
        x = F.relu(self.bn1(self.conv1(x)))
        # print("bn1 shape", x.shape)
        x = F.relu(self.bn2(self.conv2(x)))
        x = F.relu(self.bn3(self.conv3(x)))
        x = x.view(-1, 48 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)  # raw class scores (logits) for the 4 classes
        return x
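The `48 * 5 * 5` input size of `fc1` follows from the convolution output-size formula. The sketch below checks it in plain Python, assuming a 48 x 48 input (after `CenterCrop(48)`), no padding and no dilation, which are the `nn.Conv2d` defaults. `conv_out_size` is an illustrative helper.

```python
def conv_out_size(size, kernel=3, stride=2, padding=0):
    """Output height/width of a convolution: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


size = 48  # input is 48 x 48 after CenterCrop(48)
sizes = []
for _ in range(3):  # conv1, conv2, conv3 all use kernel 3 and stride 2
    size = conv_out_size(size)
    sizes.append(size)

print(sizes)              # [23, 11, 5]
print(48 * size * size)   # 1200 input features for fc1
```

If the crop size or the strides change, rerunning this calculation gives the new value to use in `nn.Linear` and in `x.view`.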

6. Set up and use the GPU

use_gpu = torch.cuda.is_available()  # check whether a GPU is available
modelclc = simpleconv3()
if use_gpu:
    torch.cuda.empty_cache()  # release cached GPU memory
    modelclc = modelclc.cuda()  # move the model to the GPU for training

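7. Configure training parameters

import torch.optim as optim
from torch.optim import lr_scheduler

# Loss: cross-entropy, which measures how close the predictions are to the expected labels
criterion = nn.CrossEntropyLoss()
# Optimizer: stochastic gradient descent with momentum
optimizer_ft = optim.SGD(modelclc.parameters(), lr=0.1, momentum=0.9)
# Learning-rate scheduler: multiply the learning rate by gamma every step_size scheduler steps
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=100, gamma=0.1)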
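The schedule that `StepLR` produces can be written as a closed-form expression. The sketch below evaluates it in plain Python, assuming `scheduler.step()` is called once per epoch; `step_lr` is an illustrative helper, not part of PyTorch.

```python
def step_lr(base_lr, epoch, step_size=100, gamma=0.1):
    """Learning rate under a step schedule: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 99, 100, 200):
    print(epoch, step_lr(0.1, epoch))
```

With `step_size=100`, the first decay happens at epoch 100, so a run of only a few epochs trains at the initial learning rate of 0.1 throughout.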

# Train the model with the settings above
modelclc = train_model(model=modelclc,
                       criterion=criterion,
                       optimizer=optimizer_ft,
                       scheduler=exp_lr_scheduler,
                       num_epochs=4)  # adjust the number of training epochs here
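The article calls `train_model` without listing its body. The following is only a minimal sketch of what such a training loop might look like, not the author's implementation. The extra `dataloader` argument is an assumption (the original call does not pass one), and the random tensors and the small linear model are stand-ins so the sketch runs without an image folder.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim import lr_scheduler
from torch.utils.data import DataLoader, TensorDataset


def train_model(model, criterion, optimizer, scheduler, num_epochs, dataloader):
    """Minimal training loop (sketch). `dataloader` is an assumed extra argument."""
    device = next(model.parameters()).device
    for epoch in range(num_epochs):
        model.train()
        running_loss, running_correct, seen = 0.0, 0, 0
        for inputs, labels in dataloader:
            inputs, labels = inputs.to(device), labels.to(device)
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item() * inputs.size(0)
            running_correct += (outputs.argmax(dim=1) == labels).sum().item()
            seen += inputs.size(0)
        scheduler.step()  # advance the learning-rate schedule once per epoch
        print(f"epoch {epoch}: loss={running_loss / seen:.4f} "
              f"acc={running_correct / seen:.4f}")
    return model


# Stand-in data and model: 32 random 3 x 48 x 48 images with labels in 0..3
data = TensorDataset(torch.randn(32, 3, 48, 48), torch.randint(0, 4, (32,)))
loader = DataLoader(data, batch_size=8, shuffle=True)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 48 * 48, 4))
opt = optim.SGD(toy_model.parameters(), lr=0.01, momentum=0.9)
sched = lr_scheduler.StepLR(opt, step_size=100, gamma=0.1)
trained = train_model(toy_model, nn.CrossEntropyLoss(), opt, sched,
                      num_epochs=2, dataloader=loader)
```

A fuller loop would usually add a validation phase per epoch and keep the weights with the best validation accuracy.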

8. Save the trained model weights

if not os.path.exists("models"):
    os.mkdir('models')
torch.save(modelclc.state_dict(), 'models/model.ckpt')

III. Model validation

1. Imports

import sys

import numpy as np

import cv2

import os

import dlib

import torch

import torch.nn as nn

import torch.optim as optim

from torch.optim import lr_scheduler

from torch.autograd import Variable

import torchvision

from torchvision import datasets, models, transforms

import time

from PIL import Image

import torch.nn.functional as F

import matplotlib.pyplot as plt

import warnings

2. Load the detection models

# dlib 68-point facial landmark predictor (used to locate the lips)
PREDICTOR_PATH = "./Emotion_Recognition_File/face_detect_model/shape_predictor_68_face_landmarks.dat"
predictor = dlib.shape_predictor(PREDICTOR_PATH)
# OpenCV Haar cascade face detector
cascade_path = './Emotion_Recognition_File/face_detect_model/haarcascade_frontalface_default.xml'
cascade = cv2.CascadeClassifier(cascade_path)

5. Load the trained model

net = simpleconv3()
net.eval()  # evaluation mode: BatchNorm uses its running statistics
modelpath = "./models/model.ckpt"  # model path
# map_location is a function or dict that specifies how to remap storage locations;
# this lambda keeps every tensor on the CPU
net.load_state_dict(torch.load(modelpath, map_location=lambda storage, loc: storage))

6. Test the face detector and the facial landmark predictor

# Process the test images one file at a time
img_path = "./Emotion_Recognition_File/find_face_img/"
imagepaths = os.listdir(img_path)  # file names in the image folder
for imagepath in imagepaths:
    im = cv2.imread(os.path.join(img_path, imagepath), 1)
    try:
        rects = cascade.detectMultiScale(im, 1.3, 5)  # face detection
        # First detected face: top-left corner (x, y), width w, height h
        x, y, w, h = rects[0]
        # print(x, y, w, h)
        rect = dlib.rectangle(int(x), int(y), int(x + w), int(y + h))
        # Matrix of all landmark coordinates, one (x, y) row per point
        landmarks = np.matrix([[p.x, p.y]
                               for p in predictor(im, rect).parts()])
    except Exception:
        # print("No face was detected")
        continue  # skip images in which no face was detected

6. Get a lip crop

    # (continues inside the `for imagepath in imagepaths:` loop)
    # Find the smallest rectangle enclosing the lips from the outermost landmarks.
    # The 68 landmarks are ordered as follows:
    # jawline from the left ear (0) through the chin to the right ear (16),
    # left eyebrow (17-21), right eyebrow (22-26), nose from top to bottom (27-30),
    # nostrils (31-35), left eye (36-41), right eye (42-47),
    # outer mouth contour (48-59), inner mouth contour (60-67)
    xmin = 10000
    xmax = 0
    ymin = 10000
    ymax = 0
    # Traverse the lip points; range(48, 68) covers indices 48 through 67
    for i in range(48, 68):
        x = landmarks[i, 0]  # x coordinate of the lip point
        y = landmarks[i, 1]  # y coordinate of the lip point
        if x < xmin:
            xmin = x
        if x > xmax:
            xmax = x
        if y < ymin:
            ymin = y
        if y > ymax:
            ymax = y
    # Corners of the lip bounding box
    # print("xmin=", xmin)
    # print("xmax=", xmax)
    # print("ymin=", ymin)
    # print("ymax=", ymax)
    # print('\n')
    roiwidth = xmax - xmin  # width of the lip bounding box
    roiheight = ymax - ymin  # height of the lip bounding box
    roi = im[ymin:ymax, xmin:xmax, 0:3]
    # Expand the box to a square 1.5 times the longer side, so the mouth sits in the
    # center with a margin and edge features are not lost during convolution
    if roiwidth > roiheight:
        dstlen = 1.5 * roiwidth
    else:
        dstlen = 1.5 * roiheight
    diff_xlen = dstlen - roiwidth
    diff_ylen = dstlen - roiheight
    newx = xmin
    newy = ymin
    imagerows, imagecols, channel = im.shape
    if newx >= diff_xlen / 2 and newx + roiwidth + diff_xlen / 2 < imagecols:
        newx = newx - diff_xlen / 2
    elif newx < diff_xlen / 2:
        newx = 0
    else:
        newx = imagecols - dstlen
    if newy >= diff_ylen / 2 and newy + roiheight + diff_ylen / 2 < imagerows:
        newy = newy - diff_ylen / 2
    elif newy < diff_ylen / 2:
        newy = 0
    else:
        newy = imagerows - dstlen  # the vertical limit is the image height (rows), not the width
    # Crop the lip region
    roi = im[int(newy):int(newy + dstlen), int(newx):int(newx + dstlen), 0:3]
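The expand-and-clamp logic above can be isolated into a small pure function, which makes it easy to test without an image. This is a compact alternative formulation under the same idea (square side of 1.5 times the longer edge, centered on the lips, kept inside the image), not the author's exact code. `square_crop_box` and its parameters are illustrative names.

```python
def square_crop_box(xmin, ymin, xmax, ymax, image_w, image_h, scale=1.5):
    """Return (x, y, side) of a square box around the lip rectangle, kept inside the image."""
    side = int(scale * max(xmax - xmin, ymax - ymin))
    side = min(side, image_w, image_h)  # the square cannot be larger than the image
    # Center the square on the lip rectangle, then clamp it to the image bounds
    x = (xmin + xmax) // 2 - side // 2
    y = (ymin + ymax) // 2 - side // 2
    x = max(0, min(x, image_w - side))
    y = max(0, min(y, image_h - side))
    return x, y, side


# Lip box well inside a 640 x 480 image, then one in the bottom-right corner
print(square_crop_box(100, 120, 160, 150, 640, 480))
print(square_crop_box(600, 440, 640, 480, 640, 480))
```

The crop would then be taken as `im[y:y + side, x:x + side]`, which always yields a full square inside the image.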

7. Prepare transforms.Compose

data_transforms = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
])

8. Predict the result

    # (continues inside the `for imagepath in imagepaths:` loop)
    # BGR -> RGB
    roi = cv2.cvtColor(roi, cv2.COLOR_BGR2RGB)
    # Resize to the 48 x 48 x 3 model input and scale pixel values to [0, 1]
    roiresized = cv2.resize(roi, (48, 48)).astype(np.float32) / 255.0
    # Convert to a tensor, normalize, and add a batch dimension on axis 0.
    # ToTensor reorders H x W x C to C x H x W, so the shape is [1, 3, 48, 48]
    imgblob = data_transforms(roiresized).unsqueeze(0)
    print(imgblob)
    # Inference needs no gradients. A bare torch.no_grad() call has no effect, so it is
    # used as a context manager here; wrapping the tensor in Variable is no longer needed
    with torch.no_grad():
        # Softmax over the class dimension turns the raw scores into probabilities
        predict = F.softmax(net(imgblob), dim=1)
    print(predict)
    # Convert the prediction to a NumPy array and use np.argmax() to get the index
    # of the largest probability, which is the index of the predicted expression
    index = np.argmax(predict.detach().numpy())
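The last two steps, softmax followed by argmax, can be illustrated without PyTorch. The sketch below uses made-up scores for a single image and the label order that the display step of the article assumes (0 none, 1 pout, 2 smile, 3 open). `softmax` here is a plain-Python helper, not `F.softmax`.

```python
import math

LABELS = ["none", "pout", "smile", "open"]  # index order used when labeling the picture


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [0.2, 1.5, 3.1, -0.4]  # made-up network outputs for one image
probs = softmax(logits)
index = max(range(len(probs)), key=probs.__getitem__)
print(LABELS[index], round(probs[index], 3))
```

Softmax does not change which class has the highest score, so taking argmax of the raw outputs gives the same index. The softmax is only needed when the probabilities themselves are of interest.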

9. Frame the lips in the picture and display the result

    # (continues inside the `for imagepath in imagepaths:` loop)
    # Read the picture again
    im_show = cv2.imread(os.path.join(img_path, imagepath), 1)
    # Get the dimensions of the picture
    im_h, im_w, im_c = im_show.shape
    pos_x = int(newx + dstlen)
    pos_y = int(newy + dstlen)
    font = cv2.FONT_HERSHEY_SIMPLEX
    # Draw the lip box on the picture
    cv2.rectangle(im_show, (int(newx), int(newy)),
                  (int(newx + dstlen), int(newy + dstlen)), (0, 255, 255), 2)
    # Write the predicted label next to the box: 0 none, 1 pout, 2 smile, 3 open
    labels = ['none', 'pout', 'smile', 'open']
    cv2.putText(im_show, labels[index], (pos_x, pos_y), font, 1.5, (0, 0, 255), 2)
    # cv2.namedWindow('result', 0)
    # cv2.imshow('result', im_show)
    # Write the picture to ./results (the folder must already exist)
    cv2.imwrite(os.path.join('results', imagepath), im_show)
    # print(os.path.join('results', imagepath))
    # Reverse the channel order for display: matplotlib expects RGB, while OpenCV stores BGR
    plt.imshow(im_show[:, :, ::-1])
    plt.show()
    # cv2.waitKey(0)
    # cv2.destroyAllWindows()

IV. Model tuning

...

边栏推荐

- Procedural animation -- inverse kinematics of tentacles

- [recruitment] UE4 Development Engineer

- 力扣59. 螺旋矩阵 II

- Why does the computer have no network after win10 is turned on?

- Exploration of unity webgl

- Create a simple battle game with photon pun

- Unity is associated with vs. there is a compiler problem when opening

- Unity a* road finding force planning

- Writing unityshader with sublimetext

- 力扣349. 两个数组的交集

猜你喜欢

深度学习------不同方法实现Inception-10

Unity automatic pathfinding

How does unity use mapbox to implement real maps in games?

UE4 method of embedding web pages

【Paper】2019_ Distributed Cooperative Control of a High-speed Train

Some problems encountered in unity steamvr

Unity lens making

SCM learning notes: interrupt learning

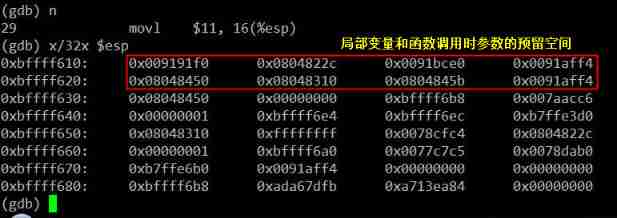

Deeply understand the function calling process of C language

Lambda&Stream

随机推荐

Unreal 4 unavigationsystemv1 compilation error

PS1 Contemporary Art Center, Museum of modern art, New York

ParticleSystem in the official Manual of unity_ Collision module

力扣59. 螺旋矩阵 II

Procedural animation -- inverse kinematics of tentacles

Webots learning notes

图的一些表示方式、邻居和度的介绍

[control] multi agent system summary. 5. system consolidation.

Create a simple battle game with photon pun

力扣292周赛题解

Unity/ue reads OPC UA and OPC Da data (UE4)

[recruitment] UE4 Development Engineer

Arrays class

力扣2049:统计最高分的节点数目

Unity script life cycle and execution sequence

UnityEngine. JsonUtility. The pit of fromjason()

Photon pun refresh hall room list

【VCS+Verdi联合仿真】~ 以计数器为例

amd锐龙CPU A320系列主板如何安装win7

Unrealeengine4 - about uobject's giant pit that is automatically GC garbage collected