当前位置:网站首页>Scrapy framework (I): basic use

Scrapy framework (I): basic use

2022-06-27 15:34:00 【User 8336546】

Preface

This article briefly introduces Scrapy The basic use of the framework , And some problems and solutions encountered in the process of use .

Scrapy Basic use of framework

Installation of environment

1. Enter the following instructions to install wheel

pip install wheel

2. download twisted

Here is a download link :http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

notes : There are two points to note when downloading :

- To download with yourself python File corresponding to version ,

cpxxVersion number .( For example, I python Version is 3.8.2, Just download cp38 The file of ) - Download the corresponding file according to the number of bits of the operating system .32 Bit operating system download

win32;64 Bit operating system download win_amd64.

3. install twisted

Download the good in the previous step twisted Enter the following command in the directory of :

pip install Twisted-20.3.0-cp38-cp38-win_amd64.whl

4. Enter the following instructions to install pywin32

pip install pywin32

5. Enter the following instructions to install scrapy

pip install scrapy

6. test

Input in the terminal scrapy command , If no error is reported, the installation is successful .

establish scrapy engineering

Here it is. PyCharm Created in the scrapy engineering

1. open Terminal panel , Enter the following instructions to create a scrapy engineering

scrapy startproject ProjectName

ProjectNameIs the project name , Define your own .

2. The following directories are automatically generated

3. Create a crawler file

First, enter the newly created project directory :

cd ProjectName

And then in spiders Create a crawler file in a subdirectory

scrapy genspider spiderName www.xxx.com

spiderNameIs the name of the crawler file , Define your own .

4. Execute the project

scrapy crawl spiderName

Modification of file parameters

In order to better implement the crawler project , Some file parameters need to be modified .

1.spiderName.py

The contents of the crawler file are as follows :

import scrapy

class FirstSpider(scrapy.Spider):

# The name of the crawler file : Is a unique identifier of the crawler source file

name = 'spiderName'

# Allowed domain names : Used to define start_urls Which... In the list url You can send requests

allowed_domains = ['www.baidu.com']

# Initial url list : The... Stored in this list url Will be scrapy Send the request automatically

start_urls = ['http://www.baidu.com/','https://www.douban.com']

# For data analysis :response The parameter represents the corresponding response object after the request is successful

def parse(self, response):

passnotes :

allowed_domainsThe list is used to limit the requested url. In general, there is no need for , Just comment it out .

2.settings.py

1). ROBOTSTXT_OBEY

find ROBOTSTXT_OBEY keyword , The default parameter here is Ture.( That is, the project complies with by default robots agreement ) Practice for the project , It can be temporarily changed to False.

# Obey robots.txt rules ROBOTSTXT_OBEY = False

2). USER_AGENT

find USER_AGENT keyword , The default comment here is . Modify its contents , To avoid UA Anti creeping .

# Crawl responsibly by identifying yourself (and your website) on the user-agent USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.50'

3). LOG_LEVEL

In order to view the project operation results more clearly ( The default running result of the project will print a large amount of log information ), Can be added manually LOG_LEVEL keyword .

# Displays log information of the specified type LOG_LEVEL = 'ERROR' # Show only error messages

Possible problems

1. Installation completed successfully scrapy, But after the crawler file is created, it still shows import scrapy Mistake .

The environment in which I practice is based on Python3.8 Various virtual environments created , However, in building scrapy Project time pip install scrapy Always report an error .

Initially, it was manually posted on the official website :https://scrapy.org/ download scrapy library , Then install to the virtual environment site-packages Under the table of contents , Sure enough, looking back import scrapy It's normal , Programs can also run . But still print a lot of error messages , adopt PyCharm Of Python Interpreter Check to see if Scrapy Library included .

But I tried some solutions , To no avail …

Finally found Anaconda Bring their own Scrapy library , So it is based on Anaconda Created a virtual environment , Perfect operation ~~~~

ending

study hard

边栏推荐

- 16 -- 删除无效的括号

- 避孕套巨头过去两年销量下降40% ,下降原因是什么?

- 专家:让你低分上好校的都是诈骗

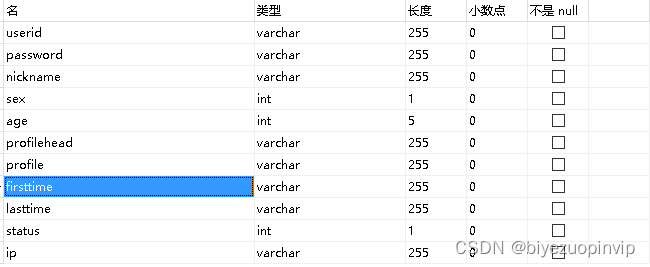

- Web chat room system based on SSM

- 漏洞复现----34、yapi 远程命令执行漏洞

- E modulenotfounderror: no module named 'psychopg2' (resolved)

- Abnormal analysis of pcf8591 voltage measurement data

- QT 如何在背景图中将部分区域设置为透明

- Jupiter core error

- Elegant custom ThreadPoolExecutor thread pool

猜你喜欢

#28对象方法扩展

Web chat room system based on SSM

ReentrantLock、ReentrantReadWriteLock、StampedLock

What is the London Silver code

Pychart installation and setup

QT notes (XXVIII) using qwebengineview to display web pages

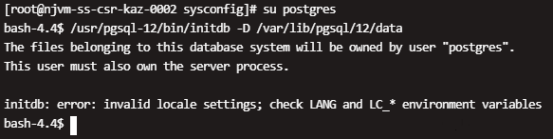

CentOS8-postgresql初始化时报错:initdb: error: invalid locale settings; check LANG and LC_* environment

AutoCAD - line width setting

一场分销裂变活动,不止是发发朋友圈这么简单!

![[digital signal processing] discrete time signal (discrete time signal knowledge points | signal definition | signal classification | classification according to certainty | classification according t](/img/69/daff175c3c6a8971d631f9e681b114.jpg)

[digital signal processing] discrete time signal (discrete time signal knowledge points | signal definition | signal classification | classification according to certainty | classification according t

随机推荐

Numerical extension of 27es6

Typescript learning materials

机械硬盘和ssd固态硬盘的原理对比分析

How is the London Silver point difference calculated

E-week finance Q1 mobile banking has 650million active users; Layout of financial subsidiaries in emerging fields

Fundamentals of software engineering (I)

Great God developed the new H5 version of arXiv, saying goodbye to formula typography errors in one step, and the mobile phone can easily read literature

AQS抽象队列同步器

Luogu_ P1008 [noip1998 popularization group] triple strike_ enumeration

Synchronized and lock escalation

LVI: feature extraction and sorting of lidar subsystem

Volatile and JMM

Computer screen splitting method

Difference between special invoice and ordinary invoice

About the meaning of the first two $symbols of SAP ui5 parameter $$updategroupid

洛谷入门2【分支结构】题单题解

Design of electronic calculator system based on FPGA (with code)

创建数据库并使用

Beginner level Luogu 2 [branch structure] problem list solution

Luogu_ P1003 [noip2011 improvement group] carpet laying_ Violence enumeration