Activation functions: ReLU vs. sigmoid

2022-07-02 20:22:00 【Zi Yan Ruoshui】

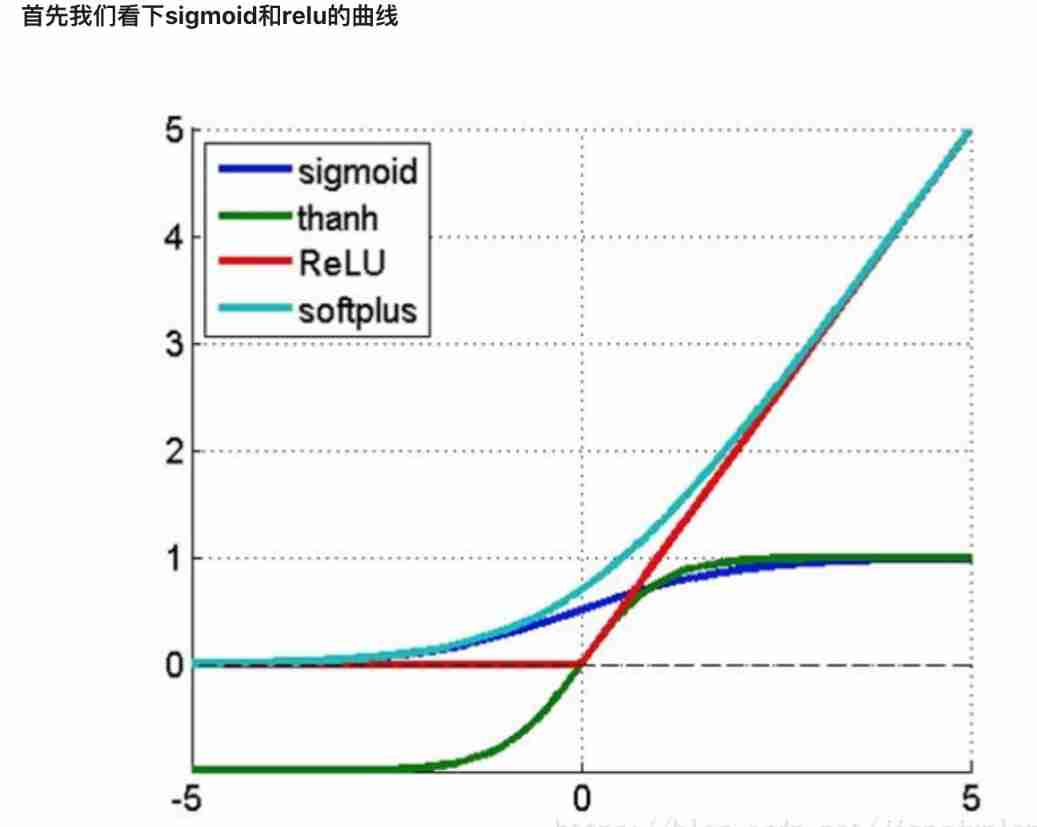

When data flows through a sigmoid, changes in it are significantly attenuated.
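The sketch below illustrates this attenuation. It is written in plain Python for this explanation and is not taken from the original post. It evaluates the sigmoid and its derivative $\sigma'(x) = \sigma(x)(1 - \sigma(x))$. That derivative never exceeds 0.25. In the saturated region, a large change in the input moves the output only slightly.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s(x) * (1 - s(x)), largest (0.25) at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


# A large change in the input produces only a small change in the output:
# moving the input from 2 to 6 shifts the output by about 0.12.
delta_in = 6.0 - 2.0
delta_out = sigmoid(6.0) - sigmoid(2.0)

# Scan the slope on [-10, 10]; it peaks at 0.25, so each sigmoid scales
# a small perturbation by at most a factor of 0.25.
max_grad = max(sigmoid_grad(x / 100.0) for x in range(-1000, 1001))
print(f"input change {delta_in}, output change {delta_out:.3f}, max slope {max_grad}")
```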

Suppose a weight $w$ in an early layer undergoes a large change $\Delta w$. After passing through a sigmoid, it becomes only a small change in that layer's output. The change keeps attenuating as it propagates through each subsequent sigmoid layer, until it reaches the loss as a tiny $\Delta l$. The gradient can be estimated as $\partial l / \partial w \approx \Delta l / \Delta w$. So you will find that the gradients $\partial l / \partial w$ of the earlier layers are noticeably smaller than those of the later layers.

With gradient descent, the parameters of the later layers therefore update faster than those of the earlier layers and converge sooner. As a result, the later layers are almost fully trained while the earlier layers' parameters are still close to their random initial values, which leads to poor training results.
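The argument can be checked numerically on a toy model. The sketch below assumes a hypothetical chain of six scalar sigmoid units, $a_k = \sigma(w_k a_{k-1})$. Every weight is set to 1, the input is $x = 1$, and the final output is treated as the loss $l$. The helper `layer_gradients` and the learning rate $\eta = 0.5$ are illustrative choices, not part of the original post. Backpropagation multiplies by $\sigma'(z_k) \le 0.25$ at every layer, so $\partial l / \partial w_k$ shrinks toward the input. A gradient-descent step $w_k \leftarrow w_k - \eta \, \partial l / \partial w_k$ therefore moves the early weights much less than the late ones.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def layer_gradients(weights: list[float], x: float) -> list[float]:
    """Return dl/dw_k for a hypothetical chain of scalar sigmoid units.

    Toy model (an assumption for illustration):
    a_0 = x, z_k = w_k * a_{k-1}, a_k = sigmoid(z_k), and the loss l = a_n.
    """
    # Forward pass: keep every activation for the backward pass.
    acts = [x]
    for w in weights:
        acts.append(sigmoid(w * acts[-1]))

    # Backward pass: delta holds dl/da for the current layer's output.
    grads = [0.0] * len(weights)
    delta = 1.0  # dl/da_n, since l = a_n
    for k in reversed(range(len(weights))):
        a_out = acts[k + 1]
        dz = delta * a_out * (1.0 - a_out)  # dl/dz_k: multiplied by sigmoid'(z_k) <= 0.25
        grads[k] = dz * acts[k]             # dl/dw_k = dl/dz_k * a_{k-1}
        delta = dz * weights[k]             # dl/da_{k-1}, passed to the earlier layer
    return grads


weights = [1.0] * 6
grads = layer_gradients(weights, x=1.0)

learning_rate = 0.5  # hypothetical step size
for k, g in enumerate(grads, start=1):
    # One gradient-descent step changes w_k by -learning_rate * g.
    print(f"layer {k}: dl/dw = {g:.2e}, update = {-learning_rate * g:.2e}")
```

In this setup, the first layer's gradient comes out more than two orders of magnitude smaller than the last layer's. This is the imbalance between early and late layers described above.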

Therefore, the ML community looked for activation functions to replace the sigmoid, such as ReLU.

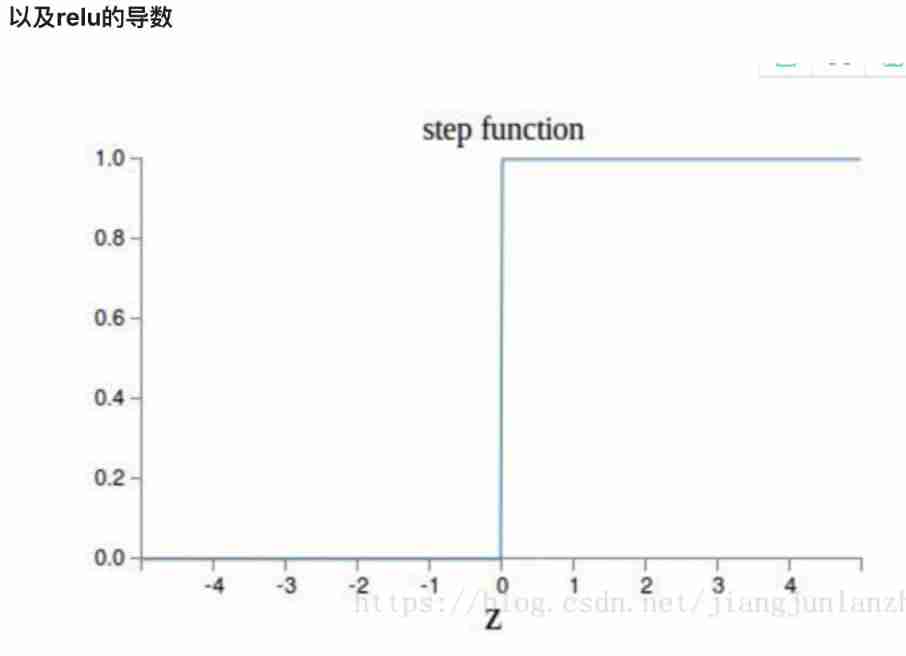

For inputs greater than 0, the gradient of ReLU is a constant 1. For inputs less than 0, its derivative is 0. So once a neuron's input falls into the negative region, its gradient is 0, which means the neuron receives no update during training. Only when the input is in the positive region is there a nonzero gradient, and only then is the neuron trained (strengthened) on that step.
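For contrast with the sigmoid, here is a minimal sketch of ReLU and its derivative. It uses a similar hypothetical toy chain of scalar units; the weights, the input and the `relu_chain_gradients` helper are illustrative assumptions. ReLU is not differentiable at 0, so the code assumes the common convention of using 0 there. When every unit stays in the positive region, the gradient passes through each layer unchanged. When one unit's input is negative, that unit outputs 0 and, in this chain, no gradient reaches any weight.

```python
def relu(x: float) -> float:
    """ReLU: max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, 0 for x < 0.

    Assumption: at x == 0 (not differentiable) we use 0, a common convention.
    """
    return 1.0 if x > 0 else 0.0


def relu_chain_gradients(weights: list[float], x: float) -> list[float]:
    """Return dl/dw_k for a hypothetical chain a_k = relu(w_k * a_{k-1}), l = a_n."""
    acts, zs = [x], []
    for w in weights:
        zs.append(w * acts[-1])
        acts.append(relu(zs[-1]))

    grads = [0.0] * len(weights)
    delta = 1.0  # dl/da_n
    for k in reversed(range(len(weights))):
        dz = delta * relu_grad(zs[k])  # factor is exactly 1 or 0, never a fraction
        grads[k] = dz * acts[k]        # dl/dw_k = dl/dz_k * a_{k-1}
        delta = dz * weights[k]        # dl/da_{k-1}
    return grads


# Every unit stays in the positive region: each gradient is exactly 1.0.
active = relu_chain_gradients([1.0] * 6, x=1.0)
# The second unit gets a negative input (-1), outputs 0 and has derivative 0,
# so every gradient in this chain is 0.
dead = relu_chain_gradients([1.0, -1.0, 1.0, 1.0, 1.0, 1.0], x=1.0)
print("active:", active)
print("largest |gradient| with a dead unit:", max(abs(g) for g in dead))
```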

This behavior of ReLU closely resembles how neurons are activated in biology.

To summarize, ReLU has the following characteristics as an activation function:

1) It is fast to compute;

2) It mimics the activation behavior of biological neurons;

3) A series of ReLUs with different biases, added together, can approximate a sigmoid;

4) It alleviates the vanishing gradient problem, because its gradient in the positive region is always 1.
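Item 3 is best read as an approximation. The sketch below assumes the goal is to approximate $\sigma$ on a bounded interval. It places one ReLU at each of several knots $b_i$. The coefficients are chosen so that $f(x) = c + \sum_i a_i \, \mathrm{ReLU}(x - b_i)$ interpolates the sigmoid linearly between knots and stays flat outside them. The knot positions and the helper `relu_sum_approximation` are illustrative assumptions.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    return max(0.0, x)


def relu_sum_approximation(knots: list[float]):
    """Return f(x) = c + sum_i a_i * relu(x - b_i) with one ReLU per knot b_i.

    f linearly interpolates sigmoid between consecutive knots and is flat
    outside them. The knot positions are an illustrative choice.
    """
    values = [sigmoid(b) for b in knots]
    slopes = [(values[i + 1] - values[i]) / (knots[i + 1] - knots[i])
              for i in range(len(knots) - 1)]
    # Each ReLU switches on at its knot; its coefficient is the change in slope
    # there. The last coefficient cancels the final slope so f levels off.
    coeffs = ([slopes[0]]
              + [slopes[i] - slopes[i - 1] for i in range(1, len(slopes))]
              + [-slopes[-1]])
    offset = values[0]

    def f(x: float) -> float:
        return offset + sum(a * relu(x - b) for a, b in zip(coeffs, knots))

    return f


def max_error(f, xs):
    return max(abs(f(x) - sigmoid(x)) for x in xs)


xs = [i / 100.0 for i in range(-800, 801)]  # evaluation grid on [-8, 8]
approx_coarse = relu_sum_approximation([float(b) for b in range(-6, 7, 2)])  # 7 ReLUs
approx_fine = relu_sum_approximation([float(b) for b in range(-6, 7)])       # 13 ReLUs
err_coarse = max_error(approx_coarse, xs)
err_fine = max_error(approx_fine, xs)
print(f"max error with 7 ReLUs: {err_coarse:.4f}, with 13 ReLUs: {err_fine:.4f}")
```

Adding more ReLUs (more knots) shrinks the maximum error. A finite sum of ReLUs is still piecewise linear, however, so it only approximates the smooth sigmoid.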

边栏推荐

- Automated video production

- Self-Improvement! Daliangshan boys all award Zhibo! Thank you for your paper

- Outsourcing for three years, abandoned

- Kt148a voice chip instructions, hardware, protocols, common problems, and reference codes

- 【Hot100】22. 括号生成

- 在网上炒股开户安全吗?我是新手,还请指导

- sense of security

- Is it safe to open an account for online stock speculation? I'm a novice, please guide me

- 【Hot100】23. Merge K ascending linked lists

- AcWing 181. Turnaround game solution (search ida* search)

猜你喜欢

![[cloud native topic -50]:kubesphere cloud Governance - operation - step by step deployment of microservice based business applications - database middleware MySQL microservice deployment process](/img/e6/1dc747de045166f09ecdce1c5a34b1.jpg)

[cloud native topic -50]:kubesphere cloud Governance - operation - step by step deployment of microservice based business applications - database middleware MySQL microservice deployment process

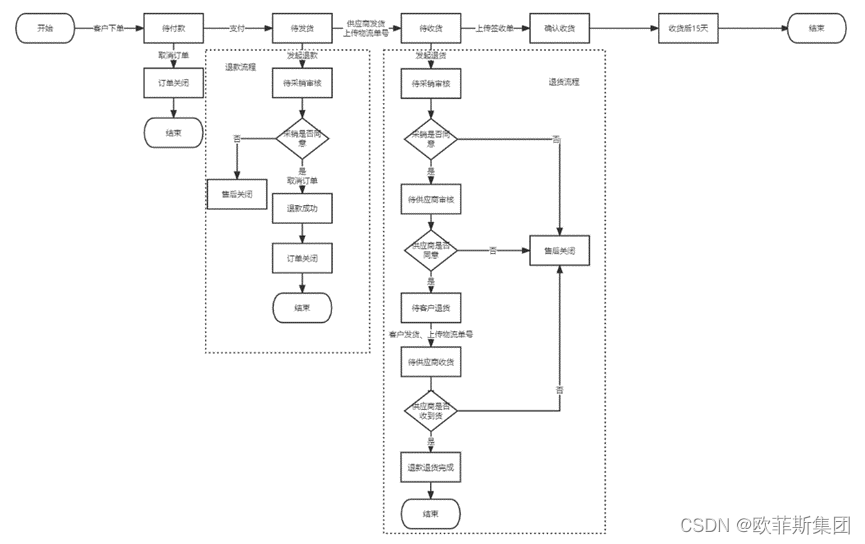

B端电商-订单逆向流程

![[QT] QPushButton creation](/img/c4/bc0c346e394484354e5b9d645916c0.png)

[QT] QPushButton creation

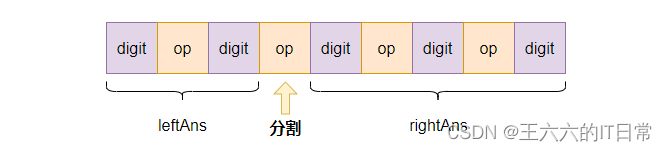

【每日一题】241. 为运算表达式设计优先级

JASMINER X4 1U deep disassembly reveals the secret behind high efficiency and power saving

【QT】QPushButton创建

「 工业缺陷检测深度学习方法」最新2022研究综述

AcWing 903. Expensive bride price solution (the shortest path - building map, Dijkstra)

HDL design peripheral tools to reduce errors and help you take off!

Sometimes only one line of statements are queried, and the execution is slow

随机推荐

Outsourcing for three years, abandoned

现在券商的优惠开户政策什么?实际上网上开户安全么?

After 65 days of closure and control of the epidemic, my home office experience sharing | community essay solicitation

What is the Bluetooth chip ble, how to select it, and what is the path of subsequent technology development

Use graalvm native image to quickly expose jar code as a native shared library

什么叫在线开户?现在网上开户安全么?

RPD product: super power squad nanny strategy

测试人员如何做不漏测?这7点就够了

CRM客户关系管理系统

[QT] QPushButton creation

API documentation tool knife4j usage details

自动化制作视频

笔记本安装TIA博途V17后出现蓝屏的解决办法

想请教一下,我在东莞,到哪里开户比较好?手机开户是安全么?

在消费互联网时代,诞生了为数不多的头部平台的话

八年测开经验,面试28K公司后,吐血整理出高频面试题和答案

Implementation of online shopping mall system based on SSM

KT148A语音芯片使用说明、硬件、以及协议、以及常见问题,和参考代码

RPD出品:Superpower Squad 保姆级攻略

Is it safe to open an account for online stock speculation? I'm a novice, please guide me