Worth bookmarking | The development and common methods of interpretable machine learning

2022-06-11 15:53:00 【Xiaobai learns vision】

Research on interpretable machine learning (IML) has boomed in recent years. Although the field itself is young, its roots in regression modeling and rule-based machine learning go back to the 1960s. A recent arXiv paper briefly recounts the history of IML, gives an overview of state-of-the-art interpretation methods, and discusses the challenges the field faces.

When machine learning models are used in products, decision-making processes, or research, interpretability is often a deciding factor.

Interpretable machine learning (IML) can be used to discover knowledge, to debug or justify a model and its predictions, and to control and improve the model.

The researchers believe that IML can, in some respects, be considered to have entered a new stage of development, although some challenges remain.

A brief history of interpretable machine learning (IML)

Interpretable machine learning has attracted a great deal of research in recent years, but learning interpretable models from data has a long history.

Linear regression has been in use since the early 19th century and has since grown into a broad family of regression analysis tools, for example generalized additive models and the elastic net.

The philosophy behind these statistical models is usually to make distributional assumptions or to restrict the complexity of the model, which in turn makes the model intrinsically interpretable.

Machine learning takes a slightly different modeling approach.

Machine learning algorithms usually follow a nonlinear, nonparametric approach. Instead of restricting the complexity of the model in advance, the complexity is controlled by one or more hyperparameters that are selected through cross-validation. This flexibility often yields models that predict well but are difficult to interpret.
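
A minimal sketch of this idea, using only the Python standard library. The toy data (a noisy sine curve), the k-nearest-neighbour regressor, and the candidate values of k are all assumptions chosen for illustration and do not come from the paper. Here k plays the role of the complexity hyperparameter (a smaller k gives a more flexible model), and 5-fold cross-validation picks the value with the lowest estimated error.

```python
import math
import random

def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def cv_error(data, k, n_folds=5):
    """Mean squared error of k-NN regression, estimated by cross-validation."""
    total = 0.0
    for fold in range(n_folds):
        # Points whose index is congruent to `fold` form the held-out set.
        test = data[fold::n_folds]
        train = [p for i, p in enumerate(data) if i % n_folds != fold]
        total += sum((knn_predict(train, x, k) - y) ** 2 for x, y in test)
    return total / len(data)

rng = random.Random(0)
data = []
for _ in range(100):  # hypothetical toy data: a noisy sine curve
    x = rng.uniform(0, 6)
    data.append((x, math.sin(x) + rng.gauss(0, 0.3)))

candidates = [1, 3, 5, 10, 20, 50]
errors = {k: cv_error(data, k) for k in candidates}
best_k = min(errors, key=errors.get)
print("CV error per k:", errors)
print("selected k:", best_k)
```

The selected model is whichever predicts best on held-out data; nothing in this procedure rewards a model for being easy to explain.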

Although machine learning algorithms usually focus on predictive performance, work on the interpretability of machine learning has existed for many years. The built-in feature importance measure of random forests is one of the important milestones of interpretable machine learning.

After a long period of development, deep learning had its breakthrough in the 2010s, when a deep neural network won the ImageNet challenge.

In the years that followed, judging by how often the two search terms "interpretable machine learning" and "explainable AI" were entered on Google, the IML field appears to have taken off around 2015.

Common methods in IML

IML methods are usually distinguished by whether they analyze model components, analyze model sensitivity, or rely on surrogate models.

Analyzing the components of interpretable models

To analyze the components of a model, the model has to be decomposed into parts that can be interpreted individually. The user does not, however, need to understand the model in its entirety.

Interpretable models are generally models whose learned structure and parameters can be given a specific interpretation. In this sense, linear regression models, decision trees, and decision rules are considered interpretable.

Linear regression models can be interpreted by analyzing their components: the model structure (a weighted sum of features) allows each weight to be interpreted as the effect of a feature on the prediction.
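
A short illustration of reading weights as feature effects. The model below is hypothetical: the intercept, the weights, and the feature names `area_m2` and `age_years` are made up for the example rather than fitted to real data.

```python
# Hypothetical fitted linear model: prediction = intercept + sum(weight * feature).
intercept = 50.0
weights = {"area_m2": 2.0, "age_years": -0.5}

def predict(features):
    """Weighted sum of the features plus the intercept."""
    return intercept + sum(weights[name] * value for name, value in features.items())

def contributions(features):
    """Each feature's additive share of the prediction."""
    return {name: weights[name] * value for name, value in features.items()}

house = {"area_m2": 80.0, "age_years": 10.0}
print(predict(house))        # 205.0
print(contributions(house))  # {'area_m2': 160.0, 'age_years': -5.0}

# Raising one feature by one unit, with the others fixed, shifts the
# prediction by exactly that feature's weight.
older = dict(house, age_years=11.0)
effect = predict(older) - predict(house)
print(effect)                # -0.5
```

Because the structure is additive, the weight of `age_years` can be read directly as "one more year changes the prediction by -0.5, all else equal".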

Analyzing the components of more complex models

Researchers also analyze the components of more complex black-box models. For example, the abstract features learned by a convolutional neural network (CNN) can be visualized by finding or generating images that activate a feature map of the CNN.

For random forests, the minimal depth distribution and the Gini importance analyze the structure of the trees in the forest and can be used to quantify feature importance.
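
The Gini importance can be sketched for a single hand-written tree as follows. The two splits and their class labels are invented for illustration; a real random forest would average this quantity over many trees, and libraries typically normalize the result, which this sketch does not do.

```python
from collections import Counter, defaultdict

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def gini_importance(splits, n_root):
    """Sum the node-weighted impurity decrease of every split, per feature."""
    importance = defaultdict(float)
    for feature, parent, left, right in splits:
        decrease = (gini(parent)
                    - len(left) / len(parent) * gini(left)
                    - len(right) / len(parent) * gini(right))
        # Weight each split by the share of training samples reaching its node.
        importance[feature] += len(parent) / n_root * decrease
    return dict(importance)

# Hypothetical tree: (feature used, labels at the node, left child, right child).
splits = [
    ("x1", [0, 0, 0, 0, 1, 1, 1, 1], [0, 0, 0, 1], [0, 1, 1, 1]),
    ("x2", [0, 0, 0, 1], [0, 0, 0], [1]),
]
importance = gini_importance(splits, n_root=8)
print(importance)  # x1: 0.125, x2: 0.1875
```

The measure depends entirely on how the trees were grown, which is why it applies to tree ensembles and not to arbitrary models.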

Model component analysis is a useful tool, but it has the disadvantage of being tied to a specific model. It also does not combine well with the common approach to model selection, in which many different ML models are searched, usually via cross-validation.

Challenges in IML development

Statistical uncertainty

Many IML methods, such as permutation feature importance, provide an explanation without quantifying the uncertainty of that explanation.

Both the model and its explanations are computed from data and are therefore subject to uncertainty. Current research is trying to quantify the uncertainty of explanations, for example for feature importance and layer-wise relevance propagation.
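
One simple way to attach an uncertainty estimate to permutation feature importance is to repeat the permutation several times and report the spread. The sketch below assumes a hypothetical, already-fitted model that uses only the first feature, along with synthetic data; it captures only the variability due to the random permutations, not the variability due to model fitting or data sampling.

```python
import random
import statistics

def model(row):
    """Hypothetical fitted model: uses x1 only and ignores x2."""
    return 3.0 * row[0]

def mse(X, y):
    return sum((model(r) - t) ** 2 for r, t in zip(X, y)) / len(y)

def permutation_importance(X, y, col, n_repeats, rng):
    """Mean and standard deviation of the error increase when `col` is shuffled."""
    baseline = mse(X, y)
    increases = []
    for _ in range(n_repeats):
        shuffled = [r[col] for r in X]
        rng.shuffle(shuffled)
        X_perm = [r[:col] + [v] + r[col + 1:] for r, v in zip(X, shuffled)]
        increases.append(mse(X_perm, y) - baseline)
    return statistics.mean(increases), statistics.stdev(increases)

rng = random.Random(0)
X = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
y = [3.0 * r[0] + rng.gauss(0, 0.5) for r in X]

mean_x1, std_x1 = permutation_importance(X, y, 0, n_repeats=20, rng=rng)
mean_x2, std_x2 = permutation_importance(X, y, 1, n_repeats=20, rng=rng)
print(f"x1: {mean_x1:.2f} +/- {std_x1:.2f}")
print(f"x2: {mean_x2:.2f} +/- {std_x2:.2f}")
```

Reporting only the mean for x1 would hide the fact that a single permutation can give a noticeably different value.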

Causal interpretation

Ideally, a model should reflect the true causal structure of the underlying phenomenon, so that it supports causal interpretation. When IML is used in science, causal interpretation is usually the goal of modeling.

However, most statistical learning procedures reflect only the correlation structure between features and analyze the surface of the data-generating process, not its true inherent structure. A causal structure would also make models more robust against attacks and more useful as a basis for decision-making.

Unfortunately, predictive performance and causality can be conflicting goals.

For example, today's weather directly causes tomorrow's weather, but we may only have access to the feature "slippery ground". Using "slippery ground" in a prediction model is useful for forecasting tomorrow's weather because it carries information about today's weather. But because today's weather itself is missing from the ML model, the model does not permit a causal interpretation.
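
The weather example can be simulated in a few lines. All probabilities below are assumptions picked for illustration. In the observational data, slippery ground is a good predictor of rain tomorrow; once the ground is made slippery by hand (an intervention), the feature carries no information, because it never caused the weather in the first place.

```python
import random

def simulate(n, rng, force_slippery=False):
    """Return (slippery_ground, rain_tomorrow) pairs; rain_today stays hidden."""
    rows = []
    for _ in range(n):
        rain_today = rng.random() < 0.5
        # Slippery ground agrees with today's rain 90% of the time.
        slippery = rain_today if rng.random() < 0.9 else not rain_today
        if force_slippery:
            slippery = True  # intervention: wet the ground regardless of weather
        # Tomorrow's weather repeats today's weather 80% of the time.
        rain_tomorrow = rain_today if rng.random() < 0.8 else not rain_today
        rows.append((slippery, rain_tomorrow))
    return rows

def rain_rate(rows, slippery):
    """Share of rainy tomorrows among rows with the given ground state."""
    outcomes = [tomorrow for s, tomorrow in rows if s == slippery]
    return sum(outcomes) / len(outcomes)

rng = random.Random(0)
observed = simulate(10_000, rng)
intervened = simulate(10_000, rng, force_slippery=True)

p_slippery = rain_rate(observed, True)
p_dry = rain_rate(observed, False)
p_forced = rain_rate(intervened, True)
print(f"observed:   P(rain | slippery) = {p_slippery:.2f}, P(rain | dry) = {p_dry:.2f}")
print(f"intervened: P(rain | ground made slippery) = {p_forced:.2f}")
```

A model trained on the observed data would predict well, yet reading its dependence on "slippery ground" as a cause of rain would be wrong.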

Feature dependence

Dependence between features introduces problems of attribution and extrapolation. For example, when features are correlated and share information, their importance and effects become difficult to separate.

Correlated features in random forests are preferred and attributed a higher importance. Many methods based on sensitivity analysis permute features. When the permuted feature depends on another feature, this association is broken and the resulting data points extrapolate to regions outside the distribution.

The ML model was never trained on such combinations and is unlikely to encounter similar data points in an application. Extrapolation can therefore lead to misleading interpretations.
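
The extrapolation problem can be made visible with two strongly dependent synthetic features. The data-generating choices below (a uniform x1 and an x2 that tracks x1 with small Gaussian noise) are assumptions for illustration. After permuting x2, many (x1, x2) pairs are further apart than any pair seen in the original data.

```python
import random

rng = random.Random(0)
x1 = [rng.uniform(0, 1) for _ in range(500)]
x2 = [a + rng.gauss(0, 0.05) for a in x1]  # x2 closely follows x1

# Permuting x2 breaks its association with x1.
x2_permuted = x2[:]
rng.shuffle(x2_permuted)

def gaps(a, b):
    """Absolute difference between paired values."""
    return [abs(u - v) for u, v in zip(a, b)]

max_observed_gap = max(gaps(x1, x2))
permuted_gaps = gaps(x1, x2_permuted)
# Share of permuted points that lie outside the range of observed combinations.
outside = sum(g > max_observed_gap for g in permuted_gaps) / len(permuted_gaps)

print(f"largest |x1 - x2| in the original data: {max_observed_gap:.2f}")
print(f"largest |x1 - x2| after permutation:    {max(permuted_gaps):.2f}")
print(f"share of permuted points outside the observed range: {outside:.0%}")
```

Any importance score computed from the permuted data is therefore based largely on predictions for feature combinations the model has never seen.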

Explaining predictions to individuals with different knowledge and backgrounds, and meeting the need for interpretability at the institutional or societal level, may be future goals of IML.

These goals span a wider range of fields, such as human-computer interaction, psychology, and sociology. To meet future challenges, the authors believe that the field of interpretable machine learning must extend horizontally into other fields and vertically into statistics and computer science.

Reference link:

https://arxiv.org/abs/2010.09337

The good news !

Xiaobai learns visual knowledge about the planet

Open to the outside world

download 1:OpenCV-Contrib Chinese version of extension module

stay 「 Xiaobai studies vision 」 Official account back office reply : Extension module Chinese course , You can download the first copy of the whole network OpenCV Extension module tutorial Chinese version , Cover expansion module installation 、SFM Algorithm 、 Stereo vision 、 Target tracking 、 Biological vision 、 Super resolution processing and other more than 20 chapters .

download 2:Python Visual combat project 52 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :Python Visual combat project , You can download, including image segmentation 、 Mask detection 、 Lane line detection 、 Vehicle count 、 Add Eyeliner 、 License plate recognition 、 Character recognition 、 Emotional tests 、 Text content extraction 、 Face recognition, etc 31 A visual combat project , Help fast school computer vision .

download 3:OpenCV Actual project 20 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :OpenCV Actual project 20 speak , You can download the 20 Based on OpenCV Realization 20 A real project , Realization OpenCV Learn advanced .

Communication group

Welcome to join the official account reader group to communicate with your colleagues , There are SLAM、 3 d visual 、 sensor 、 Autopilot 、 Computational photography 、 testing 、 Division 、 distinguish 、 Medical imaging 、GAN、 Wechat groups such as algorithm competition ( It will be subdivided gradually in the future ), Please scan the following micro signal clustering , remarks :” nickname + School / company + Research direction “, for example :” Zhang San + Shanghai Jiaotong University + Vision SLAM“. Please note... According to the format , Otherwise, it will not pass . After successful addition, they will be invited to relevant wechat groups according to the research direction . Please do not send ads in the group , Or you'll be invited out , Thanks for your understanding ~边栏推荐

- Connect to the database using GSQL

- [Yugong series] June 2022 Net architecture class 077 distributed middleware schedulemaster loading assembly timing task

- From 0 to 1, master the mainstream technology of large factories steadily. Isn't it necessary to increase salary after one year?

- Using cloud DB to build app quick start -server

- 鼻孔插灯,智商上升,风靡硅谷,3万就成

- 码农必备SQL调优(上)

- 泰雷兹云安全报告显示,云端数据泄露和复杂程度呈上升趋势

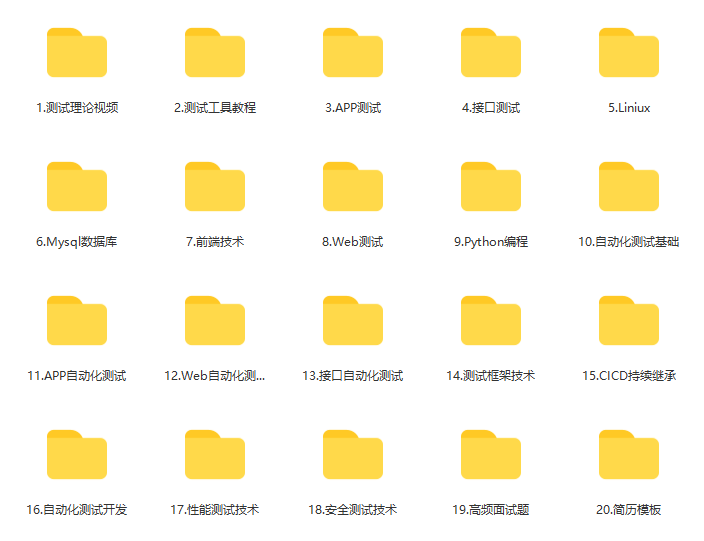

- 零基础自学软件测试,我花7天时间整理了一套学习路线,希望能帮助到大家..

- Overview and operation of database dense equivalent query

- 2022年软件测试的前景如何?需不需要懂代码?

猜你喜欢

随机推荐

GO语言-值类型和引用类型

使用Cloud DB构建APP 快速入门-快应用篇

Kaixia takes the lead in launching a new generation of UFS embedded flash memory devices that support Mipi m-phy v5.0

Go Language - value type and Reference Type

openGauss 多线程架构启动过程详解

[azure application service] nodejs express + msal realizes the authentication experiment of API Application token authentication (AAD oauth2 idtoken) -- passport authenticate('oauth-bearer', {session:

从屡遭拒稿到90后助理教授,罗格斯大学王灏:好奇心驱使我不断探索

Application of AI in index recommendation

CLP information - financial standardization "14th five year plan" highlights data security

Daily blog - wechat service permission 12 matters

Easy to use GS_ Dump and GS_ Dumpall command export data

Using cloud DB to build apps quick start - quick games

DB4AI: 数据库驱动AI

Overview and example analysis of opengauss database performance tuning

一文教会你数据库系统调优

Nat Common | le Modèle linguistique peut apprendre des distributions moléculaires complexes

Export configuration to FTP or TFTP server

Frontier technology exploration deepsql: in Library AI algorithm

验证码是自动化的天敌?阿里研究出了解决方法

[Yugong series] June 2022 Net architecture class 076- execution principle of distributed middleware schedulemaster