
Hyperparameter Optimization - Excerpt

2022-07-31 06:14:00 【Young_win】

Introduction to Hyperparameter Optimization

When building a deep learning model, you must make many decisions: How many layers should you stack? How many units or filters should each layer contain? Should you use relu as the activation, or some other function? Should you use BatchNormalization after a given layer? How much dropout should you use? These architecture-level parameters are called hyperparameters, to distinguish them from the model's parameters (its weights), which are trained via backpropagation.
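
To make the distinction concrete, here is a minimal Python sketch under stated assumptions: the `Hyperparameters` dataclass and the `count_dense_parameters` helper are hypothetical names, not part of any library. The hyperparameters are fixed before training; together they determine how many trainable weights (model parameters) a plain stack of Dense layers has, and it is those weights that backpropagation then adjusts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hyperparameters:
    """Architecture-level choices fixed before training (hypothetical example)."""
    num_layers: int = 2          # how many hidden Dense layers to stack
    units_per_layer: int = 64    # units in each hidden layer
    activation: str = "relu"     # activation function name
    use_batchnorm: bool = False  # whether to add BatchNormalization (not counted below)
    dropout_rate: float = 0.5    # dropout ratio (dropout has no trainable weights)


def count_dense_parameters(hp: Hyperparameters, input_dim: int, output_dim: int) -> int:
    """Count the weights and biases of a plain stack of Dense layers.

    These are the model parameters that backpropagation updates; how many
    there are is decided by the hyperparameters. BatchNormalization
    parameters are ignored to keep the count simple.
    """
    sizes = [input_dim] + [hp.units_per_layer] * hp.num_layers + [output_dim]
    # A Dense layer mapping n_in -> n_out has n_in * n_out weights plus n_out biases.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))
```

With the defaults (two hidden layers of 64 units), a 784-dimensional input and 10 outputs, `count_dense_parameters(Hyperparameters(), 784, 10)` returns 55050. Changing a single hyperparameter, such as `num_layers=1`, changes how many parameters training has to learn (50890 in that case).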

Hyperparameters are generally tuned by setting up a principled procedure that explores the space of possible decisions systematically and automatically: you search the architecture space and empirically find the configurations that perform best. The hyperparameter optimization process looks like this:

1.) Select a set of hyperparameters (automatic selection);

2.) Build the corresponding model;

3.) Fit the model on the training data and measure its final performance on the validation data;

4.) Choose the next set of hyperparameters to try (automatic selection);

5.) Repeat the above process;

6.) Finally, measure the model's performance on the test data.
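
The steps above can be sketched as a loop. This is a hedged illustration rather than a real training pipeline: `build_train_and_validate` is a hypothetical stand-in that returns a synthetic score instead of building and fitting a model, and the next configuration is simply drawn at random.

```python
import math
import random


def sample_hyperparameters(rng: random.Random) -> dict:
    """Steps 1 and 4: choose a configuration automatically (here: at random)."""
    return {
        "num_layers": rng.choice([1, 2, 3]),
        "units": rng.choice([32, 64, 128]),
        "dropout": rng.uniform(0.0, 0.5),
    }


def build_train_and_validate(hp: dict) -> float:
    """Steps 2 and 3 (hypothetical stand-in).

    A real version would build a model from `hp`, fit it on the training data
    and return its validation score. This toy returns a synthetic score that
    peaks (at 0.9) for 2 layers, 64 units and dropout 0.25.
    """
    penalty = abs(hp["num_layers"] - 2) * 0.05
    penalty += abs(math.log2(hp["units"]) - 6) * 0.03
    penalty += abs(hp["dropout"] - 0.25) * 0.2
    return 0.9 - penalty


def hyperparameter_search(num_trials: int, seed: int = 0):
    rng = random.Random(seed)
    history = []  # (hyperparameters, validation score) for every trial
    for _ in range(num_trials):                # step 5: repeat
        hp = sample_hyperparameters(rng)       # steps 1 / 4
        score = build_train_and_validate(hp)   # steps 2 / 3
        history.append((hp, score))
    best_hp, best_score = max(history, key=lambda item: item[1])
    # Step 6 (not simulated): retrain with best_hp and evaluate once on test data.
    return best_hp, best_score, history
```

Replacing `sample_hyperparameters` with a strategy that looks at `history` is what separates smarter search algorithms from plain random search.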

The key to this process is the algorithm that uses the history of validation performance, across the sets of hyperparameters tried so far, to choose the next set to evaluate. Possible algorithms include Bayesian optimization, genetic algorithms, and simple random search.
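
As one simple example of using the history to pick the next configuration, the sketch below implements a basic mutate-the-best evolutionary strategy: after a few random warm-up trials, it copies the best configuration found so far and re-draws one hyperparameter. It is a toy under stated assumptions, much simpler than Bayesian optimization or a full genetic algorithm (no population, no crossover); the search space `SPACE` and `toy_validation_score` are hypothetical stand-ins for a real space and a real train-and-validate run.

```python
import random

# Hypothetical discrete search space.
SPACE = {
    "num_layers": [1, 2, 3, 4],
    "units": [16, 32, 64, 128, 256],
    "activation": ["relu", "tanh", "elu"],
}


def toy_validation_score(hp: dict) -> float:
    """Hypothetical stand-in for 'train a model, score it on validation data'."""
    score = 1.0
    score -= 0.1 * abs(hp["num_layers"] - 3)
    score -= 0.05 * abs(SPACE["units"].index(hp["units"]) - 3)
    score -= 0.0 if hp["activation"] == "relu" else 0.1
    return score


def mutate(hp: dict, rng: random.Random) -> dict:
    """Copy a configuration and re-draw one randomly chosen hyperparameter."""
    child = dict(hp)
    key = rng.choice(list(SPACE))
    child[key] = rng.choice(SPACE[key])
    return child


def evolutionary_search(num_trials: int, seed: int = 0):
    rng = random.Random(seed)
    history = []  # (hyperparameters, validation score) for every trial
    for trial in range(num_trials):
        if trial < 5:
            # Warm-up: a few purely random configurations.
            hp = {name: rng.choice(options) for name, options in SPACE.items()}
        else:
            # Use the history: start from the best configuration so far and mutate it.
            best_hp, _ = max(history, key=lambda item: item[1])
            hp = mutate(best_hp, rng)
        history.append((hp, toy_validation_score(hp)))
    return max(history, key=lambda item: item[1]), history
```

Unlike pure random search, each new trial here depends on earlier validation results, which is the defining feature of the history-driven methods named above.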

Hyperparameter optimization vs. model parameter optimization

Training the model weights is relatively simple: you compute the loss function on a mini-batch of data, then use the backpropagation algorithm to move the weights in the right direction.
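
For comparison, here is ordinary parameter training in its smallest form: a minimal pure-Python sketch of mini-batch gradient descent for a one-variable linear model, with the gradient of the mean squared error written out by hand (for a single linear layer, backpropagation reduces to exactly this computation). The function name and default values are illustrative choices.

```python
import random


def train_linear_sgd(xs, ys, lr=0.1, batch_size=8, epochs=200, seed=0):
    """Fit y ~ w * x + b with mini-batch gradient descent on mean squared error.

    w and b are the model parameters; lr, batch_size and epochs are
    hyperparameters chosen before training starts.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the batch loss mean((w*x + b - y)^2) with respect to w and b.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # Move the weights a small step against the gradient.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b
```

On noise-free data generated from y = 3x + 1 with x in [-1, 1], the returned `w` and `b` converge to approximately 3 and 1. Note that `lr`, `batch_size` and `epochs` are themselves hyperparameters: gradient descent tunes `w` and `b`, but nothing in this loop tunes those three.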

Hyperparameter optimization is harder, for two reasons: (1.) Computing the feedback signal (does this set of hyperparameters lead to a high-performing model on this task?) can be extremely expensive, because it requires creating a new model and training it from scratch. (2.) The hyperparameter space usually consists of discrete decisions, so it is neither continuous nor differentiable. Therefore you generally cannot do gradient descent in hyperparameter space. Instead, you have to rely on gradient-free optimization methods, which are far less efficient than gradient descent.
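
The sketch below, built on a hypothetical search space, illustrates point (2.): most choices are categorical (an activation name, a yes/no flag, a layer count), so the validation score has no gradient with respect to them, and only sampling-style, gradient-free methods apply. Even this small space has 3 × 2 × 4 × 4 = 96 distinct architectures before the continuous learning rate is considered, and evaluating each one would take a full training run.

```python
import math
import random

# Hypothetical search space mixing categorical, integer and continuous choices.
CATEGORICAL = {
    "activation": ["relu", "tanh", "elu"],
    "use_batchnorm": [True, False],
    "num_layers": [1, 2, 3, 4],
    "units": [32, 64, 128, 256],
}
LEARNING_RATE_RANGE = (1e-4, 1e-1)  # continuous, sampled on a log scale


def count_discrete_configurations() -> int:
    """Number of distinct architectures from the categorical choices alone."""
    return math.prod(len(options) for options in CATEGORICAL.values())


def sample_configuration(rng: random.Random) -> dict:
    """Draw one configuration by sampling; no gradient is needed (or defined)."""
    config = {name: rng.choice(options) for name, options in CATEGORICAL.items()}
    low, high = LEARNING_RATE_RANGE
    # Log-uniform sampling spreads trials evenly across orders of magnitude.
    config["learning_rate"] = math.exp(rng.uniform(math.log(low), math.log(high)))
    return config
```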

In practice, random search (choosing the hyperparameters to evaluate at random, and repeating the process) often turns out to be the best solution.

a.) The Python library Hyperopt is a hyperparameter optimization tool that internally uses trees of Parzen estimators to predict which sets of hyperparameters are likely to yield good results.

b.) The Hyperas library integrates Hyperopt with Keras models.

An important issue to keep in mind when doing large-scale automatic hyperparameter optimization is overfitting to the validation set. Because you compute a signal from the validation data and then update the hyperparameters based on that signal, you are effectively training the hyperparameters on the validation data, and you can quickly end up overfitting it.
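
A small simulation makes the risk concrete. Under the assumed setup below, every configuration has exactly the same true accuracy, and validation scores differ only by Gaussian noise (for example, from a limited validation set). Picking the best of many noisy scores produces an optimistic estimate, whereas a fresh test-set measurement of the chosen configuration does not share that selection bias. The noise model and numbers are illustrative, not measurements.

```python
import random


def simulate_validation_overfitting(num_configs=200, true_accuracy=0.80,
                                    noise=0.02, seed=0):
    """Return (best validation score, test score of the chosen configuration).

    Assumption: all configurations are equally good; validation and test
    scores are the true accuracy plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    val_scores = [true_accuracy + rng.gauss(0.0, noise) for _ in range(num_configs)]
    best_index = max(range(num_configs), key=val_scores.__getitem__)
    best_val = val_scores[best_index]
    # Independent noise for the held-out test measurement of the chosen configuration.
    test_score = true_accuracy + rng.gauss(0.0, noise)
    return best_val, test_score
```

Averaged over many seeds, the best validation score lands well above the true 0.80 (roughly 0.85 with these settings), while the test score of the selected configuration stays close to 0.80. This is why the final measurement in step 6 uses test data that played no role in the search.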

边栏推荐

猜你喜欢

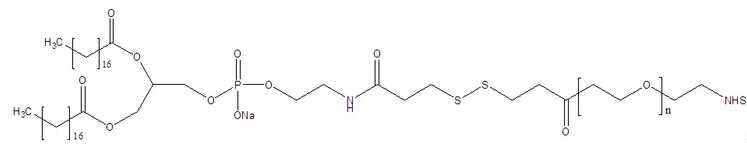

科学研究用磷脂-聚乙二醇-活性酯 DSPE-PEG-NHS CAS:1445723-73-8

jenkins +miniprogram-ci 一键上传微信小程序

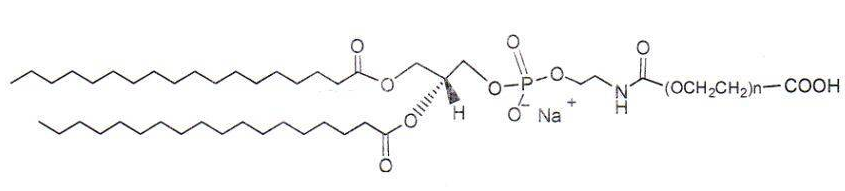

DSPE-PEG-COOH CAS:1403744-37-5 磷脂-聚乙二醇-羧基脂质PEG共轭物

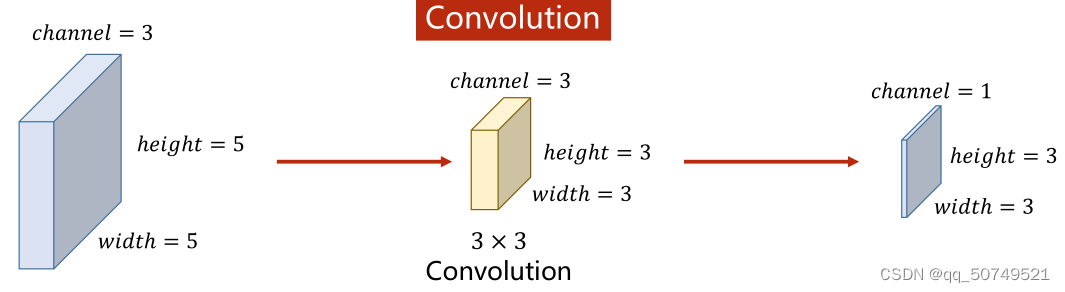

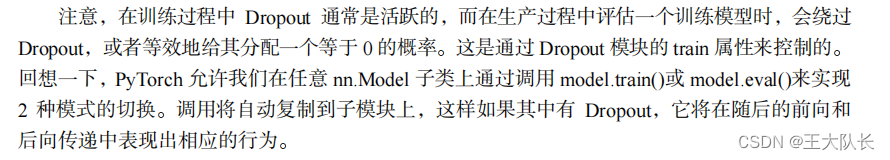

pytorch学习笔记10——卷积神经网络详解及mnist数据集多分类任务应用

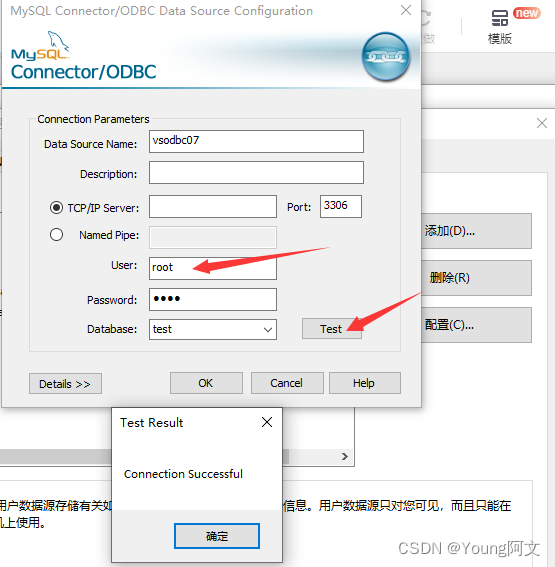

VS通过ODBC连接MYSQL(一)

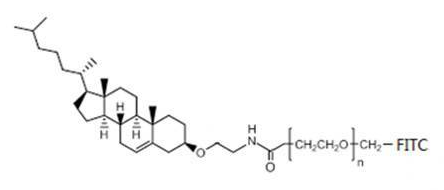

Introduction to CLS-PEG-FITC Fluorescein-PEG-CLS Cholesterol-PEG-Fluorescein

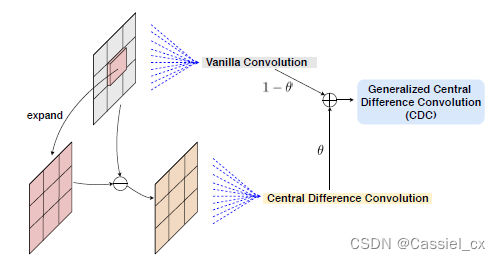

Multi-Modal Face Anti-Spoofing Based on Central Difference Networks学习笔记

人脸识别AdaFace学习笔记

深度学习知识点杂谈

wangeditor编辑器内容传至后台服务器存储

随机推荐

Android software security and reverse analysis reading notes

unicloud 云开发记录

计算图像数据集均值和方差

MYSQL transaction and lock problem handling

Markdown help documentation

Tensorflow边用边踩坑

拒绝采样小记

Embedding前沿了解

MySQL 出现 The table is full 的解决方法

Introduction to CLS-PEG-FITC Fluorescein-PEG-CLS Cholesterol-PEG-Fluorescein

This in js points to the prototype object

Pytorch学习笔记09——多分类问题

Xiaomi mobile phone SMS location service activation failed

如何修改数据库密码

Flutter mixed development module dependencies

Pytorch学习笔记13——Basic_RNN

Several forms of Attribute Changer

Cholesterol-PEG-Amine CLS-PEG-NH2 胆固醇-聚乙二醇-氨基科研用

sql add default constraint

2022 SQL big factory high-frequency practical interview questions (detailed analysis)