当前位置:网站首页>Popular understanding of random forest

Popular understanding of random forest

2022-07-03 15:20:00 【alw_ one hundred and twenty-three】

Since there is a decision tree , Is there an algorithm that uses multiple decision trees to form a forest ? Yes ! That is random forest . Random forest is a kind of forest called Bagging Variant of the algorithm framework . So if you want to understand random forest, you must first understand Bagging.

Bagging

What is? Bagging

Bagging yes Bootstrap Aggregating English abbreviations , Don't mistakenly think that you just contacted Bagging It's an algorithm ,Bagging It is a learning framework in integrated learning , Bagging It is a parallel integrated learning method . The famous random forest algorithm is in Bagging Based on the modified algorithm .

** Bagging The core idea of the method is that three cobblers top Zhuge Liang **. If you use Bagging Solve the problem of classification , Is to integrate the results of multiple classifiers to vote , Choose the result with the highest number of votes as the final result . If you use Bagging Solve the problem of return , The results of multiple regressors are added up and averaged , Take the average as the final result .

that Bagging The method is so effective , for instance . Werewolf kill I believe you should have played , Before dark , The villagers have to vote on who may be a werewolf based on what happened that day and what others found .

If we regard each villager as a classifier , Then the task of each villager is to classify , hypothesis h i ( x ) h_i(x) hi(x) It means the first one i One villager thinks x Is it a werewolf ( -1 It means you're not a werewolf , 1 It means werewolf ), f ( x ) f(x) f(x) Express x Real identity ( Is it a werewolf ), ϵ \epsilon ϵ Expressed as the error rate of villagers' wrong judgment . Then there are P ( h i ( x ) ≠ f ( x ) ) = ϵ P(h_i(x)\neq f(x))=\epsilon P(hi(x)̸=f(x))=ϵ.

According to the rules of werewolf killing , The villagers need to vote to decide who the werewolf is before dark , In other words, if more than half of the villagers guessed right when voting , So this round is right . So let's say there is now T A villager , H ( x ) H(x) H(x) It means the final result after voting , Then there are H ( x ) = s i g n ( ∑ i = 1 T h i ( x ) ) H(x)=sign(\sum_{i=1}^Th_i(x)) H(x)=sign(∑i=1Thi(x)).

Now suppose that every villager has his own opinion , Everyone has his own ideas about who is a werewolf , Then their error rates are independent of each other . So according to Hoeffding inequality You know , H ( x ) H(x) H(x) The error rate is : P ( H ( x ) ≠ f ( x ) ) = ∑ k = 0 T / 2 C T k ( 1 − ϵ ) k ϵ T − k ≤ e x p ( − 1 2 T ( 1 − 2 ϵ ) 2 ) P(H(x)\neq f(x))=\sum_{k=0}^{T/2}C_T^k(1-\epsilon)^k\epsilon ^{T-k} \leq exp(-\frac{1}{2}T(1-2\epsilon)^2) P(H(x)̸=f(x))=∑k=0T/2CTk(1−ϵ)kϵT−k≤exp(−21T(1−2ϵ)2)

According to the above formula , If 5 A villager , The error rate of each villager is 0.33 , Then the error rate of voting is 0.749 ; If 20 20 20 A villager , The error rate of each villager is 0.33 , Then the error rate of voting is 0.315 ; If 50 A villager , The error rate of each villager is 0.33 , Then the error rate of voting is 0.056 ; If 100 A villager , The error rate of each villager is 0.33 , Then the error rate of voting is 0.003. It can be seen from the results , The larger the number of villagers , Then the smaller the error rate after voting . This is also Bagging One of the reasons for strong performance .

Bagging Methods how to train

Bagging The characteristic of training is random put back sampling and parallel .

Randomly put back sampling : Suppose the training data set has m Bar sample data , Every time from here m Randomly take a piece of data from the pieces of data and put it into the sampling set , Then return it to , So that the next sampling can still be sampled . Then repeat m Time , You can have m A sample set of data , The sample set is used as Bagging One of the many classifiers is used as the training data set . Suppose there is T A classifier ( Whatever classifier ), Then repeat T This random sample is put back , build T Sample sets are used as T A training data set of classifiers .

parallel : Suppose there is 10 A classifier , stay Boosting in ,1 No. 1 classifier training can be started only after it is completed 2 No. classifier training , And in the Bagging in , The classifier can be trained at the same time , When all classifier training is completed , Whole Bagging The training process is over .

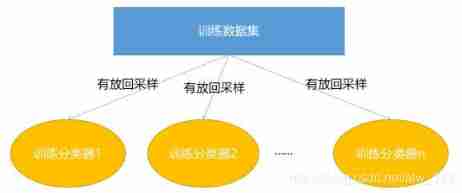

Bagging The training process is shown in the figure below :

Bagging How to predict the method

Bagging It's very simple to predict , Is to vote ! For example, there is now 5 A classifier , Yes 3 A classifier thinks that the current sample belongs to A class ,1 A classifier is considered to belong to B class ,1 A classifier is considered to belong to C class , that Bagging The result will be A class ( because A Class has the highest number of votes ).

Bagging The prediction process is shown in the figure below :

Random forests

Random forest is Bagging An extended variant of , The training process of random forest is relative to Bagging The changes in the training process are :

- Basic learner :Bagging The base learner of can be any learner , The random forest is based on decision tree .

- Random attribute selection : Suppose the original training data set has 10 Features , From here 10 Random selection of features k Features form a subset of training data , Then this subset is thrown to the decision tree as a training set for training . among k The value of is generally log2 ( Number of features ).

Such changes usually make the random forest more generalized , Because the training data set of each decision tree is random , Moreover, the features in the training data set are also randomly extracted . If the difference of each decision tree model is large , Then it is easy to solve the problem that the decision tree is easy to over fit .

边栏推荐

- Puppet automatic operation and maintenance troubleshooting cases

- Popular understanding of linear regression (I)

- 基础SQL教程

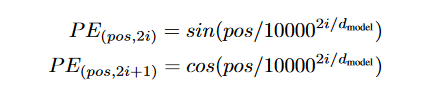

- 【Transform】【NLP】首次提出Transformer,Google Brain团队2017年论文《Attention is all you need》

- Win10 enterprise 2016 long term service activation tutorial

- Can‘t connect to MySQL server on ‘localhost‘

- Stress test WebService with JMeter

- Apache ant extension tutorial

- Introduction, use and principle of synchronized

- 详解指针进阶1

猜你喜欢

视觉上位系统设计开发(halcon-winform)

【Transform】【NLP】首次提出Transformer,Google Brain团队2017年论文《Attention is all you need》

What is machine reading comprehension? What are the applications? Finally someone made it clear

What is one hot encoding? In pytoch, there are two ways to turn label into one hot coding

什么是embedding(把物体编码为一个低维稠密向量),pytorch中nn.Embedding原理及使用

Summary of concurrent full knowledge points

High quality workplace human beings must use software to recommend, and you certainly don't know the last one

Jvm-06-execution engine

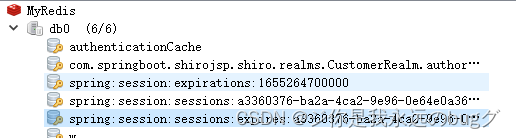

秒杀系统2-Redis解决分布式Session问题

【pytorch学习笔记】Datasets and Dataloaders

随机推荐

Redis lock Optimization Practice issued by gaobingfa

What is label encoding? How to distinguish and use one hot encoding and label encoding?

socket. IO build distributed web push server

Summary of JVM knowledge points

视觉上位系统设计开发(halcon-winform)-4.通信管理

详解指针进阶1

Tensorflow realizes verification code recognition (I)

Jvm-02-class loading subsystem

求字符串函数和长度不受限制的字符串函数的详解

【pytorch学习笔记】Datasets and Dataloaders

Neon global and Chinese markets 2022-2028: Research Report on technology, participants, trends, market size and share

Introduction to redis master-slave, sentinel and cluster mode

Visual upper system design and development (Halcon WinForm) -5 camera

北京共有产权房出租新规实施的租赁案例

Global and Chinese markets for infrared solutions (for industrial, civil, national defense and security applications) 2022-2028: Research Report on technology, participants, trends, market size and sh

Global and Chinese market of marketing automation 2022-2028: Research Report on technology, participants, trends, market size and share

Dataframe returns the whole row according to the value

开启 Chrome 和 Edge 浏览器多线程下载

Concurrency-01-create thread, sleep, yield, wait, join, interrupt, thread state, synchronized, park, reentrantlock

Using Tengine to solve the session problem of load balancing