
[Pytorch] F.softmax() method description

2022-07-31 13:59:00 【rain or shine】

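1、Function syntax and purpose:

F.softmax(x, dim) purpose:

Applies softmax normalization along the dimension given by dim, so that the values along that dimension are non-negative and sum to 1.

x is the input tensor; dim is the dimension along which the normalization is performed.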
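To make the role of dim concrete, here is a minimal sketch that recomputes softmax by hand and compares it with F.softmax. The helper name manual_softmax is hypothetical (it is not part of PyTorch), and subtracting the maximum is a common numerical-stability step that is assumed here rather than taken from the article.

```python
import torch
import torch.nn.functional as F


def manual_softmax(x: torch.Tensor, dim: int) -> torch.Tensor:
    """Hypothetical helper: softmax(x)_i = exp(x_i) / sum_j exp(x_j) along `dim`."""
    # Subtracting the max along `dim` avoids overflow and does not change the result.
    shifted = x - x.max(dim=dim, keepdim=True).values
    exp = torch.exp(shifted)
    # keepdim=True keeps the reduced axis so the division broadcasts correctly.
    return exp / exp.sum(dim=dim, keepdim=True)


x = torch.tensor([[1.0, 2.0, 3.0],
                  [1.0, 1.0, 1.0]])

matches_dim0 = torch.allclose(manual_softmax(x, dim=0), F.softmax(x, dim=0))
matches_dim1 = torch.allclose(manual_softmax(x, dim=1), F.softmax(x, dim=1))
print(matches_dim0, matches_dim1)
```

Only the axis over which the exponentials are summed changes with dim; the shape of the output is always the same as the shape of the input.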

2、F.softmax() example with a 2D tensor:

2.1、Example code:

import torch

import torch.nn.functional as F

input = torch.randn(3, 4)

print("input=",input)

b = F.softmax(input, dim=0) # softmax down each column: every column sums to 1 (normalizes along dim 0)

print("b=",b)

c = F.softmax(input, dim=1) # softmax across each row: every row sums to 1 (normalizes along dim 1)

print("c=",c)

2.2、Output:

input= tensor([[-0.4918, 2.5391, -0.3338, -0.4989],

[-0.2537, 0.1675, 1.1313, 0.0916],

[ 0.9846, -1.4170, -0.7165, 1.8283]])

b= tensor([[0.1505, 0.8989, 0.1664, 0.0766],

[0.1909, 0.0839, 0.7201, 0.1383],

[0.6586, 0.0172, 0.1135, 0.7851]])

c= tensor([[0.0419, 0.8675, 0.0490, 0.0416],

[0.1261, 0.1921, 0.5037, 0.1781],

[0.2779, 0.0252, 0.0507, 0.6462]])
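The column and row sums claimed in the comments can be checked directly. The sketch below reuses the input values printed above (rounded to four decimals, so results agree with the printed b and c only to about that precision); the variable names inp, col_sums and row_sums are chosen here for illustration.

```python
import torch
import torch.nn.functional as F

# Input values copied from the printed output above (rounded to 4 decimals).
inp = torch.tensor([[-0.4918, 2.5391, -0.3338, -0.4989],
                    [-0.2537, 0.1675, 1.1313, 0.0916],
                    [0.9846, -1.4170, -0.7165, 1.8283]])

b = F.softmax(inp, dim=0)  # normalize down each column
c = F.softmax(inp, dim=1)  # normalize across each row

col_sums = b.sum(dim=0)  # one sum per column, shape (4,)
row_sums = c.sum(dim=1)  # one sum per row, shape (3,)

print("column sums of b:", col_sums)
print("row sums of c:", row_sums)
```

Both sums should come out as ones up to floating-point rounding, while summing b along dim 1 or c along dim 0 generally does not give 1.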

3、F.softmax() example with a 3D tensor:

3.1、Example code:

import torch

import torch.nn.functional as F

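aa = torch.rand(3,4,5) # random 3D tensor with shape (3, 4, 5)

print("aa=",aa)

bb = F.softmax(aa,dim=0) # normalize along dim 0: bb[:, j, k] sums to 1

print("bb=",bb)

cc = F.softmax(aa,dim=1) # normalize along dim 1: cc[i, :, k] sums to 1

print("cc=",cc)

dd = F.softmax(aa,dim=2) # normalize along dim 2: dd[i, j, :] sums to 1

print("dd=",dd)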

3.2、Output:

aa= tensor([[[0.0532, 0.9631, 0.9244, 0.9132, 0.7016],

[0.5757, 0.2128, 0.6454, 0.6925, 0.1175],

[0.0750, 0.5791, 0.6225, 0.7012, 0.5312],

[0.9914, 0.1633, 0.7572, 0.9257, 0.3213]],

[[0.6944, 0.5708, 0.5255, 0.3559, 0.6915],

[0.7808, 0.3902, 0.6919, 0.7571, 0.5835],

[0.0716, 0.9227, 0.8213, 0.3502, 0.7966],

[0.9457, 0.4547, 0.4147, 0.8405, 0.3674]],

[[0.9406, 0.8854, 0.6632, 0.5422, 0.1366],

[0.1791, 0.1090, 0.2523, 0.5594, 0.8374],

[0.7514, 0.2770, 0.8544, 0.5708, 0.2875],

[0.8299, 0.9569, 0.1342, 0.2009, 0.3595]]])

bb= tensor([[[0.1877, 0.3845, 0.4096, 0.4419, 0.3909],

[0.3448, 0.3231, 0.3673, 0.3399, 0.2152],

[0.2523, 0.3175, 0.2873, 0.3873, 0.3239],

[0.3563, 0.2198, 0.4452, 0.4162, 0.3240]],

[[0.3564, 0.2597, 0.2749, 0.2531, 0.3870],

[0.4233, 0.3858, 0.3848, 0.3626, 0.3429],

[0.2515, 0.4477, 0.3505, 0.2727, 0.4223],

[0.3404, 0.2942, 0.3161, 0.3822, 0.3393]],

[[0.4559, 0.3558, 0.3155, 0.3049, 0.2222],

[0.2319, 0.2912, 0.2479, 0.2975, 0.4420],

[0.4962, 0.2347, 0.3623, 0.3400, 0.2538],

[0.3032, 0.4860, 0.2388, 0.2016, 0.3366]]])

cc= tensor([[[0.1596, 0.3842, 0.2992, 0.2760, 0.3242],

[0.2692, 0.1814, 0.2264, 0.2213, 0.1808],

[0.1632, 0.2617, 0.2212, 0.2233, 0.2734],

[0.4080, 0.1727, 0.2531, 0.2794, 0.2216]],

[[0.2556, 0.2411, 0.2262, 0.1956, 0.2680],

[0.2787, 0.2013, 0.2672, 0.2922, 0.2405],

[0.1371, 0.3429, 0.3041, 0.1945, 0.2977],

[0.3286, 0.2147, 0.2025, 0.3176, 0.1938]],

[[0.3135, 0.3248, 0.2888, 0.2661, 0.1842],

[0.1464, 0.1494, 0.1915, 0.2708, 0.3713],

[0.2595, 0.1768, 0.3496, 0.2739, 0.2142],

[0.2807, 0.3489, 0.1701, 0.1892, 0.2302]]])

dd= tensor([[[0.0985, 0.2447, 0.2355, 0.2328, 0.1884],

[0.2210, 0.1538, 0.2370, 0.2484, 0.1398],

[0.1277, 0.2114, 0.2207, 0.2388, 0.2015],

[0.2720, 0.1188, 0.2152, 0.2547, 0.1392]],

[[0.2253, 0.1991, 0.1903, 0.1606, 0.2247],

[0.2278, 0.1542, 0.2085, 0.2225, 0.1870],

[0.1131, 0.2648, 0.2393, 0.1494, 0.2334],

[0.2732, 0.1672, 0.1606, 0.2459, 0.1532]],

[[0.2616, 0.2475, 0.1982, 0.1756, 0.1171],

[0.1562, 0.1456, 0.1680, 0.2285, 0.3017],

[0.2384, 0.1484, 0.2643, 0.1990, 0.1499],

[0.2637, 0.2994, 0.1315, 0.1406, 0.1647]]])
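With a 3D tensor it is harder to see by eye which slices sum to 1. A small sketch such as the following, using a fresh random tensor rather than the exact values printed above, sums each result along the dimension it was normalized over; the dictionary name sum_shapes is only illustrative.

```python
import torch
import torch.nn.functional as F

aa = torch.rand(3, 4, 5)

sum_shapes = {}
all_ones = {}
for dim in range(aa.dim()):
    out = F.softmax(aa, dim=dim)
    # Summing over the normalized dimension removes it and should leave all ones.
    sums = out.sum(dim=dim)
    sum_shapes[dim] = tuple(sums.shape)
    all_ones[dim] = torch.allclose(sums, torch.ones_like(sums))
    print(f"dim={dim}: sums shape {sum_shapes[dim]}, all ones: {all_ones[dim]}")

# A negative dim counts from the end, so dim=-1 is the same as dim=2 here.
same_as_last = torch.equal(F.softmax(aa, dim=-1), F.softmax(aa, dim=2))
```

For a (3, 4, 5) input, normalizing along dim 0 gives 4×5 groups of 3 values, dim 1 gives 3×5 groups of 4 values, and dim 2 gives 3×4 groups of 5 values, each group summing to 1.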

Closing note: once F.softmax() is clear, note that PyTorch also has a torch.max() method; the two are worth studying side by side. The link is as follows:

[Pytorch] torch.argmax() usage
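As a rough illustration of how the two relate (an assumption about what the comparison is meant to show, not something taken from the linked article): softmax preserves the ordering of values along dim, so the index of the largest probability equals the index of the largest input. The tensor name logits is chosen here for the example.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])

probs = F.softmax(logits, dim=1)

# torch.max with a dim argument returns both the max values and their indices.
max_probs, max_idx = torch.max(probs, dim=1)

# torch.argmax returns only the indices; softmax does not change them.
idx_from_logits = torch.argmax(logits, dim=1)

print("max probabilities:", max_probs)
print("indices from probs:", max_idx)
print("indices from logits:", idx_from_logits)
```

So F.softmax() turns scores into a distribution along dim, while torch.max() and torch.argmax() pick out the largest entry along dim.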

边栏推荐

- Usage of += in C#

- Shell project combat 1. System performance analysis

- Spark学习:为Spark Sql添加自定义优化规则

- The operator,

- [QNX Hypervisor 2.2 User Manual]9.14 safety

- 机器学习模型验证:被低估的重要一环

- Network layer key protocol - IP protocol

- C# using NumericUpDown control

- 1-hour live broadcast recruitment order: industry leaders share dry goods, and enterprise registration is open丨qubit · point of view

- 清除浮动的四种方式及其原理理解

猜你喜欢

The batch size does not have to be a power of 2!The latest conclusions of senior ML scholars

技能大赛dhcp服务训练题

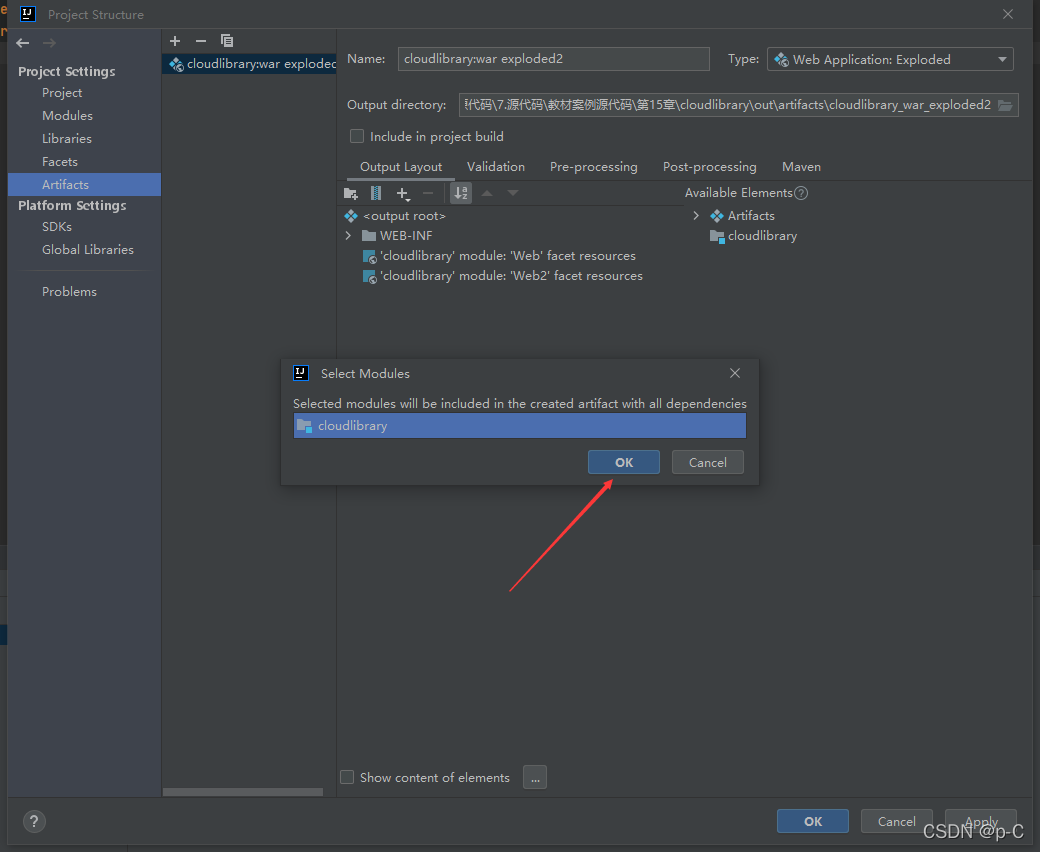

How IDEA runs web programs

Batch大小不一定是2的n次幂!ML资深学者最新结论

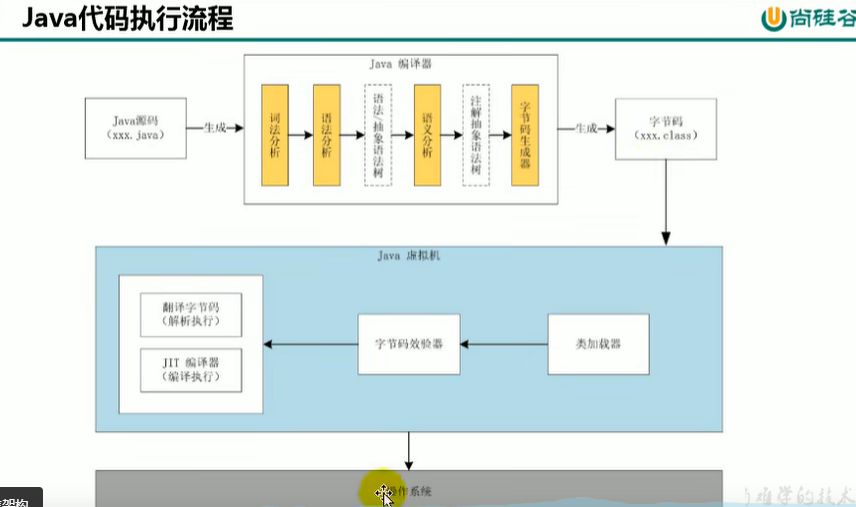

Shang Silicon Valley-JVM-Memory and Garbage Collection (P1~P203)

I summed up the bad MySQL interview questions

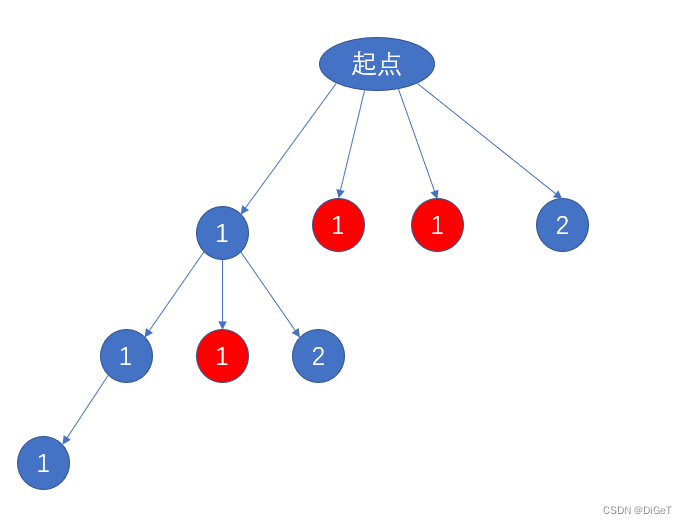

组合系列--有排列就有组合

MySQL 23道经典面试吊打面试官

【蓝桥杯选拔赛真题46】Scratch磁铁游戏 少儿编程scratch蓝桥杯选拔赛真题讲解

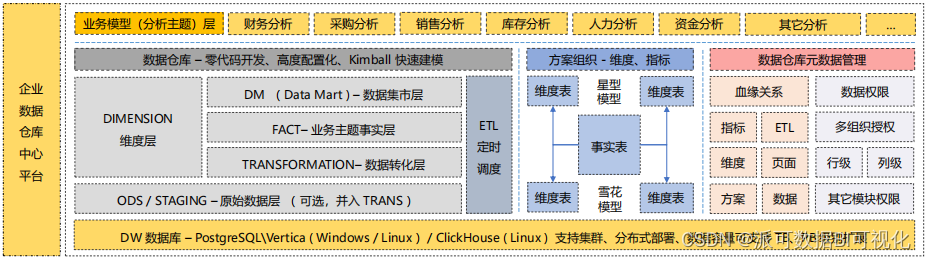

An article makes it clear!What is the difference and connection between database and data warehouse?

随机推荐

组合系列--有排列就有组合

Node version switching management using NVM

IDEA can't find the Database solution

C# using NumericUpDown control

csdn发文助手问题

Shell项目实战1.系统性能分析

Productivity Tools and Plugins

3.爬虫之Scrapy框架1安装与使用

MySQL【聚合函数】

MySQL玩到这种程度,难怪大厂抢着要!

海康摄像机取流RTSP地址规则说明

Selenium自动化测试之Selenium IDE

MySQL【子查询】

ICML2022 | 面向自监督图表示学习的全粒度自语义传播

Six Stones Programming: No matter which function you think is useless, people who can use it will not be able to leave, so at least 99%

How IDEA runs web programs

C# List Usage List Introduction

Detailed explanation of network protocols and related technologies

[Blue Bridge Cup Trial Question 46] Scratch Magnet Game Children's Programming Scratch Blue Bridge Cup Trial Question Explanation

C# using ComboBox control