
【Pytorch】An explanation of the F.softmax() method

2022-07-31 13:50:00 【风雨无阻啊】

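1. Function syntax and purpose:

What F.softmax(x, dim) does:

It applies softmax to x along the dimension given by dim. After the call, the values in every 1-D slice taken along that dimension are positive and sum to 1.

x is the input tensor; dim is the dimension along which the normalization is performed.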
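For reference, softmax along a dimension computes exp(x_i) / Σ_j exp(x_j), where the sum runs over the elements of the same slice along dim. The sketch below re-implements that formula by hand and compares it with F.softmax. The helper name `manual_softmax` is hypothetical (it is not a PyTorch API), and the comparison only checks results; it makes no claim about how PyTorch implements softmax internally. The helper subtracts the per-slice maximum before exponentiating, a standard trick that leaves the result unchanged mathematically but keeps exp() from overflowing on large inputs.

```python
import torch
import torch.nn.functional as F

def manual_softmax(x: torch.Tensor, dim: int) -> torch.Tensor:
    # Hypothetical helper: softmax(x)_i = exp(x_i) / sum_j exp(x_j) along `dim`.
    # Subtracting the max along `dim` does not change the result,
    # but it keeps exp() from overflowing to inf for large inputs.
    shifted = x - x.amax(dim=dim, keepdim=True)
    exps = torch.exp(shifted)
    return exps / exps.sum(dim=dim, keepdim=True)

torch.manual_seed(0)
x = torch.randn(3, 4)

for d in (0, 1):
    ours = manual_softmax(x, dim=d)
    ref = F.softmax(x, dim=d)
    print(d, torch.allclose(ours, ref, atol=1e-6))

# Large values: the naive formula gives inf / inf = nan, the shifted one stays finite.
big = torch.tensor([1000.0, 1001.0, 1002.0])
naive = torch.exp(big) / torch.exp(big).sum()
print(naive)                       # every entry is nan
print(manual_softmax(big, dim=0))  # finite probabilities that sum to 1
```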

2. Example of F.softmax() on a 2-D tensor:

2.1 Example code:

```python
import torch
import torch.nn.functional as F

input = torch.randn(3, 4)
print("input=", input)

b = F.softmax(input, dim=0)  # softmax down each column: every column sums to 1 (normalize along dim 0)
print("b=", b)

c = F.softmax(input, dim=1)  # softmax across each row: every row sums to 1 (normalize along dim 1)
print("c=", c)
```

2.2 Output (torch.randn draws random values, so a rerun prints different numbers):

```
input= tensor([[-0.4918,  2.5391, -0.3338, -0.4989],
        [-0.2537,  0.1675,  1.1313,  0.0916],
        [ 0.9846, -1.4170, -0.7165,  1.8283]])
b= tensor([[0.1505, 0.8989, 0.1664, 0.0766],
        [0.1909, 0.0839, 0.7201, 0.1383],
        [0.6586, 0.0172, 0.1135, 0.7851]])
c= tensor([[0.0419, 0.8675, 0.0490, 0.0416],
        [0.1261, 0.1921, 0.5037, 0.1781],
        [0.2779, 0.0252, 0.0507, 0.6462]])
```
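A quick way to confirm which dimension was normalized is to sum the result along that same dimension: with dim=0 every column of b sums to 1, and with dim=1 every row of c sums to 1. The sketch below seeds the random generator (an addition for repeatability, so its values will not match the output above) and also shows that dim=-1 refers to the last dimension, which for a 2-D tensor is the same as dim=1.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)          # added for repeatability; values differ from the run above
x = torch.randn(3, 4)

b = F.softmax(x, dim=0)       # normalize down each column
c = F.softmax(x, dim=1)       # normalize across each row

print(b.sum(dim=0))           # 4 entries, one per column, each 1 up to float rounding
print(c.sum(dim=1))           # 3 entries, one per row, each 1 up to float rounding

# dim=-1 means "the last dimension"; for a 2-D tensor that is dim=1
print(torch.allclose(F.softmax(x, dim=-1), c))
```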

3. Example of F.softmax() on a 3-D tensor:

3.1 Example code:

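```python
import torch
import torch.nn.functional as F

aa = torch.rand(3, 4, 5)  # 3 blocks, each 4 rows x 5 columns, values uniform in [0, 1)
print("aa=", aa)

bb = F.softmax(aa, dim=0)  # normalize along dim 0: across the 3 blocks at each (row, column) position
print("bb=", bb)

cc = F.softmax(aa, dim=1)  # normalize along dim 1: down each column within a block
print("cc=", cc)

dd = F.softmax(aa, dim=2)  # normalize along dim 2: across each row within a block
print("dd=", dd)
```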

3.2 Output (torch.rand draws random values, so a rerun prints different numbers):

```
aa= tensor([[[0.0532, 0.9631, 0.9244, 0.9132, 0.7016],
         [0.5757, 0.2128, 0.6454, 0.6925, 0.1175],
         [0.0750, 0.5791, 0.6225, 0.7012, 0.5312],
         [0.9914, 0.1633, 0.7572, 0.9257, 0.3213]],

        [[0.6944, 0.5708, 0.5255, 0.3559, 0.6915],
         [0.7808, 0.3902, 0.6919, 0.7571, 0.5835],
         [0.0716, 0.9227, 0.8213, 0.3502, 0.7966],
         [0.9457, 0.4547, 0.4147, 0.8405, 0.3674]],

        [[0.9406, 0.8854, 0.6632, 0.5422, 0.1366],
         [0.1791, 0.1090, 0.2523, 0.5594, 0.8374],
         [0.7514, 0.2770, 0.8544, 0.5708, 0.2875],
         [0.8299, 0.9569, 0.1342, 0.2009, 0.3595]]])
bb= tensor([[[0.1877, 0.3845, 0.4096, 0.4419, 0.3909],
         [0.3448, 0.3231, 0.3673, 0.3399, 0.2152],
         [0.2523, 0.3175, 0.2873, 0.3873, 0.3239],
         [0.3563, 0.2198, 0.4452, 0.4162, 0.3240]],

        [[0.3564, 0.2597, 0.2749, 0.2531, 0.3870],
         [0.4233, 0.3858, 0.3848, 0.3626, 0.3429],
         [0.2515, 0.4477, 0.3505, 0.2727, 0.4223],
         [0.3404, 0.2942, 0.3161, 0.3822, 0.3393]],

        [[0.4559, 0.3558, 0.3155, 0.3049, 0.2222],
         [0.2319, 0.2912, 0.2479, 0.2975, 0.4420],
         [0.4962, 0.2347, 0.3623, 0.3400, 0.2538],
         [0.3032, 0.4860, 0.2388, 0.2016, 0.3366]]])
cc= tensor([[[0.1596, 0.3842, 0.2992, 0.2760, 0.3242],
         [0.2692, 0.1814, 0.2264, 0.2213, 0.1808],
         [0.1632, 0.2617, 0.2212, 0.2233, 0.2734],
         [0.4080, 0.1727, 0.2531, 0.2794, 0.2216]],

        [[0.2556, 0.2411, 0.2262, 0.1956, 0.2680],
         [0.2787, 0.2013, 0.2672, 0.2922, 0.2405],
         [0.1371, 0.3429, 0.3041, 0.1945, 0.2977],
         [0.3286, 0.2147, 0.2025, 0.3176, 0.1938]],

        [[0.3135, 0.3248, 0.2888, 0.2661, 0.1842],
         [0.1464, 0.1494, 0.1915, 0.2708, 0.3713],
         [0.2595, 0.1768, 0.3496, 0.2739, 0.2142],
         [0.2807, 0.3489, 0.1701, 0.1892, 0.2302]]])
dd= tensor([[[0.0985, 0.2447, 0.2355, 0.2328, 0.1884],
         [0.2210, 0.1538, 0.2370, 0.2484, 0.1398],
         [0.1277, 0.2114, 0.2207, 0.2388, 0.2015],
         [0.2720, 0.1188, 0.2152, 0.2547, 0.1392]],

        [[0.2253, 0.1991, 0.1903, 0.1606, 0.2247],
         [0.2278, 0.1542, 0.2085, 0.2225, 0.1870],
         [0.1131, 0.2648, 0.2393, 0.1494, 0.2334],
         [0.2732, 0.1672, 0.1606, 0.2459, 0.1532]],

        [[0.2616, 0.2475, 0.1982, 0.1756, 0.1171],
         [0.1562, 0.1456, 0.1680, 0.2285, 0.3017],
         [0.2384, 0.1484, 0.2643, 0.1990, 0.1499],
         [0.2637, 0.2994, 0.1315, 0.1406, 0.1647]]])
```
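For a tensor of shape (3, 4, 5), softmax along dim k makes every 1-D slice obtained by fixing the other two indices sum to 1, and the output keeps the input's shape. In the printed output this can be checked by hand: for bb (dim=0), the first entries of the three blocks give 0.1877 + 0.3564 + 0.4559 = 1.0000; for cc (dim=1), column 0 of the first block gives 0.1596 + 0.2692 + 0.1632 + 0.4080 = 1.0000; for dd (dim=2), row 0 of the first block gives 0.0985 + 0.2447 + 0.2355 + 0.2328 + 0.1884 = 0.9999, i.e. 1 up to rounding of the displayed digits. The sketch below runs the same check programmatically on a seeded tensor (the seed is an addition, so its values differ from the run above). Summing over dim d removes that dimension, so the sums have shapes (4, 5), (3, 5) and (3, 4) for d = 0, 1, 2.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)           # added for repeatability; values differ from the run above
aa = torch.rand(3, 4, 5)

for d in range(aa.dim()):
    out = F.softmax(aa, dim=d)
    sums = out.sum(dim=d)      # collapse the normalized dimension
    # `sums` has aa.shape with dimension d removed, and every entry is ~1
    print(d, tuple(sums.shape), torch.allclose(sums, torch.ones_like(sums)))

# Concrete slices: fix the other two indices and the remaining 1-D vector sums to 1
bb = F.softmax(aa, dim=0)
cc = F.softmax(aa, dim=1)
dd = F.softmax(aa, dim=2)
print(bb[:, 0, 0].sum())       # 3 values: position (0, 0) in each of the 3 blocks
print(cc[0, :, 0].sum())       # 4 values: column 0 of block 0
print(dd[0, 0, :].sum())       # 5 values: row 0 of block 0
```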

Conclusion: once F.softmax() is clear, it is worth studying it side by side with torch.argmax() in PyTorch. The link is below:

【Pytorch】Usage of torch.argmax()
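The two functions are often used together, for example to turn a row of scores into probabilities and then pick the most likely index. Because softmax is strictly increasing within each slice, taking argmax along the same dim gives the same indices whether it is applied to the raw scores or to the softmax output. The sketch below illustrates this on a seeded random tensor; treating rows as samples and columns as classes is only an illustrative assumption. It also shows torch.max(x, dim), which returns both the maximum values and their indices, whereas torch.argmax returns only the indices.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(3, 4)                     # illustrative: 3 samples, 4 classes

probs = F.softmax(logits, dim=1)               # each row becomes probabilities summing to 1
pred_from_probs = torch.argmax(probs, dim=1)   # index of the largest probability per row
pred_from_logits = torch.argmax(logits, dim=1) # same indices: softmax preserves order within a row

values, indices = torch.max(probs, dim=1)      # max values and their indices in one call

print(probs)
print(pred_from_probs)
print(torch.equal(pred_from_probs, pred_from_logits))
print(values, indices)
```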

边栏推荐

猜你喜欢

MySQL has played to such a degree, no wonder the big manufacturers are rushing to ask for it!

C# control ListView usage

技能大赛训练题:域用户和组织单元的创建

C#使用NumericUpDown控件

232层3D闪存芯片来了:单片容量2TB,传输速度提高50%

Edge Cloud Explained in Simple Depth | 4. Lifecycle Management

技能大赛训练题:MS15_034漏洞验证与安全加固

The use of C# control CheckBox

多智能体协同控制研究中光学动作捕捉与UWB定位技术比较

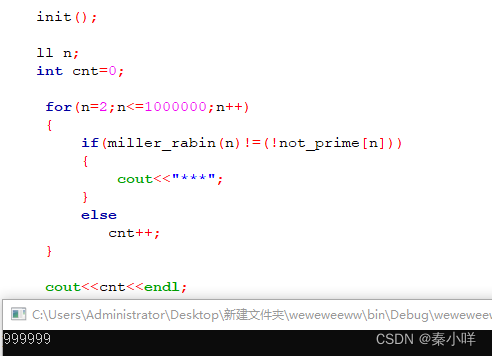

Miller_Rabin 米勒拉宾概率筛【模板】

随机推荐

C# using NumericUpDown control

go使用makefile脚本编译应用

使用CompletableFuture进行异步处理业务

百度网盘安装在c盘显示系统权限限制的解决方法

为什么 wireguard-go 高尚而 boringtun 孬种

[Niu Ke brush questions - SQL big factory interview questions] NO3. E-commerce scene (some east mall)

PartImageNet物体部件分割(Semantic Part Segmentation)数据集介绍

六石编程学:不论是哪个功能,你觉得再没用,会用的人都离不了,所以至少要做到99%

C#使用ComboBox控件

IDEA connects to MySQL database and uses data

八大排序汇总及其稳定性

ECCV2022: Recursion on Transformer without adding parameters and less computation!

最新完整代码:使用word2vec预训练模型进行增量训练(两种保存方式对应的两种加载方式)适用gensim各种版本

“听我说谢谢你”还能用古诗来说?清华搞了个“据意查句”神器,一键搜索你想要的名言警句...

pytorch gpu版本安装最新

Comparison of Optical Motion Capture and UWB Positioning Technology in Multi-agent Cooperative Control Research

多智能体协同控制研究中光学动作捕捉与UWB定位技术比较

动作捕捉系统用于柔性机械臂的末端定位控制

Node version switching management using NVM

Shell project combat 1. System performance analysis