Comparison of Optical Motion Capture and UWB Positioning Technology in Multi-agent Cooperative Control Research

2022-07-31 13:47:00 【MocapLeader】

Whatever the task, people stress the value of teamwork. Control science, computer science and related disciplines are increasingly developing and merging across boundaries. As a result, in the field of agent control, controlling a single robot, unmanned aerial vehicle (UAV) or unmanned ground vehicle on its own no longer meets the field's technical needs. Agents are now expected to carry out multi-unit, multi-dimensional cooperative tasks, and the cooperative control and application of multi-agent systems has become a research hotspot in control, mathematics, communication, biology, artificial intelligence and other fields.

In multi-agent cooperative control, the two mainstream positioning technologies in use today are optical motion capture and UWB (Ultra-Wideband). Each has its own characteristics.

Type of Data Obtained

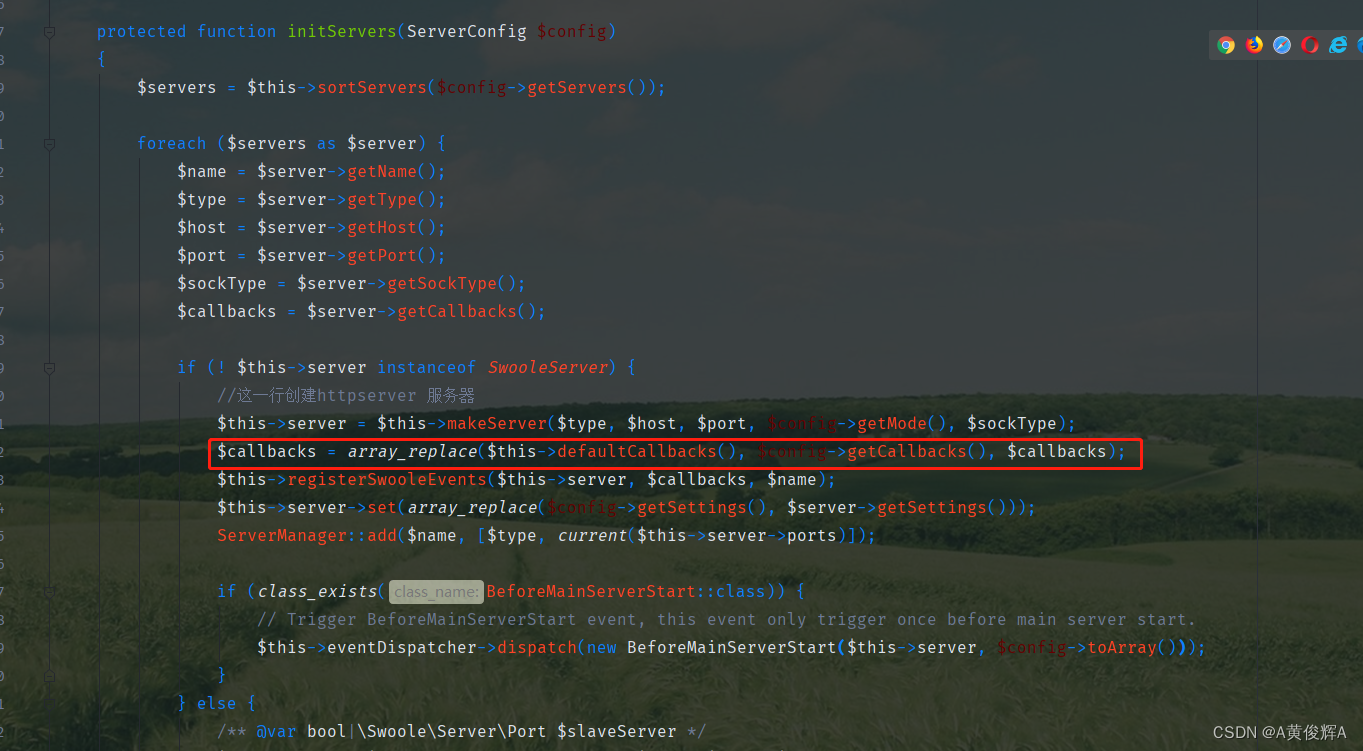

In a cooperative control experiment, an optical motion capture system uses its cameras to track the marker points attached to each agent and computes the agent's position and attitude from them. The data is sent to the host computer through the SDK or VRPN. The control software on the host then sends commands to the agent over a wireless link for real-time control. UWB, by contrast, is a wireless carrier communication technology that occupies a very wide frequency band (commonly cited as above 1 GHz), and it is not a positioning technology in itself. The agent must carry a signal-transmitting device, and its location is obtained indirectly from the radio signals exchanged with fixed anchors. Optical motion capture therefore provides both the position and the attitude of an agent, whereas UWB provides only its position. As requirements grow more diverse and complex, agents whose position alone is controlled can no longer meet market needs. A drone today is expected not just to fly in straight lines but to know its own attitude in the air, adjust it at any moment and even perform flips. UWB positioning cannot meet these control requirements.
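To make "computing pose from marker points" concrete, here is a minimal sketch for the planar case (for example a ground vehicle), assuming the marker layout in the body frame is known and the capture system reports the same markers in the same order. It uses the closed-form 2D least-squares rigid fit (the planar form of the Kabsch algorithm). The function name `estimate_pose_2d` and the marker coordinates are hypothetical and illustrative only; this is not NOKOV's actual solver, and full 3D attitude requires the SVD-based 3D version.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_pose_2d(model: List[Point], measured: List[Point]) -> Tuple[float, float, float]:
    """Return (x, y, yaw) such that measured ~= R(yaw) @ model + (x, y).

    model:    marker coordinates in the agent's body frame (hypothetical layout).
    measured: the same markers, in the same order, as reported by the cameras.
    """
    if len(model) != len(measured) or len(model) < 2:
        raise ValueError("need at least two corresponding markers")
    n = len(model)
    # Centroids of the body-frame layout and of the measured markers.
    mx = sum(p[0] for p in model) / n
    my = sum(p[1] for p in model) / n
    sx = sum(p[0] for p in measured) / n
    sy = sum(p[1] for p in measured) / n
    # Least-squares rotation: yaw = atan2(sum of cross products, sum of dot
    # products) over the centred point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (ax, ay), (bx, by) in zip(model, measured):
        ax, ay, bx, by = ax - mx, ay - my, bx - sx, by - sy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation moves the rotated model centroid onto the measured centroid.
    x = sx - (c * mx - s * my)
    y = sy - (s * mx + c * my)
    return x, y, yaw


if __name__ == "__main__":
    # Three markers on a hypothetical vehicle, rotated 90 degrees and moved to (1, 2).
    layout = [(0.0, 0.0), (0.2, 0.0), (0.0, 0.1)]
    seen = [(1.0, 2.0), (1.0, 2.2), (0.9, 2.0)]
    print(estimate_pose_2d(layout, seen))  # approximately (1.0, 2.0, pi/2)
```

With three or more non-collinear markers the fit averages out small per-marker noise, which is one reason mocap rigid bodies usually carry several markers rather than the minimum of two.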

Capture Accuracy and Latency

Beyond the type of data provided, the two technologies also differ in accuracy. An indoor UWB system built with civil or commercial equipment loses much of its accuracy, reaching only the centimeter level, and its transmission range is only about 10 m. Optical motion capture, by contrast, can reach sub-millimeter accuracy indoors, and its capture volume is not fixed: it can be expanded to suit the venue by adding cameras.
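Why UWB accuracy sits at the centimeter level becomes clearer once you look at how a UWB tag is located. A minimal sketch follows, assuming two-way ranging has already produced one distance per anchor, exactly three non-collinear anchors and no multipath error. The anchor coordinates and function names are hypothetical; real systems often use TDoA, more anchors and a least-squares or filtered solver.

```python
from typing import List, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_distance(tof_s: float) -> float:
    """Convert a one-way time of flight in seconds to a distance in metres."""
    return SPEED_OF_LIGHT * tof_s


def trilaterate_2d(anchors: List[Tuple[float, float]], ranges: List[float]) -> Tuple[float, float]:
    """Solve for the tag position (x, y) from its distances to three anchors."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    # Subtracting anchor 1's circle equation from those of anchors 2 and 3
    # removes the x^2 and y^2 terms and leaves a 2x2 linear system A @ [x, y] = b.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear")
    # Cramer's rule for the 2x2 system.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]  # hypothetical 10 m room
    ranges = [5.0, 65 ** 0.5, 45 ** 0.5]               # tag actually at (3, 4)
    print(trilaterate_2d(anchors, ranges))             # approximately (3.0, 4.0)
    # A 1 ns timing error already corresponds to roughly 0.3 m of range error.
    print(tof_to_distance(1e-9))
```

Because distance is the speed of light times flight time, centimeter-level ranging requires resolving timing to a few tens of picoseconds (1 cm is about 33 ps), which is why UWB accuracy is hard to push further in practice.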

Besides providing high-precision, low-interference data, an optical motion capture system streams the agent's position and attitude back to the host in real time. The end-to-end latency of the data returned to the agent is low and can be as little as a few milliseconds.
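Latency matters because the agent keeps moving while a pose sample is in transit. The sketch below estimates that lag error and shows one common mitigation, constant-velocity extrapolation. This is a generic technique, not something the source attributes to any particular system. The speed, frame interval and latency values are hypothetical. The model assumes roughly constant velocity between frames, and extrapolation also amplifies measurement noise.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def lag_error(speed_mps: float, latency_s: float) -> float:
    """Distance (m) the agent travels while one pose sample is delayed."""
    return speed_mps * latency_s


def extrapolate(prev: Vec3, curr: Vec3, frame_dt_s: float, latency_s: float) -> Tuple[float, ...]:
    """Predict the present position from the two latest samples.

    Velocity is estimated by finite difference over one frame interval and
    assumed constant for the duration of the latency.
    """
    return tuple(c + (c - p) / frame_dt_s * latency_s for p, c in zip(prev, curr))


if __name__ == "__main__":
    # Hypothetical drone moving at 2 m/s along x, 200 Hz frames, 5 ms latency.
    print(lag_error(2.0, 0.005))  # about 0.01 m behind the true position
    print(extrapolate((0.0, 0.0, 1.0), (0.01, 0.0, 1.0), 0.005, 0.005))
```

At a few milliseconds of latency the uncompensated lag stays around a centimeter even for a fairly fast agent, which is small relative to the motions a cooperative controller typically has to correct.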

Hardware Comparison

In terms of hardware, UWB requires a radio transmitter to be mounted on each agent, which adds complexity and uncertainty to the cooperative control process. With optical motion capture, only a few very light special markers are attached to the agent; no other equipment is needed, and the markers cause no interference with the agent. This ensures accurate position and attitude information without disturbing the cooperative control process, and provides a stable, reliable working space.

Typical Cases

Many teams in China are also researching multi-agent cooperative control. Professor Xia Yuanqing's team at the Beijing Institute of Technology, for example, has completed research on air-ground cooperative control. After repeatedly comparing different positioning technologies, the team chose the NOKOV optical 3D motion capture system as the positioning system for the final study. Throughout the research, the NOKOV system supplied the spatial position and attitude of the unmanned vehicles, which kept the work running smoothly. The preliminary research was completed successfully and was reported by Xinhuanet.

边栏推荐

- C# control StatusStrip use

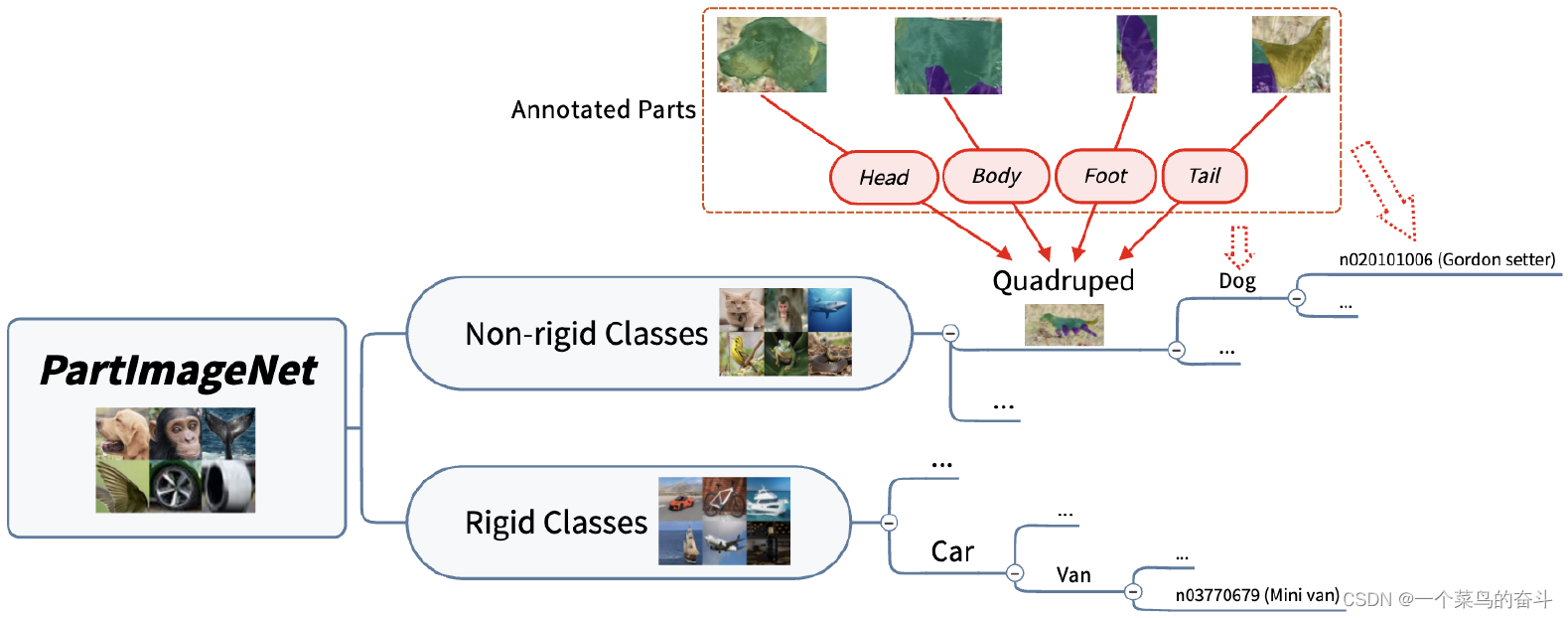

- Introduction to the PartImageNet Semantic Part Segmentation dataset

- endnote引用

- For enterprises in the digital age, data governance is difficult, but it should be done

- C#使用NumericUpDown控件

- Batch大小不一定是2的n次幂!ML资深学者最新结论

- VU 非父子组件通信

- Even if the image is missing in a large area, it can also be repaired realistically. The new model CM-GAN takes into account the global structure and texture details

- Edge Cloud Explained in Simple Depth | 4. Lifecycle Management

- ICML2022 | Fully Granular Self-Semantic Propagation for Self-Supervised Graph Representation Learning

猜你喜欢

随机推荐

模拟量差分和单端(iou计算方法)

MySQL玩到这种程度,难怪大厂抢着要!

C# using ComboBox control

六石编程学:不论是哪个功能,你觉得再没用,会用的人都离不了,所以至少要做到99%

C#控件StatusStrip使用

uniapp微信小程序引用标准版交易组件

Open Inventor 10.12 重大改进--和谐版

C#Assembly的使用

页面整屏滚动效果

技能大赛dhcp服务训练题

PHP Serialization: eval

go使用makefile脚本编译应用

Edge Cloud Explained in Simple Depth | 4. Lifecycle Management

Buffer 与 拥塞控制

Introduction to the PartImageNet Semantic Part Segmentation dataset

Invalid bound statement (not found)出现的原因和解决方法

拥塞控制,CDN,端到端

Save and load numpy matrices and vectors, and use the saved vectors for similarity calculation

leetcode:2032. 至少在两个数组中出现的值

All-round visual monitoring of the Istio microservice governance grid (microservice architecture display, resource monitoring, traffic monitoring, link monitoring)

https://www.nokov.com/support/case_studies_detail/multi-intelligent-cooperative-control-experimental-platform.html