
Hands on deep learning (36) -- language model and data set

2022-07-04 09:37:00 【Stay a little star】


Language models and data sets

Assume that the tokens in a text sequence of length $T$ are, in order, $x_1, x_2, \ldots, x_T$, so that $x_t$ can be regarded as the observation at time step $t$. Given such a text sequence, the goal of a language model is to estimate the joint probability of the sequence

$$P(x_1, x_2, \ldots, x_T).$$

Language models are incredibly useful. For instance, an ideal language model would be able to generate natural text just on its own, simply by drawing one token at a time, $x_t \sim P(x_t \mid x_{t-1}, \ldots, x_1)$. Quite unlike a monkey using a typewriter, all text emerging from such a model would pass as natural language (e.g., English text). Furthermore, it would be sufficient for generating a meaningful dialog, simply by conditioning the text on previous dialog fragments. Clearly we are still very far from designing such a system, since it would need to "understand" the text rather than just generate grammatically sensible content.

Nonetheless, language models are of great service even in their limited form. For instance, the phrases "to recognize speech" and "to wreck a nice beach" sound very similar. This can cause ambiguity in speech recognition, which is easily resolved by a language model that rejects the second transcription as outlandish. Likewise, in a document summarization algorithm it is worthwhile knowing that "dog bites man" is much more frequent than "man bites dog", or that "I want to eat grandma" is a rather disturbing statement, whereas "I want to eat, grandma" is much more benign.

Applications of language models:

- Pretraining models (e.g., BERT, GPT-3)

- Text generation: given the first few words, repeatedly sample $x_t \sim p(x_t \mid x_{t-1})$ to generate the subsequent text

- Determining which of several candidate sequences is more likely
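The text-generation use case can be illustrated with the simplest possible stand-in for $p(x_t \mid x_{t-1})$: raw bigram counts. This is a minimal sketch, not how a neural language model works; `fit_bigram` and `generate` are hypothetical helpers, and sampling is proportional to how often each word followed the previous one in the training tokens.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(tokens):
    """Count how often each word follows each other word (a count-based bigram model)."""
    successors = defaultdict(Counter)
    for prev, nxt in zip(tokens[:-1], tokens[1:]):
        successors[prev][nxt] += 1
    return successors

def generate(successors, start, length, rng=random):
    """Repeatedly draw x_t ~ p(x_t | x_{t-1}), with p proportional to bigram counts."""
    out = [start]
    while len(out) < length:
        counts = successors.get(out[-1])
        if not counts:  # the last word was never followed by anything: stop early
            break
        words, weights = zip(*counts.items())
        out.append(rng.choices(words, weights=weights)[0])
    return out

model = fit_bigram("a b c a b c".split())
print(generate(model, "a", 5, random.Random(0)))
```

Passing a seeded `random.Random` makes the sampled text reproducible; with a real corpus each word usually has many successors, so different seeds give different continuations.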

One 、 Learning language models

1.1 Theoretical explanation

The obvious question is how we should model a document, or even a sequence of tokens. Suppose that we tokenize text data at the word level. We can then rely on the analysis we applied to sequence models in the previous section. Let us start by applying basic probability rules:

For a sequence of length 2, we estimate

$$p(x, x') = p(x)\,p(x' \mid x) = \frac{n(x)}{n} \frac{n(x, x')}{n(x)}$$

- $n$ is the total number of words in the corpus

- $n(x)$ and $n(x, x')$ are the numbers of occurrences of single words and of consecutive word pairs, respectively

Extending this to a sequence of length 3:

$$p(x, x', x'') = p(x)\,p(x' \mid x)\,p(x'' \mid x, x') = \frac{n(x)}{n} \frac{n(x, x')}{n(x)} \frac{n(x, x', x'')}{n(x, x')}$$

And to a sequence of arbitrary length:

$$P(x_1, x_2, \ldots, x_T) = \prod_{t=1}^T P(x_t \mid x_1, \ldots, x_{t-1}).$$
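The count-based estimates above can be sketched in a few lines. This is a minimal illustration assuming a toy whitespace-tokenized corpus; `toy_corpus`, `ngram_counts`, and `chain_rule_prob` are hypothetical names, not part of d2l. Note that the ratios telescope, so for a word triple that does occur the estimate reduces to $n(x, x', x'')/n$.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """n(x_1, ..., x_n): how often each run of n consecutive tokens occurs."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def chain_rule_prob(tokens, seq):
    """Estimate P(seq) = n(x)/n * n(x,x')/n(x) * n(x,x',x'')/n(x,x') * ... from raw counts."""
    prob = ngram_counts(tokens, 1)[tuple(seq[:1])] / len(tokens)
    for k in range(2, len(seq) + 1):
        num = ngram_counts(tokens, k)[tuple(seq[:k])]
        if num == 0:  # unseen prefix: the raw-count estimate is exactly 0
            return 0.0
        prob *= num / ngram_counts(tokens, k - 1)[tuple(seq[:k - 1])]
    return prob

toy_corpus = "deep learning is fun deep learning is hard deep nets are fun".split()
print(chain_rule_prob(toy_corpus, ["deep", "learning", "is"]))  # 3/12 * 2/3 * 2/2
```

The zero returned for an unseen prefix is exactly the data-sparsity problem discussed in the following paragraphs.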

1.2 Example derivation

For example, the probability of a text sequence containing four words, "deep learning is fun", is:

$$P(\text{deep}, \text{learning}, \text{is}, \text{fun}) = P(\text{deep}) P(\text{learning} \mid \text{deep}) P(\text{is} \mid \text{deep}, \text{learning}) P(\text{fun} \mid \text{deep}, \text{learning}, \text{is}).$$

To compute the language model, we need to calculate the probability of words and the conditional probability of a word given the previous few words. Such probabilities are essentially the parameters of the language model.

Here, we assume that the training dataset is a large text corpus, such as all Wikipedia entries, Project Gutenberg, and all text posted on the Web. The probability of words can be calculated from the relative word frequency of a given word in the training dataset. For example, the estimate $\hat{P}(\text{deep})$ can be calculated as the probability of any sentence starting with the word "deep". A slightly less accurate approach is to count all occurrences of the word "deep" and divide that by the total number of words in the corpus. This works fairly well, particularly for frequent words. Moving on, we could attempt to estimate

$$\hat{P}(\text{learning} \mid \text{deep}) = \frac{n(\text{deep, learning})}{n(\text{deep})},$$

where $n(x)$ and $n(x, x')$ are the numbers of occurrences of single words and of consecutive word pairs, respectively. Unfortunately, estimating the probability of a word pair is somewhat more difficult, since occurrences of "deep learning" are a lot less frequent. In particular, for some unusual word combinations it may be tricky to find enough occurrences to get accurate estimates. Things get worse for three-word combinations and beyond: there will be many plausible three-word combinations that we are unlikely to see in our dataset. Unless we provide some way to assign such word combinations a nonzero count, we will not be able to use them in a language model. If the dataset is small or if the words are very rare, we might not find even a single occurrence of them.

A common strategy is to perform some form of ==Laplace smoothing==, which adds a small constant to all counts. Denote by $n$ the total number of words in the training set and by $m$ the number of unique words. This helps with singletons, e.g., via:

$$\begin{aligned}
\hat{P}(x) & = \frac{n(x) + \epsilon_1/m}{n + \epsilon_1}, \\
\hat{P}(x' \mid x) & = \frac{n(x, x') + \epsilon_2 \hat{P}(x')}{n(x) + \epsilon_2}, \\
\hat{P}(x'' \mid x,x') & = \frac{n(x, x',x'') + \epsilon_3 \hat{P}(x'')}{n(x, x') + \epsilon_3}.
\end{aligned}$$

Here $\epsilon_1$, $\epsilon_2$, and $\epsilon_3$ are hyperparameters. Take $\epsilon_1$ as an example: when $\epsilon_1 = 0$, no smoothing is applied; when $\epsilon_1$ approaches positive infinity, $\hat{P}(x)$ approaches the uniform probability $1/m$. The above is a rather primitive variant of what other techniques can accomplish :cite:`Wood.Gasthaus.Archambeau.ea.2011`.
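The first two smoothing formulas translate almost literally into code. This is a minimal sketch under stated assumptions: `laplace_unigram` and `laplace_bigram` are hypothetical helpers, words are whitespace tokens, and only in-vocabulary words are handled (an unknown $x'$ raises `KeyError`).

```python
from collections import Counter

def laplace_unigram(tokens, eps1):
    """P^(x) = (n(x) + eps1/m) / (n + eps1) for each of the m distinct words."""
    counts = Counter(tokens)
    n, m = len(tokens), len(counts)
    return {x: (c + eps1 / m) / (n + eps1) for x, c in counts.items()}

def laplace_bigram(tokens, eps1, eps2):
    """Return a function computing P^(x' | x) = (n(x, x') + eps2 * P^(x')) / (n(x) + eps2)."""
    unigram = laplace_unigram(tokens, eps1)
    counts = Counter(tokens)
    pair_counts = Counter(zip(tokens[:-1], tokens[1:]))

    def prob(x_next, x):
        return (pair_counts[(x, x_next)] + eps2 * unigram[x_next]) / (counts[x] + eps2)

    return prob

toks = "deep learning is fun deep learning is hard".split()
p = laplace_bigram(toks, eps1=1.0, eps2=1.0)
print(p("learning", "deep"), p("fun", "deep"))  # the unseen pair (deep, fun) now gets a nonzero probability
```

Setting `eps1=0` recovers the raw relative frequency, and a very large `eps1` pushes every $\hat{P}(x)$ toward $1/m$, matching the two limiting cases described above.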

Unfortunately, models like this get unwieldy rather quickly for the following reasons:

- First, we need to store all counts.

- Second, this entirely ignores the meaning of the words. For instance, "cat" and "feline" should occur in related contexts. It is quite difficult to adjust such models to additional contexts, whereas deep learning based language models are well suited to take this into account.

- Last, long word sequences are almost certain to be novel, hence a model that simply counts the frequency of previously seen word sequences is bound to perform poorly on them.
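To get a feel for why storing all counts becomes a problem, one can bound how many distinct $k$-gram counts a model might need: at most $m^k$ possible word tuples for a vocabulary of $m$ words, and at most $T - k + 1$ actually occurring ones in a text of $T$ tokens. The helper below is a hypothetical sketch of that arithmetic, not part of any library.

```python
def ngram_table_bounds(vocab_size, num_tokens, max_n):
    """Upper bound on the number of distinct k-gram counts, for k = 1..max_n."""
    bounds = {}
    for k in range(1, max_n + 1):
        possible = vocab_size ** k              # every ordered k-tuple of vocabulary words
        occurring = max(num_tokens - k + 1, 0)  # a text of T tokens has T - k + 1 k-gram positions
        bounds[k] = min(possible, occurring)
    return bounds

# A vocabulary of 10**5 words and an (assumed) enormous corpus
print(ngram_table_bounds(10**5, 10**30, 4))
```

For a vocabulary of $10^5$ words the four-gram bound is already $10^{20}$, so unless the corpus is shorter than that, the table of possible counts cannot realistically be stored.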

Two 、 Markov models and $n$-grams

Before we discuss solutions involving deep learning, we need some more terminology and concepts. Recall our discussion of Markov models in the section on sequence models, and let us apply it to language modeling. A distribution over sequences satisfies the first-order Markov property if $P(x_{t+1} \mid x_t, \ldots, x_1) = P(x_{t+1} \mid x_t)$. Higher orders correspond to longer dependencies. This leads to a number of approximations that we could apply to model a sequence:

$$\begin{aligned}
P(x_1, x_2, x_3, x_4) &= P(x_1) P(x_2) P(x_3) P(x_4),\\
P(x_1, x_2, x_3, x_4) &= P(x_1) P(x_2 \mid x_1) P(x_3 \mid x_2) P(x_4 \mid x_3),\\
P(x_1, x_2, x_3, x_4) &= P(x_1) P(x_2 \mid x_1) P(x_3 \mid x_1, x_2) P(x_4 \mid x_2, x_3).
\end{aligned}$$

The probability formulas that involve one, two, and three variables are typically referred to as unigram, bigram, and trigram models, respectively. In the following, we will learn how to design better models.
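The difference between the first two approximations shows up when scoring word order, as in the "dog bites man" example earlier. The sketch below is a hypothetical illustration using unsmoothed maximum-likelihood counts on a made-up toy corpus; it returns log-probabilities, with `-inf` for any unseen event.

```python
import math
from collections import Counter

def log_prob(tokens, seq, model):
    """log P(seq) under a 'unigram' or 'bigram' (first-order Markov) model with raw counts."""
    uni = Counter(tokens)
    bi = Counter(zip(tokens[:-1], tokens[1:]))
    total = 0.0
    for t, x in enumerate(seq):
        if model == "unigram" or t == 0:
            num, den = uni[x], len(tokens)                   # P(x_t) = n(x_t) / n
        else:
            num, den = bi[(seq[t - 1], x)], uni[seq[t - 1]]  # P(x_t | x_{t-1})
        if num == 0:
            return float("-inf")                             # unseen event: probability 0
        total += math.log(num / den)
    return total

toy_corpus = "the dog bites the man the dog barks".split()
for s in ["the dog bites the man", "the man bites the dog"]:
    print(s, log_prob(toy_corpus, s.split(), "unigram"), log_prob(toy_corpus, s.split(), "bigram"))
```

Both sentences contain the same words, so the unigram model cannot tell them apart, while the bigram model prefers the word order it has actually seen.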

Three 、 Natural language statistics

3.1 Comparing $n$-grams

Let us see how this works on real data. We construct a vocabulary based on the time machine dataset introduced in the text preprocessing section and print the 10 most frequent words.

```python
import random
import torch
from d2l import torch as d2l

# Read the text data as word tokens
tokens = d2l.tokenize(d2l.read_time_machine())
# Since each text line is not necessarily a sentence or a paragraph,
# we concatenate all text lines into one corpus
corpus = [token for line in tokens for token in line]
vocab = d2l.Vocab(corpus)
vocab.token_freqs[:10]
```

```
[('the', 2261),
 ('i', 1267),
 ('and', 1245),
 ('of', 1155),
 ('a', 816),
 ('to', 695),
 ('was', 552),
 ('in', 541),
 ('that', 443),
 ('my', 440)]
```

```python
# The most frequent words are called stop words.
# Plot the frequency of every word: the x-axis is the rank, the y-axis the frequency
freqs = [freq for token, freq in vocab.token_freqs]
d2l.plot(freqs, xlabel='token: x', ylabel='frequency: n(x)', xscale='log',
         yscale='log')
```

Word frequency decays rapidly in a well-defined way. After dealing with the first few words as exceptions, all the remaining words roughly follow a straight line on a log-log plot. This means that words satisfy Zipf's law, which states that the frequency $n_i$ of the $i^\mathrm{th}$ most frequent word is

$$n_i \propto \frac{1}{i^\alpha},$$

which is equivalent to

$$\log n_i = -\alpha \log i + c,$$

where $\alpha$ is the exponent that characterizes the distribution and $c$ is a constant. This tells us that modeling words by counting statistics and smoothing is not viable: such a model would significantly overestimate the frequency of the tail, that is, the infrequent words. But what about other word combinations, such as bigrams, trigrams, and beyond? Let us see whether the bigram frequency behaves in the same manner as the unigram frequency.
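Because Zipf's law is a straight line in log-log space, one simple way to estimate $\alpha$ is an ordinary least-squares fit to the (log rank, log frequency) pairs. This is a sketch under assumptions, not the method used by d2l: `fit_zipf` is a hypothetical helper, the frequencies must already be sorted in decreasing order, and the `skip` parameter drops the first few ranks that the text treats as exceptions.

```python
import math

def fit_zipf(freqs, skip=0):
    """Least-squares fit of log n_i = -alpha * log i + c to rank-sorted frequencies.

    `freqs` must be sorted in decreasing order (rank 1 first); ranks 1..skip are ignored.
    """
    pts = [(math.log(i), math.log(f))
           for i, f in enumerate(freqs, start=1) if i > skip and f > 0]
    mean_x = sum(x for x, _ in pts) / len(pts)
    mean_y = sum(y for _, y in pts) / len(pts)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in pts)
             / sum((x - mean_x) ** 2 for x, _ in pts))
    return -slope, mean_y - slope * mean_x  # (alpha, c)

# Sanity check on synthetic frequencies that follow Zipf's law exactly
synthetic = [1000 / i ** 1.2 for i in range(1, 501)]
print(fit_zipf(synthetic))
```

With the `freqs` list from the plotting code above, something like `fit_zipf(freqs, skip=10)` would give a rough estimate of $\alpha$ for unigrams; the cutoff of 10 is an arbitrary choice, and the same call works for the bigram and trigram frequency lists.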

```python
# Bigram frequencies: pair every word with the word that follows it,
# i.e., zip the corpus with a copy of itself shifted by one word
bigram_tokens = [pair for pair in zip(corpus[:-1], corpus[1:])]
bigram_vocab = d2l.Vocab(bigram_tokens)
bigram_vocab.token_freqs[:10]
```

```
[(('of', 'the'), 309),
 (('in', 'the'), 169),
 (('i', 'had'), 130),
 (('i', 'was'), 112),
 (('and', 'the'), 109),
 (('the', 'time'), 102),
 (('it', 'was'), 99),
 (('to', 'the'), 85),
 (('as', 'i'), 78),
 (('of', 'a'), 73)]
```

One thing is notable here: of the ten most frequent word pairs, nine are composed of two stop words, and only one, "the time", is related to the actual book. Furthermore, let us see whether the trigram frequency behaves in the same manner.

```python
trigram_tokens = [
    triple for triple in zip(corpus[:-2], corpus[1:-1], corpus[2:])]
trigram_vocab = d2l.Vocab(trigram_tokens)
trigram_vocab.token_freqs[:10]
```

```
[(('the', 'time', 'traveller'), 59),
 (('the', 'time', 'machine'), 30),
 (('the', 'medical', 'man'), 24),
 (('it', 'seemed', 'to'), 16),
 (('it', 'was', 'a'), 15),
 (('here', 'and', 'there'), 15),
 (('seemed', 'to', 'me'), 14),
 (('i', 'did', 'not'), 14),
 (('i', 'saw', 'the'), 13),
 (('i', 'began', 'to'), 13)]
```

```python
# Plot the token frequencies of all three models
bigram_freqs = [freq for token, freq in bigram_vocab.token_freqs]
trigram_freqs = [freq for token, freq in trigram_vocab.token_freqs]
d2l.plot([freqs, bigram_freqs, trigram_freqs], xlabel='token: x',
         ylabel='frequency: n(x)', xscale='log', yscale='log',
         legend=['unigram', 'bigram', 'trigram'])
```

The plot above reveals several key points:

- Beyond unigram words, sequences of words also appear to follow Zipf's law, albeit with a smaller exponent $\alpha$ in $n_i \propto 1/i^\alpha$, depending on the sequence length.

- The number of distinct $n$-grams is not that large. This suggests that there is quite a lot of structure in language.

- Many $n$-grams occur very rarely, which makes Laplace smoothing rather unsuitable for language modeling.
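To put numbers on the last two points, a small hypothetical helper can count, for each $n$, how many distinct $n$-grams a token list contains and what fraction of them occur only once. Zipping $n$ shifted copies of the list generalizes the `zip(corpus[:-1], corpus[1:])` pattern used for bigrams above; the toy input here is made up for illustration.

```python
from collections import Counter

def ngram_stats(tokens, max_n=3):
    """For n = 1..max_n, return (number of distinct n-grams, fraction seen only once)."""
    stats = {}
    for n in range(1, max_n + 1):
        # zip over n shifted copies of the token list yields every run of n consecutive tokens
        counts = Counter(zip(*(tokens[i:] for i in range(n))))
        if not counts:  # fewer than n tokens: no n-grams at all
            break
        singletons = sum(1 for c in counts.values() if c == 1)
        stats[n] = (len(counts), singletons / len(counts))
    return stats

print(ngram_stats("a b a b a c".split()))
```

Calling `ngram_stats(corpus)` on the time machine corpus from above would show how the share of singletons changes as $n$ grows; no figures for it are reproduced here.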

3.2 Read long sequence data

Since sequence data are by their very nature sequential, we need to address the issue of processing them. We did so in a rather ad hoc manner in the section on sequence models. When sequences get too long to be processed by models all at once, we may wish to split such sequences for reading. Now let us describe general strategies. Before introducing the model, let us assume that we will use a neural network to train a language model, where the network processes a minibatch of sequences with a predefined length, say $n$ time steps, at a time. Now the question is how to read minibatches of features and labels at random.

To begin with, since a text sequence can be arbitrarily long, such as the entire book *The Time Machine*, we can partition such a long sequence into subsequences with the same number of time steps. When training our neural network, a minibatch of such subsequences will be fed into the model. Suppose that the network processes a subsequence of $n$ time steps at a time. The following figure shows all the different ways to obtain subsequences from an original text sequence, where $n=5$ and the token at each time step corresponds to a character. Note that we have quite some freedom, since we could pick an arbitrary offset that indicates the initial position.

Hence, which one should we pick? In fact, all of them are equally good. However, if we pick just one offset, there is limited coverage of all the possible subsequences for training our network. Therefore, we can start with a random offset to partition a sequence to get both coverage and randomness. In the following, we describe how to accomplish this for both the random sampling and the sequential partitioning strategies.

3.2.1 Random sampling

In random sampling, each example is a subsequence arbitrarily captured on the original long sequence. The subsequences from two adjacent random minibatches during iteration are not necessarily adjacent on the original sequence. For language modeling, the target is to predict the next token based on the tokens seen so far, hence the labels are the original sequence shifted by one token.

The following code randomly generates a minibatch from the data each time. Here, the argument `batch_size` specifies the number of subsequence examples in each minibatch and `num_steps` is the predefined number of time steps in each subsequence.

```python
def seq_data_iter_random(corpus, batch_size, num_steps):
    """Generate a minibatch of subsequences using random sampling."""
    # Start partitioning at a random offset; the range includes `num_steps - 1`
    corpus = corpus[random.randint(0, num_steps - 1):]
    # Subtract 1 because we need to account for the labels
    num_subseqs = (len(corpus) - 1) // num_steps
    # The starting indices of the subsequences of length `num_steps`
    initial_indices = list(range(0, num_subseqs * num_steps, num_steps))
    # In random sampling, the subsequences from two adjacent minibatches
    # during iteration are not necessarily adjacent on the original sequence
    random.shuffle(initial_indices)

    def data(pos):
        # Return a sequence of length `num_steps` starting from `pos`
        return corpus[pos:pos + num_steps]

    num_batches = num_subseqs // batch_size
    for i in range(0, batch_size * num_batches, batch_size):
        # Here, `initial_indices` holds the randomized starting indices of the subsequences
        initial_indices_per_batch = initial_indices[i:i + batch_size]
        X = [data(j) for j in initial_indices_per_batch]
        Y = [data(j + 1) for j in initial_indices_per_batch]
        yield torch.tensor(X), torch.tensor(Y)
```

Let us manually generate a sequence from 0 to 34. We assume that the batch size and the number of time steps are 2 and 5, respectively. This means that we can generate $\lfloor (35 - 1) / 5 \rfloor = 6$ feature-label subsequence pairs. With a minibatch size of 2, we only get 3 minibatches.

```python
my_seq = list(range(35))
for X, Y in seq_data_iter_random(my_seq, batch_size=2, num_steps=5):
    print('X: ', X, '\nY:', Y)
```

Because of the random offset and the shuffling, the output differs between runs. One run produced:

```
X:  tensor([[18, 19, 20, 21, 22],
        [28, 29, 30, 31, 32]])
Y: tensor([[19, 20, 21, 22, 23],
        [29, 30, 31, 32, 33]])
X:  tensor([[ 8,  9, 10, 11, 12],
        [ 3,  4,  5,  6,  7]])
Y: tensor([[ 9, 10, 11, 12, 13],
        [ 4,  5,  6,  7,  8]])
X:  tensor([[23, 24, 25, 26, 27],
        [13, 14, 15, 16, 17]])
Y: tensor([[24, 25, 26, 27, 28],
        [14, 15, 16, 17, 18]])
```

3.2.2 Sequential partition

Sequential partitioning ensures that the subsequences from two adjacent minibatches during iteration are also adjacent on the original sequence, i.e., the order of the split subsequences is preserved when iterating over minibatches.

```python
def seq_data_iter_sequential(corpus, batch_size, num_steps):
    """Generate a minibatch of subsequences using sequential partitioning."""
    # Start partitioning the sequence at a random offset
    offset = random.randint(0, num_steps)
    # Keep only as many tokens as split evenly into `batch_size` rows
    num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size
    Xs = torch.tensor(corpus[offset:offset + num_tokens])
    Ys = torch.tensor(corpus[offset + 1:offset + num_tokens + 1])
    # Each row is a contiguous stretch of the corpus
    Xs, Ys = Xs.reshape(batch_size, -1), Ys.reshape(batch_size, -1)
    num_batches = Xs.shape[1] // num_steps
    for i in range(0, num_steps * num_batches, num_steps):
        X = Xs[:, i:i + num_steps]
        Y = Ys[:, i:i + num_steps]
        yield X, Y
```

```python
for X, Y in seq_data_iter_sequential(my_seq, batch_size=2, num_steps=5):
    print('X: ', X, '\nY:', Y)
```

```
X:  tensor([[ 0,  1,  2,  3,  4],
        [17, 18, 19, 20, 21]])
Y: tensor([[ 1,  2,  3,  4,  5],
        [18, 19, 20, 21, 22]])
X:  tensor([[ 5,  6,  7,  8,  9],
        [22, 23, 24, 25, 26]])
Y: tensor([[ 6,  7,  8,  9, 10],
        [23, 24, 25, 26, 27]])
X:  tensor([[10, 11, 12, 13, 14],
        [27, 28, 29, 30, 31]])
Y: tensor([[11, 12, 13, 14, 15],
        [28, 29, 30, 31, 32]])
```
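The adjacency property of sequential partitioning can be checked mechanically. The sketch below is a torch-free, list-based re-implementation with a fixed offset so that it is deterministic; both `sequential_batches` and `continues_previous_batch` are hypothetical helpers, and the check assumes the corpus consists of consecutive integers, as `my_seq` does.

```python
def sequential_batches(corpus, batch_size, num_steps, offset=0):
    """List-based sketch of sequential partitioning with a fixed (not random) offset."""
    num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size
    row_len = num_tokens // batch_size
    # Row r is a contiguous slice of the corpus, mirroring Xs.reshape(batch_size, -1)
    x_rows = [corpus[offset + r * row_len:offset + (r + 1) * row_len]
              for r in range(batch_size)]
    y_rows = [corpus[offset + 1 + r * row_len:offset + 1 + (r + 1) * row_len]
              for r in range(batch_size)]
    num_batches = row_len // num_steps
    for i in range(0, num_batches * num_steps, num_steps):
        X = [row[i:i + num_steps] for row in x_rows]
        Y = [row[i:i + num_steps] for row in y_rows]
        yield X, Y

def continues_previous_batch(batches):
    """True if each row of every minibatch X starts right after the same row of the previous X.

    Assumes the corpus is a run of consecutive integers, e.g. list(range(35)).
    """
    prev = None
    for X, _ in batches:
        if prev is not None and any(p[-1] + 1 != x[0] for p, x in zip(prev, X)):
            return False
        prev = X
    return True

batches = list(sequential_batches(list(range(35)), batch_size=2, num_steps=5))
print(batches[0], continues_previous_batch(batches))
```

The same check can be run on the tensor-based iterators by converting each batch with `.tolist()`: it should hold for `seq_data_iter_sequential`, and will usually fail for `seq_data_iter_random` because of the shuffled start indices.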

3.2.3 Wrapping the sampling functions

```python
class SeqDataLoader:  #@save
    """An iterator that loads sequence data."""
    def __init__(self, batch_size, num_steps, use_random_iter, max_tokens):
        if use_random_iter:
            self.data_iter_fn = d2l.seq_data_iter_random
        else:
            self.data_iter_fn = d2l.seq_data_iter_sequential
        self.corpus, self.vocab = d2l.load_corpus_time_machine(max_tokens)
        self.batch_size, self.num_steps = batch_size, num_steps

    def __iter__(self):
        return self.data_iter_fn(self.corpus, self.batch_size, self.num_steps)

# Define load_data_time_machine, which returns both the data iterator and the vocabulary
def load_data_time_machine(batch_size, num_steps,  #@save
                           use_random_iter=False, max_tokens=10000):
    """Return the iterator and the vocabulary of the time machine dataset."""
    data_iter = SeqDataLoader(batch_size, num_steps, use_random_iter,
                              max_tokens)
    return data_iter, data_iter.vocab
```

Four 、 Summary

- Language models are key to natural language processing.

- $n$-grams provide a convenient model for dealing with long sequences by truncating the dependence.

- Long sequences suffer from the problem that they occur very rarely or never.

- Zipf's law governs the word distribution for not only unigrams but also the other $n$-grams.

- There is a lot of structure, but infrequent word combinations do not occur often enough to be handled efficiently by Laplace smoothing.

- The main choices for reading long sequences are random sampling and sequential partitioning. The latter ensures that the subsequences from two adjacent minibatches during iteration are also adjacent on the original sequence.

Five 、 Exercises

1. Suppose there are 100,000 words in the training dataset. How many word frequencies and multi-word adjacent frequencies does a four-gram model need to store?

I think there are $10^5$ word frequencies (one per word), and up to $10^5 \times 10^5 \times 10^5 \times 10^5 = (10^5)^4$ adjacent four-word frequencies.

2. How would we model a dialogue?

This is not my research direction, so here are some thoughts from others: https://zhuanlan.zhihu.com/p/296331236

3. The exponents of Zipf's law differ for unigrams, bigrams, and trigrams. Can you try to estimate them?

4. Can you think of any other methods to read long sequence data?

Some speculative ideas of mine:

- Besides cutting the sequence into pieces, we could keep the whole sequence, use a random seed to choose a sampling position, and then keep sampling positions at random. If the positions follow a uniform distribution, every word should in theory have roughly the same chance of being selected, so this kind of sampling seems feasible to some extent.

- Besides cutting from the front, we could also cut from the back (in reverse).

5. Consider the random offset that we use for reading long sequences.

- Why is it a good idea to have a random offset?

  It avoids always producing the same chunks of consecutive words, while ensuring that every word has a chance to be used.

- Does it really lead to a perfectly uniform distribution over the sequences in the document?

  No, because of the randomness involved.

- What would you have to do to make things even more uniform?

  Perhaps also sample in reverse, or re-draw the offset?

6. If we want a sequence example to be a complete sentence, what kind of problem does this introduce in minibatch sampling? How can we fix the problem?

边栏推荐

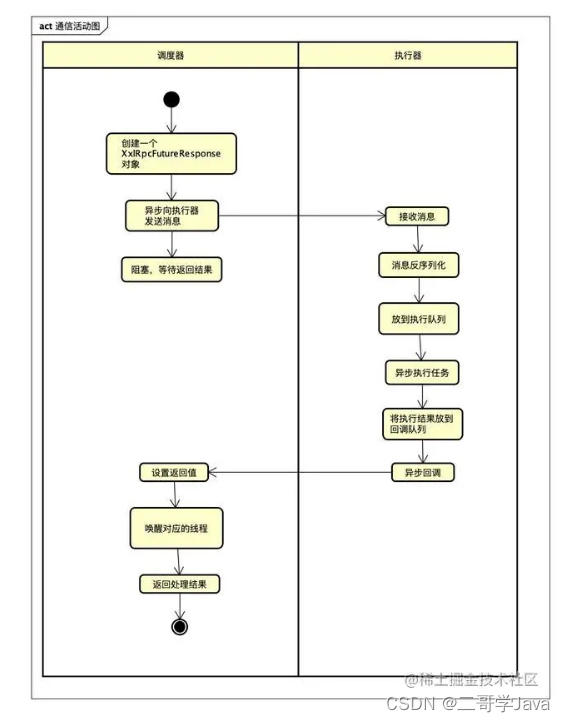

- xxl-job惊艳的设计,怎能叫人不爱

- Golang Modules

- Global and Chinese market of air fryer 2022-2028: Research Report on technology, participants, trends, market size and share

- How should PMP learning ideas be realized?

- 2022-2028 global edible probiotic raw material industry research and trend analysis report

- Reading notes on how to connect the network - tcp/ip connection (II)

- C # use gdi+ to add text with center rotation (arbitrary angle)

- 2022-2028 global industry research and trend analysis report on anterior segment and fundus OTC detectors

- QTreeView+自定义Model实现示例

- Trim leading or trailing characters from strings- Trim leading or trailing characters from a string?

猜你喜欢

百度研发三面惨遭滑铁卢:面试官一套组合拳让我当场懵逼

Hands on deep learning (34) -- sequence model

GoLand environment variable configuration

SSM online examination system source code, database using mysql, online examination system, fully functional, randomly generated question bank, supporting a variety of question types, students, teache

2022-2028 global intelligent interactive tablet industry research and trend analysis report

2022-2028 global gasket metal plate heat exchanger industry research and trend analysis report

回复评论的sql

xxl-job惊艳的设计,怎能叫人不爱

Daughter love: frequency spectrum analysis of a piece of music

2022-2028 global edible probiotic raw material industry research and trend analysis report

随机推荐

165 webmaster online toolbox website source code / hare online tool system v2.2.7 Chinese version

2022-2028 global gasket metal plate heat exchanger industry research and trend analysis report

《网络是怎么样连接的》读书笔记 - 集线器、路由器和路由器(三)

Write a jison parser from scratch (3/10): a good beginning is half the success -- "politics" (Aristotle)

mmclassification 标注文件生成

C语言指针面试题——第二弹

Global and Chinese market of planar waveguide optical splitter 2022-2028: Research Report on technology, participants, trends, market size and share

You can see the employment prospects of PMP project management

Logstack configuration details -- elasticstack (elk) work notes 020

Flutter 小技巧之 ListView 和 PageView 的各種花式嵌套

Lauchpad X | 模式

《网络是怎么样连接的》读书笔记 - Tcp/IP连接(二)

Rules for using init in golang

Report on investment analysis and prospect trend prediction of China's MOCVD industry Ⓤ 2022 ~ 2028

2022-2028 global elastic strain sensor industry research and trend analysis report

Pueue data migration from '0.4.0' to '0.5.0' versions

Hands on deep learning (32) -- fully connected convolutional neural network FCN

Hands on deep learning (35) -- text preprocessing (NLP)

Global and Chinese markets of thrombography hemostasis analyzer (TEG) 2022-2028: Research Report on technology, participants, trends, market size and share

GoLand environment variable configuration