[Bookmark now, you will need it sooner or later] 100 High-Frequency Flink Interview Questions Series (Part 1)

2022-06-11 17:51:00 【Big data Institute】

We keep sharing useful, valuable, hand-picked big data interview questions.

We are committed to building the most comprehensive big data interview question bank on the web.

1. How do you stress test and monitor Flink?

Reference answer: The pressure we usually encounter comes from the following sources:

(1) If the data stream is produced faster than the downstream operators can consume it, backpressure builds up. Backpressure can be monitored visually in the Flink Web UI (localhost:8081), so the problem is noticed as soon as it is flagged. Backpressure is usually caused by a poorly optimized sink operator, and tuning the sink is often enough. For example, when writing to ElasticSearch, you can switch to batch writes and increase the ElasticSearch queue size.

(2) The maximum delay configured for the watermark: if it is set too large, it can cause memory pressure. You can set a smaller maximum delay, send late elements to a side output stream, and update the results afterwards. Alternatively, use a state backend such as RocksDB. RocksDB keeps state outside the JVM heap (off-heap memory and local disk), but I/O becomes slower, so a trade-off is required.

(3) If a sliding window is very long while its slide is very short, Flink's performance drops sharply, because every element belongs to size / slide windows. The main mitigation is time slicing: each element is stored in exactly one non-overlapping slice, and each window is assembled from its slices, which reduces the state writes during window processing.
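The slicing idea in (3) can be sketched in plain Java without any Flink dependency. The class `SlicedSlidingSum` and its methods are hypothetical names chosen for illustration, and the sketch assumes that the window size is a whole multiple of the slide and that a simple sum is the aggregate.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java sketch of sliding-window slicing (hypothetical class, not a Flink API). */
final class SlicedSlidingSum {
    private final long sizeMs;   // window length
    private final long slideMs;  // slide interval, assumed to divide sizeMs evenly
    // One partial sum per non-overlapping slice, keyed by the slice start time.
    private final Map<Long, Long> sliceSums = new HashMap<>();

    SlicedSlidingSum(long sizeMs, long slideMs) {
        if (slideMs <= 0 || sizeMs <= 0 || sizeMs % slideMs != 0) {
            throw new IllegalArgumentException("size must be a positive multiple of slide");
        }
        this.sizeMs = sizeMs;
        this.slideMs = slideMs;
    }

    /** Each element updates exactly one slice, however many windows overlap it. */
    void add(long timestampMs, long value) {
        long sliceStart = Math.floorDiv(timestampMs, slideMs) * slideMs;
        sliceSums.merge(sliceStart, value, Long::sum);
    }

    /** Sum of the window [windowStartMs, windowStartMs + size), built from its slices. */
    long windowSum(long windowStartMs) {
        long total = 0;
        for (long s = windowStartMs; s < windowStartMs + sizeMs; s += slideMs) {
            total += sliceSums.getOrDefault(s, 0L);
        }
        return total;
    }

    /** Number of slices currently held in state. */
    int sliceCount() {
        return sliceSums.size();
    }
}
```

With a 4-second window sliding every second, each element would otherwise be written into four windows; here it is written once, and the extra cost moves to read time, when a window adds up its four slices.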

2. How do you reasonably evaluate the parallelism of a Flink job?

Reference answer: A reasonable parallelism for a Flink job is generally determined by stress testing against peak traffic, leaving some buffer resources depending on the cluster load.

If the data source already exists, you can consume from it directly for testing.

If the data source does not exist yet, you need to generate stress-test data for testing.

Within a single Flink job, parallelism can generally be set at a fine granularity in the following ways:

Source parallelism: taking Kafka as an example, the source parallelism is generally set to the number of partitions of the corresponding Kafka topic.

Transform parallelism (operators such as flatMap, map, filter): these operators usually do not do heavy work, so their parallelism can be kept the same as the source. The operators can then pass data with forward partitioning, without network transfer.

Operators after keyBy: it is recommended that the maximum parallelism be an integer multiple of the operator parallelism. Every subtask then owns the same number of key groups, so data is shuffled to the downstream operator relatively evenly. The figure below shows the shuffle strategy.

Sink parallelism: the sink is where data flows to downstream systems, so evaluate it based on the sink's data volume and the load the downstream service can withstand. If the sink is Kafka, it can be set to the number of partitions of the corresponding Kafka topic. Note that the sink parallelism and the Kafka partition count should preferably be whole multiples of one another; otherwise data may be spread unevenly across the Kafka partitions. In most cases, though, sink parallelism needs no special setting and only has to match the parallelism of the whole job.
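The recommendation for operators after keyBy can be checked with a small plain-Java sketch. It assumes the commonly described assignment rule in which key group `kg` goes to subtask `kg * parallelism / maxParallelism`. The class `KeyGroupSpread` is a hypothetical name, and the hashing step that maps a key to its key group is left out.

```java
/** Plain-Java sketch of how key groups spread over subtasks (hypothetical class). */
final class KeyGroupSpread {
    /** Subtask that owns a key group, assuming kg * parallelism / maxParallelism. */
    static int subtaskFor(int keyGroup, int maxParallelism, int parallelism) {
        return keyGroup * parallelism / maxParallelism;
    }

    /** Number of key groups owned by each subtask. */
    static int[] keyGroupsPerSubtask(int maxParallelism, int parallelism) {
        int[] counts = new int[parallelism];
        for (int kg = 0; kg < maxParallelism; kg++) {
            counts[subtaskFor(kg, maxParallelism, parallelism)]++;
        }
        return counts;
    }
}
```

With a maximum parallelism of 128, a parallelism of 4 gives every subtask 32 key groups. A parallelism of 100 leaves some subtasks with two key groups and others with one, so the busiest subtasks handle roughly twice the key space of the rest.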

3. How does Flink guarantee exactly-once semantics?

Reference answer: Flink achieves end-to-end consistency semantics by implementing two-phase commit together with state persistence. The process consists of the following steps:

- Begin transaction (beginTransaction): create a temporary folder and write data into it.

- Pre-commit (preCommit): flush the data buffered in memory to a file and close it.

- Commit (commit): move the previously written temporary files into the target directory. This means the final data becomes visible with some delay.

- Abort (abort): discard the temporary files.

If a failure occurs after the pre-commit has succeeded but before the commit, the pre-committed data can, based on the saved state, either be committed or be deleted.
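The four steps above can be sketched with plain `java.nio.file` calls. `TwoPhaseFileWriter` is a hypothetical class written for illustration, not Flink's sink API. It assumes one open transaction at a time and that the temporary and target directories sit on the same filesystem, so the move can be atomic.

```java
import java.io.IOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

/** Plain-Java sketch of a file-based two-phase commit (hypothetical class). */
final class TwoPhaseFileWriter {
    private final Path tempDir;
    private final Path targetDir;
    private Path tempFile;
    private Writer writer;

    TwoPhaseFileWriter(Path tempDir, Path targetDir) {
        this.tempDir = tempDir;
        this.targetDir = targetDir;
    }

    /** Begin transaction: open a fresh temporary file for this transaction. */
    void beginTransaction(String txnId) throws IOException {
        Files.createDirectories(tempDir);
        tempFile = tempDir.resolve(txnId + ".tmp");
        writer = Files.newBufferedWriter(tempFile, StandardCharsets.UTF_8);
    }

    /** Records go to the temporary file only; nothing is visible downstream yet. */
    void write(String record) throws IOException {
        writer.write(record);
        writer.write('\n');
    }

    /** Pre-commit: flush buffered data to the temporary file and close it. */
    void preCommit() throws IOException {
        writer.close();
    }

    /** Commit: move the closed temporary file into the target directory. */
    Path commit() throws IOException {
        Files.createDirectories(targetDir);
        String name = tempFile.getFileName().toString().replace(".tmp", ".txt");
        return Files.move(tempFile, targetDir.resolve(name), StandardCopyOption.ATOMIC_MOVE);
    }

    /** Abort: close the writer if needed and discard the temporary file. */
    void abort() throws IOException {
        if (writer != null) {
            writer.close();
        }
        Files.deleteIfExists(tempFile);
    }
}
```

Readers of the target directory never see a half-written file, because a file only appears there after `commit()`. In a real job the transaction identifier would have to be part of the checkpointed state so that, after a failure between pre-commit and commit, recovery can decide whether to finish the move or delete the temporary file.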

4. How does Flink handle late data?

Reference answer: Flink's watermark and window mechanisms solve the out-of-order problem of streaming data. Data that arrives out of order because of delays is processed according to its event time. For late data, Flink provides its own solution: you specify an allowed lateness, and late data that arrives within this period is still accepted and processed:

Set the allowed lateness with allowedLateness(lateness: Time).

Save late data with sideOutputLateData(outputTag: OutputTag[T]).

Retrieve late data with DataStream.getSideOutput(tag: OutputTag[X]).
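How these three settings interact can be sketched as a small decision function in plain Java. `LateDataRouter` and the `Route` enum are hypothetical names. The boundary conditions follow the usual description of event-time windows (a window fires once the watermark reaches its last timestamp, and its state is kept for the allowed lateness after that), so treat them as an assumption rather than a statement about Flink internals.

```java
/** Plain-Java sketch of how a late element is routed (hypothetical class). */
final class LateDataRouter {
    enum Route { ON_TIME, LATE_BUT_ACCEPTED, SIDE_OUTPUT }

    /**
     * Decides what happens to an element that belongs to the window ending at
     * windowEndMs (exclusive), given the current watermark and allowed lateness.
     */
    static Route route(long windowEndMs, long watermarkMs, long allowedLatenessMs) {
        long maxTimestamp = windowEndMs - 1; // last timestamp inside the window
        if (watermarkMs < maxTimestamp) {
            return Route.ON_TIME;            // window has not fired yet
        }
        if (watermarkMs < maxTimestamp + allowedLatenessMs) {
            return Route.LATE_BUT_ACCEPTED;  // window fired, state still kept
        }
        return Route.SIDE_OUTPUT;            // window state already cleaned up
    }
}
```

An element in the second case causes the window result to be updated, while an element in the third case only reaches the job if a side output for late data has been configured.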

5. Do you understand Flink's restart strategies?

Reference answer: Flink supports different restart strategies, which control how a job is restarted after a failure:

(1) Fixed-delay restart strategy

The fixed-delay restart strategy tries to restart the job a given number of times. If the maximum number of restart attempts is exceeded, the job ultimately fails. Between two consecutive restart attempts, the strategy waits a fixed amount of time.

(2) Failure-rate restart strategy

The failure-rate restart strategy restarts the job after a failure, but once the failure rate (failures per time interval) is exceeded, the job is ultimately considered failed. Between two consecutive restart attempts, the strategy waits a fixed amount of time.

(3) No-restart strategy

The job fails directly, with no restart attempt.
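The difference between the first two strategies can be sketched in plain Java. `RestartSketch` and its nested classes are hypothetical and simplified: the wait between attempts is left out, and the exact boundary at which Flink gives up may differ from the rule assumed here (restart while the failures inside the interval do not exceed the limit).

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Plain-Java sketch of restart decisions (hypothetical classes, not Flink APIs). */
final class RestartSketch {
    /** Fixed delay: permit a bounded number of restarts in total. */
    static final class FixedDelay {
        private final int maxAttempts;
        private int failures;

        FixedDelay(int maxAttempts) {
            this.maxAttempts = maxAttempts;
        }

        /** Records a failure and returns true if the job may be restarted. */
        boolean onFailure() {
            failures++;
            return failures <= maxAttempts;
        }
    }

    /** Failure rate: permit restarts while recent failures stay within a limit. */
    static final class FailureRate {
        private final int maxFailuresPerInterval;
        private final long intervalMs;
        private final Deque<Long> recentFailures = new ArrayDeque<>();

        FailureRate(int maxFailuresPerInterval, long intervalMs) {
            if (intervalMs <= 0) {
                throw new IllegalArgumentException("interval must be positive");
            }
            this.maxFailuresPerInterval = maxFailuresPerInterval;
            this.intervalMs = intervalMs;
        }

        /** Records a failure at nowMs and returns true if the job may be restarted. */
        boolean onFailure(long nowMs) {
            recentFailures.addLast(nowMs);
            // Forget failures that have fallen out of the measurement interval.
            while (nowMs - recentFailures.peekFirst() >= intervalMs) {
                recentFailures.removeFirst();
            }
            return recentFailures.size() <= maxFailuresPerInterval;
        }
    }
}
```

The fixed-delay counter never resets, so a long-running job with occasional failures eventually uses up its attempts. The failure-rate variant forgets old failures and only gives up when failures cluster inside one interval.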

Continuous sharing is useful 、 valuable 、 Selected high-quality big data interview questions

We are committed to building the most comprehensive big data interview topic database in the whole network

边栏推荐

- How does Sister Feng change to ice?

- 6-6 batch sum (*)

- 安装mariadb 10.5.7(tar包安装)

- Summary of clustering methods

- 【先收藏,早晚用得到】100个Flink高频面试题系列(二)

- R语言 mice包 Error in terms.formula(tmp, simplify = TRUE) : ExtractVars里的模型公式不对

- 6-5 统计单词数量(文件)(*)

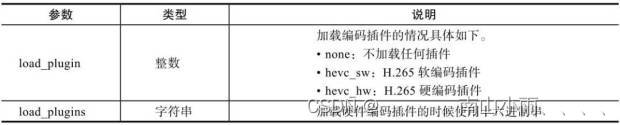

- ffmpeg CBR精准码流控制三个步骤

- Set object mapping + scene 6-face mapping + create space in threejs

- Typescipt Basics

猜你喜欢

MFSR:一种新的推荐系统多级模糊相似度量

Use of forcescan in SQL server and precautions

【先收藏,早晚用得到】100个Flink高频面试题系列(二)

Service学习笔记02-实战 startService 与bindService

ffmpeg硬编解码 Inter QSV

sql server中关于FORCESCAN的使用以及注意项

【Mysql】redo log,undo log 和binlog详解(四)

04_ Feature engineering feature selection

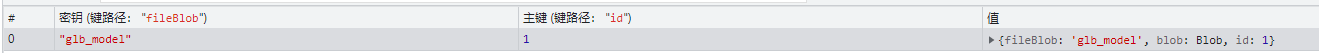

Threejs uses indexeddb cache to load GLB model

CLP information -5 keywords to see the development trend of the financial industry in 2022

随机推荐

R语言 mice包 Error in terms.formula(tmp, simplify = TRUE) : ExtractVars里的模型公式不对

【深度学习基础】神经网络的学习(3)

【先收藏,早晚用得到】49个Flink高频面试题系列(一)

av_ read_ The return value of frame is -5 input/output error

ArrayList collection, object array

Service learning notes 04 other service implementation methods and alternative methods

which is not functionally dependent on columns in GROUP BY clause; this is incompatible with sql_ mod

Intelligent overall legend, legend of wiring, security, radio conference, television, building, fire protection and electrical diagram [transferred from wechat official account weak current classroom]

Mathematical foundations of information security Chapter 3 - finite fields (II)

Rtsp/onvif protocol easynvr video platform arm version cross compilation process and common error handling

Merge K ascending linked lists ---2022/02/26

CentOS7服务器配置(四)---安装redis

tidb-cdc同步mysql没有的特性到mysql时的处理

Typescipt Basics

【Mysql】redo log,undo log 和binlog详解(四)

6-2 多个整数的逆序输出-递归

合并K个升序链表---2022/02/26

sql server中移除key lookup书签查找

CLP information -5 keywords to see the development trend of the financial industry in 2022

Use of forcescan in SQL server and precautions