Word vector - demo

2022-07-31 06:15:00 【Young_win】

word2vec and BERT are both landmark works in language representation. The former represents the word embedding paradigm, and the latter represents the pre-training paradigm. Besides modeling polysemy, a good language representation also needs to capture the complex characteristics of words, including syntax and semantics.

word2vec

Starting from the distributional hypothesis (the meaning of a word is given by the words that frequently appear in its context), word2vec ends up with a look-up table that maps each word to a single dense vector.

The representation is static: a word gets the same vector regardless of context, so polysemy cannot be handled.
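As a minimal sketch of why a look-up table cannot separate word senses, the toy table below (vector values and the `embed` helper are made up for illustration, not taken from a trained model) returns the same vector for "bank" in two different contexts:

```python
# Toy look-up table: every word maps to exactly one vector.
# The numbers are invented for illustration only.
embedding_table = {
    "bank": [0.2, -0.1, 0.7],
    "river": [0.1, 0.4, 0.6],
    "money": [0.9, -0.3, 0.1],
}


def embed(sentence):
    """Return one vector per known token; the context is never consulted."""
    return [embedding_table[tok] for tok in sentence.split() if tok in embedding_table]


# "bank" is the second token in both sentences.
river_sense = embed("river bank")[1]
money_sense = embed("money bank")[1]

# The two senses of "bank" are indistinguishable in a static table.
print(river_sense == money_sense)
```

Whatever surrounds the word, the look-up returns the same row, which is the limitation the paragraph above describes.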

BERT

BERT uses the Transformer encoder as the feature extractor and is trained on a large-scale corpus with a denoising objective such as masked language modeling (MLM). The resulting representations are very helpful for downstream tasks.

ELMo, BERT and other pre-training methods learn a deep network, so after pre-training, features at different levels can be read from different layers. Features from the higher layers reflect more abstract, context-dependent information, while features from the lower layers are more concerned with syntax-level information.

word2vec is a bag-of-words (BOW) model and does not model position. The embeddings of word2vec are the model itself: whether CBOW or skip-gram is used, there are no parameters other than the embeddings.

word2vec vocabularies are generally huge, often around one million words. For BERT-style models, because of the constraints of the MLM task and the popularity of BPE tokenizers, the vocabulary for a single language is generally 30,000 to 50,000 tokens.

BERT-style models generally model tokens and sentences together, which is also an advantage of the Transformer. The representation of each token in a layer is the result of attending over all tokens in the previous layer, so obtaining a sentence representation is simple: use a special token such as [CLS], which also draws on the full capacity of the model.

word2vec has no particularly good way to obtain sentence-level representations directly. The common practice is to average all word vectors, but this is not equivalent to explicitly modeling the sentence representation. In terms of objective, both word2vec objectives, CBOW and skip-gram, are simpler than BERT's MLM. Of the two, CBOW is closer to BERT's objective: CBOW can be regarded as an MLM task that masks only one token.
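The averaging practice mentioned above can be sketched as follows. The two-dimensional vectors and the helper names `mean_pool` and `sentence_vector` are hypothetical; the point is only that mean pooling ignores word order, so two sentences with opposite meanings receive the same representation:

```python
def mean_pool(vectors):
    """Average a list of equal-length vectors component by component."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Invented toy word vectors, not the output of a trained model.
table = {"dog": [1.0, 0.0], "bites": [0.0, 1.0], "man": [1.0, 1.0]}


def sentence_vector(sentence):
    """Sentence representation as the mean of its word vectors."""
    return mean_pool([table[tok] for tok in sentence.split()])


a = sentence_vector("dog bites man")
b = sentence_vector("man bites dog")

# Word order is lost: both sentences collapse to the same vector.
print(a == b)
```

This is one concrete way in which averaging falls short of a representation that models the sentence itself.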

From a modeling point of view, there is no essential difference between BERT and word2vec (in CBOW mode): both use masked language modeling as the training task. The main advance is that BERT successfully applies the Transformer, a deep network with strong expressive power that is easy to optimize, to this task. If each input sample masked only one word during BERT training, BERT would be somewhat like a CBOW model with a sliding window of 512, except that the context representation is computed by the Transformer encoder rather than by simply adding context embeddings.
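To make the "CBOW as a one-mask MLM" reading concrete, here is a small sketch of a CBOW forward pass in plain Python. The five-word vocabulary, the random parameters, and the function names are assumptions for illustration; the sketch uses separate input and output embedding tables, as the original word2vec does, and a full softmax for readability:

```python
import math
import random

rng = random.Random(0)
vocab = ["the", "cat", "sat", "on", "mat"]
dim = 4

# Hypothetical, randomly initialised parameters: nothing but embeddings.
in_emb = {w: [rng.uniform(-1, 1) for _ in range(dim)] for w in vocab}
out_emb = {w: [rng.uniform(-1, 1) for _ in range(dim)] for w in vocab}


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cbow_predict(context):
    """Predict the masked centre word from the sum of context embeddings."""
    h = [sum(in_emb[w][i] for w in context) for i in range(dim)]
    scores = [sum(h[i] * out_emb[w][i] for i in range(dim)) for w in vocab]
    return dict(zip(vocab, softmax(scores)))


# "the cat [MASK] on mat": exactly one position is hidden and predicted.
probs = cbow_predict(["the", "cat", "on", "mat"])
loss = -math.log(probs["sat"])  # cross-entropy for the true centre word
print(round(sum(probs.values()), 6), loss > 0)
```

Replacing the plain sum that builds `h` with the output of a Transformer encoder at the masked position is, in this reading, the step from CBOW to BERT.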

The word vector at each position in BERT passes through a multi-layer Transformer. In each layer, self-attention combines the vector at each position with the vectors at all other positions, so the final vector at each position integrates information from every position. With word2vec, what we get is just a parameter matrix of the network, and the representation of a word does not change with the sentence it appears in. The improvement of BERT over word2vec is therefore that the vectors output by the stacked Transformer layers at each position carry contextual information, and that BERT can model dependencies between distant words more directly than earlier RNN-based models. word2vec has neither property.
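The effect of self-attention on a word vector can be illustrated with a heavily simplified sketch: a single attention step with no learned query/key/value projections, no positional encoding, and invented two-dimensional input vectors. It is not BERT's actual layer, only the mixing step the paragraph above describes:

```python
import math

# Invented static input vectors, one per word.
table = {"bank": [1.0, 0.0], "river": [0.0, 1.0], "money": [1.0, 1.0]}


def self_attention(vectors):
    """Scaled dot-product attention with identity projections (a simplification)."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        weights = [w / z for w in weights]
        # Each output is a weighted mix of the vectors at all positions.
        outputs.append(
            [sum(weights[j] * vectors[j][i] for j in range(len(vectors))) for i in range(d)]
        )
    return outputs


bank_in_river = self_attention([table["river"], table["bank"]])[1]
bank_in_money = self_attention([table["money"], table["bank"]])[1]

# The same input vector for "bank" yields different outputs in different contexts.
print(bank_in_river != bank_in_money)
```

Even this stripped-down version shows the contrast with a look-up table: the output for "bank" depends on which other words are in the sentence.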

In terms of training, BERT uses a denoising setup and predicts the tokens at randomly masked positions. Because it works on character or subword units, whose number is smaller than the number of words, BERT predicts masked tokens with a full softmax and trains with cross-entropy loss. word2vec generally uses a sliding window to predict the middle word from the surrounding words (CBOW), or the surrounding words from the middle word (skip-gram). Because it uses whole words as basic units, the vocabulary is relatively large, so word2vec is generally trained with hierarchical softmax or negative sampling. Setting computation aside, it is hard to say whether a full softmax would work better than negative sampling.
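The difference in cost between the two objectives can be sketched as below. The vocabulary size, the random vectors, and the function names are assumptions for illustration, and negatives are drawn uniformly for brevity, whereas word2vec samples them from a smoothed unigram distribution:

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def full_softmax_loss(h, out_emb, target):
    """Cross-entropy over the whole vocabulary: one score per vocabulary entry."""
    scores = {w: dot(h, v) for w, v in out_emb.items()}
    m = max(scores.values())
    log_z = m + math.log(sum(math.exp(s - m) for s in scores.values()))
    return log_z - scores[target]


def negative_sampling_loss(h, out_emb, target, negatives):
    """Binary logistic loss: one positive score plus a handful of negatives."""
    loss = -math.log(sigmoid(dot(h, out_emb[target])))
    for w in negatives:
        loss -= math.log(sigmoid(-dot(h, out_emb[w])))
    return loss


rng = random.Random(0)
vocab = [f"w{i}" for i in range(1000)]  # hypothetical vocabulary
out_emb = {w: [rng.uniform(-1, 1) for _ in range(8)] for w in vocab}
h = [rng.uniform(-1, 1) for _ in range(8)]  # stand-in for a context vector
target = "w42"
negatives = rng.sample([w for w in vocab if w != target], 5)

full = full_softmax_loss(h, out_emb, target)          # touches 1000 output vectors
sampled = negative_sampling_loss(h, out_emb, target, negatives)  # touches 6
print(f"full softmax: {full:.3f}, negative sampling: {sampled:.3f}")
```

With a vocabulary in the millions, the full softmax must score every entry per training example, which is why word2vec falls back on sampling or a hierarchical softmax, while a 30,000 to 50,000 token vocabulary keeps the full softmax affordable for BERT.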

From the user's point of view, word2vec is also a pre-trained model, whose parameters are the word vectors themselves. As a more powerful pre-trained model, BERT uses the Transformer to turn the words of a sentence into vectors with rich contextual information, can also produce sentence vectors, and is pre-trained more thoroughly.

Both BERT and word2vec can be used as components of other models, and BERT is the stronger of the two. The price of that strength is that BERT is computationally expensive, whereas word2vec is just a table look-up and very efficient. This limits the use of BERT in many applications that require real-time responses.

边栏推荐

猜你喜欢

随机推荐

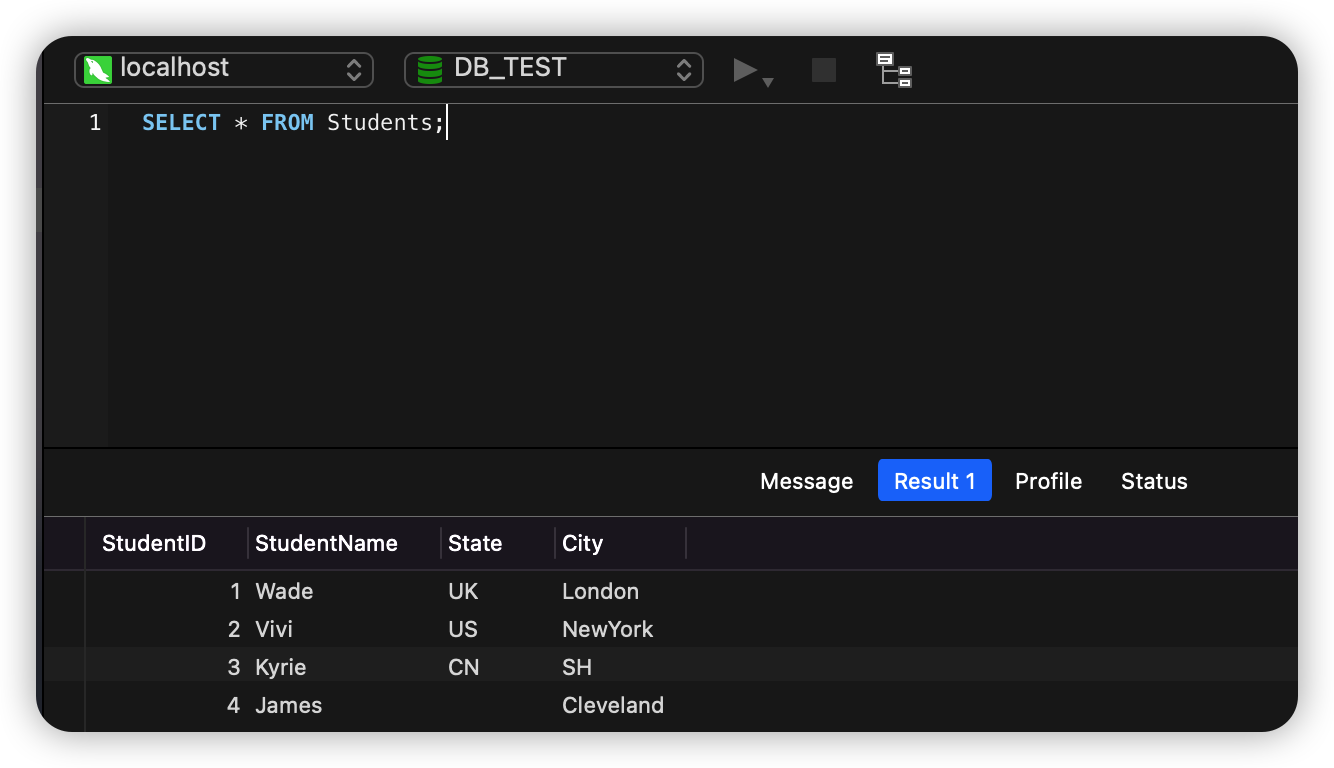

SQLite 查询表中每天插入的数量

Nmap的下载与安装

powershell statistics folder size

After unicloud is released, the applet prompts that the connection to the local debugging service failed. Please check whether the client and the host are under the same local area network.

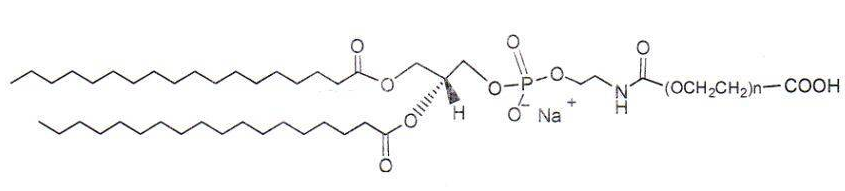

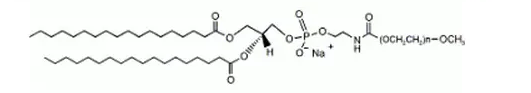

Cholesterol-PEG-Acid CLS-PEG-COOH 胆固醇-聚乙二醇-羧基修饰肽类化合物

MySQL 免安装版的下载与配置教程

【解决问题】RuntimeError: The size of tensor a (80) must match the size of tensor b (56) at non-singleton

quick-3.5 lua调用c++

cocos2d-x-3.2 image graying effect

softmax函数详解

Tencent Cloud GPU Desktop Server Driver Installation

random.randint函数用法

VS通过ODBC连接MYSQL(一)

Podspec verification dependency error problem pod lib lint , need to specify the source

Gradle sync failed: Uninitialized object exists on backward branch 142

深度学习知识点杂谈

sql add default constraint

MySQL 主从切换步骤

ERROR Error: No module factory availabl at Object.PROJECT_CONFIG_JSON_NOT_VALID_OR_NOT_EXIST ‘Error

quick-3.6源码修改纪录