
[Extensive reading of papers] Multimodal Attribute Extraction

2022-06-30 14:36:00 【Fish in the waves】

Introduction

Web pages hold knowledge that we would like to access quickly and efficiently. Because their content is diverse, we need methods that build knowledge bases from multimodal data and, more importantly, combine the evidence from the different modalities to extract the correct answer.

The paper introduces the task of multimodal attribute extraction. Attribute extraction from text has been well studied; this is the first work to combine multiple data modalities for attribute extraction.

We propose a large dataset, Multimodal Attribute Extraction (MAE). To assess the difficulty of the task and the dataset, we run a human evaluation study on Mechanical Turk, which shows that all of the available modalities contribute to detecting values. We also train several machine learning models on the dataset and report the results. We observe that a simple most-common-value classifier, which always predicts the most frequent value for a given attribute, is a very hard baseline for more complex models to beat (33% accuracy). In our current experiments, we could not train an image-only classifier that outperforms this simple model, even with modern neural architectures such as VGG-16 and Google's Inception-v3. A text-only classifier, however, does better (59% accuracy). We hope to obtain more accurate models in further research.
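To make the baseline concrete, here is a minimal sketch of a most-common-value classifier. The function names and the toy data are invented for illustration; only the idea (always predict the most frequent training value for the queried attribute) comes from the text above.

```python
from collections import Counter, defaultdict

def fit_most_common_value(pairs):
    """Map each attribute to its most frequent value in the (attribute, value) training pairs."""
    counts = defaultdict(Counter)
    for attribute, value in pairs:
        counts[attribute][value] += 1
    return {attribute: c.most_common(1)[0][0] for attribute, c in counts.items()}

def accuracy(model, pairs):
    """Fraction of (attribute, value) pairs whose value equals the baseline prediction."""
    if not pairs:
        return 0.0
    hits = sum(1 for attribute, value in pairs if model.get(attribute) == value)
    return hits / len(pairs)

# Toy data, invented for illustration only (not taken from MAE).
train = [("color", "black"), ("color", "black"), ("color", "red"), ("size", "M")]
test = [("color", "black"), ("color", "red"), ("size", "M"), ("size", "L")]

baseline = fit_most_common_value(train)
print(baseline, accuracy(baseline, test))
```

The baseline ignores the product entirely, which is why it is a useful sanity check: any model that looks at the text or images should be expected to beat it.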

Multimodal product attribute extraction

Because a multimodal attribute extractor must be able to return attribute values that appear in either images or text, we cannot treat the problem as a tagging problem, as existing attribute extraction methods do. Instead, we define the problem as follows: given a product i and a query attribute a, extract the corresponding value v from the evidence provided for i, namely its text description (D_i) and its set of images (I_i). For example, Figure 1 shows the images and description of a product together with some attributes and values of interest. For training, we are given a set of product items I and, for each item i ∈ I, its text description D_i, its images I_i, and a set of attribute-value pairs A_i = {⟨a_j^i, v_j^i⟩}_j. In general, the products seen at query time will not be in I, and we do not assume any fixed ontology for products, attributes, or values. We evaluate performance by the accuracy of the predicted value against the observed value; since there may be multiple correct values, we also report hits@k.
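Since several values can be correct for one query, a top-k metric (commonly written hits@k) is a natural complement to accuracy. The sketch below assumes the model returns a ranked list of candidate values per query; the function name and the toy rankings are hypothetical.

```python
def hits_at_k(ranked_predictions, gold_values, k):
    """Fraction of queries whose gold value appears among the top-k ranked predictions."""
    if not gold_values:
        return 0.0
    hits = sum(
        1
        for ranked, gold in zip(ranked_predictions, gold_values)
        if gold in ranked[:k]
    )
    return hits / len(gold_values)

# Toy rankings, invented for illustration: one ranked list per (product, attribute) query.
ranked = [["black", "red", "blue"], ["M", "L", "S"], ["cotton", "wool", "silk"]]
gold = ["red", "M", "silk"]

for k in (1, 2, 3):
    print(k, hits_at_k(ranked, gold, k))
```

With k = 1 the metric reduces to plain accuracy of the top prediction.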

The MAE dataset

Each item consists of a text description, a set of product images, and an open-schema table of product attributes. This information was obtained through the Diffbot API, which uses a machine-learning-based extractor that relies on the visual, textual, and layout features of the fully rendered product web page. For example, attribute-value pairs are automatically extracted from tables on the product page. Because the collection process is automated, the dataset contains some noise. For example, the same attribute can be written in many different ways (such as several variants of "length"). We use regular-expression-based preprocessing to normalize the most common attributes, but we leave the values un-normalized. We also remove any attribute-value pair that meets any of the following frequency conditions: the attribute occurs fewer than 500 times, the value occurs fewer than 50 times, or the attribute's most common value accounts for more than 80% of its occurrences. The data is split into training, validation, and test sets using an 80-10-10 split.
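The frequency filter and the split can be sketched as follows. This is an illustration under assumptions, not the authors' preprocessing code: the text does not say whether value frequency is counted globally or per attribute (counted globally here), nor how the split is randomized (a seeded shuffle here). All names are hypothetical.

```python
import random
from collections import Counter, defaultdict

def filter_pairs(pairs, min_attr=500, min_value=50, max_share=0.8):
    """Drop (attribute, value) pairs that meet any of the three frequency conditions."""
    attr_counts = Counter(a for a, _ in pairs)
    value_counts = Counter(v for _, v in pairs)  # assumption: counted across all attributes
    values_per_attr = defaultdict(Counter)
    for a, v in pairs:
        values_per_attr[a][v] += 1
    kept = []
    for a, v in pairs:
        top_count = values_per_attr[a].most_common(1)[0][1]
        too_skewed = top_count / attr_counts[a] > max_share
        if attr_counts[a] < min_attr or value_counts[v] < min_value or too_skewed:
            continue
        kept.append((a, v))
    return kept

def split_80_10_10(items, seed=0):
    """Shuffle with a fixed seed, then cut into 80% / 10% / 10%."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10
    n_valid = len(items) // 10
    return items[:n_train], items[n_train:n_train + n_valid], items[n_train + n_valid:]

# Toy data with small thresholds so that the effect is visible.
pairs = (
    [("color", "black")] * 3 + [("color", "red")] * 2
    + [("size", "M")] * 9 + [("size", "L")]
    + [("rare", "x")]
)
kept = filter_pairs(pairs, min_attr=2, min_value=2, max_share=0.8)
train, valid, test = split_80_10_10(range(100))
```

In the toy run, "size" is dropped because one value covers 90% of its occurrences, and "rare" is dropped because the attribute appears only once.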

Mechanical Turk evaluation

Because attributes and values are extracted as they are displayed on the website, there is no guarantee that an attribute-value pair actually appears in the product images or the text description. We ran a study on Amazon Mechanical Turk to determine how much this problem affects the dataset, and to collect a gold evaluation set of attribute-value pairs that are guaranteed to be supported by the context information. Mechanical Turk workers are shown the images and text description of a product and asked whether the information provided can be used to predict the value of a given product attribute (chosen from a list of options) and, if so, which information they used. We use majority voting to reduce noise in these annotations.
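Majority voting over worker answers can be sketched like this. The label names and the tie handling (return None when no answer has a strict majority) are assumptions; the text does not describe how ties were resolved.

```python
from collections import Counter

def majority_vote(labels):
    """Return the label given by a strict majority of annotators, or None if there is none."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count > len(labels) / 2 else None

# Hypothetical worker answers to "which evidence reveals the value?"
print(majority_vote(["text", "text", "image"]))  # strict majority for "text"
print(majority_vote(["text", "image"]))          # tie, so no label is returned
```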

The (preliminary) results of this study show that only 42% of the attribute-value pairs can be found using the context information. Of these, 35% can be found in the product images and 70% in the text description. So although the text description is the most useful modality for attribute extraction, the images still contain useful information.

Multimodal fusion model

We build a new extraction model consisting of three separate modules:

(1) An encoding module, which uses neural networks to jointly embed the query, the description, and the images into a common latent space.

(2) A fusion module, which combines these embedding vectors into a single dense vector using an attribute-specific attention mechanism.

(3) A similarity-based value decoder, which produces the final value prediction.

As shown in the figure :

Encoding module

We learn a dense embedding for each attribute and each value: attribute a is represented by a k-dimensional vector c_a and value v by a vector c_v. These vectors are learned during training.
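The value embeddings are what a similarity-based decoder compares the fused representation against. The post does not state which similarity function is used, so the cosine similarity below is an assumption, and the two-dimensional vectors are invented purely for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0

def decode(fused, value_embeddings):
    """Rank candidate values by similarity to the fused vector, best first."""
    return sorted(
        value_embeddings,
        key=lambda name: cosine(fused, value_embeddings[name]),
        reverse=True,
    )

# Hypothetical value embeddings c_v and a hypothetical fused vector c.
value_embeddings = {"black": [1.0, 0.0], "red": [0.0, 1.0], "blue": [-1.0, 0.0]}
ranking = decode([0.9, 0.1], value_embeddings)
print(ranking)
```

Returning a full ranking rather than a single value is convenient because the same output can be scored with both accuracy and a top-k metric.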

Text description: the text is first tokenized with the Stanford tokenizer, and all words are then embedded by running the GloVe algorithm on all of the descriptions in the training data. Using Kim's CNN architecture, which consists of a CNN layer, max pooling, and a fully connected layer, these pretrained embeddings are combined into a single dense vector c_D for the description.

Images: image embeddings are produced by a convolutional neural network. We take the output of the fc7 layer of a pretrained 16-layer VGG model (after the ReLU nonlinearity is applied) as an intermediate image representation. We then feed this output through a fully connected layer to obtain a k-dimensional embedding for each image. The final embedding is produced by max pooling over the image embeddings.
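The final pooling step turns a variable number of per-image embeddings into one fixed-size vector. A minimal sketch, assuming element-wise max pooling over plain Python lists (the function name and numbers are illustrative):

```python
def max_pool(embeddings):
    """Element-wise maximum over a non-empty list of equal-length vectors."""
    if not embeddings:
        raise ValueError("need at least one image embedding")
    return [max(column) for column in zip(*embeddings)]

# Two hypothetical 3-dimensional image embeddings for the same product.
pooled = max_pool([[0.1, 0.9, -0.3], [0.4, 0.2, 0.0]])
print(pooled)
```

Because the maximum is taken per dimension, the result does not depend on the order or the number of images.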

Fusion

To fuse the attribute embedding with the text and image embeddings, we experiment with two different techniques:

- Concat, which concatenates the three embeddings and feeds them through a fully connected layer to produce the fused encoding c.

- GMU (gated multimodal unit), which first passes the attribute, text, and image embeddings through fully connected layers independently before fusing them.
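The two fusion strategies can be sketched in plain Python. The concatenation variant follows the description above directly. The gated variant is only an assumption: it uses the common two-input form of a gated multimodal unit, h = z * tanh(W_d c_D) + (1 - z) * tanh(W_i c_I) with z = sigmoid(W_z [c_D; c_I]), and it leaves out the attribute embedding because the post does not show how it enters the gate. All weights are hypothetical.

```python
import math

def dense(weights, bias, x):
    """Fully connected layer; weights holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def concat_fusion(c_a, c_d, c_i, weights, bias):
    """Concatenate the three embeddings, then apply one fully connected layer."""
    return dense(weights, bias, c_a + c_d + c_i)

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def gated_fusion(c_d, c_i, w_d, w_i, w_z):
    """Two-input gated unit (assumed form): a learned gate z mixes the two modalities."""
    zero = [0.0] * len(w_d)
    h_d = [math.tanh(t) for t in dense(w_d, zero, c_d)]
    h_i = [math.tanh(t) for t in dense(w_i, zero, c_i)]
    z = [sigmoid(t) for t in dense(w_z, zero, c_d + c_i)]
    return [g * a + (1.0 - g) * b for g, a, b in zip(z, h_d, h_i)]

# Tiny one-dimensional example with hand-picked weights.
fused_concat = concat_fusion([1.0], [2.0], [3.0], [[1.0, 1.0, 1.0]], [0.5])
fused_gated = gated_fusion([1.0], [-1.0], [[1.0]], [[1.0]], [[0.0, 0.0]])
print(fused_concat, fused_gated)
```

The design difference is that concatenation weights every modality the same way for every input, while a gate lets the model lean on the text for one product and on the images for another.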

Experiments

The experimental results show that, on this dataset, the proposed model does not perform as well as plain concatenation.

边栏推荐

- On simple code crawling Youdao translation_ 0's problem (to be solved)

- Getting started with shell Basics

- PHP multidimensional array sorting

- The first dark spring cup dnuictf

- [buuctf] [actf2020 freshman competition]exec1

- remote: Support for password authentication was removed on August 13, 2021. Please use a personal ac

- Detailed explanation of settimeout() and setinterval()

- Talk about Vue's two terminal diff algorithm, analysis of the rendering principle of the mobile terminal, and whether the database primary key must be self incremented? What scenarios do not suggest s

- Upgrade composer self update

- Problem: wechat developer tool visitor mode cannot use this function

猜你喜欢

LIS error: this configuration section cannot be used in this path

![[geek challenge 2019] PHP problem solving record](/img/bf/038082e8ee1c91eaf6e35add39f760.jpg)

[geek challenge 2019] PHP problem solving record

PS dynamic drawing

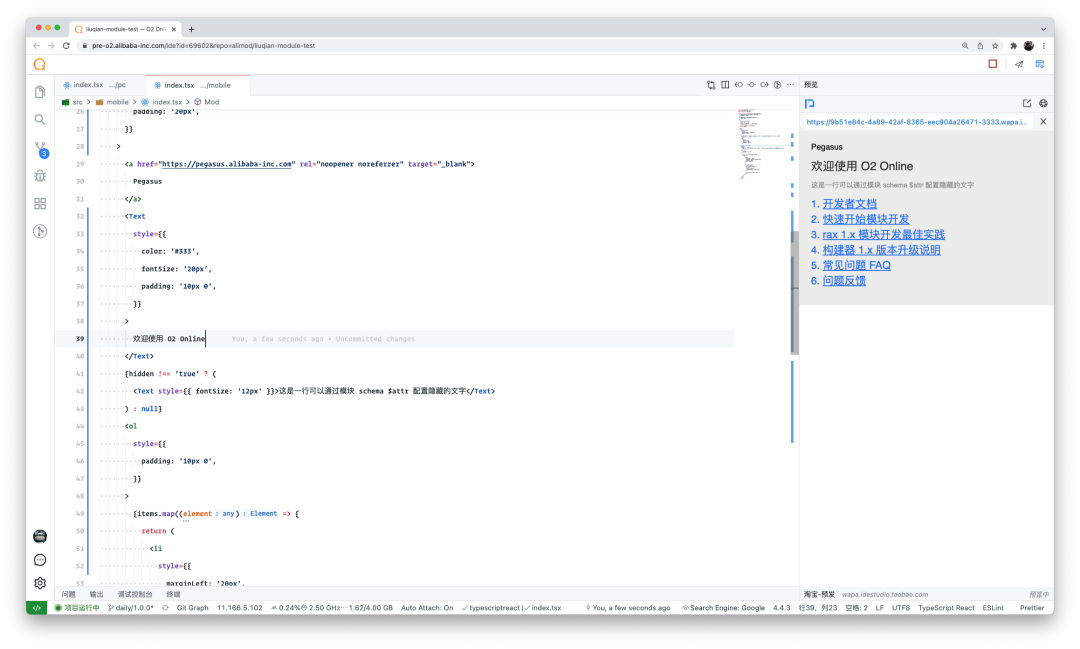

Heavyweight: the domestic ide was released, developed by Alibaba, and is completely open source!

Geoffreyhinton: my 50 years of in-depth study and Research on mental skills

Error $(...) size is not a function

Introduction to the construction and development of composer private warehouse

Jetpack compose for perfect screen fit

ThinkPHP v3.2 comment annotation injection write shell

2021-07-14 mybaitsplus

随机推荐

Is it troublesome for CITIC futures to open an account? Is it safe? How much is the handling charge for opening an account for futures? Can you offer a discount

Why does the folder appear open in another program

Pytoch viewing model parameter quantity and calculation quantity

I love network security for new recruitment assessment

Jetpack compose for perfect screen fit

Laravel artist command error

@Role of ResponseBody

Why is the resolution of the image generated by PHP GD library 96? How to change it to 72

MySQL back to table query optimization

Go common lock mutex and rwmutex

PHP generate images into Base64

Details of gets, fgetc, fgets, Getc, getchar, putc, fputc, putchar, puts, fputs functions

go time. after

@component使用案例

How to realize selective screen recording for EV screen recording

Learn about data kinship JSON format design from sqlflow JSON format

DB2 SQL Error: SQLCODE=-206, SQLSTATE=42703

[buuctf] [geek challenge 2019] secret file

The first dark spring cup dnuictf

LeetCode_ Stack_ Medium_ 227. basic calculator II (without brackets)