Paper Reproduction: The Super-Resolution Network VDSR

2022-08-04 20:31:00 【GIS与Climate】

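VDSR stands for Very Deep Super Resolution, that is, a very deep super-resolution network (compared with the 3-layer structure of SRCNN). It has 20 layers in total; for the paper, see reference 【1】.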

Abstract

We present a highly accurate single-image super-resolution (SR) method. Our method uses a very deep convolutional network inspired by VGG-net used for ImageNet classification. We find increasing our network depth shows a significant improvement in accuracy. Our final model uses 20 weight layers. By cascading small filters many times in a deep network structure, contextual information over large image regions is exploited in an efficient way. With very deep networks, however, convergence speed becomes a critical issue during training. We propose a simple yet effective training procedure. We learn residuals only and use extremely high learning rates (10^4 times higher than SRCNN) enabled by adjustable gradient clipping. Our proposed method performs better than existing methods in accuracy and visual improvements in our results are easily noticeable.

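In short, the authors follow VGG and propose a deep network with 20 layers, use residual learning to speed up convergence, and outperform the earlier SRCNN on the super-resolution task.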
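The "adjustable gradient clipping" mentioned in the abstract can be sketched as follows. As I read the paper, gradients are clipped to [-theta/lr, theta/lr], so the clipping range widens as the learning rate decays. The function name `clip_gradients_adjustable` and the values of `theta` and `lr` are my own assumptions for illustration, not the authors' settings.

```python
import torch
import torch.nn as nn


def clip_gradients_adjustable(parameters, theta: float, lr: float) -> float:
    """Clip every gradient element to [-theta/lr, theta/lr] and return the bound."""
    bound = theta / lr
    torch.nn.utils.clip_grad_value_(parameters, clip_value=bound)
    return bound


# Toy demonstration on a single conv layer (theta and lr are illustrative values).
torch.manual_seed(0)
layer = nn.Conv2d(1, 1, kernel_size=3, padding=1)
optimizer = torch.optim.SGD(layer.parameters(), lr=0.1, momentum=0.9)

x = torch.randn(1, 1, 8, 8)
loss = (layer(x) * 1000.0).pow(2).mean()  # deliberately large loss, so large gradients
optimizer.zero_grad()
loss.backward()

lr = optimizer.param_groups[0]["lr"]
bound = clip_gradients_adjustable(layer.parameters(), theta=0.01, lr=lr)
max_grad = max(p.grad.abs().max().item() for p in layer.parameters())
optimizer.step()
```

Because the bound is tied to the current learning rate, the product of learning rate and clipped gradient stays within theta, which is what makes a very high initial learning rate usable.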

Network architecture

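According to the authors, the main drawbacks of SRCNN are:

- it only captures information from small image regions (a limitation of the input patch it sees);
- it converges too slowly;
- it only works for a single scale (that is, a single upscaling factor).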
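To make the "small region" point concrete, the receptive field of a stack of stride-1 convolutions is 1 plus the sum of (kernel size - 1) over all layers. The sketch below assumes the common 9-1-5 kernel configuration for SRCNN and twenty 3x3 layers for VDSR; the helper name `receptive_field` is my own.

```python
def receptive_field(kernel_sizes):
    """Receptive field (pixels per side) of stacked stride-1 convolutions."""
    rf = 1
    for k in kernel_sizes:
        rf += k - 1  # each layer widens the field by (kernel size - 1)
    return rf


srcnn_rf = receptive_field([9, 1, 5])   # assumed SRCNN 9-1-5 configuration
vdsr_rf = receptive_field([3] * 20)     # twenty 3x3 layers
print(srcnn_rf, vdsr_rf)  # prints: 13 41
```

Under these assumptions each output pixel of VDSR sees a 41x41 neighbourhood, versus 13x13 for SRCNN.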

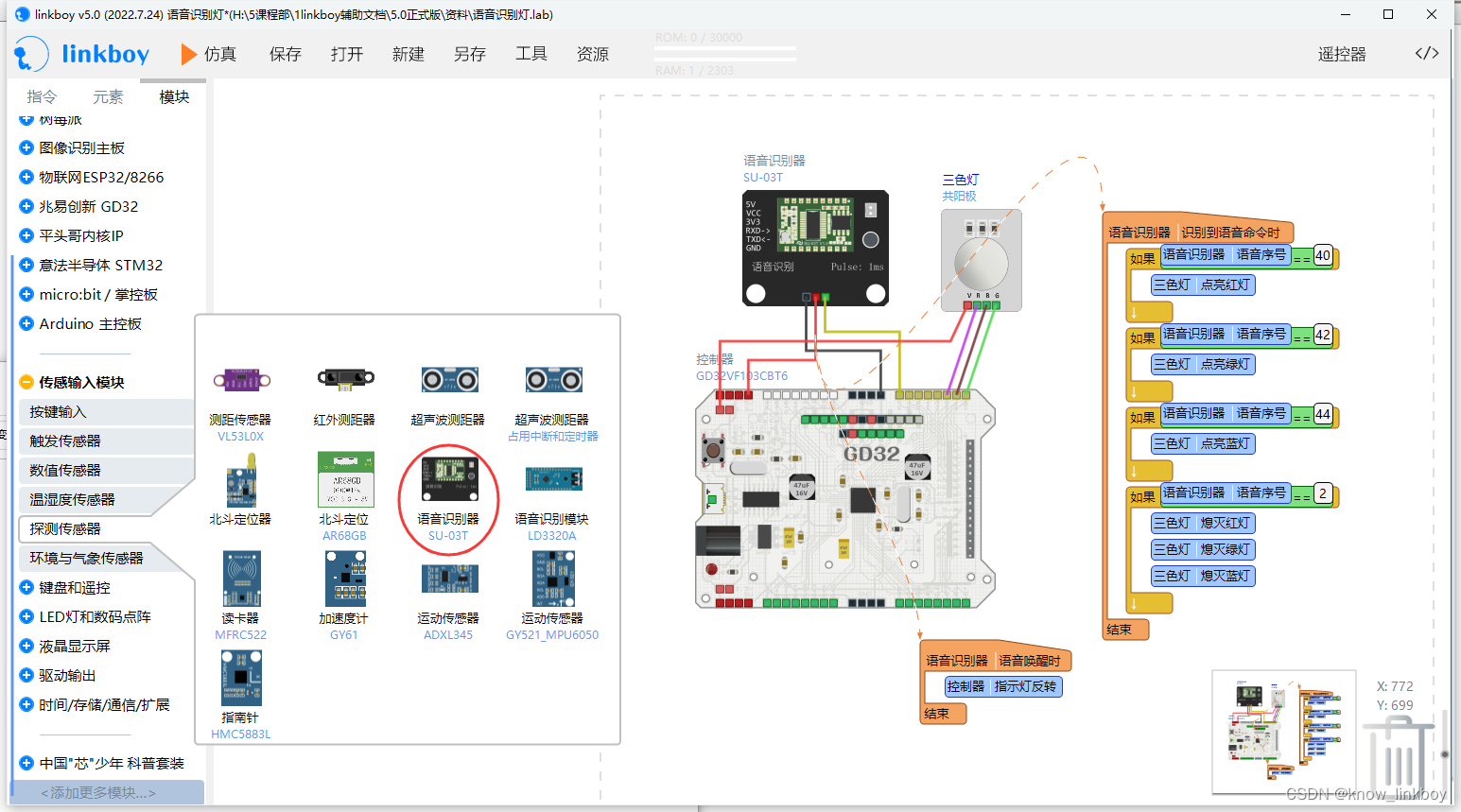

VDSR网络的架构图如下所示:

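The main differences from SRCNN are:

- the input and output have the same size (achieved through padding);
- every layer uses the same learning rate.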
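The effect of padding on the output size can be checked with a minimal sketch. The 32x32 input and the single-channel layers are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

x = torch.rand(1, 1, 32, 32)  # dummy single-channel image batch

no_pad = nn.Conv2d(1, 1, kernel_size=3, padding=0)    # shrinks each side by 2
same_pad = nn.Conv2d(1, 1, kernel_size=3, padding=1)  # keeps the spatial size

shape_no_pad = tuple(no_pad(x).shape)
shape_same = tuple(same_pad(x).shape)
print(shape_no_pad, shape_same)
```

Without padding, every 3x3 layer removes a one-pixel border, so a deep stack would shrink the image considerably; with `padding=1` the size is preserved and the final addition with the input is well defined.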

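The network can be divided into the following parts:

1. the LR image is first interpolated to obtain the ILR (interpolated low-resolution) image;
2. the feature-extraction part in the middle (convolution layers + activation layers), whose output is in fact the residual information;
3. the learned residual is added to the interpolated ILR image to obtain the HR image.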
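The first and last steps can be sketched as below. Bicubic interpolation is my assumption (the text above only says "interpolation"), `make_ilr` is a hypothetical helper name, and the zero residual is only a stand-in for what a trained network would predict.

```python
import torch
import torch.nn.functional as F


def make_ilr(lr_image: torch.Tensor, scale: int) -> torch.Tensor:
    """Upsample an (N, C, H, W) LR batch to the target size (bicubic, assumed)."""
    return F.interpolate(lr_image, scale_factor=scale, mode="bicubic", align_corners=False)


lr_image = torch.rand(1, 1, 16, 16)   # dummy LR batch
ilr = make_ilr(lr_image, scale=2)     # step 1: interpolate to the target size

# Stand-in for the residual predicted by the middle layers (step 2).
residual = torch.zeros_like(ilr)

hr_estimate = ilr + residual          # step 3: add the residual to the ILR image
```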

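Code

With the structure above in mind, the network can be assembled like building blocks. Only a few details need attention:

- the middle feature-extraction layers are 3x3x64, that is, a kernel size of 3 and 64 filters;
- every layer needs padding so that the input and output sizes stay the same;
- every convolution layer is followed by a ReLU activation layer.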

```python
import torch.nn as nn


class VDSR(nn.Module):
    def __init__(self, in_channels) -> None:
        super().__init__()
        # Block 1: input convolution, maps in_channels to 64 feature maps.
        self.block1 = nn.Sequential(
            nn.Conv2d(in_channels=in_channels, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
        )
        # Block 2: ten identical 3x3x64 convolution + ReLU pairs.
        layers = []
        for _ in range(10):
            layers += [nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1), nn.ReLU()]
        self.block2 = nn.Sequential(*layers)
        # Block 3: output convolution, maps back to in_channels (no activation).
        self.block3 = nn.Sequential(
            nn.Conv2d(64, in_channels, kernel_size=3, stride=1, padding=1)
        )

    def forward(self, x):
        y = self.block1(x)
        y = self.block2(y)
        y = self.block3(y)
        # Global residual connection: add the predicted residual to the input.
        y = x + y
        return y
```

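Two notes:

- The number of layers above is not right. I wrote it casually, so the code has only 12 convolution layers, whereas the paper uses 20. The essence is unchanged, though: the network still consists of the same three blocks.
- Remember to interpolate the data to the target size before feeding it into the network.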
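For a depth that matches the paper, the middle block can be built in a loop. This is a minimal sketch and not the authors' code: the class name `VDSR20` and the parameters `num_layers` and `features` are my own, and weight initialisation is left at the PyTorch defaults.

```python
import torch
import torch.nn as nn


class VDSR20(nn.Module):
    """VDSR-style network with a configurable number of weight layers (hypothetical name)."""

    def __init__(self, in_channels: int = 1, num_layers: int = 20, features: int = 64) -> None:
        super().__init__()
        if num_layers < 2:
            raise ValueError("num_layers must be at least 2")
        # First layer: in_channels -> features.
        layers = [nn.Conv2d(in_channels, features, kernel_size=3, padding=1), nn.ReLU()]
        # Middle layers: (num_layers - 2) identical conv + ReLU pairs.
        for _ in range(num_layers - 2):
            layers += [nn.Conv2d(features, features, kernel_size=3, padding=1), nn.ReLU()]
        # Last layer: features -> in_channels, no activation.
        layers.append(nn.Conv2d(features, in_channels, kernel_size=3, padding=1))
        self.body = nn.Sequential(*layers)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # The body predicts the residual, which is added to the interpolated input.
        return x + self.body(x)


model = VDSR20(in_channels=1)
num_convs = sum(isinstance(m, nn.Conv2d) for m in model.modules())
with torch.no_grad():
    out = model(torch.rand(1, 1, 32, 32))  # input already at the target size
```

With the default arguments the model has 1 + 18 + 1 = 20 convolution layers, and the output has the same shape as the input.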

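Summary

Overall there is nothing particularly special about VDSR: it applies the idea of residual learning and makes the network somewhat deeper. Everything else follows the same idea as SRCNN.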

References

【1】Kim, J., Lee, J. K., Lee, K. M. (2016). Accurate Image Super-Resolution Using Very Deep Convolutional Networks. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1646-1654.

【2】https://medium.com/towards-data-science/review-vdsr-super-resolution-f8050d49362f
