Official Discussion Group (Telegram): https://t.me/video2x

A Discord server is also available. Please note that most developers are only on Telegram, so if you join the Discord server, they might not see your questions or be able to help you. It is mostly for user-to-user interaction and for those who do not want to use Telegram.

Download Stable/Beta Builds (Windows)

- Full: the full package comes pre-configured with all dependencies, such as FFmpeg and waifu2x-caffe.

- Light: the light package comes with only the Video2X binaries and a template configuration file. The user will either have to run the setup script or install and configure the dependencies themselves.

Go to the Quick Start section for usage instructions.

Download From Mirror

In case you're unable to download the releases directly from GitHub, you can try downloading from the mirror site hosted by the author. Only releases will be updated in this directory, not nightly builds.

Download Nightly Builds (Windows)

You need to be logged into GitHub to be able to download GitHub Actions artifacts.

Nightly builds are built automatically every time a new commit is pushed to the master branch. The latest nightly build is always up-to-date with the latest version of the code, but is less stable and may contain bugs. Nightly builds are handled by GitHub's integrated CI/CD tool, GitHub Actions.

To download the latest nightly build, go to the GitHub Actions tab, open the most recent run of the workflow "Video2X Nightly Build", and download the artifacts generated by that run.

Docker Image

Video2X Docker images are available on Docker Hub for easy and rapid Video2X deployment on Linux and macOS. If you already have Docker installed, only one command is needed to start upscaling a video. For more information on how to use Video2X's Docker image, please refer to the documentation.

Google Colab

You can use Video2X on Google Colab for free. Colab allows you to use a GPU on Google's servers (Tesla K80, T4, P4, P100). Please bear in mind that Colab can only be provided for free if users do not abuse it. A single free-tier session can last up to 12 hours. Please do not abuse the platform by creating sessions back-to-back and running upscaling 24/7. This might result in you getting banned.

Here is an example notebook written by @Felixkruemel: Video2X_on_Colab.ipynb. This file can be used in combination with the following modified configuration file: @Felixkruemel's Video2X configuration for Google Colab.

Introduction

Video2X is video/GIF/image upscaling software written in Python 3 and based on Waifu2X, Anime4K, SRMD and RealSR. It upscales videos, GIFs and images, restoring details from low-resolution inputs. Video2X can also convert GIF input to video output and video input to GIF output.

Currently, Video2X supports the following drivers (implementations of algorithms).

- Waifu2X Caffe: Caffe implementation of waifu2x

- Waifu2X Converter CPP: C++ implementation of waifu2x based on OpenCL and OpenCV

- Waifu2X NCNN Vulkan: NCNN implementation of waifu2x based on Vulkan API

- SRMD NCNN Vulkan: NCNN implementation of SRMD based on Vulkan API

- RealSR NCNN Vulkan: NCNN implementation of RealSR based on Vulkan API

- Anime4KCPP: C++ implementation of Anime4K

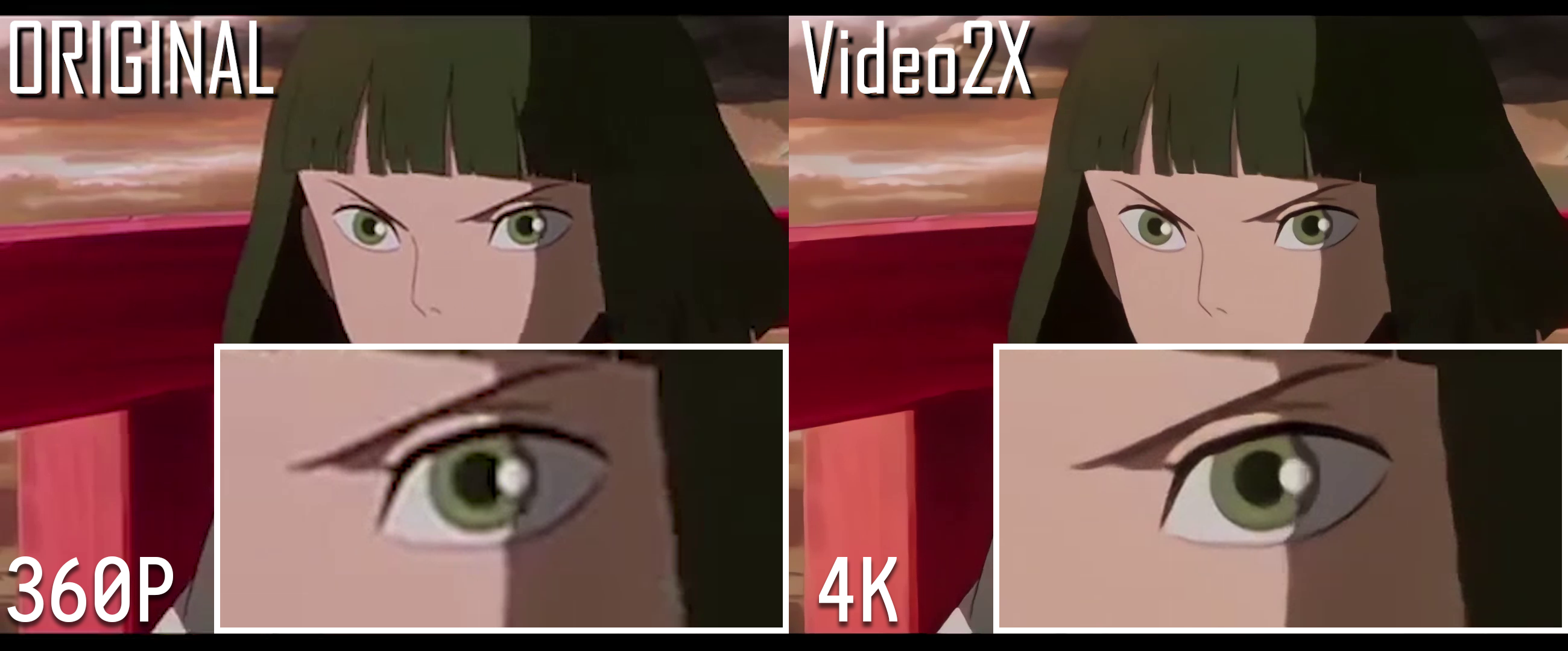

Video Upscaling

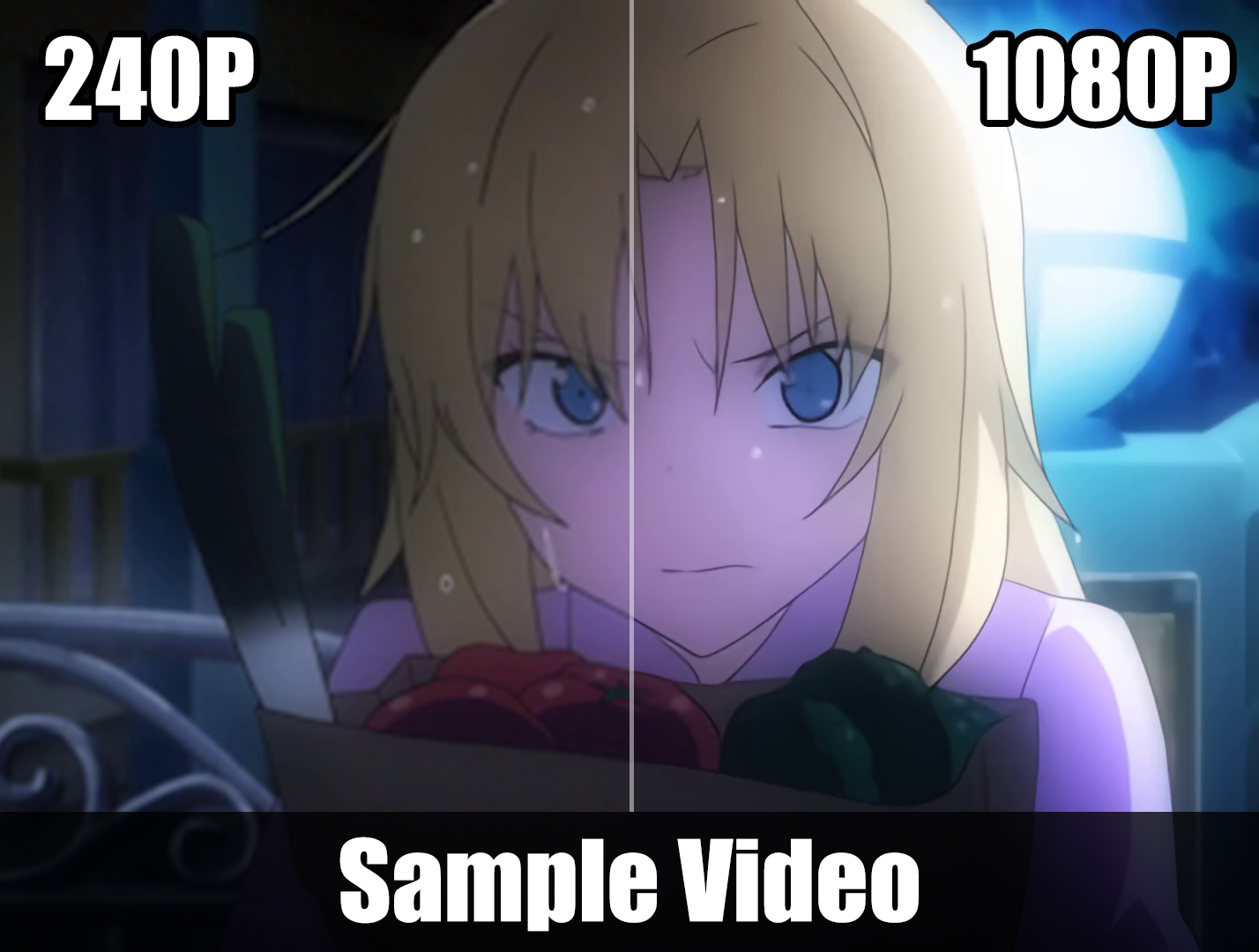

Upscale Comparison Demonstration

You can watch the whole demo video on YouTube: https://youtu.be/mGEfasQl2Zo

The clip is from the trailer of the animated movie "千と千尋の神隠し" (Spirited Away). Copyright belongs to "株式会社スタジオジブリ (STUDIO GHIBLI INC.)". The clip will be removed immediately if its use violates copyright.

GIF Upscaling

The original input GIF is 160x120 in size. It has been downsized and sped up to 20 FPS from its source image.

Catfru original 160x120 GIF image

Below is what it looks like after getting upscaled to 640x480 (4x) using Video2X.
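The arithmetic behind the 4x figure can be checked with a short sketch. The function name `upscaled_size` is hypothetical and is not part of Video2X. It only assumes that a scaling ratio multiplies both dimensions, and the rounding to whole pixels for non-integer ratios is likewise an assumption.

```python
from __future__ import annotations


def upscaled_size(width: int, height: int, ratio: float) -> tuple[int, int]:
    """Return (width, height) after scaling both dimensions by `ratio`.

    Hypothetical helper for illustration; rounding to whole pixels is an
    assumption, not documented Video2X behavior.
    """
    return round(width * ratio), round(height * ratio)


# The GIF above: 160x120 scaled by a ratio of 4.
print(upscaled_size(160, 120, 4))
```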

Image Upscaling

Original image from [email protected], edited by K4YT3X.

All Demo Videos

Below is a list of all the demo videos available. The list is sorted from new to old.

- Bad Apple!!

  - YouTube: https://youtu.be/A81rW_FI3cw

  - Bilibili: https://www.bilibili.com/video/BV16K411K7ue

- The Pet Girl of Sakurasou 240P to 1080P 60FPS

  - Original name: さくら荘のペットな彼女

  - YouTube: https://youtu.be/M0vDI1HH2_Y

  - Bilibili: https://www.bilibili.com/video/BV14k4y167KP/

- Spirited Away (360P to 4K)

  - Original name: 千と千尋の神隠し

  - YouTube: https://youtu.be/mGEfasQl2Zo

  - Bilibili: https://www.bilibili.com/video/BV1V5411471i/

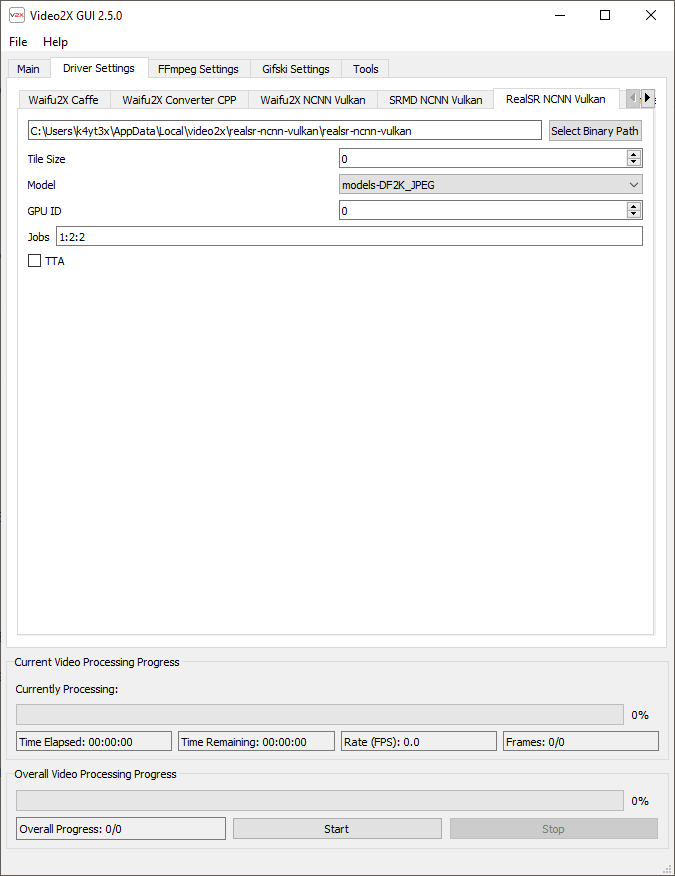

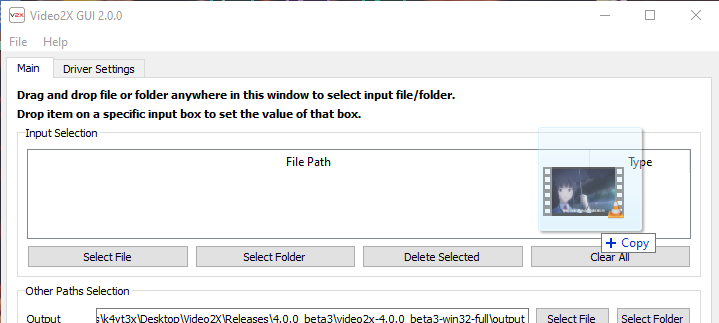

Screenshots

Video2X GUI

Video2X CLI

Sample Videos

If you can't find a video clip to begin with, or if you want to see a before-after comparison, we have prepared some sample clips for you. The Quick Start guide below also uses the file names of these sample clips.

- Sample Video (240P) 4.54MB

- Sample Video Upscaled (1080P) 4.54MB

- Sample Video Original (1080P) 22.2MB

The clip is from the anime "さくら荘のペットな彼女" (The Pet Girl of Sakurasou). Copyright belongs to "株式会社アニプレックス (Aniplex Inc.)". The clip will be removed immediately if its use violates copyright.

Quick Start

Prerequisites

Before running Video2X, you'll need to ensure you have installed the drivers' external dependencies such as GPU drivers.

- waifu2x-caffe

  - GPU mode: Nvidia graphics card driver

  - cuDNN mode: Nvidia CUDA and cuDNN

- Other Drivers

  - GPU driver if you want to use GPU for processing
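One rough way to check whether GPU tooling is present is to look for diagnostic programs on the PATH. The sketch below is only a heuristic under stated assumptions: `find_tools` is a hypothetical helper, and the choice of `nvidia-smi` (installed with the Nvidia driver) and `vulkaninfo` (from the Vulkan tools package) is an assumption. Video2X itself does not require either program, and their absence does not prove that a driver is missing.

```python
import shutil


def find_tools(names):
    """Map each tool name to its location on PATH, or None if it is not found."""
    return {name: shutil.which(name) for name in names}


# Assumed diagnostic tools; adjust the list to match your GPU and platform.
for name, path in find_tools(["nvidia-smi", "vulkaninfo"]).items():
    print(f"{name}: {path or 'not found'}")
```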

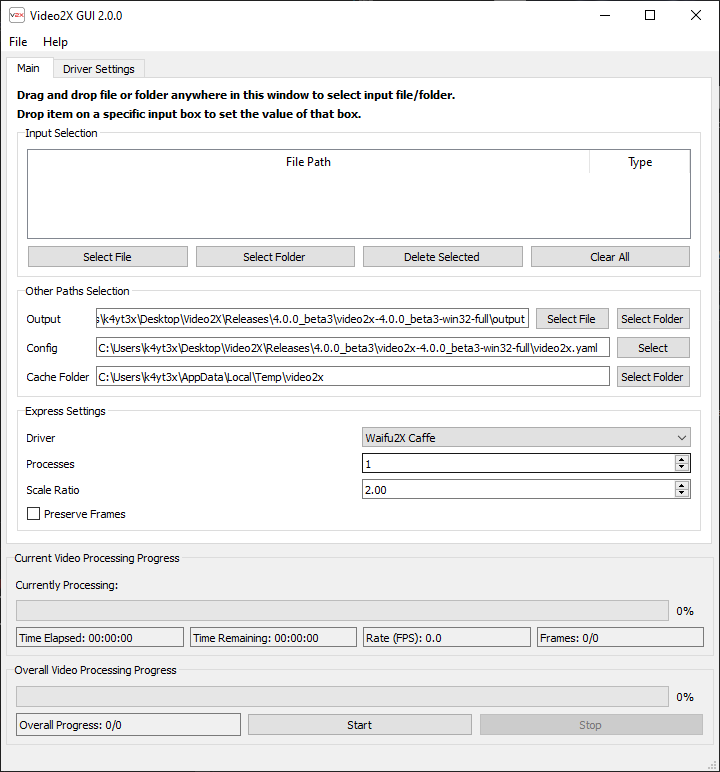

Running Video2X (GUI)

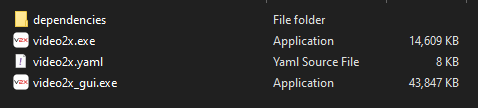

The easiest way to run Video2X is to use the full build. Extract the full release zip file and you'll get these files.

Simply double click on video2x_gui.exe to launch the GUI.

Then, drag the videos you wish to upscale into the window and select the appropriate output path.

Drag and drop file into Video2X GUI

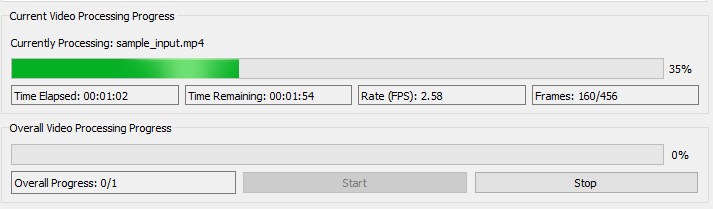

Tweak the settings if you want to, then hit the start button at the bottom and the upscale will start. Now you'll just have to wait for it to complete.

Video2X started processing input files

Running Video2X (CLI)

Basic Upscale Example

The example command below uses waifu2x-caffe to enlarge the video sample-input.mp4 to double its original size.

python video2x.py -i sample-input.mp4 -o sample-output.mp4 -r 2 -d waifu2x_caffe
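If you want to call the same command from a script, for example to process several files in a loop, a minimal sketch might look like the following. The helper names `build_upscale_command` and `upscale` are hypothetical. The sketch assumes that `video2x.py` is in the current working directory and uses only the `-i`, `-o`, `-r` and `-d` options shown above.

```python
import subprocess
import sys


def build_upscale_command(input_path, output_path, ratio=2, driver="waifu2x_caffe"):
    """Build the argument list for the basic upscale command shown above."""
    return [
        sys.executable,  # the Python interpreter running this script
        "video2x.py",    # assumes video2x.py is in the current directory
        "-i", str(input_path),
        "-o", str(output_path),
        "-r", str(ratio),
        "-d", driver,
    ]


def upscale(input_path, output_path, ratio=2, driver="waifu2x_caffe"):
    """Run Video2X and raise CalledProcessError if it exits with an error."""
    command = build_upscale_command(input_path, output_path, ratio, driver)
    subprocess.run(command, check=True)


# Show the arguments that would be passed, without running anything.
print(build_upscale_command("sample-input.mp4", "sample-output.mp4")[1:])
```

Passing the arguments as a list rather than a single string avoids shell quoting problems with file names that contain spaces.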

Advanced Upscale Example

If you would like to tweak driver-specific settings, either specify the corresponding argument after --, or edit the corresponding field in the configuration file video2x.yaml. Command line arguments override the default values in the configuration file.

The example below enables TTA for waifu2x-caffe.

python video2x.py -i sample-input.mp4 -o sample-output.mp4 -r 2 -d waifu2x_caffe -- --tta 1
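The two ideas in this section, a `--` separator and command-line values taking precedence over configuration defaults, can be illustrated with a small sketch. This is not Video2X's actual argument parser. The function names are hypothetical, and the default `tta` value of 0 merely stands in for whatever video2x.yaml contains.

```python
def split_driver_args(argv):
    """Split argv at the first "--" into (general_args, driver_args)."""
    if "--" in argv:
        index = argv.index("--")
        return argv[:index], argv[index + 1:]
    return list(argv), []


def merge_settings(config_defaults, cli_overrides):
    """Return the config defaults with command-line values taking precedence."""
    merged = dict(config_defaults)
    merged.update(cli_overrides)
    return merged


argv = ["-i", "sample-input.mp4", "-o", "sample-output.mp4",
        "-r", "2", "-d", "waifu2x_caffe", "--", "--tta", "1"]
general_args, driver_args = split_driver_args(argv)
print(driver_args)

# Hypothetical default standing in for a value read from video2x.yaml.
print(merge_settings({"tta": 0}, {"tta": 1}))
```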

To see a help page for driver-specific settings, use -d to select the driver and append -- --help as demonstrated below. This will print all driver-specific settings and descriptions.

python video2x.py -d waifu2x_caffe -- --help

Running Video2X (Docker)

Video2X can be deployed via Docker. The following command upscales the video sample_input.mp4 to twice its original size with Waifu2X NCNN Vulkan and writes the upscaled video to sample_output.mp4. For more details on using the Video2X Docker image, please refer to the documentation.

docker run --rm -it --gpus all -v /dev/dri:/dev/dri -v $PWD:/host k4yt3x/video2x:4.6.0 -d waifu2x_ncnn_vulkan -r 2 -i sample_input.mp4 -o sample_output.mp4
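To make the parts of this command easier to read, the sketch below assembles the same argument list in Python and annotates the Docker options. The helper name `build_docker_command` is hypothetical, and `Path.cwd()` stands in for the shell's `$PWD`. The image tag and the Video2X options are taken from the command above.

```python
from pathlib import Path


def build_docker_command(host_dir, input_name, output_name, ratio=2,
                         driver="waifu2x_ncnn_vulkan",
                         image="k4yt3x/video2x:4.6.0"):
    """Build the argument list for the docker run command shown above."""
    return [
        "docker", "run",
        "--rm",                     # remove the container when it exits
        "-it",                      # interactive session with a terminal
        "--gpus", "all",            # expose all GPUs to the container
        "-v", "/dev/dri:/dev/dri",  # share the host's DRI devices
        "-v", f"{host_dir}:/host",  # mount the host directory at /host
        image,
        "-d", driver,
        "-r", str(ratio),
        "-i", input_name,
        "-o", output_name,
    ]


command = build_docker_command(Path.cwd(), "sample_input.mp4", "sample_output.mp4")
print(" ".join(command))
```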

Documentation

Video2X Wiki

You can find all detailed user-facing and developer-facing documentation in the Video2X Wiki. It covers everything from step-by-step instructions for beginners to the code structure of this program for advanced users and developers. If this README page doesn't answer all your questions, the wiki is where you should head next.

Step-By-Step Tutorial

For those who want a detailed walk-through of how to use Video2X, you can head to the Step-By-Step Tutorial wiki page. It includes almost every step you need to perform in order to enlarge your first video.

Run From Source Code

This wiki page contains all instructions for how you can run Video2X directly from Python source code.

Drivers

Go to the Drivers wiki page if you want to see a detailed description of the different types of drivers implemented by Video2X. This wiki page explains the differences between the drivers, and how to download and set each of them up for Video2X.

Q&A

If you have any questions, first try visiting our Q&A page to see if your question is answered there. If not, open an issue and we will respond to your questions ASAP. Alternatively, you can also join our Telegram discussion group and ask your questions there.

History

Are you interested in how the idea of Video2X was born? Do you want to know the stories and history behind Video2X's development? Visit this page.

License

Licensed under the GNU General Public License Version 3 (GNU GPL v3) https://www.gnu.org/licenses/gpl-3.0.txt

(C) 2018-2021 K4YT3X

Credits

This project relies on the following software and projects.

- FFmpeg

- waifu2x-caffe

- waifu2x-converter-cpp

- waifu2x-ncnn-vulkan

- srmd-ncnn-vulkan

- realsr-ncnn-vulkan

- Anime4K

- Anime4KCPP

- Gifski

Special Thanks

Thanks to the following people who have contributed significantly to the project (specifically from a technical perspective).

Related Projects

- Dandere2x: A lossy video upscaler also built around waifu2x, but with video compression techniques to shorten the time needed to process a video.

- Waifu2x-Extension-GUI: A similar project that focuses more and only on building a better graphical user interface. It is built using C++ and Qt5, and currently only supports the Windows platform.