
5.4-5.5

2022-07-03 14:50:00 【III VII】

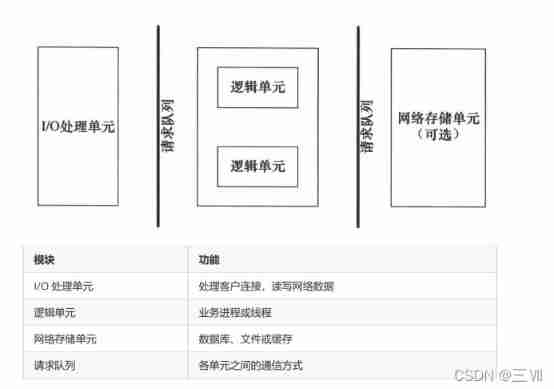

5.4 Basic server framework and event-handling patterns

5.4.1 Basic framework

The I/O processing unit is the server module that manages client connections. It typically waits for and accepts new client connections, receives client data, and returns the server's response to the client. The actual sending and receiving of data does not have to happen in the I/O processing unit, however. It may instead be done in a logic unit, depending on the event-handling pattern in use.

A logic unit is usually a process or a thread. It analyzes and processes client data, then either passes the result to the I/O processing unit or sends it directly to the client (which of the two depends on the event-handling pattern). A server usually has several logic units so that it can handle multiple client tasks concurrently.

The network storage unit can be a database, a cache, or files, but it is optional.

The request queue is an abstraction of how the units communicate with each other. When the I/O processing unit receives a client request, it must notify a logic unit in some way so that the request gets handled. Likewise, when several logic units access a storage unit at the same time, some mechanism is needed to coordinate them and deal with race conditions. The request queue is usually implemented as part of a pool.

5.4.2 Two efficient event processing modes

Server programs usually need to handle three kinds of events: I/O events, signals, and timer events. There are two efficient event-handling patterns, Reactor and Proactor. The synchronous I/O model is usually used to implement the Reactor pattern, and the asynchronous I/O model is usually used to implement the Proactor pattern.

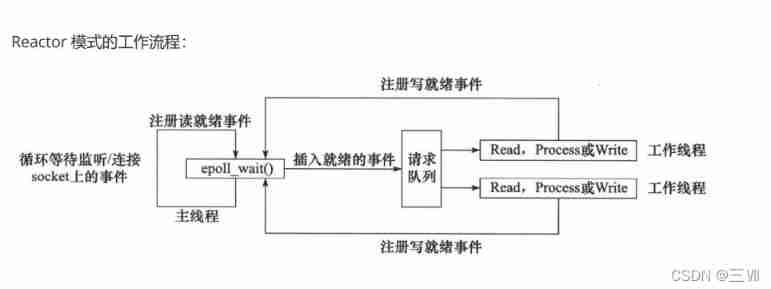

Reactor Pattern

The Reactor pattern requires the main thread (the I/O processing unit) only to listen for events on file descriptors. When an event occurs, it immediately notifies a worker thread (a logic unit) by putting the socket's readable or writable event into the request queue, and leaves the handling to the worker. Beyond that, the main thread does no substantive work. Reading and writing data, accepting new connections, and processing client requests are all done in worker threads.

With synchronous I/O (using epoll_wait as an example), the workflow of the Reactor pattern is:

- The main thread registers the read-ready event for the socket in the epoll kernel event table.

- The main thread calls epoll_wait and waits for data to become readable on the socket.

- When data is readable on the socket, epoll_wait notifies the main thread, which puts the socket-readable event into the request queue.

- A worker thread sleeping on the request queue is woken up. It reads the data from the socket, processes the client request, and then registers the write-ready event for the socket in the epoll kernel event table.

- The main thread calls epoll_wait and waits for the socket to become writable.

- When the socket is writable, epoll_wait notifies the main thread, which puts the socket-writable event into the request queue.

- A worker thread sleeping on the request queue is woken up. It writes the result of processing the client request to the socket.

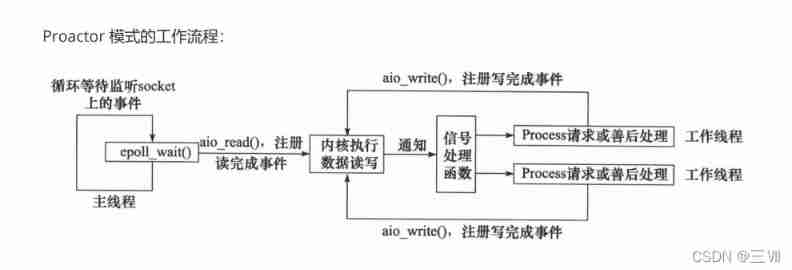
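The Reactor steps above can be sketched in a few dozen lines. This is only an illustration under stated assumptions, not the course's implementation: the names `Event`, `handle_event`, and `reactor_serve_once` are hypothetical, upper-casing the input stands in for real request processing, and for brevity the request queue is drained inline where a real server would wake a worker thread. It is Linux-only because it uses epoll.

```cpp
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cassert>
#include <cctype>
#include <cstddef>
#include <deque>
#include <string>

// One entry in the request queue: which socket, and which readiness event fired.
struct Event {
    int fd;
    bool readable;  // true: read-ready, false: write-ready
};

// Logic unit: performs the actual read or write for a queued event.
// Returns true when the connection is finished (reply sent or peer closed).
bool handle_event(int epfd, const Event& ev, std::string& reply) {
    if (ev.readable) {
        char buf[256];
        ssize_t n = read(ev.fd, buf, sizeof buf);  // the worker does the read
        if (n <= 0) return true;
        reply.assign(buf, static_cast<std::size_t>(n));
        for (char& c : reply)  // stand-in for real request processing
            c = static_cast<char>(std::toupper(static_cast<unsigned char>(c)));
        epoll_event want{};  // now ask to be told when the socket is writable
        want.events = EPOLLOUT;
        want.data.fd = ev.fd;
        epoll_ctl(epfd, EPOLL_CTL_MOD, ev.fd, &want);
        return false;
    }
    ssize_t sent = write(ev.fd, reply.data(), reply.size());  // the worker does the write
    (void)sent;  // a real server would handle short writes and errors
    return true;
}

// I/O unit: waits for readiness and queues events; it never reads or writes.
// Serves one request on fd and returns once the reply has been sent.
void reactor_serve_once(int fd) {
    int epfd = epoll_create1(0);
    assert(epfd >= 0);
    epoll_event want{};
    want.events = EPOLLIN;
    want.data.fd = fd;
    epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &want);

    std::deque<Event> request_queue;
    std::string reply;
    bool done = false;
    while (!done) {
        epoll_event fired[8];
        int n = epoll_wait(epfd, fired, 8, -1);
        for (int i = 0; i < n; ++i)
            request_queue.push_back({fired[i].data.fd, (fired[i].events & EPOLLIN) != 0});
        // In a real server, worker threads would drain this queue.
        while (!request_queue.empty()) {
            Event ev = request_queue.front();
            request_queue.pop_front();
            if (handle_event(epfd, ev, reply)) done = true;
        }
    }
    close(epfd);
}
```

The point to notice is the division of labor: `reactor_serve_once` only calls `epoll_wait` and queues events, while every `read` and `write` happens inside `handle_event`.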

Proactor Pattern

In the Proactor pattern, all I/O operations (reads and writes) are handled by the main thread and the kernel, and worker threads are responsible only for business logic. With the asynchronous I/O model (using aio_read and aio_write as examples), the workflow of the Proactor pattern is:

- The main thread calls aio_read to register the read-completion event for the socket with the kernel, telling the kernel where the user read buffer is and how to notify the application when the read completes (a signal is used as the example here).

- The main thread continues with other logic.

- Once the data on the socket has been read into the user buffer, the kernel sends a signal to the application to tell it that the data is available.

- The application's pre-registered signal handler picks a worker thread to process the client request. After processing it, the worker thread calls aio_write to register the write-completion event for the socket with the kernel, telling the kernel where the user write buffer is and how to notify the application when the write completes.

- The main thread continues with other logic.

- Once the data in the user buffer has been written to the socket, the kernel sends a signal to the application to tell it that the data has been sent.

- The application's pre-registered signal handler picks a worker thread to do the follow-up work, such as deciding whether to close the socket.

The difference between the two patterns is whether the worker threads perform the reads and writes themselves.

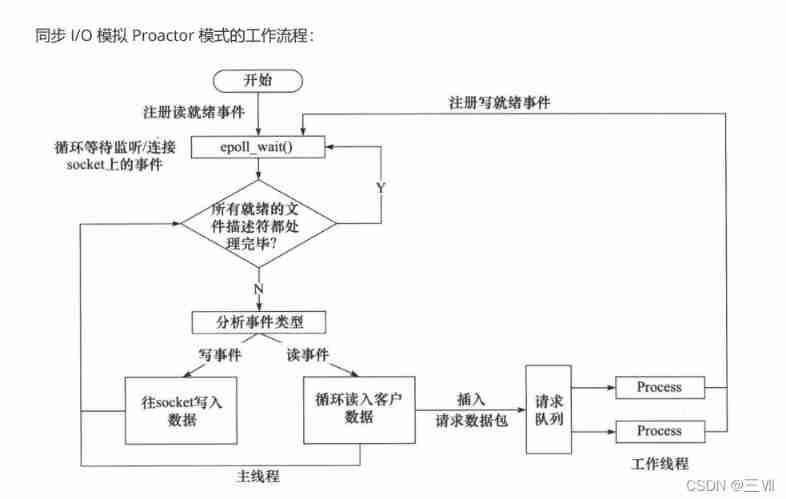

Simulated Proactor Pattern

The Proactor pattern can be simulated with synchronous I/O. The idea is that the main thread performs the reads and writes and, once they are done, notifies a worker thread of the "completion event". From the worker thread's point of view, it receives the result of the read or write directly, and all that is left for it to do is apply its logic to that result.

With the synchronous I/O model (using epoll_wait as an example), the workflow of the simulated Proactor pattern is:

- The main thread registers the read-ready event for the socket in the epoll kernel event table.

- The main thread calls epoll_wait and waits for data to become readable on the socket.

- When data is readable on the socket, epoll_wait notifies the main thread. The main thread reads from the socket in a loop until no more data is available, then wraps the data it has read in a request object and inserts it into the request queue.

- A worker thread sleeping on the request queue is woken up. It takes the request object, processes the client request, and then registers the write-ready event for the socket in the epoll kernel event table.

- The main thread calls epoll_wait and waits for the socket to become writable.

- When the socket is writable, epoll_wait notifies the main thread. The main thread writes the result of processing the client request to the socket.

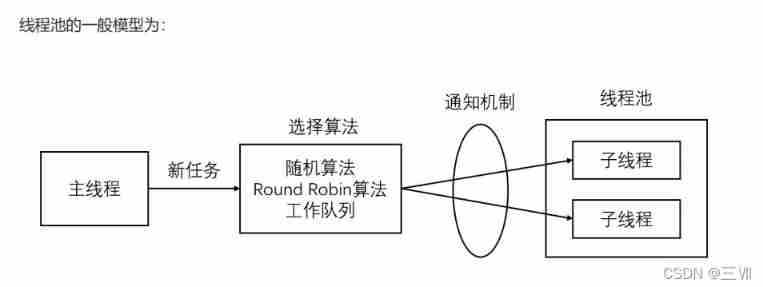
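A minimal sketch of the simulated Proactor workflow follows, again under assumptions rather than as the course's code: `Request`, `process`, and `simulated_proactor_serve_once` are hypothetical names, reversing the bytes stands in for real request processing, and the queue is drained inline where a real server would hand the request to a worker thread. It is Linux-only because it uses epoll.

```cpp
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>

// Request object handed to the logic unit: the data has already been read.
struct Request {
    int fd;
    std::string data;
};

// Logic unit: works on complete data and never touches the socket.
// Reversing the bytes is a stand-in for real request processing.
std::string process(const Request& req) {
    return std::string(req.data.rbegin(), req.data.rend());
}

// Main thread: does all socket reads and writes itself.
// Serves one request on fd and returns once the reply has been written.
void simulated_proactor_serve_once(int fd) {
    int epfd = epoll_create1(0);
    assert(epfd >= 0);
    epoll_event want{};
    want.events = EPOLLIN;
    want.data.fd = fd;
    epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &want);

    std::deque<Request> request_queue;
    std::string reply;
    bool done = false;
    while (!done) {
        epoll_event fired;
        if (epoll_wait(epfd, &fired, 1, -1) != 1) continue;
        if (fired.events & EPOLLIN) {
            // Read in a loop until no more data is available, then queue a request.
            Request req{fd, std::string()};
            char buf[256];
            ssize_t got;
            while ((got = recv(fd, buf, sizeof buf, MSG_DONTWAIT)) > 0)
                req.data.append(buf, static_cast<std::size_t>(got));
            request_queue.push_back(req);
        } else {
            // Write-ready: the main thread sends the result itself.
            ssize_t sent = write(fd, reply.data(), reply.size());
            (void)sent;  // a real server would handle short writes and errors
            done = true;
        }
        // In a real server, a worker thread would take the request from the queue.
        while (!request_queue.empty()) {
            reply = process(request_queue.front());
            request_queue.pop_front();
            want.events = EPOLLOUT;  // ask to be told when the socket is writable
            epoll_ctl(epfd, EPOLL_CTL_MOD, fd, &want);
        }
    }
    close(epfd);
}
```

Compared with a Reactor, the logic unit here (`process`) receives finished data and returns a result. It makes no `read` or `write` calls of its own.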

5.5 Thread pool

A thread pool is a set of child threads that the server creates in advance. The number of threads in the pool should be roughly equal to the number of CPUs. All child threads in the pool run the same code. When a new task arrives, the main thread picks one child thread from the pool to serve it. Compared with creating a child thread on demand, picking an existing one is much cheaper. There are several ways for the main thread to choose which child thread serves a new task:

- The main thread actively picks a child thread using some algorithm. The simplest and most common choices are random selection and round robin (taking turns), but better, smarter algorithms distribute tasks more evenly across the worker threads and so reduce the overall load on the server.

- The main thread and all child threads share a work queue, and all child threads sleep on it. When a new task arrives, the main thread adds it to the work queue. This wakes up the child threads waiting for a task, but only one of them gets to "take over" the new task: it removes the task from the work queue and runs it, while the other child threads go back to sleep on the work queue.
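As a small illustration of the first approach, round-robin selection only needs a counter that wraps around. The class name `RoundRobin` and its `pick` method are hypothetical and are not part of the thread pool code in this post.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: yields worker indices 0, 1, ..., n-1, 0, 1, ... in turn.
class RoundRobin {
public:
    explicit RoundRobin(std::size_t workers) : workers_(workers), next_(0) {
        assert(workers > 0);
    }

    // Returns the index of the worker that should receive the next task.
    std::size_t pick() {
        std::size_t chosen = next_;
        next_ = (next_ + 1) % workers_;
        return chosen;
    }

private:
    std::size_t workers_;  // number of worker threads in the pool
    std::size_t next_;     // index handed out by the next call to pick()
};
```

With three workers, successive calls to `pick()` return 0, 1, 2 and then start again at 0. The scheme ignores how busy each worker is, which is why it spreads load evenly only when tasks cost about the same.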

The most direct limit on the number of threads in a pool is the number N of processors/cores in the CPU. If the CPU has 4 cores, then for CPU-bound tasks (such as video editing, which consumes CPU computing resources) the number of threads in the pool should also be set to 4 (or 4 + 1, so that a thread blocked for some other reason does not leave a core idle). For I/O-bound tasks the number is generally larger than the number of cores, because the threads compete for I/O rather than for CPU time. I/O is generally slow, so having more threads than cores lets the CPU pick up other tasks instead of sitting idle while a thread waits on I/O.

- A pool trades space for time: it spends some of the server's hardware resources in exchange for runtime efficiency.

- A pool is a collection of resources that is fully created and initialized when the server starts. These are called static resources.

- Once the server is in normal operation and starts handling client requests, any resource it needs can be taken directly from the pool, with no dynamic allocation.

- When the server has finished handling a client connection, it puts the related resources back into the pool, with no system call needed to release them.
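The sizing rule described earlier (about one thread per core for CPU-bound work, more than that for I/O-bound work) could be turned into a starting value along these lines. The function name `suggested_pool_size` and the default multiplier of 2 are assumptions made for illustration. The text only says "more than the number of cores", so the right multiplier has to be found by measurement.

```cpp
#include <cassert>

// Suggests an initial pool size from the core count.
// io_multiplier is a hypothetical tuning knob, not a value taken from the text.
int suggested_pool_size(int cores, bool io_bound, int io_multiplier = 2) {
    assert(cores > 0 && io_multiplier >= 1);
    if (io_bound) {
        // I/O-bound: threads spend most of their time waiting, so oversubscribe the cores.
        return cores * io_multiplier;
    }
    // CPU-bound: about one thread per core keeps every core busy without extra switching.
    return cores;
}
```

For the 4-core example, this gives 4 threads for CPU-bound work and 8 for I/O-bound work with the default multiplier.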

Thread pool code

threadpool.h

#ifndef THREADPOOL_H

#define THREADPOOL_H

#include <list>

#include <cstdio>

#include <exception>

#include <pthread.h>

#include "locker.h"

template<typename T>

class threadpool{

public:

/* thread_number is the number of threads in the pool; max_requests is the maximum number of requests allowed to wait in the request queue */

threadpool(int thread_number = 8, int max_requests = 10000);

~threadpool();

bool append(T* request);

private:

/* Function run by each worker thread: it keeps taking tasks from the work queue and executing them */

static void* worker(void* arg);

void run();

private:

// Number of threads

int m_thread_number;

// Array of thread IDs for the pool; its size is m_thread_number

pthread_t * m_threads;

// Maximum number of requests allowed to wait in the request queue

int m_max_requests;

// Request queue

std::list< T* > m_workqueue;

// Mutex protecting the request queue

locker m_queuelocker;

// Semaphore indicating whether there are tasks to process

sem m_queuestat;

// Whether to stop the threads

bool m_stop;

};

// Implementation

template <typename T>

threadpool< T >::threadpool(int thread_number, int max_requests) :

m_thread_number(thread_number), m_threads(NULL),

m_max_requests(max_requests), m_stop(false) {

if((thread_number <= 0) || (max_requests <= 0) ) {

throw std::exception();

}

m_threads = new pthread_t[m_thread_number];

if(!m_threads) {

throw std::exception();

}

// Create thread_number threads and set them as detached.

for ( int i = 0; i < thread_number; ++i ) {

printf( "create the %dth thread\n", i);

if(pthread_create(m_threads + i, NULL, worker, this ) != 0) {

delete [] m_threads;

throw std::exception();

}

// Detach the thread

if( pthread_detach( m_threads[i] ) ) {

delete [] m_threads;

throw std::exception();

}

}

}

// Destructor

template< typename T >

threadpool< T >::~threadpool() {

delete [] m_threads;

m_stop = true;

}

template< typename T >

bool threadpool< T >::append( T* request )

{

// The work queue must be locked because it is shared by all threads.

m_queuelocker.lock();

// Reject the request once the queue already holds m_max_requests entries.

if ( m_workqueue.size() >= static_cast<std::size_t>(m_max_requests) ) {

m_queuelocker.unlock();

return false;

}

m_workqueue.push_back(request);

m_queuelocker.unlock();

m_queuestat.post();

return true;

}

template< typename T >

void* threadpool< T >::worker( void* arg )

{

threadpool* pool = ( threadpool* )arg;

pool->run();

return pool;

}

template< typename T >

void threadpool< T >::run() {

while (!m_stop) {

m_queuestat.wait(); // Block until a task is available

m_queuelocker.lock();

if ( m_workqueue.empty() ) {

m_queuelocker.unlock();

continue;

}

T* request = m_workqueue.front();

m_workqueue.pop_front();

m_queuelocker.unlock();

if ( !request ) {

continue;

}

request->process();

}

}

#endif

locker.h

#ifndef LOCKER_H

#define LOCKER_H

// Wrapper classes for the thread synchronization mechanisms

#include<pthread.h>

#include<exception>

#include <semaphore.h>

// Mutex class

class locker

{

private:

pthread_mutex_t m_mutex;

public:

locker(){

if(pthread_mutex_init(&m_mutex, NULL)!= 0){

throw std::exception();

}

}

~locker()

{

pthread_mutex_destroy(&m_mutex);

}

bool lock()

{

return pthread_mutex_lock(&m_mutex) == 0;

}

bool unlock()

{

return pthread_mutex_unlock(&m_mutex) == 0;

}

pthread_mutex_t *get()

{

return &m_mutex;

}

};

// Condition variable class

class cond {

public:

// Initialize the condition variable

cond(){

if (pthread_cond_init(&m_cond, NULL) != 0) {

throw std::exception();

}

}

// Destroy the condition variable

~cond() {

pthread_cond_destroy(&m_cond);

}

// Wait on the condition variable; the caller must hold m_mutex

bool wait(pthread_mutex_t *m_mutex) {

int ret = 0;

ret = pthread_cond_wait(&m_cond, m_mutex);

return ret == 0;

}

// Wait with a timeout; t is an absolute time

bool timewait(pthread_mutex_t *m_mutex, struct timespec t) {

int ret = 0;

ret = pthread_cond_timedwait(&m_cond, m_mutex, &t);

return ret == 0;

}

bool signal() {

return pthread_cond_signal(&m_cond) == 0;

}

bool broadcast() {

return pthread_cond_broadcast(&m_cond) == 0;

}

private:

pthread_cond_t m_cond;

};

// Semaphore class

class sem {

public:

sem() {

if( sem_init( &m_sem, 0, 0 ) != 0 ) {

throw std::exception();

}

}

sem(int num) {

if( sem_init( &m_sem, 0, num ) != 0 ) {

throw std::exception();

}

}

~sem() {

sem_destroy( &m_sem );

}

// Wait for the semaphore

bool wait() {

return sem_wait( &m_sem ) == 0;

}

// Increase the semaphore

bool post() {

return sem_post( &m_sem ) == 0;

}

private:

sem_t m_sem;

};

#endif
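For comparison, the shared-work-queue design can also be sketched with the C++11 standard library instead of the pthread wrappers above. This is an alternative written for illustration, not the code from this post: the class name `SharedQueuePool` is hypothetical, tasks are `std::function<void()>` objects rather than `T*` requests with a `process()` method, and a condition variable replaces the semaphore. It also joins its workers in the destructor instead of detaching them, on the assumption that worker threads should never outlive the pool they point to.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <list>
#include <mutex>
#include <thread>
#include <vector>

class SharedQueuePool {
public:
    SharedQueuePool(std::size_t threads, std::size_t max_requests)
        : max_requests_(max_requests) {
        assert(threads > 0 && max_requests > 0);
        for (std::size_t i = 0; i < threads; ++i)
            workers_.emplace_back([this] { run(); });
    }

    // Asks the workers to stop, lets them drain the queue, then joins them.
    ~SharedQueuePool() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stop_ = true;
        }
        ready_.notify_all();
        for (std::thread& t : workers_) t.join();
    }

    // Adds a task to the shared queue; returns false if the queue is full.
    bool append(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (queue_.size() >= max_requests_) return false;
            queue_.push_back(std::move(task));
        }
        ready_.notify_one();  // wake one sleeping worker
        return true;
    }

private:
    // Worker loop: sleep on the queue, take one task, run it outside the lock.
    void run() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                ready_.wait(lock, [this] { return stop_ || !queue_.empty(); });
                if (queue_.empty()) return;  // stop requested and nothing left to do
                task = std::move(queue_.front());
                queue_.pop_front();
            }
            task();
        }
    }

    std::size_t max_requests_;
    bool stop_ = false;
    std::mutex mutex_;
    std::condition_variable ready_;
    std::list<std::function<void()>> queue_;
    std::vector<std::thread> workers_;  // declared last so it starts after the other members exist
};
```

A caller would construct the pool once, for example `SharedQueuePool pool(4, 10000);`, and then call `pool.append(...)` with a lambda for each incoming request. Depending on the toolchain, the program may need to be built with `-pthread`.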

边栏推荐

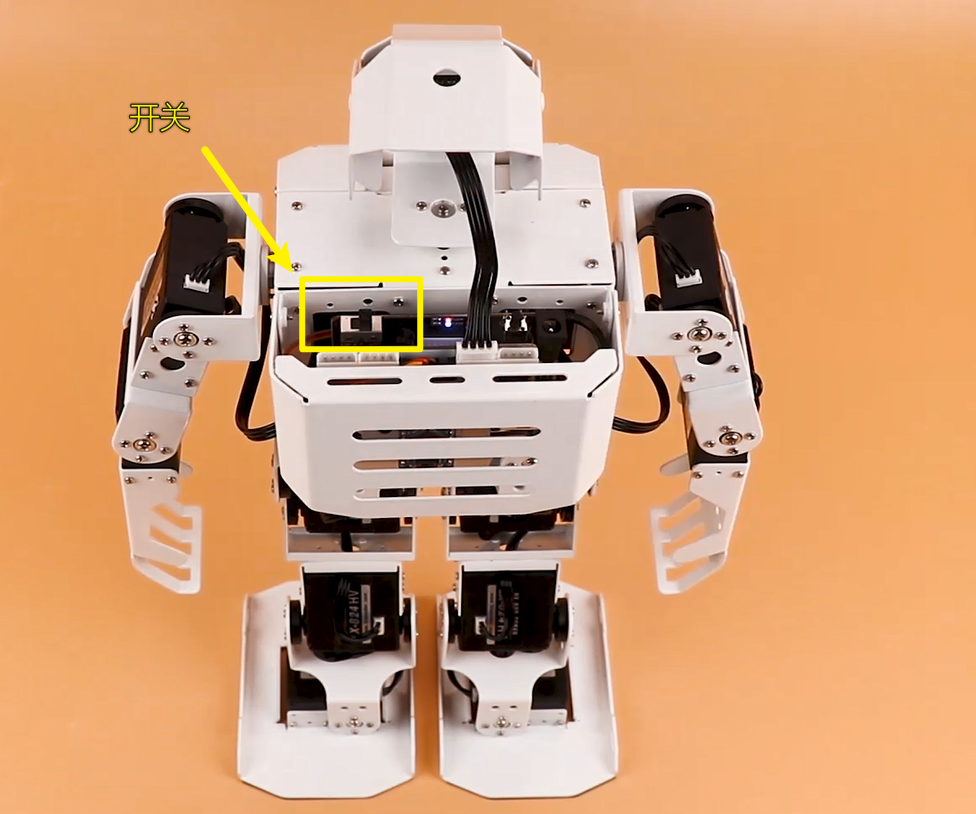

- tonybot 人形機器人 紅外遙控玩法 0630

- 提高效率 Or 增加成本,开发人员应如何理解结对编程?

- 亚马逊、速卖通、Lazada、Shopee、eBay、wish、沃尔玛、阿里国际、美客多等跨境电商平台,测评自养号该如何利用产品上新期抓住流量?

- Yolov5进阶之九 目标追踪实例1

- Output student grades

- Amazon, express, lazada, shopee, eBay, wish, Wal Mart, Alibaba international, meikeduo and other cross-border e-commerce platforms evaluate how Ziyang account can seize traffic by using products in th

- 洛谷P3065 [USACO12DEC]First! G 题解

- [ue4] Niagara's indirect draw

- Zzuli:1043 max

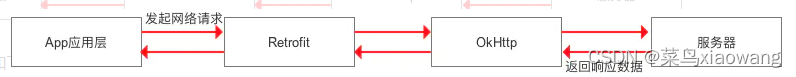

- retrofit

猜你喜欢

随机推荐

Talking about part of data storage in C language

adc128s022 ADC verilog设计实现

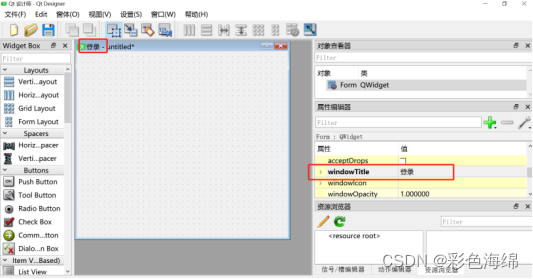

Vs+qt application development, set software icon icon

Tonybot Humanoïde Robot Infrared Remote play 0630

[opengl] face pinching system

NOI OPENJUDGE 1.5(23)

Zzuli:1052 sum of sequence 4

Zzuli: sum of 1051 square roots

[qingniaochangping campus of Peking University] in the Internet industry, which positions are more popular as they get older?

Zzuli:1045 numerical statistics

Luogu p5536 [xr-3] core city solution

dllexport和dllimport

Solve the problem that PR cannot be installed on win10 system. Pr2021 version -premiere Pro 2021 official Chinese version installation tutorial

Niuke: crossing the river

[opengl] pre bake using computational shaders

My QT learning path -- how qdatetimeedit is empty

【7.3】146. LRU缓存机制

tonybot 人形机器人 首次开机 0630

Yolov5进阶之九 目标追踪实例1

Zzuli:1048 factorial table

![洛谷P4047 [JSOI2010]部落划分 题解](/img/7f/3fab3e94abef3da1f5652db35361df.png)