Modify spark to support remote access to OSS files

2022-07-27 15:38:00 【wankunde】

Compiling a version-specific Hadoop JAR for OSS access

The Hadoop trunk branch already includes the hadoop-aliyun module, so upstream Hadoop supports Aliyun OSS access out of the box. Our company, however, still runs a CDH5 branch of Hadoop, which has no OSS support by default.

We first tried dropping the official hadoop-aliyun.jar into Hadoop, but the newer hadoop-aliyun.jar depends on newer versions of httpclient.jar and httpcore.jar, and mixing the old and new versions causes class conflicts.

As a last resort, we took the official code and compiled hadoop-aliyun.jar ourselves against our Hadoop version.

Steps:

- Download the latest Hadoop code (commit id = fa15594ae60) and copy the hadoop-tools/hadoop-aliyun module into the current Hadoop project.

- Modify the hadoop-tools pom to add the hadoop-aliyun module.

- Modify the hadoop-aliyun module pom: change the version to the corresponding one, adjust the three dependencies aliyun-sdk-oss, httpclient and httpcore, and add the shade plugin to shade the higher-version httpclient.jar and httpcore.jar.

- Remove the leading org.apache.hadoop.thirdparty. from the code wherever it appears.

- Change import org.apache.commons.lang3. to import org.apache.commons.lang.

- Copy the two classes BlockingThreadPoolExecutorService and SemaphoredDelegatingExecutor from the hadoop-aws module into the org.apache.hadoop.util directory.

- Compile the module:

mvn clean package -pl hadoop-tools/hadoop-aliyun
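The two source-rewriting steps in the list above (dropping the org.apache.hadoop.thirdparty. prefix and switching from commons-lang3 to commons-lang) can be scripted rather than done by hand. The following is a minimal sketch, not the author's actual procedure: it assumes the prefix is meant to be stripped from package references, and the function names rewrite_imports and rewrite_tree are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: rewrite Java source read from stdin so that it refers
# to the unshaded third-party packages and to commons-lang 2.x.
rewrite_imports() {
  sed -e 's/org\.apache\.hadoop\.thirdparty\.//g' \
      -e 's/org\.apache\.commons\.lang3\./org.apache.commons.lang./g'
}

# Hypothetical helper: apply the rewrite in place to every .java file
# under the directory given as $1.
rewrite_tree() {
  find "$1" -name '*.java' | while IFS= read -r f; do
    rewrite_imports < "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Preview the rewrite on a single import line.
printf '%s\n' 'import org.apache.commons.lang3.StringUtils;' | rewrite_imports
```

Running rewrite_tree hadoop-tools/hadoop-aliyun/src before the mvn command would apply both changes across the module. A textual rewrite like this is only a starting point: commons-lang 2.x does not contain every class that commons-lang3 does, so some compile errors may still need to be fixed by hand.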

The modified hadoop-aliyun module pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. -->

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-project</artifactId>

<version>2.6.0-cdh5.13.3</version>

<relativePath>../../hadoop-project</relativePath>

</parent>

<artifactId>hadoop-aliyun</artifactId>

<name>Apache Hadoop Aliyun OSS support</name>

<packaging>jar</packaging>

<properties>

<file.encoding>UTF-8</file.encoding>

<downloadSources>true</downloadSources>

</properties>

<profiles>

<profile>

<id>tests-off</id>

<activation>

<file>

<missing>src/test/resources/auth-keys.xml</missing>

</file>

</activation>

<properties>

<maven.test.skip>true</maven.test.skip>

</properties>

</profile>

<profile>

<id>tests-on</id>

<activation>

<file>

<exists>src/test/resources/auth-keys.xml</exists>

</file>

</activation>

<properties>

<maven.test.skip>false</maven.test.skip>

</properties>

</profile>

</profiles>

<build>

<plugins>

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>findbugs-maven-plugin</artifactId>

<configuration>

<findbugsXmlOutput>true</findbugsXmlOutput>

<xmlOutput>true</xmlOutput>

<excludeFilterFile>${basedir}/dev-support/findbugs-exclude.xml

</excludeFilterFile>

<effort>Max</effort>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<configuration>

<forkedProcessTimeoutInSeconds>3600</forkedProcessTimeoutInSeconds>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-dependency-plugin</artifactId>

<executions>

<execution>

<id>deplist</id>

<phase>compile</phase>

<goals>

<goal>list</goal>

</goals>

<configuration>

<!-- build a shellprofile -->

<outputFile>

${project.basedir}/target/hadoop-tools-deps/${project.artifactId}.tools-optional.txt

</outputFile>

</configuration>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.0.0</version>

<executions>

<execution>

<id>shade-aliyun-sdk-oss</id>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<shadedArtifactAttached>false</shadedArtifactAttached>

<promoteTransitiveDependencies>true</promoteTransitiveDependencies>

<createDependencyReducedPom>true</createDependencyReducedPom>

<createSourcesJar>true</createSourcesJar>

<relocations>

<relocation>

<pattern>org.apache.http</pattern>

<shadedPattern>com.xxx.thirdparty.org.apache.http</shadedPattern>

</relocation>

</relocations>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>com.aliyun.oss</groupId>

<artifactId>aliyun-sdk-oss</artifactId>

<version>3.4.1</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.4.1</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpcore</artifactId>

<version>4.4.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<exclusions>

<exclusion>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpcore</artifactId>

</exclusion>

</exclusions>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<scope>test</scope>

<type>test-jar</type>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-distcp</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-distcp</artifactId>

<scope>test</scope>

<type>test-jar</type>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-tests</artifactId>

<scope>test</scope>

<type>test-jar</type>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-examples</artifactId>

<scope>test</scope>

<type>jar</type>

</dependency>

</dependencies>

</project>
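Since the point of the shade configuration above is to move org.apache.http under com.xxx.thirdparty.org.apache.http, it can be worth checking the built jar before deploying it. The sketch below is an illustrative check, not part of the original build: the function name check_relocated is hypothetical, and it only inspects a jar listing passed on stdin.

```shell
#!/bin/sh
# Hypothetical helper: read a jar listing (one entry per line, as printed by
# `jar tf`) on stdin and fail if any httpclient/httpcore class is still
# under its original org/apache/http/ path instead of the relocated one.
check_relocated() {
  if grep -q '^org/apache/http/'; then
    echo "unrelocated org.apache.http classes found" >&2
    return 1
  fi
  echo "ok: no unrelocated org.apache.http classes"
}

# Usage (the jar path is an assumption based on Maven's default target dir):
#   jar tf hadoop-tools/hadoop-aliyun/target/hadoop-aliyun-2.6.0-cdh5.13.3.jar | check_relocated
```

If the check fails, the relocation pattern or the shade execution phase in the pom is the first place to look.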

Integrating Hadoop and Spark to read OSS files

Reading OSS files from HDFS

For HDFS to access OSS, add the OSS-related configuration to core-site.xml, then place hadoop-aliyun.jar in the common jar directory on the Hadoop nodes.

core-site.xml configuration:

<configuration>
  <property>
    <name>fs.oss.endpoint</name>
    <value>oss-cn-zhangjiakou.aliyuncs.com</value>
  </property>
  <property>
    <name>fs.oss.accessKeyId</name>
    <value>xxx</value>
  </property>
  <property>
    <name>fs.oss.accessKeySecret</name>
    <value>xxxx</value>
  </property>
  <property>
    <name>fs.oss.impl</name>
    <value>org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem</value>
  </property>
  <property>
    <name>fs.oss.buffer.dir</name>
    <value>/tmp/oss</value>
  </property>
  <property>
    <name>fs.oss.connection.secure.enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>fs.oss.connection.maximum</name>
    <value>2048</value>
  </property>
</configuration>

Test reading OSS files from HDFS:

bin/hdfs dfs -ls oss://bucket/OSS_FILES

Reading OSS files from Spark

Because Spark is more extensible, OSS read support can be added to Spark through its own properties, without modifying any Hadoop configuration:

spark.hadoop.fs.oss.endpoint=oss-cn-zhangjiakou.aliyuncs.com

spark.hadoop.fs.oss.accessKeyId=xxx

spark.hadoop.fs.oss.accessKeySecret=xxx

spark.hadoop.fs.oss.impl=org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem

spark.hadoop.fs.oss.buffer.dir=/tmp/oss

spark.hadoop.fs.oss.connection.secure.enabled=false

spark.hadoop.fs.oss.connection.maximum=2048

Then place hadoop-aliyun.jar in Spark's dependency jar directory.
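Spark copies every property prefixed with spark.hadoop. into the Hadoop configuration with the prefix removed, which is why the keys above mirror the core-site.xml ones. For a one-off job the same settings can be passed on the command line instead. The sketch below shows one way to derive the arguments. The function name to_spark_conf is hypothetical, and the input is assumed to be simple key=value lines.

```shell
#!/bin/sh
# Hypothetical helper: read "key=value" lines of Hadoop OSS settings on stdin
# and print the matching spark.hadoop.* --conf arguments, one per line.
# Lines that are not fs.oss.* settings are ignored.
to_spark_conf() {
  while IFS= read -r line; do
    case "$line" in
      fs.oss.*=*) printf '%s\n' "--conf spark.hadoop.$line" ;;
    esac
  done
}

printf '%s\n' 'fs.oss.endpoint=oss-cn-zhangjiakou.aliyuncs.com' \
              'fs.oss.buffer.dir=/tmp/oss' | to_spark_conf
```

The printed arguments can be appended to a spark-shell or spark-submit invocation. Keeping credentials such as the access key out of shell history is left to the reader.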

Test reading OSS files from Spark:

val df = spark.read.format("json").load("oss://bucket/OSS_FILES")

Reading OSS files from Spark SQL

Spark SQL can read OSS files in two ways.

The first is to create a temporary view over the data. This approach involves no catalog management and has the following characteristics:

- The view is deleted when the Spark session is closed.

- A view can only query data under a single directory; I have not yet seen support for partitioned data.

- Creating the view samples the data, which is time-consuming when the data is large.

CREATE TEMPORARY VIEW view_name

USING org.apache.spark.sql.json

OPTIONS (

path "oss://bucket/OSS_DIRECTORY"

);

The second way is to create an external table directly over the OSS files.

To create a catalog-managed external table over OSS files, the Hive metastore must be able to read OSS files. Hadoop itself must therefore support OSS reads (see the HDFS section above), and a copy of hadoop-aliyun.jar must also be placed in the dependency directory on the Hive node before restarting the Hive MetaStore service.

cp hadoop-aliyun-2.6.0-cdh5.13.3.jar /opt/cloudera/parcels/CDH/lib/hadoop-hdfs/

cp hadoop-aliyun-2.6.0-cdh5.13.3.jar /opt/cloudera/parcels/CDH/lib/hive/lib

Test creating an external table with Spark SQL:

CREATE TABLE app_log (

`@timestamp` timestamp ,

`@version` string ,

appname string ,

containerMeta struct<appName:string,containerId:string,procName:string> ,

contextMap struct<aid:string,sid:string,spanId:string,storeId:string,traceId:string> ,

message string ,

year string,

month string,

day string,

hour string

)

USING org.apache.spark.sql.json

PARTITIONED BY (year, month, day, hour)

OPTIONS (

path "oss://bucket/OSS_DIRECTORY"

);

-- Update table metadata

MSCK REPAIR TABLE app_log;
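MSCK REPAIR TABLE registers partitions by scanning the table path for Hive-style column=value directories, so the files under the OSS directory need to follow that layout for the partition columns declared above. The sketch below prints the directory expected for one hour of data. The function name partition_dir and the sample date are illustrative only.

```shell
#!/bin/sh
# Hypothetical helper: print the Hive-style partition directory for the
# (year, month, day, hour) partition columns of the app_log table.
# $1 = table root path, $2..$5 = year, month, day, hour
partition_dir() {
  printf '%s/year=%s/month=%s/day=%s/hour=%s\n' "$1" "$2" "$3" "$4" "$5"
}

partition_dir oss://bucket/OSS_DIRECTORY 2022 07 27 15
```

If the data is laid out differently (for example plain 2022/07/27/15 directories), the repair command is not expected to find any partitions.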

边栏推荐

- Use double stars instead of math.pow()

- 一文读懂鼠标滚轮事件(wheelEvent)

- 后台返回来的是这种数据,是什么格式啊

- [正则表达式] 匹配分组

- Network equipment hard core technology insider router Chapter 4 Jia Baoyu sleepwalking in Taixu Fantasy (Part 2)

- Static关键字的三种用法

- Network equipment hard core technology insider router 19 dpdk (IV)

- Pictures to be delivered

- Record record record

- Leetcode 244 week competition - post competition supplementary question solution [broccoli players]

猜你喜欢

C语言:三子棋游戏

Spark 3.0 adaptive execution code implementation and data skew optimization

flutter —— 布局原理与约束

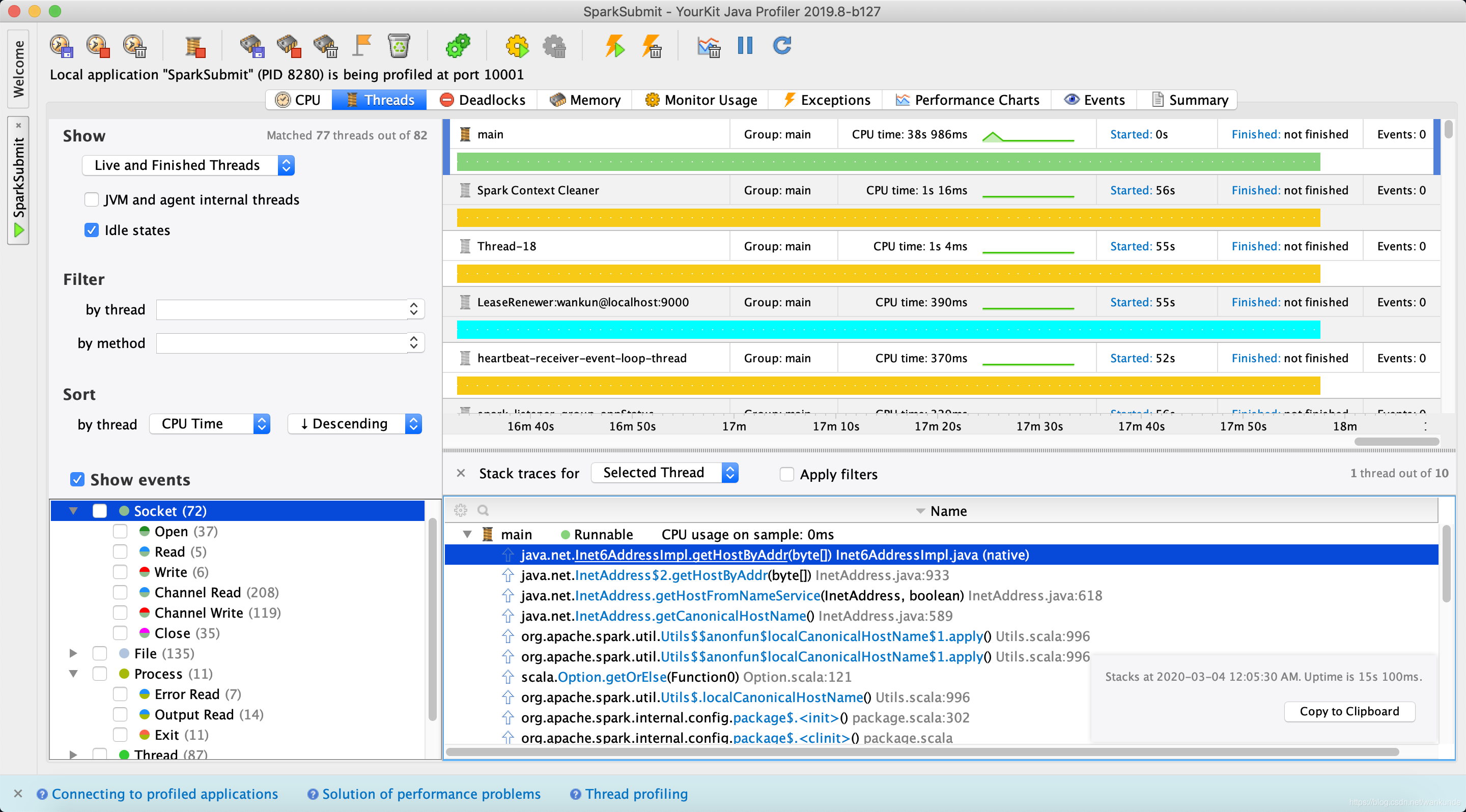

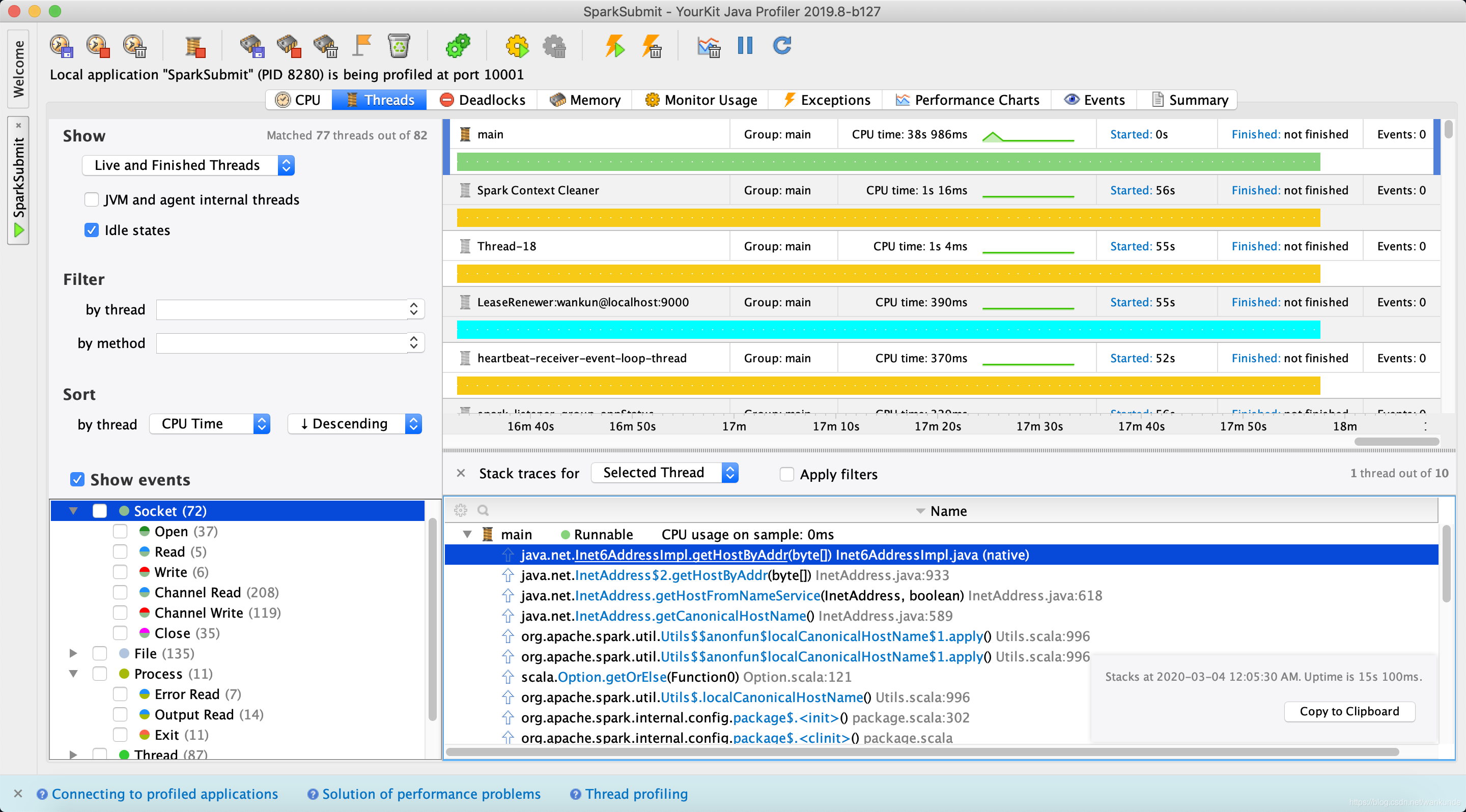

Troubleshooting the slow startup of spark local programs

【剑指offer】面试题42:连续子数组的最大和——附0x80000000与INT_MIN

Spark Filter算子在Parquet文件上的下推

Spark Bucket Table Join

Spark 本地程序启动缓慢问题排查

折半查找

Leetcode 240. search two-dimensional matrix II medium

随机推荐

flutter —— 布局原理与约束

js运用扩展操作符(…)简化代码,简化数组合并

【剑指offer】面试题50:第一个只出现一次的字符——哈希表查找

C:浅谈函数

Method of removing top navigation bar in Huawei Hongmeng simulator

Network equipment hard core technology insider router Chapter 16 dpdk and its prequel (I)

Network equipment hard core technology insider router Chapter 17 dpdk and its prequel (II)

Leetcode 190. reverse binary bit operation /easy

Implement custom spark optimization rules

“router-link”各种属性解释

Spark 3.0 测试与使用

What format is this data returned from the background

After configuring corswebfilter in grain mall, an error is reported: resource sharing error:multiplealloworiginvalues

Dan bin Investment Summit: on the importance of asset management!

2022-07-27 Daily: IJCAI 2022 outstanding papers were published, and 298 Chinese mainland authors won the first place in two items

Pictures to be delivered

《吐血整理》C#一些常用的帮助类

【云享读书会第13期】音频文件的封装格式和编码格式

$router.back(-1)

JS uses extension operators (...) to simplify code and simplify array merging