[300+ Selected Interview Questions from Big Companies, Shared Continuously] Big Data Operations and Maintenance "Sharp Knife" Interview Question Column (III)

2022-07-01 15:27:00 【Big data Institute】

We keep sharing useful, valuable, hand-picked, high-quality big data interview questions.

We are committed to building the most comprehensive big data interview question bank on the web.

21. Why does installing HDFS in HA mode require a custom nameservice name? Why does Apache Hadoop not resolve the NameNode directly by IP address, and instead resolve the nameservice name configured in hdfs-site.xml to the corresponding addresses? A floating IP (for example with keepalived) can also provide active/standby switchover, so what is the advantage of the official nameservice approach?

Reference answer:

A high-availability cluster has two NameNodes, one Active and one Standby, and the two may switch roles. Only the Active NameNode serves clients, so we cannot know in advance which NameNode to contact. We therefore need a single nameservice to address. When a client accesses the NameNode through the nameservice, the client automatically determines which NameNode is Active, which reduces the burden on users.

A floating IP is a high-availability solution at the operations level, and it is too simple for the NameNode. Each DataNode has to keep connections to both NameNodes at the same time and report its data to both so that switchover is fast, and many states have to be verified during a NameNode failover, such as whether the EditLog is synchronized. An IP-based approach cannot check any of these.
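The client-side behaviour described above can be sketched as a toy simulation. This is not Hadoop's implementation: the `NameNode` and `NameserviceClient` classes, the `mycluster` nameservice name, and the host names are all hypothetical, and the real client does this through a failover proxy provider configured in hdfs-site.xml. The sketch only shows why callers can keep using one logical name across a failover.

```python
from dataclasses import dataclass


@dataclass
class NameNode:
    """Toy stand-in for a NameNode; `state` is 'active' or 'standby'."""
    address: str
    state: str

    def handle(self, request: str) -> str:
        # In this model a Standby NameNode refuses client operations.
        if self.state != "active":
            raise RuntimeError(f"{self.address} is in standby state")
        return f"{request} served by {self.address}"


class NameserviceClient:
    """Resolves a logical nameservice to whichever NameNode is Active."""

    def __init__(self, nameservices: dict):
        # Plays the role of the nameservice -> NameNode list in hdfs-site.xml.
        self.nameservices = nameservices

    def call(self, nameservice: str, request: str) -> str:
        for namenode in self.nameservices[nameservice]:
            try:
                return namenode.handle(request)
            except RuntimeError:
                continue  # try the next configured NameNode
        raise RuntimeError(f"no active NameNode for {nameservice}")


nn1 = NameNode("nn1.example.com:8020", "active")
nn2 = NameNode("nn2.example.com:8020", "standby")
client = NameserviceClient({"mycluster": [nn1, nn2]})
before = client.call("mycluster", "ls /")

# Simulate a failover: the roles swap, the calling code does not change.
nn1.state, nn2.state = "standby", "active"
after = client.call("mycluster", "ls /")
print(before)
print(after)
```

The caller only ever names `mycluster`; which physical NameNode answers is decided at call time.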

22. HDFS uploads and downloads are actually carried out by the client itself. In class, you said that deletion is not done by the client itself: the client sends the metadata of what needs to be deleted to the NameNode, and the deletion is then carried out through the heartbeat mechanism between the NameNode and the DataNodes. You have already explained the principles of adding and deleting. Could you also walk us through the principle of modifying HDFS file content, or show us the source code?

Reference answer:

In earlier courses the teacher shared source code, but students found it too difficult, so the teacher stopped. If you need it, the teacher can go through the source code for you again later and teach you some methods for reading and analyzing source code, so that you understand it better when you need it. Source code sharing was not originally within the scope of our course. The teacher does not read source code for no reason, only when it is needed. For example, the teacher has not read the code path for modifying HDFS file content.

23. Strictly speaking, MapReduce has no component of its own; as I understand it, it is just a computation model. Can we see the MapReduce computation process in YARN? Where exactly should we look?

Reference answer:

You can check a job's execution progress and metrics in the YARN Web UI. Click the link in the first column of the application list to see the details.
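Besides the Web UI, the ResourceManager also exposes the application list over its REST API, which is handy for scripting. The sketch below builds the URL of the Cluster Applications endpoint and parses a response. The host `rm.example.com`, the port 8088 (the usual ResourceManager web port, assumed here), and the sample payload are assumptions; the sample is hand-written to match the response shape and is not real cluster output.

```python
import json
from urllib.parse import urlencode


def build_apps_url(rm_host: str, rm_port: int = 8088) -> str:
    """URL of the ResourceManager Cluster Applications endpoint, MapReduce only."""
    query = urlencode({"applicationTypes": "MAPREDUCE"})
    return f"http://{rm_host}:{rm_port}/ws/v1/cluster/apps?{query}"


def summarize_apps(payload: str) -> list:
    """Extract (id, name, state, progress) tuples from the JSON response."""
    # "apps" is null when nothing matches, hence the `or {}`.
    apps = (json.loads(payload).get("apps") or {}).get("app", [])
    return [(a["id"], a["name"], a["state"], a["progress"]) for a in apps]


# Hand-written sample shaped like the API response; not real cluster output.
sample = json.dumps({"apps": {"app": [
    {"id": "application_0000000000000_0001", "name": "word count",
     "state": "RUNNING", "progress": 42.0},
]}})

url = build_apps_url("rm.example.com")
rows = summarize_apps(sample)
print(url)
for row in rows:
    print(row)
```

Against a live cluster, the payload would come from fetching `url` (for example with `urllib.request.urlopen`) instead of the sample string.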

24. Cloud-native technology is becoming more and more popular, and Kubernetes, the open source project hosted by the CNCF, keeps gaining ground. Will later courses cover running big data components on a Kubernetes cluster? Can you give us a preview?

Reference answer:

This term there are plans to cover a Flink on Kubernetes solution. It may be explained later in the course together with real cases, to make it easier to understand.

25. After tuning HDFS cluster parameters in a production environment, how do you restart smoothly under CDH?

Reference answer:

(1) Reduce the amount of data in each block report (BR). The main reason the NameNode handles BRs inefficiently is that each BR carries too many blocks. You can lower the block-count threshold so that one block report is split into several messages, one per storage directory, which improves NameNode processing efficiency. The relevant parameter is dfs.blockreport.split.threshold, whose default is 1,000,000. DataNodes in the current cluster hold between 240,000 and 940,000 blocks each, so the suggested value is 500,000;

(2) When DataNodes have to be restarted at scale (in terms of both cluster size and data volume), it is recommended to restart the NameNode after the DataNode processes have been restarted, to avoid the earlier "avalanche" problem;

(3) Control the number of DataNodes restarted at once. Given the current data volume per node, a large-scale DataNode restart can be done as a rolling restart: 15 instances per batch, with a 1-minute interval between batches. If the data volume grows, the number of instances per batch needs to be adjusted accordingly.
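The batching in step (3) can be sketched as follows. This is a minimal illustration, not a CDH tool: the `restart` callback is a hypothetical hook for whatever actually restarts a DataNode in your environment, and the host names are made up. The defaults mirror the numbers above (15 instances per batch, 60 seconds between batches).

```python
import time
from typing import Callable, Iterator, List, Sequence


def batches(hosts: Sequence[str], batch_size: int = 15) -> Iterator[List[str]]:
    """Yield the hosts in consecutive groups of at most `batch_size`."""
    for start in range(0, len(hosts), batch_size):
        yield list(hosts[start:start + batch_size])


def rolling_restart(hosts: Sequence[str], restart: Callable[[str], None],
                    batch_size: int = 15, interval_s: float = 60.0,
                    sleep: Callable[[float], None] = time.sleep) -> int:
    """Restart hosts batch by batch, pausing between batches; return batch count."""
    count = 0
    for group in batches(hosts, batch_size):
        if count:            # pause before every batch except the first
            sleep(interval_s)
        for host in group:
            restart(host)    # hypothetical hook into your cluster manager
        count += 1
    return count


# Dry run: record hosts instead of restarting, and record pauses instead of sleeping.
datanodes = [f"dn{i:03d}" for i in range(40)]
restarted, pauses = [], []
n = rolling_restart(datanodes, restarted.append, sleep=pauses.append)
print(n, len(restarted), pauses)
```

Passing `sleep` as a parameter keeps the dry run instant; in real use the default `time.sleep` applies the interval.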

26. If data skew is found in an existing cluster, how do you resolve HBase data skew in a production environment? What causes data skew? In other words, who is ultimately to blame: development, operations, or a software defect?

Reference answer:

Data skew is caused by an unreasonable rowkey design and has little to do with HBase itself. We will cover this in the HBase component operations and maintenance part of the course.
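To illustrate how rowkey design drives skew, here is a small sketch of one commonly used remedy, salting, where a hash-derived prefix spreads sequential keys across buckets. The answer above does not prescribe this technique; the bucket count, the key format, and the `salted_rowkey` helper are all assumptions for illustration, and the first two characters of the key stand in for the region a row would land in.

```python
import hashlib
from collections import Counter

NUM_BUCKETS = 8  # assumed number of salt buckets / pre-split regions


def salted_rowkey(key: str, buckets: int = NUM_BUCKETS) -> str:
    """Prefix the key with a stable two-digit bucket derived from its hash."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % buckets
    return f"{bucket:02d}_{key}"


# Sequential, timestamp-like keys: the classic hotspot pattern.
raw_keys = [f"20220701{i:06d}" for i in range(1000)]

# Count keys by their leading two characters.
raw_prefixes = Counter(k[:2] for k in raw_keys)
salted_prefixes = Counter(salted_rowkey(k)[:2] for k in raw_keys)
print(raw_prefixes)
print(sorted(salted_prefixes.items()))
```

All raw keys share one prefix, while the salted keys spread over the buckets. The trade-off is that a range scan over the original key order then has to query every bucket.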

27. How should a RowKey be designed in a production environment to be reasonable? Does a well-designed RowKey necessarily avoid data skew?

Reference answer:

We will cover this in the HBase component operations and maintenance part of the course.

28. Which Hadoop versions have been officially released so far? Among all Hadoop releases, how do you tell which one is the stable version, which is a beta, and which is a long-term support version?

Reference answer:

You can check the Latest news section of the official site, which has specific notes. A release marked stable there is a stable version. Whether a version is supported long-term depends on the features of that version; for this you may need to contact the project officially.

29. What is the relationship between the DataXceiver class and the DataNode? According to material found online, it is said to have nothing to do with lease expiry during file operations, but the descriptions are ambiguous. Teacher, could you explain it in plain language?

Reference answer:

First you need to know what DataXceiverServer is. DataXceiverServer is a background worker thread on the DataNode that receives data read/write requests and creates a separate thread to handle each request. The thread mentioned here is a DataXceiver.

In the source code, DataXceiver implements the Runnable interface, which shows that it runs as a thread, and it holds a reference to DataXceiverServer. Looking at DataXceiver's run method shows that it contains the processing logic for the requests that DataXceiverServer receives. In other words, DataXceiverServer accepts each data read/write request in the background, and the thread that actually processes it is a DataXceiver, which encapsulates the processing logic.
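The division of labour described above, one accepting thread that spawns one worker thread per request, can be sketched in a few lines. This is an analogy in Python, not the DataNode's Java code: the `Xceiver` and `XceiverServer` classes are hypothetical, a queue stands in for the network socket, and the request labels are illustrative.

```python
import queue
import threading


class Xceiver:
    """Handles exactly one request; analogous in role to DataXceiver."""

    def __init__(self, request: str, results: list, lock: threading.Lock):
        self.request, self.results, self.lock = request, results, lock

    def run(self) -> None:
        # The per-request processing logic lives here.
        with self.lock:
            self.results.append(f"done:{self.request}")


class XceiverServer(threading.Thread):
    """Accept loop; analogous in role to DataXceiverServer."""

    def __init__(self, incoming: queue.Queue):
        super().__init__(daemon=True)
        self.incoming = incoming
        self.results = []
        self.lock = threading.Lock()
        self.workers = []

    def run(self) -> None:
        while True:
            request = self.incoming.get()   # stands in for accepting a connection
            if request is None:             # sentinel: stop accepting
                break
            worker = threading.Thread(
                target=Xceiver(request, self.results, self.lock).run)
            worker.start()                  # one new thread per request
            self.workers.append(worker)
        for worker in self.workers:         # wait for in-flight requests
            worker.join()


incoming = queue.Queue()
server = XceiverServer(incoming)
server.start()
for op in ["READ_BLOCK", "WRITE_BLOCK", "READ_BLOCK"]:
    incoming.put(op)
incoming.put(None)
server.join()
print(sorted(server.results))
```

The server never processes a request itself; it only hands each one to a fresh worker, which is the relationship the answer describes between the two classes.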

30. Teacher, we have finished building CDH6 by following the video. How much load can an HDFS and HBase cluster bear, and how do we test it?

Reference answer:

HBase has its own stress-testing tool, PerformanceEvaluation. Some practical material can be shared later, and if necessary time can also be arranged to explain it to you.

Continuous sharing is useful 、 valuable 、 Selected high-quality big data interview questions

We are committed to building the most comprehensive big data interview topic database in the whole network

边栏推荐

- Flink 系例 之 TableAPI & SQL 与 MYSQL 分组统计

- 说明 | 华为云云商店「商品推荐榜」

- [antenna] [3] some shortcut keys of CST

- 常见健身器材EN ISO 20957认证标准有哪些

- opencv学习笔记六--图像拼接

- 《QT+PCL第九章》点云重建系列2

- 微信公众号订阅消息 wx-open-subscribe 的实现及闭坑指南

- "Qt+pcl Chapter 6" point cloud registration ICP Series 6

- 微服务追踪SQL(支持Isto管控下的gorm查询追踪)

- 【目标跟踪】|模板更新 时间上下文信息(UpdateNet)《Learning the Model Update for Siamese Trackers》

猜你喜欢

It's settled! 2022 Hainan secondary cost engineer examination time is determined! The registration channel has been opened!

Guide de conception matérielle du microcontrôleur s32k1xx

openssl客户端编程:一个不起眼的函数导致的SSL会话失败问题

【STM32学习】 基于STM32 USB存储设备的w25qxx自动判断容量检测

Qt+pcl Chapter 9 point cloud reconstruction Series 2

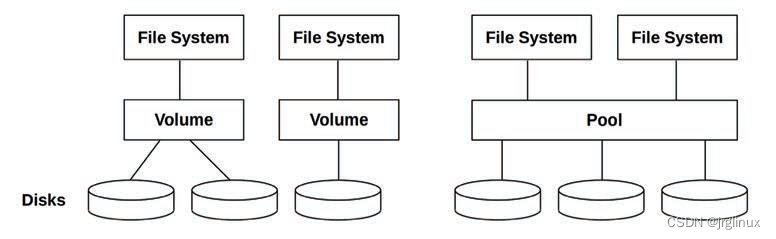

《性能之巅第2版》阅读笔记(五)--file-system监测

微服务追踪SQL(支持Isto管控下的gorm查询追踪)

点云重建方法汇总一(PCL-CGAL)

S32K1xx 微控制器的硬件設計指南

【ROS进阶篇】第五讲 ROS中的TF坐标变换

随机推荐

使用 csv 导入的方式在 SAP S/4HANA 里创建 employee 数据

微信小程序01-底部导航栏设置

What are the test items of juicer ul982

说明 | 华为云云商店「商品推荐榜」

Junda technology indoor air environment monitoring terminal PM2.5, temperature and humidity TVOC and other multi parameter monitoring

Summary of empty string judgment in the project

Fix the failure of idea global search shortcut (ctrl+shift+f)

[one day learning awk] conditions and cycles

Basic use process of cmake

Filter &(登录拦截)

Photoshop插件-HDR(二)-脚本开发-PS插件

榨汁机UL982测试项目有哪些

[stm32-usb-msc problem help] stm32f411ceu6 (Weact) +w25q64+usb-msc flash uses SPI2 to read out only 520kb

Is JPMorgan futures safe to open an account? What is the account opening method of JPMorgan futures company?

STM32F411 SPI2输出错误,PB15无脉冲调试记录【最后发现PB15与PB14短路】

Zhang Chi Consulting: lead lithium battery into six sigma consulting to reduce battery capacity attenuation

异常检测中的浅层模型与深度学习模型综述(A Unifying Review of Deep and Shallow Anomaly Detection)

Guide de conception matérielle du microcontrôleur s32k1xx

"Qt+pcl Chapter 6" point cloud registration ICP Series 6

Implementation of wechat web page subscription message