Overview: these are some learning notes written after working through the BERT-NER-pytorch project, found in a collection of open-source KG projects. They may be a useful reference for beginners who have just entered the field.

Resources: for an introduction to the Transformer inside the BERT model, the one thing that has to be shared is Jay Alammar's animated illustrations. Why didn't I come across such an excellent piece of work earlier?

I. Preparation

1. Dataset

How a dataset is organized and processed is one of the most important things in deep learning; it is fair to say that an algorithm engineer spends 80% of the time dealing with data. Now let's look at the dataset:

- How to obtain it: use the GitZip extension for Google Chrome to download it directly from the git repository.

The raw data consists of only 3 items.

The train set has 45,000 sentences and the test set has 3,442; we need to carve out the val set ourselves.

- Raw data format: here are the first 17 lines of msra_train_bio (176,042 lines in total). Each line holds one character and its tag; the Chinese characters appear here as per-character English glosses:

in B-ORG

common I-ORG

in I-ORG

Central I-ORG

Cause O

in B-ORG

countries I-ORG

Cause I-ORG

Male I-ORG

The party I-ORG

Ten I-ORG

One I-ORG

Big I-ORG

Of O

greetings O

word O

various O

- Tags (there are only three entity types: organization, person, location):

O

B-ORG

I-PER

B-PER

I-LOC

I-ORG

B-LOC

PS: As you can see, the data uses BIO tagging; we can of course switch to another scheme!
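To make the BIO scheme concrete, here is a minimal sketch that groups a tag sequence into entity spans. The function name `bio_to_entities` is hypothetical (it is not part of the project), and the tags are the 17 shown in the raw data excerpt above.

```python
from typing import List, Tuple


def bio_to_entities(tags: List[str]) -> List[Tuple[str, int, int]]:
    """Return (entity_type, start, end) spans from BIO tags; end is exclusive."""
    entities = []
    start, etype = None, None
    # A sentinel "O" at the end flushes a span that reaches the last token.
    for i, tag in enumerate(tags + ["O"]):
        continues = tag.startswith("I-") and etype == tag[2:]
        if start is not None and not continues:
            entities.append((etype, start, i))
            start, etype = None, None
        if tag.startswith("B-"):
            start, etype = i, tag[2:]
    return entities


# Tags of the first 17 lines of msra_train_bio, as listed in the excerpt.
first_17_tags = (
    ["B-ORG", "I-ORG", "I-ORG", "I-ORG", "O"]
    + ["B-ORG"] + ["I-ORG"] * 7
    + ["O", "O", "O", "O"]
)
entities = bio_to_entities(first_17_tags)
print(entities)  # two ORG spans: tokens 0-3 and tokens 5-12
```

An `I-` tag that does not follow a matching `B-` is simply ignored in this sketch; a stricter parser might treat it as the start of a new entity.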

- We split the dataset into three directories:

| Dataset | Number of sentences |
|---|---|
| training set | 42000 |
| validation set | 3000 |
| test set | 3442 |

This gives us three directories.
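The split can be sketched roughly as below. This is not the project's own preprocessing script: the function names are hypothetical, and two assumptions are made that the notes do not confirm, namely that sentences in the raw file are separated by blank lines and that the sentences are shuffled before the validation set is cut off.

```python
import random
from typing import List, Tuple

Sentence = Tuple[List[str], List[str]]  # (characters, tags)


def read_bio(lines: List[str]) -> List[Sentence]:
    """Group 'char tag' lines into sentences (assumes a blank line ends a sentence)."""
    sentences, chars, tags = [], [], []
    for line in lines:
        line = line.strip()
        if not line:
            if chars:
                sentences.append((chars, tags))
                chars, tags = [], []
            continue
        char, tag = line.split()
        chars.append(char)
        tags.append(tag)
    if chars:  # last sentence when the file has no trailing blank line
        sentences.append((chars, tags))
    return sentences


def split_train_val(sentences: List[Sentence], n_val: int, seed: int = 0):
    """Shuffle a copy with a fixed seed, then cut off n_val sentences for validation."""
    shuffled = sentences[:]
    random.Random(seed).shuffle(shuffled)
    return shuffled[n_val:], shuffled[:n_val]


def to_lines(sentences: List[Sentence]):
    """One sentence per line, tokens separated by spaces (sentences.txt / tags.txt style)."""
    return ([" ".join(c) for c, _ in sentences],
            [" ".join(t) for _, t in sentences])


toy = ["a B-ORG", "b I-ORG", "", "c O", "", "d B-PER", "e O"]
sentences = read_bio(toy)
train, val = split_train_val(sentences, n_val=1)
sent_lines, tag_lines = to_lines(sentences)
print(len(train), len(val), sent_lines, tag_lines)
```

With the real data, `n_val=3000` on the 45,000 training sentences would give the 42,000 / 3,000 split in the table.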

- Format after processing (an excerpt; one sentence per line, characters separated by spaces):

sentences.txt file:

Such as What Explain " foot The ball world Long period save stay Of various many Spear shield , heavy Vibration Once upon a time Japan tianjin door foot The ball Of Male wind , become by God tianjin foot The altar On Next Inside Outside To It's about discussion On Of word topic .

The county One hand Catch farmers trade Technology Technique PUSH wide , One hand Catch farmers civil Families, Technology teach Education and farmers Technology water flat Of carry high .

and gen new Of Turn off key Just yes know knowledge and Letter Rest Of raw production 、 Pass on seeding 、 send use .

The corresponding tags.txt file:

O O O O O O O O O O O O O O O O O O O O O B-LOC I-LOC O O O O O O O O B-LOC I-LOC O O O O O O O O O O O O O O

O O O O O O O O O O O O O O O O O O O O O O O O O O O O O O

O O O O O O O O O O O O O O O O O O O O O O O

2. Prepare the model and the pretrained model parameters

After many attempts, I found that the model parameters themselves are not hard to locate.

When I reproduced the code, however, I could not directly obtain the model parameters in PyTorch (pt) format, so getting the parameters became the problem to solve.

In train.py, the model is created with a line like this:

model = BertForTokenClassification.from_pretrained()

Let's step into the from_pretrained() method, which is defined in modeling.py in the pytorch_pretrained_bert directory.

You can pass either a path name or a URL to obtain the model and its parameters (a small episode came up later during training: I abandoned the file the author provided and used the archive linked from the package itself, as mentioned later):

PRETRAINED_MODEL_ARCHIVE_MAP = {

'bert-base-uncased': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased.tar.gz",

'bert-large-uncased': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-large-uncased.tar.gz",

'bert-base-cased': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-cased.tar.gz",

'bert-large-cased': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-large-cased.tar.gz",

'bert-base-multilingual-uncased': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-multilingual-uncased.tar.gz",

'bert-base-multilingual-cased': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-multilingual-cased.tar.gz",

'bert-base-chinese': "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese.tar.gz",

}

PS: I first obtained the TF model parameters and then converted them to PyTorch, which took a lot of effort. I downloaded convert_tf_checkpoint_to_pytorch.py from the Transformers library, put it in the TF model parameter directory, and after a few steps got the PyTorch .bin file. The good news is that it now takes a single command.
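The name-or-path lookup described above can be pictured with the following simplified sketch. It is an illustration only, not the library's code: `resolve_archive` is a hypothetical helper, and the map is cut down to a single entry from the table above.

```python
# One entry copied from PRETRAINED_MODEL_ARCHIVE_MAP for illustration.
ARCHIVE_MAP = {
    "bert-base-chinese": "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese.tar.gz",
}


def resolve_archive(name_or_path: str) -> str:
    """Known model names map to a download URL; anything else is treated as a path."""
    return ARCHIVE_MAP.get(name_or_path, name_or_path)


print(resolve_archive("bert-base-chinese"))  # URL from the map
print(resolve_archive("pt_things"))          # unchanged: used as a local directory
```

This is why passing a local directory such as `pt_things` (the default of `--bert_model_dir` in train.py) works once the converted .bin file is in place.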

3. Walkthrough of train.py

Dataset processing and hyperparameter settings are simple and not the focus of this article, so we won't record much about them here. If you want the details, see the GitHub project.

This file contains a lot:

① parse, params settings, logger

② dataloader, model, optimizer and train_and_evaluate

- Argument parsing (these are the command-line arguments passed when running the .py file)

parser = argparse.ArgumentParser()

parser.add_argument('--data_dir', default='NER-BERT-pytorch-data-msra', help="Directory containing the dataset")

parser.add_argument('--bert_model_dir', default='pt_things', help="Directory containing the BERT model in PyTorch")

……

Then, in main, parse the arguments into memory: args = parser.parse_args()

From then on, read each parameter as args.param.
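As a small self-contained sketch of this pattern: the first two arguments are the ones quoted above, while the default value of `--seed` and the `build_parser` wrapper are assumptions made only for this example.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser()
    parser.add_argument('--data_dir', default='NER-BERT-pytorch-data-msra',
                        help="Directory containing the dataset")
    parser.add_argument('--bert_model_dir', default='pt_things',
                        help="Directory containing the BERT model in PyTorch")
    # The default below is an assumption; the notes only show that args.seed exists.
    parser.add_argument('--seed', type=int, default=2019,
                        help="Random seed for initialization")
    return parser


# An explicit list stands in for the command line, so the example runs anywhere.
defaults = build_parser().parse_args([])
override = build_parser().parse_args(['--data_dir', 'my_data', '--seed', '7'])

print(defaults.data_dir, defaults.bert_model_dir)
print(override.data_dir, override.seed)
```

Calling `parse_args()` with no argument, as train.py does, reads `sys.argv` instead of the explicit list.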

- logger

The related handling lives in utils.py; train.py simply calls it:

# create the logger

utils.set_logger(os.path.join(args.model_dir, 'train.log'))

# when something needs to be recorded:

logging.info("device: {}, n_gpu: {}, 16-bits training: {}".format(params.device, params.n_gpu, args.fp16))

- model

Simple, just two lines:

model = BertForTokenClassification.from_pretrained(args.bert_model_dir, num_labels=len(params.tag2idx))

model.to(params.device)

These two lines cover both building the model and loading its parameters. During training, after the model config is printed, two more messages appear:

Weights of BertForTokenClassification not initialized from pretrained model: ['classifier.weight', 'classifier.bias']

Weights from pretrained model not used in BertForTokenClassification: ['cls.predictions.bias', 'cls.predictions.transform.dense.weight', 'cls.predictions.transform.dense.bias', 'cls.predictions.decoder.weight', 'cls.seq_relationship.weight', 'cls.seq_relationship.bias', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.transform.LayerNorm.bias']

(At first I took this for a failed parameter import and went looking for a fix.

I assumed the parameters in pytorch_model.bin did not match the model, so I downloaded the archive again from the link given in the source code, but the two messages were still there.

In the end, a forum thread found through Google explained that these two messages mean the parameters were loaded successfully.)

Mystery solved: the model consists of two parts, the pretrained BERT encoder (embeddings included) and the NER classification head. The pretrained parameters fill the BERT part; the classifier weights listed in the first message start untrained and have to be learned from our own NER data. The cls.* weights in the second message belong to BERT's pretraining heads, which this model does not use.
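The logic behind the two messages can be illustrated with plain Python sets. This is a simplified picture, not the library's loading code: `compare_keys` is a hypothetical helper, and the key lists are a small illustrative subset of the real parameter names.

```python
def compare_keys(model_keys, checkpoint_keys):
    """Compare parameter names expected by the model with those stored in the checkpoint."""
    model_keys, checkpoint_keys = set(model_keys), set(checkpoint_keys)
    not_initialized = sorted(model_keys - checkpoint_keys)  # model has them, checkpoint does not
    not_used = sorted(checkpoint_keys - model_keys)         # checkpoint has them, model does not
    loaded = sorted(model_keys & checkpoint_keys)           # shared names are loaded
    return not_initialized, not_used, loaded


# Illustrative subset of names only.
model_keys = [
    "bert.embeddings.position_embeddings.weight",
    "classifier.weight",
    "classifier.bias",
]
checkpoint_keys = [
    "bert.embeddings.position_embeddings.weight",
    "cls.predictions.bias",
    "cls.seq_relationship.weight",
]

not_initialized, not_used, loaded = compare_keys(model_keys, checkpoint_keys)
print("not initialized from pretrained model:", not_initialized)
print("not used from pretrained model:", not_used)
print("loaded:", loaded)
```

Both lists being non-empty is therefore expected when a pretraining checkpoint is loaded into a model with a new task head.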

Reading the model source code, layer by layer

Looking at the model (BertForTokenClassification):

There are three layers:

① BertModel

BertEmbeddings: contains several layers

(position_embeddings): Embedding(512, 768)

(token_type_embeddings): Embedding(2, 768)

(LayerNorm): BertLayerNorm()

(dropout): Dropout(p=0.1, inplace=False)

BertEncoder: a stack of 12 encoder layers, each of which is a BertLayer:

(attention): BertAttention # the most important part; be sure to study it when you get the chance

(intermediate): BertIntermediate

(output): BertOutput

BertPooler

② Dropout

③ Linear

- optimizer

full_finetuning

4. Single-machine multi-GPU parallelism and fp16

- Multi-GPU

Both the model and the data have to be placed on the GPUs;

① Specify the visible GPUs: os.environ["CUDA_VISIBLE_DEVICES"] = '1,2,3,0'

The logical device indices 0, 1, 2, 3 then correspond to physical GPUs 1, 2, 3, 0 (at the time, a senior labmate was using physical GPU 0 as his main card, so this ordering keeps that card, which had relatively little free memory, from being the primary device).

PS: by the time of the screenshot, he was no longer using it.

② Before preparing the model and data, add this line: params.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

Presumably the fallback after 'cuda' is there because the author does not want an error when no GPU is available……

Note: if you want to write an explicit 'cuda:[num]', point it at the first GPU in the list specified above. Here that is physical GPU 1, which PyTorch addresses as 'cuda:0' because the visible devices are renumbered from 0.
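The renumbering can be made explicit with a tiny sketch. It only parses the string and does not query any GPU; `logical_to_physical` is a hypothetical helper written for this note.

```python
def logical_to_physical(cuda_visible_devices: str) -> dict:
    """Map the device names seen inside the process to physical GPU ids."""
    ids = [int(x) for x in cuda_visible_devices.split(",") if x.strip()]
    return {f"cuda:{logical}": physical for logical, physical in enumerate(ids)}


mapping = logical_to_physical("1,2,3,0")
for name, physical in mapping.items():
    print(name, "-> physical GPU", physical)
```

With this setting, the primary device `cuda:0` is physical GPU 1, and physical GPU 0 only appears last, as `cuda:3`.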

③ Set the random seed on every GPU:

# set random seeds for reproducible experiments

random.seed(args.seed)

torch.manual_seed(args.seed) # seed for the CPU

if params.n_gpu > 0:

    torch.cuda.manual_seed_all(args.seed) # set random seed for all GPUs

PS: with a single GPU, drop the _all suffix (torch.cuda.manual_seed).

④ Put the model on multiple GPUs:

model = BertForTokenClassification.from_pretrained(args.bert_model_dir, num_labels=len(params.tag2idx))

model.to(params.device)

if params.n_gpu > 1 and args.multi_gpu:

    model = torch.nn.DataParallel(model)

⑤ Finally, put the data on the GPUs:

# Initialize the DataLoader

data_loader = DataLoader(args.data_dir, args.bert_model_dir, params, token_pad_idx=0)

# Load training data and test data

train_data = data_loader.load_data('train')

val_data = data_loader.load_data('val')

……

Before the train() call:

# data iterator for training

train_data_iterator = data_loader.data_iterator(train_data, shuffle=True)

# Train for one epoch on training set

train(model, train_data_iterator, optimizer, scheduler, params)

Inside data_iterator(), each batch is moved to the device:

# shift tensors to GPU if available

batch_data, batch_tags = batch_data.to(self.device), batch_tags.to(self.device)

yield batch_data, batch_tags

Here self.device is a parameter that the class receives.

- fp16

Reference: a CSDN post on the principle of fp16 acceleration

fp16 stores each number in 2 bytes

Advantages: lower memory usage (the main one) + faster computation

Disadvantage: additions overflow easily

(If I get the chance, I'll run a dedicated experiment on this)
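A quick way to see these properties without a GPU is Python's `struct` module, whose `e` format is IEEE 754 half precision. This is only a sketch of the number format, not of how PyTorch runs fp16 training; `to_fp16` and `fits_fp16` are hypothetical helpers.

```python
import struct


def to_fp16(x: float) -> float:
    """Round-trip a Python float through half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fits_fp16(x: float) -> bool:
    """True if x can be stored in half precision without overflowing."""
    try:
        struct.pack("<e", x)
        return True
    except OverflowError:
        return False


print(struct.calcsize("<e"))          # storage size in bytes
print(to_fp16(0.1))                   # stored value differs slightly from 0.1
print(to_fp16(2048.0 + 0.5))          # the small addend is lost to rounding
print(fits_fp16(40000.0))             # representable
print(fits_fp16(40000.0 + 40000.0))   # the sum exceeds the fp16 range
```

The last two lines show the overflow problem with additions: each operand fits, but their sum does not, since the largest finite fp16 value is 65504.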

5. Progress bar tool

Here each epoch runs 1,400 batches,

so the progress bar goes inside each epoch:

t = trange(params.train_steps)

for i in t:

    # fetch the next training batch

    batch_data, batch_tags = next(data_iterator)

    ……

    loss = model(~)

    ……

    t.set_postfix(loss='{:05.3f}'.format(loss_avg()))
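The `loss_avg()` call in the postfix suggests a callable running-average object. Its definition is not shown in these notes, so the class below is an assumption about what such a helper might look like, with made-up loss values standing in for real training steps.

```python
class RunningAverage:
    """Keeps the mean of all values seen so far; calling the object returns it."""

    def __init__(self):
        self.steps = 0
        self.total = 0.0

    def update(self, value: float) -> None:
        self.total += value
        self.steps += 1

    def __call__(self) -> float:
        return self.total / float(self.steps)


loss_avg = RunningAverage()
for loss in [2.0, 1.0, 0.6]:  # stand-ins for per-batch loss values
    loss_avg.update(loss)
    postfix = '{:05.3f}'.format(loss_avg())
    print(postfix)  # the string that would be shown next to the progress bar
```

Showing the running mean rather than the last batch loss gives a smoother number on the progress bar.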

6. Evaluation metrics library: metrics

Evaluation metrics you can use (just the tip of the iceberg):

from metrics import f1_score

from metrics import accuracy_score

from metrics import classification_report

Template:

metrics = {}

f1 = f1_score(true_tags, pred_tags)

accuracy=accuracy_score(true_tags, pred_tags)

metrics['loss'] = loss_avg()

metrics['f1'] = f1

metrics['accuracy']=accuracy

metrics_str = "; ".join("{}: {:05.2f}".format(k, v) for k, v in metrics.items())

logging.info("- {} metrics: ".format(mark) + metrics_str)

Result:

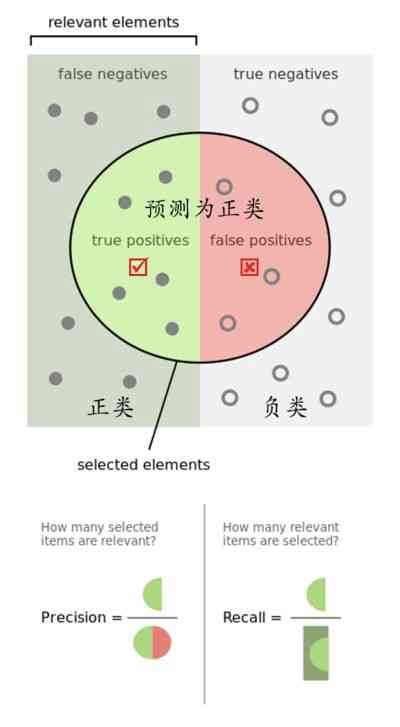

How do we tell accuracy and recall apart?

7. Problems that remain after many experiments

My F1 score always stays below 50, while accuracy stays around 97%. I have recently been grinding away at relation extraction, so I'm setting this part aside for now. I'll come back and complete it later.
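One possible explanation for this gap, not verified against the run described here, is that accuracy is counted per token while F1 is counted per entity. Because most tokens are O, token accuracy can stay high even when many entities have a wrong boundary. The sketch below uses hypothetical helper names and toy data, and assumes entity-level matching where a predicted entity counts only if type and both boundaries agree.

```python
def spans(tags):
    """Entity spans (type, start, end) from BIO tags; end is exclusive."""
    found, start, etype = [], None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel flushes the last span
        continues = tag.startswith("I-") and etype == tag[2:]
        if start is not None and not continues:
            found.append((etype, start, i))
            start, etype = None, None
        if tag.startswith("B-"):
            start, etype = i, tag[2:]
    return found


def token_accuracy(true, pred):
    return sum(t == p for t, p in zip(true, pred)) / len(true)


def entity_prf(true, pred):
    t, p = set(spans(true)), set(spans(pred))
    correct = len(t & p)
    precision = correct / len(p) if p else 0.0  # share of predicted entities that are right
    recall = correct / len(t) if t else 0.0     # share of true entities that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# 100 tokens, 2 true entities; the prediction cuts the LOC entity one token short.
true = ["O"] * 40 + ["B-LOC", "I-LOC"] + ["O"] * 40 + ["B-PER", "I-PER", "I-PER"] + ["O"] * 15
pred = ["O"] * 40 + ["B-LOC", "O"] + ["O"] * 40 + ["B-PER", "I-PER", "I-PER"] + ["O"] * 15

acc = token_accuracy(true, pred)
precision, recall, f1 = entity_prf(true, pred)
print(acc, precision, recall, f1)
```

A single wrong token leaves token accuracy at 99% but halves the entity-level F1, which is the same shape as the 97% versus below-50 gap reported above.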