当前位置:网站首页>Interesting erasure code

Interesting erasure code

2022-06-24 12:34:00 【Wang Lei -ai Foundation】

Redundant backup technology

Before learning about erasure codes, let's learn about the other two commonly used redundant backup technologies : raid and Multiple copies

RAID

RAID yes "Redundant Array of Independent Disk" Abbreviation , Independent redundant disk array Is an ancient disk redundant backup technology , Maybe you never understand the principle , But I must have heard of its name . Simply explain , Will be N Hard disks pass RAID Controller( branch Hardware,Software) Combined with a virtual single high-capacity hard disk , Its characteristic is N Both hard disks can be read faster and provide fault tolerance .

RAID Depending on the implementation technology, there are RAID0~9, RAID10 wait . What is used more at present is RAID 0, RAID 1, RAID 5, RAID 10.

This technology is very effective and reliable in protecting single node disk data , At the same time, there are special computer chips to support the realization of hardware RAID Algorithm , Improve efficiency and reduce resource footprint . Single use supports redundancy RAID When the algorithm , Single disk damage is caused by RAID Algorithm can be recovered , however 8T+ The recovery time of disk can be as long as several days . meanwhile RAID The flexibility of the algorithm in the cloud age is a little poor .

Multiple copies

Multi copy technology is relatively simple and direct , Since you want to redundantly protect critical data , Just save a few more , It doesn't matter how bad a single data is , There are also backups that can be used .

This technology can be seen everywhere in modern software systems , such as mysql Synchronization of / Asynchronous backup , Three copy storage in distributed database + Agreement of conformity .

The advantages and disadvantages of this method are also obvious , The advantage is high write efficiency , No extra calculations are required , Just save multiple copies directly , Data recovery is also fast , Just copy from the replica . The disadvantage is low storage efficiency , The disk capacity previously required is directly X2 perhaps X3 了 , Cost is very high .

erasure coding

ErasureCode( Erasure code ) Provide approximately three copies of reliability at a lower cost , Attract many distributed storage / Manufacturers and users of cloud storage . It can be said that erasure codes are cloud storage , Especially the core of object storage, which is widely used nowadays . Erasure code (Erasure Coding,EC) Is a coding fault tolerance technique , The earliest solution was to solve the problem of loss of some data in transmission in the communication industry . The basic principle is to segment the transmitted signal , Add a certain amount of verification, and then make the segments related to each other , Even if some signals are lost during transmission , The receiver can still calculate the complete information through the algorithm . In the data store , Erasure codes divide data into segments , Expand and encode redundant data blocks , And store it in different locations , For example, disk 、 Storage nodes or other geographical locations .

Now think about a question : Now there is 2 Copy of the data ( It is possible that a piece of data is actually divided into two parts ), Allow you to do 2 Redundancy of ( The actual use of storage is to store data (2+2)/2 = 2 times ), It is required to achieve such an effect : Any two copies of data are lost , Data can be recovered .

How to solve this problem . A simple idea is , Back up both data .

Store data as X1, X2, X3, X4 Respectively equal to A1, A2, A1, A2; Let's assume that the data X1 X2 lost , Data can be obtained from X3,X4 Recover from . But there are problems with this storage : If the missing data happens to be X1, X3 Well , So the original data A1 It is completely lost and can not be found . But you can use one of the following storage methods X1, X2 Still the same ,X3=A1+A2, X4=A1+2*A2 In this way, any two copies of data are lost , Can recover A1 and A2 了 .

This is the core principle of erasure code , Of course, it's not that simple , This algorithm is just a simplification . In practice, a method called RS Code method , According to the set m, n value , Generate a RS matrix , Store through RS The matrix calculates the check block , Single arbitrary m When block data is corrupted , Through the data blocks that still exist , and RS The inverse matrix of a part of the matrix of , All data can be recovered . Please refer to for specific calculation principle This article

Total data block = Raw data block + Check block namely n = k + m, Erasure code technology allows arbitrary in data storage m Recover data from a scene where blocks are damaged .

The practical application and improvement of erasure code

According to the introduction above , We know that the core parameter of erasure code is n and m The ratio of the , We call this value redundancy , The higher the redundancy , The more data blocks allowed to be corrupted , The safer it is , At the same time, the higher the cost of data storage . meanwhile k Value is also important , This value determines the granularity of data chunking ,k The smaller the value. , The smaller the data dispersion , The larger the fault influence surface is , The greater the cost of reconstruction ;k The bigger the value is. , Multiple copies of data increase the network and disk load , The greater the cost of reconstruction .

- EMC Object storage system ECS 12 + 4 and 10+2 The redundancy is 1.33 、 1.2

- Alibaba cloud Pangu cluster chunk Storage 8+3 Redundancy =1.375

- Google RS(6,3) in GFS II (Colossus)

Suppose we want to provide (k, m) = (12, 4) Redundancy of , The redundancy is (12+4)/12=1.33. There is a problem with using the method described above directly , When a single data is corrupted , You need from 12 Transfer data in data blocks for calculation , Network required IO It's big .Azure The improvement is ,12 The block is divided into two groups , Each group 6 block , First work out a local Check block , And then, on the whole, calculate 2 individual global Check block , This redundancy is still 1.33, But when a single data block is corrupted ( This is the most common situation in the data center ),IO From 12 Narrowed down to 6, Reduce the cost of data recovery .

actual combat

In open source object storage projects min.io The erasure code library used in is klauspost/reedsolomon, Let's use klauspost/reedsolomon Make a small tool , Demonstrate the redundancy backup effect of erasure correction code . Here we write a small program , Set up k/s Respectively 5,3 The redundancy is 1.6, I.e. delete at will 3 File , Data is always recoverable . The complete program is in here

var (

decode = flag.Bool("decode", false, "decode or not")

fileName = flag.String("file", "", "file to encode/decode")

encodePath = flag.String("encode_path", "", "folder to save encode files")

)

// usage

// ./main --file=./file//testfile.txt -encode_path=./encode

// ./main --file=./file//testfile_recover.txt -encode_path=./encode --decode

func main() {

flag.Parse()

if fileName == nil || encodePath == nil{

panic("need filename and encode path")

}

encoder, err := reedsolomon.New(5, 3)

if err != nil{

panic(err)

}

if *decode{

shards := make([][]byte, 8)

for i:= 0; i< 8; i ++{

encodeFile := path.Join(*encodePath, fmt.Sprintf("encode_%d", i))

data, err := ioutil.ReadFile(encodeFile)

if err == nil {

shards[i] = data

} else if os.IsNotExist(err){

continue

}else {

panic(err)

}

}

err = encoder.Reconstruct(shards)

if err != nil{

panic(err)

}

fmt.Printf("reconstruct data done\n")

f, err := os.OpenFile(*fileName, os.O_WRONLY|os.O_CREATE|os.O_TRUNC, 0644)

if err != nil{

panic(err)

}

dataSize := 0

for i := 0; i < 5; i++{

dataSize += len(shards[i])

}

err = encoder.Join(f, shards, dataSize)

if err != nil{

panic(err)

}

fmt.Printf("recover file success")

}else{

data, err := ioutil.ReadFile(*fileName)

if err != nil{

panic(err)

}

shards, err := encoder.Split(data)

if err != nil{

panic(err)

}

fmt.Printf("split data into 5+3=%d shards success.\n", len(shards))

err = encoder.Encode(shards)

if err != nil{

panic(err)

}

fmt.Printf("encode data success.")

err = os.MkdirAll(*encodePath, 0777)

if err != nil{

panic(err)

}

for i, s := range shards{

err := ioutil.WriteFile(path.Join(*encodePath, fmt.Sprintf("encode_%d", i)), s, 0644)

if err != nil{

panic(err)

}

}

}

}demonstration

# 1. First, let's try the storage effect * cat ./file//testfile.txt this is just a test file content is meaningless% * ./main --file=./file//testfile.txt -encode_path=./encode split data into 5+3=8 shards success. encode data success.% # Here we put the document testfile.txt Divided into 5 Share , add 3 Copies of verification data are stored ,encode The folder is the stored data content , We assume that in a real scene 7 Copies of data are stored on different nodes * ls ./encode encode_0 encode_1 encode_2 encode_3 encode_4 encode_5 encode_6 encode_7 # 2. Now we ( Because the disk of the node is damaged ) Lost one of them Any three copies of data * rm ./encode/encode_3 ./encode/encode_5 ./encode/encode_7 # 3. Try to recover data , It can be seen that , Data recovery was successful , encode file All recovered , and recover No problem with the raw data * ./main --file=./file//testfile_recover.txt -encode_path=./encode --decode reconstruct data done recover file success% * ls ./encode encode_0 encode_1 encode_2 encode_3 encode_4 encode_5 encode_6 encode_7 * cat ./file/testfile_recover.txt this is just a test file content is meaningless% # 4. Delete more data , Try to recover again , Recovery failures can be found : too few shards given * rm ./encode/encode_1 ./encode/encode_3 ./encode/encode_5 ./encode/encode_7 * ./main --file=./file//testfile_recover.txt -encode_path=./encode --decode panic: too few shards given

Reference resources

边栏推荐

- Tencent Youtu, together with Tencent security Tianyu and wechat, jointly launched an infringement protection scheme

- How is the e-commerce red envelope realized? For interview (typical high concurrency)

- Practice of dynamic load balancing based on open source tars

- What should music website SEO do?

- 嵌入式必学!硬件资源接口详解——基于ARM AM335X开发板 (上)

- Ten thousand campus developers play AI in a fancy way. It's enough to see this picture!

- Google hacking search engine attack and Prevention

- Istio practical skills: implement header based authorization

- Making daily menu applet with micro build low code

- Is it safe to open an account under the conditions of new bonds

猜你喜欢

Opencv learning notes - Discrete Fourier transform

Opencv learning notes - loading and saving images

Opencv learning notes -- Separation of color channels and multi-channel mixing

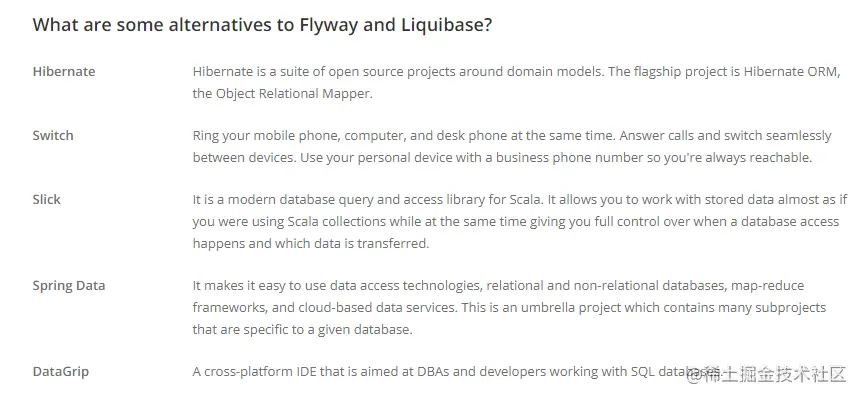

Database migration tool flyway vs liquibase (II)

巴比特 | 元宇宙每日必读:618成绩已然揭晓,在这份还算满意的答卷背后,数字藏品做出了多少贡献?...

How to write controller layer code gracefully?

《回归故里》阅读笔记

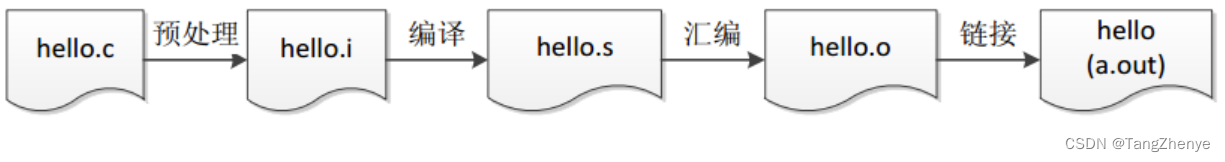

Linker --- linker

Installation and operation of libuv

Ten thousand campus developers play AI in a fancy way. It's enough to see this picture!

随机推荐

9+!通过深度学习从结直肠癌的组织学中预测淋巴结状态

How to calculate the bandwidth of video transmission? How much bandwidth is required to transmit 4K video?

How do websites and we media tap user needs? Deeply expose the secrets behind the keywords!

数据标注科普:十种常见的图像标注方法

Use go to process millions of requests per minute

Can Tencent's tendis take the place of redis?

Speculation London gold short-term stable money making skills? Where is it safe to fry London gold?

I'm in Shenzhen. Where can I open an account? Is it safe to open an account online now?

Data stack technology sharing: open source · data stack - extend flinksql to realize the join of flow and dimension tables

11+ article - machine learning builds Protics framework - deeply reveals the impact of tumor infiltrating immune cells in different molecular subtypes on prognosis

Istio practical skills: implement header based authorization

How to write controller layer code gracefully?

Opencv learning notes - matrix normalization normalize() function

A flaw in R markdown: folders cannot have Chinese

Tencent cloud and the ICT Institute launched the preparation of the "cloud native open source white paper" to deeply interpret cloud native

GTEST from getting started to getting started

11+的基于甲基化组和转录组综合分析识别葡萄膜黑色素瘤中新的预后 DNA 甲基化特征~

Examples of AES and RSA encryption operations implemented by php7.1

Detailed explanation of the execution order of the expression and loop body in the for loop

5分+的单基因泛癌纯生信思路!