
No clue about complex data?

2022-06-26 23:56:00 【Shengsi mindspire】

Datasets play a crucial role in training deep learning models. Because tasks vary so widely, the input data for deep learning models comes in many forms, and when the data is particularly complex, researchers may spend most of their time on dataset preprocessing (cleaning, loading, and so on). Loading datasets efficiently therefore makes for an efficient development workflow. Readers who have used PyTorch will know that the framework provides an extremely convenient and efficient interface for custom data loading: users only need to inherit from torch.utils.data.Dataset, implement the __getitem__ and __len__ methods, and wrap the result in a DataLoader to get automatic dataset loading (in my personal opinion, this is one of PyTorch's best features at the data level).

● Introduction to MindSpore dataset loading ●

In MindSpore, mindspore.dataset provides a large number of dataset-specific loading operators. These operators are optimized and offer good loading performance. However, because MindSpore performs data loading at the C language level, it is hard for users to see what is happening internally, especially for more complex datasets such as COCO (it is something of a black box and hard to master). I like to keep every step of model training in my own hands, so apart from classic datasets and structures such as cifar10, cifar100, and imagefolder, I try to implement the dataset loading process myself in order to understand the model and the data better. This blog therefore mainly introduces how to use MindSpore to build a custom dataset loading process in the PyTorch style.

● mindspore.dataset.GeneratorDataset ●

Unlike PyTorch, MindSpore does not build datasets by inheriting from a Dataset class. It does, however, provide a dataset wrapping interface similar to DataLoader. Users can define a dataset class derived from object and then wrap an instance of it with GeneratorDataset. Below I customize the cifar10 and ImageNet datasets to show how the GeneratorDataset interface is used.
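As a minimal sketch of this pattern: a random-access source only needs __getitem__ and __len__. The class name SquaresDataset, the column names "x" and "y", and the helper wrap_with_mindspore are hypothetical and chosen only for illustration; the GeneratorDataset(source=..., column_names=...) call is the same one used in the cifar10 example later in this article.

```python
class SquaresDataset:
    """Hypothetical toy dataset: sample i is the pair (i, i * i)."""

    def __init__(self, size):
        self.size = size

    def __getitem__(self, index):
        # Raising IndexError past the end lets plain Python iteration stop.
        if not 0 <= index < self.size:
            raise IndexError(index)
        return index, index * index

    def __len__(self):
        return self.size


def wrap_with_mindspore(source):
    """Wrap a random-access source; only works where MindSpore is installed."""
    from mindspore.dataset import GeneratorDataset  # imported lazily on purpose
    return GeneratorDataset(source=source, column_names=["x", "y"])


toy = SquaresDataset(4)
print(len(toy), list(toy))
```

The class can be exercised without MindSpore at all, which makes it easy to check each sample by hand before calling wrap_with_mindspore(toy).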

● Customizing the cifar10 dataset ●

Analyzing the format

Before defining a dataset, the first thing to do is analyze its format. The basic format is described on the official CIFAR website, and existing blogs also give code examples for reading cifar10. The figure below shows the files in the cifar-10-batches-py directory; here we mainly focus on data_batch and test_batch.
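To make the file format concrete, here is a small sketch that writes a synthetic stand-in for one batch file and reads it back the same way the loading code does. It assumes the documented CIFAR-10 layout: each image is one row of 3072 values, with the 1024 red values first, then green, then blue, each plane stored row by row. The helper names write_fake_batch and pixel are hypothetical, nested lists stand in for the NumPy array found in the real files, and the real files also carry extra keys such as batch_label and filenames.

```python
import os
import pickle
import tempfile

PIXELS = 32 * 32  # size of one colour plane of a 32x32 image


def write_fake_batch(path, num_images=2):
    """Write a tiny synthetic file with the same main keys as a CIFAR-10 batch."""
    entry = {
        # Image i has red = i, green = i + 10, blue = i + 20 everywhere.
        "data": [[i] * PIXELS + [i + 10] * PIXELS + [i + 20] * PIXELS
                 for i in range(num_images)],
        "labels": list(range(num_images)),
    }
    with open(path, "wb") as f:
        pickle.dump(entry, f)


def pixel(row, h, w):
    """Return the (R, G, B) triple at row h, column w of one flat 3072-value row."""
    offset = h * 32 + w
    return row[offset], row[PIXELS + offset], row[2 * PIXELS + offset]


with tempfile.TemporaryDirectory() as tmp:
    batch_path = os.path.join(tmp, "test_batch")
    write_fake_batch(batch_path)
    with open(batch_path, "rb") as f:
        entry = pickle.load(f, encoding="latin1")

print(sorted(entry.keys()))
print(len(entry["data"][0]), pixel(entry["data"][1], 5, 7))
```

The pixel helper does by hand what reshape(-1, 3, 32, 32) followed by transpose((0, 2, 3, 1)) does for the whole array in the loading code.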

Loading the data

Here I mainly use the cifar10 data loading code in torchvision as an example to explain how to build the cifar10 dataset.

train_list = [
    ['data_batch_1', 'c99cafc152244af753f735de768cd75f'],
    ['data_batch_2', 'd4bba439e000b95fd0a9bffe97cbabec'],
    ['data_batch_3', '54ebc095f3ab1f0389bbae665268c751'],
    ['data_batch_4', '634d18415352ddfa80567beed471001a'],
    ['data_batch_5', '482c414d41f54cd18b22e5b47cb7c3cb'],
]

test_list = [
    ['test_batch', '40351d587109b95175f43aff81a1287e'],
]

...

if self.train:
    downloaded_list = self.train_list
else:
    downloaded_list = self.test_list

...

for file_name, checksum in downloaded_list:
    file_path = os.path.join(self.root, self.base_folder, file_name)
    with open(file_path, 'rb') as f:
        entry = pickle.load(f, encoding='latin1')
        self.data.append(entry['data'])
        if 'labels' in entry:
            self.targets.extend(entry['labels'])
        else:
            self.targets.extend(entry['fine_labels'])

# Easy to understand: each dataset file has a "data" key and a "labels" key;
# just take them out separately.

self.data = np.vstack(self.data).reshape(-1, 3, 32, 32)
self.data = self.data.transpose((0, 2, 3, 1))  # convert to HWC

Constructing the cifar10 dataset and completing preprocessing

Because cifar10 is already in array form once it has been read, no image decoding is required, and the data can be processed directly with OpenCV or PIL. Here we take the cifar10 test data as an example.

import os
import pickle

import numpy as np
from PIL import Image  # needed by __getitem__ below

import mindspore
from mindspore.dataset import GeneratorDataset


class CIFAR10(object):
    train_list = [
        'data_batch_1',
        'data_batch_2',
        'data_batch_3',
        'data_batch_4',
        'data_batch_5',
    ]

    test_list = [
        'test_batch',
    ]

    def __init__(self, root, train, transform=None, target_transform=None):
        super(CIFAR10, self).__init__()
        self.root = root
        self.train = train  # training set or test set

        if self.train:
            downloaded_list = self.train_list
        else:
            downloaded_list = self.test_list

        self.data = []
        self.targets = []
        self.transform = transform
        self.target_transform = target_transform

        # now load the pickled numpy arrays
        for file_name in downloaded_list:
            file_path = os.path.join(self.root, file_name)
            with open(file_path, 'rb') as f:
                entry = pickle.load(f, encoding='latin1')
                self.data.append(entry['data'])
                if 'labels' in entry:
                    self.targets.extend(entry['labels'])
                else:
                    self.targets.extend(entry['fine_labels'])

        self.data = np.vstack(self.data).reshape(-1, 3, 32, 32)
        self.data = self.data.transpose((0, 2, 3, 1))  # convert to HWC

    def __getitem__(self, index):
        """
        Args:
            index (int): Index

        Returns:
            tuple: (image, target) where target is index of the target class.
        """
        img, target = self.data[index], self.targets[index]

        # doing this so that it is consistent with all other datasets
        # to return a PIL Image
        img = Image.fromarray(img)

        if self.transform is not None:
            img = self.transform(img)

        if self.target_transform is not None:
            target = self.target_transform(target)

        return img, target

    def __len__(self):
        return len(self.data)


cifar10_test = CIFAR10(root="./cifar10/cifar-10-batches-py", train=False)
cifar10_test = GeneratorDataset(source=cifar10_test, column_names=["image", "label"])
cifar10_test = cifar10_test.batch(128)

for data in cifar10_test.create_dict_iterator():
    print(data["image"].shape, data["label"].shape)

(128, 32, 32, 3) (128,)
(128, 32, 32, 3) (128,)
(128, 32, 32, 3) (128,)
(128, 32, 32, 3) (128,)

As the code above shows, although the style differs, MindSpore's GeneratorDataset still gives us a fairly convenient way to load datasets. For the preprocessing transform code, researchers can pass the transforms straight in through the transform parameter so that they are applied in the __getitem__ method, which is very convenient. Alternatively, in the MindSpore style, the dataset's built-in map function can be used to preprocess the dataset. The former is more Pythonic and is the approach I recommend.
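The recommended style, passing a transform into the dataset object so it runs per sample inside __getitem__, can be sketched without any framework. The class ToyImages and the transform scale_to_unit are hypothetical stand-ins, and flat lists of integers stand in for real image arrays.

```python
class ToyImages:
    """Hypothetical source holding tiny 'images' as flat lists of 0-255 ints."""

    def __init__(self, images, labels, transform=None):
        self.images = images
        self.labels = labels
        self.transform = transform

    def __getitem__(self, index):
        img = self.images[index]
        if self.transform is not None:
            img = self.transform(img)  # applied to one sample at a time
        return img, self.labels[index]

    def __len__(self):
        return len(self.images)


def scale_to_unit(img):
    """Hypothetical transform: map 0-255 integers to floats in [0, 1]."""
    return [value / 255.0 for value in img]


source = ToyImages([[0, 255], [51, 102]], [0, 1], transform=scale_to_unit)
print(source[0], source[1])
```

The map-based alternative would leave transform=None and instead call dataset.map(operations=..., input_columns=["image"]) on the wrapped dataset, in the same way the Mixup excerpt later in this article calls map.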

● Customizing ImageNet ●

Analyzing the format

Next comes the ImageNet dataset customization process. Defining an ImageNet dataset loader is actually very convenient: image classification datasets usually have a tree structure, so we only need to hand [path, label] or [image, label] pairs to the __getitem__ method to complete the dataset preprocessing.

Data loading

Here is a short excerpt of the folder-parsing code from timm.

# Excerpt: IMG_EXTENSIONS and natural_key are defined elsewhere in timm.
def find_images_and_targets(folder, types=IMG_EXTENSIONS, class_to_idx=None, leaf_name_only=True, sort=True):
    labels = []
    filenames = []
    for root, subdirs, files in os.walk(folder, topdown=False, followlinks=True):
        rel_path = os.path.relpath(root, folder) if (root != folder) else ''
        label = os.path.basename(rel_path) if leaf_name_only else rel_path.replace(os.path.sep, '_')
        for f in files:
            base, ext = os.path.splitext(f)
            if ext.lower() in types:
                filenames.append(os.path.join(root, f))
                labels.append(label)
    if class_to_idx is None:
        # building class index
        unique_labels = set(labels)
        sorted_labels = list(sorted(unique_labels, key=natural_key))
        class_to_idx = {c: idx for idx, c in enumerate(sorted_labels)}
    images_and_targets = [(f, class_to_idx[l]) for f, l in zip(filenames, labels) if l in class_to_idx]
    if sort:
        images_and_targets = sorted(images_and_targets, key=lambda k: natural_key(k[0]))
    return images_and_targets, class_to_idx

As you can see, we only need to traverse the directory and collect images_and_targets.
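For readers who want to try the idea without timm, here is a simplified, self-contained sketch. It is not timm's implementation: it assumes exactly one directory per class, uses plain alphabetical sorting instead of natural_key, and the names scan_image_folder and ImageFolder are hypothetical. The source returns raw file bytes and leaves decoding to a later transform.

```python
import os
import tempfile


def scan_image_folder(folder, extensions=(".jpg", ".jpeg", ".png")):
    """Return ([(path, class_index), ...], class_to_idx) for a class-per-directory tree."""
    classes = sorted(
        name for name in os.listdir(folder)
        if os.path.isdir(os.path.join(folder, name))
    )
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    samples = []
    for name in classes:
        class_dir = os.path.join(folder, name)
        for file_name in sorted(os.listdir(class_dir)):
            if os.path.splitext(file_name)[1].lower() in extensions:
                samples.append((os.path.join(class_dir, file_name), class_to_idx[name]))
    return samples, class_to_idx


class ImageFolder:
    """Random-access source that returns raw file bytes and a label."""

    def __init__(self, folder):
        self.samples, self.class_to_idx = scan_image_folder(folder)

    def __getitem__(self, index):
        path, label = self.samples[index]
        with open(path, "rb") as f:
            return f.read(), label  # decoding is left to a later transform

    def __len__(self):
        return len(self.samples)


# Build a throwaway tree with two classes to exercise the scanner.
with tempfile.TemporaryDirectory() as root:
    for cls, files in {"cat": ["a.jpg", "b.png"], "dog": ["c.jpg", "notes.txt"]}.items():
        os.makedirs(os.path.join(root, cls))
        for file_name in files:
            with open(os.path.join(root, cls, file_name), "wb") as f:
                f.write(b"fake")
    dataset = ImageFolder(root)
    summary = [(os.path.basename(p), y) for p, y in dataset.samples]
    first_item = dataset[0]

print(dataset.class_to_idx, summary, first_item)
```

Because the object implements __getitem__ and __len__, it has the same shape as the CIFAR10 class above and could be wrapped by GeneratorDataset in the same way.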

Using Mixup and Cutmix

Mixup and Cutmix data augmentation is often used on ImageNet. These augmentations, however, operate on batched data of shape [batch_size, channel, height, width], whereas the preprocessing in __getitem__ handles a single sample. In PyTorch, Mixup and Cutmix are applied after a batch has been fetched and before it enters the model. In MindSpore, we only need to call dataset.batch first and then apply the preprocessing to the batched dataset. For the full code, see my blog post on how to use MindSpore to implement automatic data augmentation (https://blog.csdn.net/qq_31768873/article/details/121283169); part of the code is shown here.
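Before the excerpt below, a framework-free sketch may help show why this step needs a whole batch. It implements the basic Mixup recipe (one Beta-distributed weight per batch, each sample blended with a randomly chosen partner, labels blended the same way) on plain Python lists. It is not the author's Mixup class, which also handles Cutmix and label smoothing; the function names and the alpha default are assumptions made for illustration.

```python
import random


def one_hot(label, num_classes):
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def mixup_batch(images, labels, num_classes, alpha=0.2, rng=random):
    """Blend every sample of a batch with a randomly chosen partner.

    images: list of flat float lists (one per sample); labels: list of ints.
    Returns the mixed images, the soft labels, and the mixing weight lam.
    """
    lam = rng.betavariate(alpha, alpha)   # one weight for the whole batch
    perm = list(range(len(images)))
    rng.shuffle(perm)                     # partner index for each sample
    targets = [one_hot(y, num_classes) for y in labels]
    mixed_images = [
        [lam * a + (1.0 - lam) * b for a, b in zip(images[i], images[j])]
        for i, j in enumerate(perm)
    ]
    mixed_labels = [
        [lam * a + (1.0 - lam) * b for a, b in zip(targets[i], targets[j])]
        for i, j in enumerate(perm)
    ]
    return mixed_images, mixed_labels, lam


batch_images = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
batch_labels = [0, 1, 2, 0]
mixed_x, mixed_y, lam = mixup_batch(batch_images, batch_labels, num_classes=3,
                                    rng=random.Random(0))
print(lam, mixed_x, mixed_y)
```

Since every output sample depends on another sample in the same batch, the function cannot live in a per-sample __getitem__, which is why it is applied after batching.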

if (mix_up > 0. or cutmix > 0.) and not is_training:
    # if use mixup and not training(False), one hot val data label
    one_hot = C.OneHot(num_classes=num_classes)
    dataset = dataset.map(input_columns="label", num_parallel_workers=num_parallel_workers,
                          operations=one_hot)
dataset = dataset.batch(batch_size, drop_remainder=True, num_parallel_workers=num_parallel_workers)

if (mix_up > 0. or cutmix > 0.) and is_training:
    mixup_fn = Mixup(
        mixup_alpha=mix_up, cutmix_alpha=cutmix, cutmix_minmax=None,
        prob=mixup_prob, switch_prob=switch_prob, mode=mixup_mode,
        label_smoothing=label_smoothing, num_classes=num_classes)
    dataset = dataset.map(operations=mixup_fn, input_columns=["image", "label"],
                          num_parallel_workers=num_parallel_workers)

return dataset

● FAQ ●

When customizing a dataset, be sure to implement the __len__ method. Without it, the object cannot report the size of the dataset.
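The plain-Python side of this is easy to check. The sketch below only shows what len() does on an object with and without __len__; it makes no claim about how MindSpore reacts internally, and the class and function names are hypothetical.

```python
class NoLength:
    """Hypothetical source that defines __getitem__ but forgets __len__."""

    def __init__(self, items):
        self.items = items

    def __getitem__(self, index):
        return self.items[index]


class WithLength(NoLength):
    def __len__(self):
        return len(self.items)


def describe(source):
    """Report the size of a source, or explain why it is unknown."""
    try:
        return f"{type(source).__name__}: {len(source)} samples"
    except TypeError as err:
        return f"{type(source).__name__}: size unknown ({err})"


print(describe(NoLength([1, 2, 3])))
print(describe(WithLength([1, 2, 3])))
```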

● Summary ●

This article explained how to use the GeneratorDataset interface to build custom MindSpore datasets. Although MindSpore provides easy-to-use dedicated dataset operators, the data loading is done at the C language level, so compared with torchvision it has the drawback that the internals are not visible to the user. You can therefore try custom loading with GeneratorDataset to keep a grip on the details of every step. (Of course, you can also simply carry the code over from torchvision and wrap it with GeneratorDataset.)

MindSpore Official information

GitHub : https://github.com/mindspore-ai/mindspore

Gitee : https://gitee.com/mindspore/mindspore

Official QQ group : 486831414

边栏推荐

- Why does EDR need defense in depth to combat ransomware?

- Leetcode 718. 最长重复子数组(暴力枚举,待解决)

- Crawler and Middleware of go language

- Serial port debugging tool mobaxtermdownload

- How to open an account on the mobile phone? Is it safe to open an account online and speculate in stocks

- In the Internet industry, there are many certificates with high gold content. How many do you have?

- Technical dry goods | top speed, top intelligence and minimalist mindspore Lite: help Huawei watch become more intelligent

- [strong foundation program] video of calculus in mathematics and Physics Competition

- 【leetcode】275. H index II

- 炒股手机上开户可靠吗? 网上开户炒股安全吗

猜你喜欢

一篇文章带你学会容器逃逸

固有色和环境色

Unity初学者肯定能用得上的50个小技巧

The fourth bullet of redis interview eight part essay (end)

浅谈分布式系统开发技术中的CAP定理

Cvpr2022 stereo matching of asymmetric resolution images

![[microservices] Understanding microservices](/img/62/e826e692e7fd6e6e8dab2baa4dd170.png)

[microservices] Understanding microservices

ASP. Net core create MVC project upload file (buffer mode)

Service discovery, storage engine and static website of go language

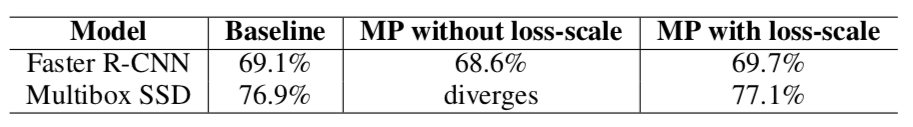

全网最全的混合精度训练原理

随机推荐

[微服务]认识微服务

【Try to Hack】正向shell和反向shell

让敏捷回归本源——读《敏捷整洁之道》有感

How to open an account on the mobile phone? Is it safe to open an account online and speculate in stocks

Why does EDR need defense in depth to combat ransomware?

我的c语言进阶学习笔记 ----- 关键字

Installing MySQL on Ubuntu

[try to hack] forward shell and reverse shell

新股民如何网上开户 网上开户炒股安全吗

Leetcode 718. Longest repeating subarray (violence enumeration, to be solved)

Where is it safer to open an account to buy funds

Safe and cost-effective payment in Thailand

消息队列简介

Intrusion trace cleaning

浅谈分布式系统开发技术中的CAP定理

客户端实现client.go客户端类型定义连接

Is it safe to buy pension insurance online? Is there a policy?

您的连接不是私密连接

有哪些劵商推荐?现在在线开户安全么?

运筹说 第66期|贝尔曼也有“演讲恐惧症”?