当前位置:网站首页>First knowledge of spark - 7000 words +15 diagrams, and learn the basic knowledge of spark

First knowledge of spark - 7000 words +15 diagrams, and learn the basic knowledge of spark

2022-07-04 11:32:00 【The way of several people】

Outline of the article

Spark yes UC Berkeley AMP Lab Open source general distributed parallel computing framework , It has become Apache Software Foundation's top open source project .

For the following reasons ,Spark Has become a data industry practitioner ( Especially in the field of big data ) A member of the inevitable technology stack :

- Spark It has become a necessary computing engine framework in the field of big data

- Spark It has basically replaced the traditional MapReduce Offline computing framework and Storm Streaming real time computing framework

- Spark In Data Science 、 Machine learning and AI And other popular technical directions continue to make efforts

Following pair Spark Characteristics of 、 contrast Hadoop The advantages of 、 Learn basic knowledge such as composition module and operation principle .

1

Spark Characteristics of

chart 1:Apache The official website describes Spark characteristic

Apache After revision Spark The official website uses four words to describe Spark Characteristics of :Simple. Fast. Scalable. Unified.

1.1

Simple( Simple and easy to use )

Spark It provides a wealth of advanced operations , Support rich operators , And support Java、Python、Scala、R、SQL Languages like API, Enables users to quickly build different applications .

Developers simply call Spark Packaged API To achieve it , No need for attention Spark The underlying architecture of .

1.2

Fast( Efficient and fast )

Spark Each task processed is constructed into a DAG(Directed Acyclic Graph, Directed acyclic graph ) To execute , The principle of implementation is based on RDD(Resilient Distributed Dataset, Elastic distributed data sets ) Iterate over the data in memory , To achieve high-performance and fast computing processing of batch and streaming data .

Previous official data showed : If the calculation data is read from disk ,Spark The computing speed is MapReduce Of 10 More than times ; If the calculation data is read from memory ,Spark The calculation speed is MapReduce Of 100 More than times .

The current official website has removed this data , It is estimated that the statistical scenarios and data are biased , Not comprehensive enough . But it also shows from the side ,Spark It is an indisputable fact that it has excellent computing performance , There is no need to use data to prove .

1.3

Scalable( Fusibility )

Spark It can be easily integrated with other open source products . such as :Spark have access to Hadoop Of YARN and Apache Mesos As its resource manager and scheduler ; Can handle all of Hadoop Supported data , Include HDFS、HBase and Cassandra etc.

This is for deployed Hadoop The users of the cluster are particularly important , Because you don't need to do any data migration to use Spark Powerful computing power .

Spark It can also be independent of third-party resource management and schedulers , It has achieved Standalone As its built-in resource management and scheduling framework , This further reduces Spark Use threshold of .

Users can flexibly choose the operation mode according to the existing big data platform , Make it very easy for everyone to deploy and use Spark.

Spark Also provided in EC2 Upper Department Standalone Of Spark Clustering tools .

Besides , because Spark It's using Scala Developed by this functional programming language , therefore Spark Also inherited Scala extensibility , You can type data structures 、 Control body structure and so on .

1.4

Unified( Unified and universal )

The traditional scheme of big data processing needs to maintain multiple platforms , such as , Offline tasks are placed on Hadoop MapRedue Up operation , The task of real-time stream computing is to Storm Up operation .

and Spark It provides a one-stop unified solution , Can be used for batch processing 、 Interactive query (Spark SQL)、 Real time streaming (Spark Streaming)、 machine learning (Spark MLlib) And graph calculation (GraphX) etc. . These different types of processing can be seamlessly combined in the same application .

Spark A unified solution is very attractive , After all, any company wants to use a unified platform to deal with the problems encountered , Reduce the human cost of development and maintenance and the physical cost of deploying the platform .

2

Spark The advantages of

here Spark The advantages of , It's the contrast Hadoop Of MapReduce for , therefore , We need to see first MapReduce Limitations and shortcomings of :

- The intermediate result of the calculation is placed in HDFS File system , inefficiency

- High delay , Applicable only Batch Data processing , For interactive data processing 、 The support of real-time data processing is not enough

- The level of abstraction is low , It needs to be done by hand , Hard to use

- Only two operations are available ,Map and Reduce, Lack of expression , And ReduceTask Need to wait for all MapTask It's all done before we start

- One Job Only Map and Reduce Two phases , Complex computing requires a lot of Job complete ,Job The dependencies are managed by the developers themselves

- The processing logic is hidden in the code details , There's no global logic

- For iterative data processing, the performance is poor , Low fault tolerance

for instance , use MapReduce Implement two tables of Join It's a very technical process , As shown in the figure below :

chart 2-1: Use MapReduce Realization Join The process of

stay Spark in ,MapReduce These limitations and deficiencies can find a good solution , and Spark Have MapReduce All the advantages of , This is it. Spark The advantage of .

2.1

High performance (*)

Hadoop MapReduce The intermediate results of each calculation are stored in HDFS On disk ; and Spark The intermediate results can be saved in memory , Data processing in memory , If there is no more memory, it will be written to the local disk , instead of HDFS.

Spark You can split the flow into small batch, To provide Discretized Stream Handle interactive real-time data .

therefore ,Spark It can solve the problems listed above MapReduce Of the 1、2 A question .

2.2

Easy to use (**)

Spark Introduced based on RDD The abstraction of , The code of data processing logic is very short , And provides a wealth of Transformation( transformation , Used to create a new RDD) and Action( perform , Used to deal with RDD Make actual calculations ) Operation and corresponding operator , Many basic operations ( Such as filter, union, join, groupby, reduce) Have been in RDD Of Transformation and Action To realize .

Spark One of them Job Can contain RDD The multiple Transformation operation , During scheduling, multiple Stage( Stage ).

RDD The internal data set is logically and physically divided into multiple Partitions( Partition ), every last Partition The data in can be executed in a single task , and Partition Different Transformation Operation requires Shuffle, Divided into different Stage in , Wait for the one in front Stage Only when it's done can we start .

stay Spark The use of Scala In language , Through anonymous functions and higher-order functions ,RDD Supports streaming API, Can provide an overall view of the processing logic . The code does not contain the implementation details of specific operations , Logic is clearer .

therefore ,Spark It can solve the problems listed above MapReduce Of the 3-6 A question .

2.3

High fault tolerance (**)

Spark Introduced RDD, It is a read-only elastic distributed data set distributed in a group of nodes , Want to update RDD Data in partition , Then only the original RDD Conduct Transformation operation , In the original RDD Create a new one based on RDD.

such , In the process of task calculation , each RDD There will be a dependent relationship between them .

When an exception occurs in the operation, resulting in the loss of partition data , According to “ ancestry ”(Lineage) Relationships rebuild data , Not for the first RDD Recalculate partition data .

Spark There is still CheckPoint Mechanism , This is a snapshot based caching mechanism , If in the task operation , Use the same... Multiple times RDD, You can put this RDD Cache processing , In the subsequent use of this RDD when , There is no need to recalculate .

chart 2-2:Spark CheckPoint Mechanism

Pictured 2-2 Shown , Yes RDD-b Do snapshot cache processing , So when RDD-n In the use of RDD-b When the data is , There is no need to recalculate RDD-b, But directly from Cache( cache ) Place RDD-b The data of .

CheckPoint Through redundant data and log update operation , Yes “ ancestry ” Fault tolerant assistance for detection , avoid “ ancestry ” The cost of fault tolerance is too high due to too long .

adopt Spark The above mechanism , It can improve the performance and fault tolerance of iterative computing , Solve the problems listed above MapReduce Of the 7 A question .

3

Spark The ecosystem ( Component module )

Spark The ecosystem contains multiple tightly integrated module components , These modules are closely combined and can be called each other . at present ,Spark The ecosystem has evolved from big data computing and data mining , Extend to machine learning 、NLP、 Speech recognition and so on .

chart 3-1:Apache Spark ecosystem

Spark Supporting application scenarios with multiple components , Its presence Spark Core On the basis of the Spark SQL、Spark Streaming、MLlib、GraphX、SparkR And other core components .

Spark SQL Designed to bring familiar SQL Database query language is combined with more complex algorithm based analysis ,Spark Streaming For real time flow calculation ,MLlib Applied to the field of machine learning ,GraphX Applied to graph calculation ,SparkR Used to deal with R Data calculation of language .

Spark Support for multiple programming languages , Include Java、Python(PySpark)、R(SparkR) and Scala.

Spark In the computing resource scheduling layer Local Pattern 、Standalone Pattern 、YARN Pattern 、Mesos Patterns, etc .

Spark Support a variety of storage media , In the storage layer Spark Support from the HDFS、HBase、Hive、ES、MongoDB、MySQL、PostgreSQL、AWS、Ali Cloud And other different storage systems 、 Big database 、 Read and write data in relational database , In real-time stream computing, we can learn from Flume、Kafka And other data sources to obtain data and perform stream computing .

Spark It also supports very rich data file formats , such as txt、json、csv etc. . It also supports parquet、orc、avro Equiform , These formats have obvious advantages in data compression and massive data query .

3.1

Spark Core

Spark Core Realized Spark Basic core functions , It includes the following parts :

chart 3-2:Spark Core structure

3.1.1. Spark Basic configuration

- SparkConf : Used for definition Spark Application Configuration information .

- SparkContext :Spark Application The main entry point for all functions , It hides network communication 、 Message communication , Distributed deployment 、 Storage system 、 Computing storage and other underlying logic , Developers only need to use the API Can finish Application Submission and execution of . The core function is initialization Spark Application Components needed , At the same time, he is also responsible for Master Process registration, etc .

- SparkRPC : be based on Netty Realized Spark RPC Framework for Spark Network communication between components , It can be divided into asynchronous and synchronous modes .

- SparkEnv :Spark Execution environment , It encapsulates a lot of Spark The basic environment components needed to run .

- ListenerBus : Event bus , It is mainly used for SparkContext Event interaction between internal components , It belongs to listener mode , Using asynchronous calls .

- MetricsSystem : Measurement system , For the whole Spark Operation monitoring of the status of each component in the cluster .

3.1.2. Spark The storage system

Spark The storage system is used to manage Spark The storage mode and location of the data relied on in the operation . The storage system will give priority to storing data in the memory of each node , Write data to disk when out of memory , This is also Spark An important reason for high computing performance .

The boundary between memory and disk where data is stored can be flexibly controlled , At the same time, the result can be output to remote storage through remote network call , such as HDFS、HBase etc. .

3.1.3. Spark Scheduling system

Spark The dispatching system is mainly composed of DAGScheduler and TaskScheduler form .

- DAGScheduler: Responsible for creating Job, Put one Job according to RDD The dependency between , Divided into different Stage in , And divide each Stage Are abstracted into one or more Task Composed of TaskSet, Batch submit to TaskScheduler For further task scheduling .

- TaskScheduler: Be responsible for each specific according to the scheduling algorithm Task Perform batch scheduling execution , Coordinate physical resources , Track and get status results .

The main scheduling algorithms are FIFO、FAIR.

- FIFO Dispatch : fifo , This is a Spark Default scheduling mode .

- FAIR Dispatch : Support grouping jobs into pools , And set different scheduling weights for each pool , Tasks can be executed in order of weight .

3.1.4. Spark Calculation engine

Spark The computing engine is mainly composed of memory manager 、TaskSet Manager 、Task Manager 、Shuffle Manager, etc .

3.2

Spark SQL

Spark SQL yes Spark Packages for manipulating structured data , It provides based on SQL、Hive SQL、 With the traditional RDD Data processing method combined with programmed data operation , It makes distributed data set processing simpler , This is also Spark It is widely used for important reasons .

At present, an important evaluation index of big data related computing engine is : Do you support SQL, This will lower the threshold of users .Spark SQL Provides two abstract data sets :DataFrame and DataSet.

- DataFrame:Spark SQL The abstraction of structured data , It can be simply understood as Spark In the table , be relative to RDD More data table structure information , Is distributed Row Set , Provides a ratio RDD Richer operators , At the same time, it improves the efficiency of data execution .

- DataSet: A distributed collection of data , have RDD The advantages of strong typing and Spark SQL The advantages of optimized execution . This can be done by jvm Object building , And then use map、filter、flatmap etc. Transformation operation .

3.3

Spark Streaming

Spark Streaming It provides the method of streaming calculation for real-time data API, Support scalable and fault tolerant processing of streaming data , It can be done with Kafka、Flume、TCP And other streaming data sources .

Spark Streaming The implementation of the , Also used RDD Abstract concept , Enable writing for stream data Application Time is more convenient .

Besides ,Spark Streaming It also provides batch flow operation based on time window , It is used to perform batch processing on stream data within a certain time period .

3.4

MLlib

Spark MLlib As a provider of common machine learning (ML) Function library , Including classification 、 Return to 、 Clustering and other machine learning algorithms , Its easy to use API The interface reduces the threshold of machine learning .

3.5

GraphX

GraphX For distributed graph calculation , For example, a friend relationship graph that can be used to operate social networks , What can be provided through it API Quickly solve common problems in graph calculation .

3.6

PySpark

For use Spark Support Python,Apache Spark The community released a tool PySpark. Use PySpark, You can use Python In programming language RDD .

PySpark Provides PySpark Shell , It will Python API link to Spark Core and initialize SparkContext.

3.7

SparkR

SparkR It's a R Language pack , Provides lightweight based R Language use Spark The way , Make based on R Language makes it easier to deal with large-scale data sets .

4

Spark Operating principle

Let's introduce Spark Operation mode and architecture of .

4.1

Spark The mode of operation of (**)

Spark The bottom layer of is designed to efficiently perform scalable computing between one to thousands of nodes . In order to achieve this demand , Get maximum flexibility at the same time ,Spark Support running on various cluster managers .

Spark There are mainly the following modes of operation :

chart 4-1-1:Spark Operation mode

except Local It is outside the local mode ,Standalone、YARN、Mesos、Cloud It's all cluster mode , You need to build a cluster environment to run .

4.2

Spark Cluster architecture and role (**)

Spark The cluster architecture of is mainly composed of Cluster Manager( Cluster explorer )、Worker ( Work node )、Executor( actuator )、Driver( Driver )、Application( Applications ) There are five parts in total , As shown in the figure below :

chart 4-2-1:Spark Cluster architecture

The following is a brief introduction to each part of the role .

4.2.1. Cluster Manager

Cluster Manager yes Spark Cluster resource manager of , Exist in Master In progress , It is mainly used to manage and allocate the resources of the whole cluster , According to the different deployment modes , Can be divided into Local、Standalone、YARN、Mesos、Cloud Equal mode .

4.2.2. Worker

Worker yes Spark Working nodes of , Used to perform submitted tasks , His main responsibilities are as follows :

- Worker Node through the register machine to Cluster Manager Report your own CPU、 Memory and other resource usage information .

- Worker Nodes in the Spark Master Under the instruction of , Create and enable Executor( Real computing units ).

- Spark Master Combine resources with Task Assigned to Worker nodes Executor And carry out the application of .

- Worker Node synchronization Executor Status and resource information to Cluster Manager.

chart 4-2-2:Spark Worker Node working mechanism

stay YARN Run in cluster mode Worker Nodes generally refer to NodeManager node ,Standalone Running in mode generally means slave node .

4.2.3. Executor

Executor It's a component that actually performs computing tasks , yes Application Running on the Worker Last process . This process is responsible for Task Operation of , And save the data in memory or disk storage , It can also return the result data to Driver.

4.2.4. Application

Application Is based on Spark API Written applications , Including the realization of Driver The code of the function and each in the cluster Executor Code to execute on .

One Application By multiple Jobs form .

among Application The entry of is user-defined main() Method .

4.2.5. Driver

Driver yes Spark Drive node of , Can run in Application Node , Also can be Application Submit to Cluster Manager, Again by Cluster Manager arrange Worker Run , His main responsibilities include :

- Application adopt Driver Follow Cluster Manager And Executor communicate ;

- function Application Medium main() function ;

- establish SparkContext;

- Divide RDD And generate DAG;

- establish Job And will each Job Split into multiple Stage, Every Stage By multiple Task constitute , Also known as Task Set;

- Generate and send Task To Executor;

- In all Executor Inter process coordination Task The scheduling ;

- And Spark Resource coordination with other components in .

4.3

Worker Operation disassembly (***)

chart 4-3-1:Worker Disassembly of internal operation process

Spark One of them Worker You can run one or more Executor.

One Executor It can run Task The number depends on Executor Of Core Number , Default one Task Take up one Core.

4.3.1. Job

RDD Of Transformation The operation process adopts inert computer system , It doesn't work out immediately . When it really triggers Action In operation , Will execute the calculation , Produce a Job.

Job It's made up of many Stage Built parallel computing tasks .

One Job Contains multiple RDD And acting on RDD The various operators of .

4.3.2. RDD rely on

RDD(Resilient Distributed Dataset, Elastic distributed data sets ) yes Spark The most important concept in , yes Spark A basic abstraction of all data processing , It represents an immutable 、 Divisible 、 A collection of data whose elements can be computed in parallel .

RDD It has the characteristics of data flow model : Automatic fault tolerance 、 Location aware scheduling and scalability .

stay Spark Through a series of operator pairs RDD To operate , It is mainly divided into Transformation( transformation ) and Action( perform ) Two kinds of operations :

- Transformation: To what already exists RDD Transform to generate new RDD, The conversion process adopts the inert evaluation computer system , The actual conversion will not be triggered immediately , But first record RDD The transformational relationship between , Only when triggered Action The conversion will be really executed when the operation , And return the calculation results , In order to avoid all operations are performed once , Reduce data calculation steps , Improve Spark Operational efficiency . Common methods are map、filter、flatmap、union、groupByKey etc. .

- Action: Mandatory evaluation must be used RDD The conversion operation of , Perform actual calculations on the dataset , And return the final calculation result to Driver Program , Or write to external storage . Commonly used methods are reduce、collect、count、countByKey、 saveAsTextFile etc. .

chart 4-3-2:RDD Operation process

because RDD Is a read-only elastic partitioned dataset , If the RDD Change the data in , You can only go through Transformation operation , By one or more RDD Calculate to generate a new RDD, therefore RDD A similar assembly line will be formed between (Pipeline) Before and after dependencies , The one in front is called the father RDD, The latter is called the son RDD

When abnormal conditions occur in the calculation process, some Partition When data is lost ,Spark This dependency can be obtained from the parent RDD Recalculate lost partition data in , It doesn't need to be right RDD Recalculate all partitions in , To improve iterative computing performance .

RDD The dependencies between them are divided into Narrow Dependency( Narrow dependence ) and Wide Dependency( Wide dependence ).

- Narrow Dependency: Father RDD Each of the Partition Data can only be quilt at most RDD One of the Partition Used by the , for example map、filter、union And other operations will produce narrow dependencies ( Similar to the only child ).

- Wide Dependency: Father RDD Each of the Partition Data can be divided into several sub RDD The multiple Partition Used by the , for example groupByKey、reduceByKey、sortByKey And other operations will produce wide dependence ( Similar to multiple children ).

chart 4-3-3:RDD Narrow dependence and wide dependence

Simply speaking , Two RDD Of Partition Between , If it's a one-to-one relationship , Is narrow dependence , Otherwise, it is wide dependency .

RDD yes Spark A very important knowledge point in , A separate chapter will be introduced in detail later .

4.3.3. Stage

When Spark When performing a job , Will be based on RDD The width dependence between , take DAG Divided into multiple interdependent Stage.

Spark Divide Stage The whole idea is , Push forward in reverse order :

If you encounter RDD There is a narrow dependence between , because Partition The certainty of dependency ,Transformation The operation can be completed in the same thread , Narrow dependencies are divided into the same Stage in ;

If you encounter RDD There is wide dependence between , Is divided into a new Stage in , And new Stage Before Stage Of Parent, Then recursion is executed by analogy ,Child Stage Need to wait for all Parent Stages Only after the execution is completed .

So each of them Stage Internal RDD Are executed in parallel on each node as far as possible , To improve operational efficiency .

chart 4-3-4:Stage The process of division ( Black indicates the pre computed partition )

In an effort to 4-3-4 As an example , Use this idea to Stage Divide , Push back and forth :

RDD C, RDD D, RDD E, RDD F Are narrow dependencies , So it is built on the same Stage2 in ;

RDD A And RDD B There is a wide dependency between , So it is built on different Stage in ,RDD A stay Stage1 in ,RDD B stay Stage3 in ;

RDD B And RDD G There is a narrow dependence between , So it is built on the same Stage3 in .

In a Stage Inside , All operations are in serial form Pipeline The way , By one or more Task Composed of TaskSet Complete the calculation .

4.3.4. Partition

chart 4-3-5:RDD Medium Partitions

RDD The internal data set is logically and physically divided into multiple Partitions( Partition ), every last Partition The data in can be executed in a single task , such Partition The number determines the parallelism of computation .

If... Is not specified in the calculation RDD Medium Partition Number , that Spark default Partition Number is Applicaton Run the assigned CPU Check the number .

Official website recommends Partition The quantity is set to Task The number of 2-3 times , To make full use of resources .

4.3.5. TaskSet

chart 4-3-6:Tasks Composed of TaskSet

TaskSet It can be understood as a task , Corresponding to one Stage, yes Task A set of tasks .

One TaskSet All in Task No, Shuffle Dependencies can be computed in parallel .

4.3.6. Task

Task yes Spark The most independent unit of computing in , Every Task The data executed in usually corresponds to only one Partition.

Task It is divided into ShuffleMapTask and ResultTask Two kinds of , In the last place Stage Of Task by ResultTask, Other stages belong to ShuffleMapTask.

ShuffleMapTask amount to MapReduce Medium Mapper( Pictured 4-3-4 Medium Stage1 and Stage2);ResultTask amount to MapReduce Medium Reducer( Pictured 4-3-4 Medium Stage3).

Cover picture : Pierce boulder in St. Lawrence Bay, Quebec, Canada

Copyright information : Pietro Canali / SOPA / eStock Photo

边栏推荐

- 试题库管理系统–数据库设计[通俗易懂]

- MBG combat zero basis

- Postman advanced

- Solaris 10 network services

- No response after heartbeat startup

- Force buckle 142 Circular linked list II

- netstat

- Four sorts: bubble, select, insert, count

- 本地Mysql忘记密码的修改方法(windows)[通俗易懂]

- [Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 8

猜你喜欢

![[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 7](/img/44/1861f9016e959ed7c568721dd892db.jpg)

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 7

![Entitas learning [iv] other common knowledge points](/img/1c/f899f4600fef07ce39189e16afc44a.jpg)

Entitas learning [iv] other common knowledge points

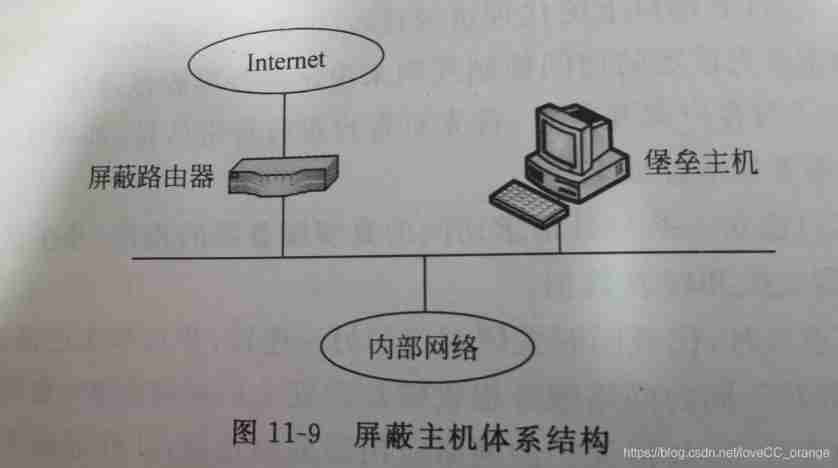

Summary of Shanghai Jiaotong University postgraduate entrance examination module firewall technology

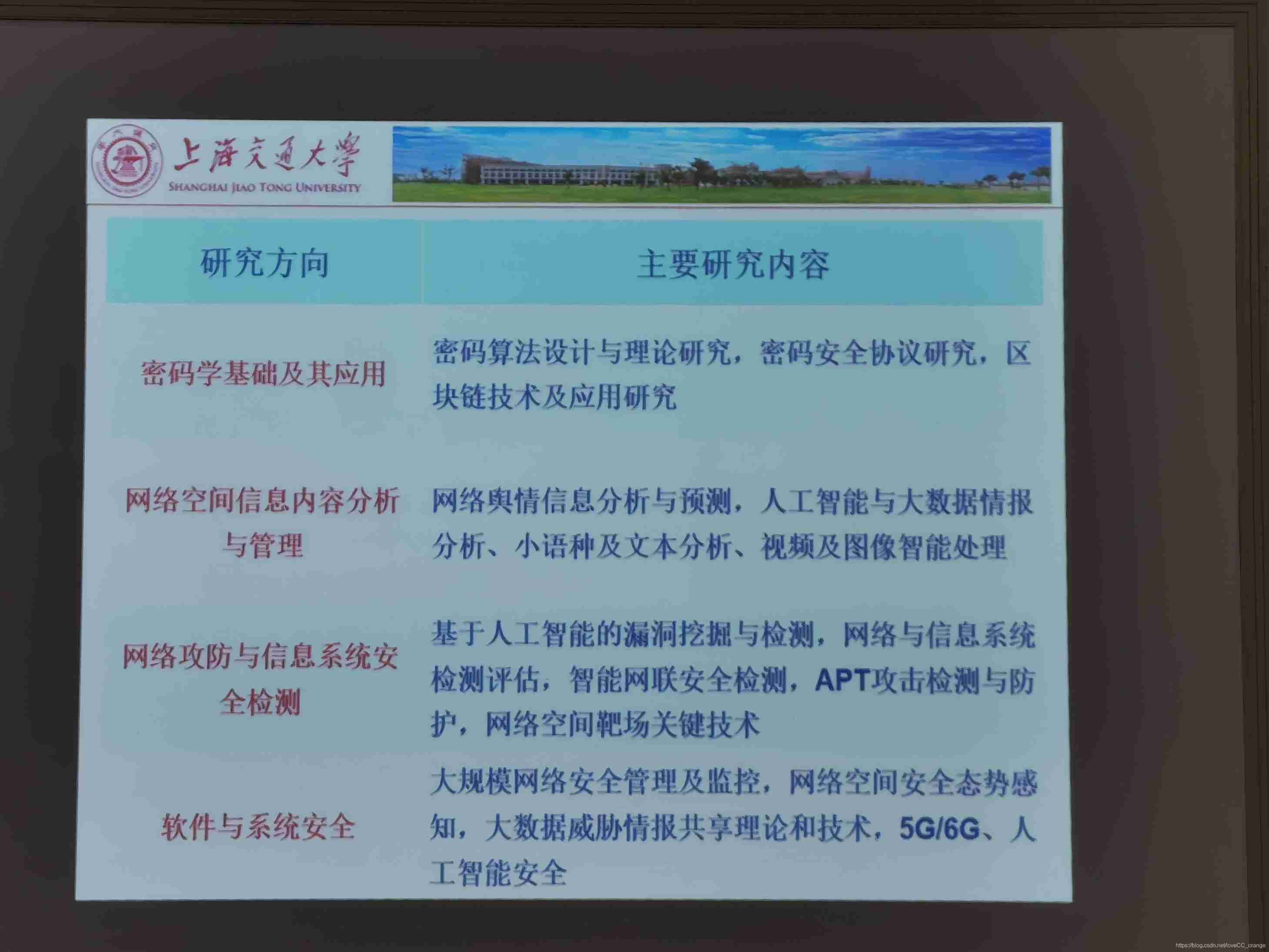

Introduction of network security research direction of Shanghai Jiaotong University

CSDN documentation specification

Failed to configure a DataSource: ‘url‘ attribute is not specified... Bug solution

How to judge the advantages and disadvantages of low code products in the market?

Take advantage of the world's sleeping gap to improve and surpass yourself -- get up early

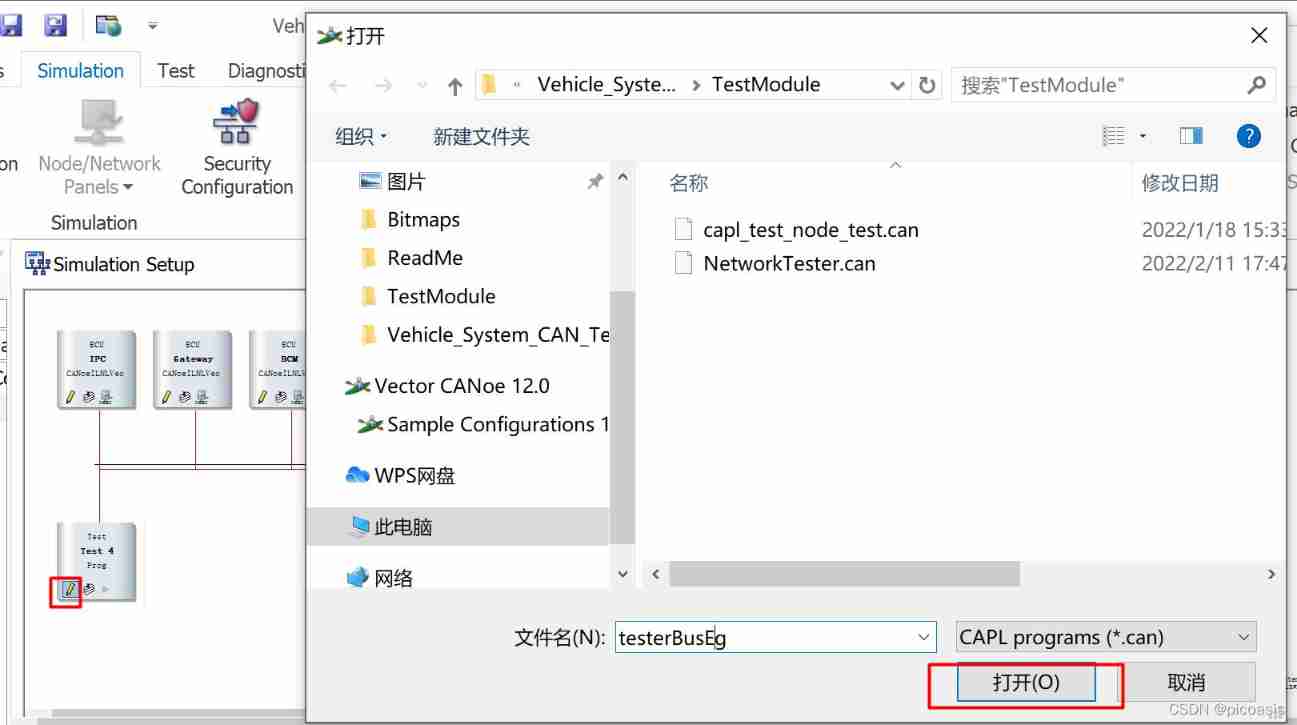

Canoe test: two ways to create CAPL test module

![[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 5](/img/68/4f92ca7cbdb90a919711b86d401302.jpg)

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 5

随机推荐

Canoe the second simulation engineering xvehicle 3 CAPL programming (operation)

Process communication and thread explanation

Local MySQL forgot the password modification method (Windows)

Lvs+kept realizes four layers of load and high availability

Sys module

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 22

Capl: timer event

C language compilation process

Usage of case when then else end statement

How to create a new virtual machine

Replace() function

Reptile learning 4 winter vacation series (3)

DDS-YYDS

VPS installation virtualmin panel

OSI seven layer model & unit

Detailed array expansion analysis --- take you step by step analysis

No response after heartbeat startup

Some tips on learning database

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 21

MBG combat zero basis