当前位置:网站首页>Introduction Guide to stereo vision (3): Zhang calibration method of camera calibration [ultra detailed and worthy of collection]

Introduction Guide to stereo vision (3): Zhang calibration method of camera calibration [ultra detailed and worthy of collection]

2022-07-05 08:54:00 【Li Yingsong~】

Dear students , Our world is 3D The world , Our eyes can observe three-dimensional information , Help us perceive distance , Navigation obstacle avoidance , So as to soar between heaven and earth . Today's world is an intelligent world , Our scientists explore a variety of machine intelligence technologies , Let the machine have the three-dimensional perception of human beings , And hope to surpass human beings in speed and accuracy , For example, positioning navigation in autopilot navigation , Automatic obstacle avoidance of UAV , Three dimensional scanning in the measuring instrument, etc , High intelligent machine intelligence technology is 3D Visual realization .

Stereo vision is an important direction in the field of 3D reconstruction , It simulates the structure of the human eye and simulates the binocular with two cameras , Project in Perspective 、 Based on triangulation , Through the logical complex homonymous point search algorithm , Restore the 3D information in the scene . It is widely used , Autopilot 、 Navigation obstacle avoidance 、 Cultural relic reconstruction 、 Face recognition and many other high-tech applications have its key figure .

This course will help you to understand the theoretical and practical knowledge of stereo vision from simple to profound . We will talk about the coordinate system and camera calibration , From passive stereoscopic to active stereoscopic , Even from deep recovery to grid construction and processing , Interested students , Come and explore the charm of stereovision with me !

This course is an electronic resource , So there will not be too many restrictions in the writing , But it will be logically clear 、 Easy to understand as the goal , Level co., LTD. , If there are deficiencies , Please give me some advice !

Personal wechat :EthanYs6, Add me to the technical exchange group StereoV3D, Talk about technology together .

CSDN Search for :Ethan Li Li Yingsong , see Web based courses .

Lesson code , Upload to github On , Address :StereoV3DCode:https://github.com/ethan-li-coding/StereoV3DCode

Hello, students , In the last article Introduction to stereo vision (2): Key matrix in , Our understanding of stereovision 3 Key matrix : The essential matrix E E E、 Basic matrix F F F、 Homography matrix H H H A more detailed description is given , At the same time, the essential matrix is given 、 The solution method of homography matrix and the external parameters of essential matrix decomposition R , t R,t R,t The specific formula . What's more valuable is , At the end of the blog, we provided several homework questions and put them in Github Open source reference answer code 【 I know a lot of psychology are meditating on Li Bo 666】【 Of course, there must be some people meditating on this too easy I wonder if Li Bo can have some difficulty 】. in any case , Bloggers think this is a meaningful thing , I just hope it doesn't hurt people's children .

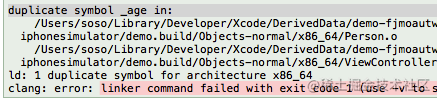

And the content of this article , Is the absolute core module of stereo vision : Camera calibration . Although it is relatively mature because of its technology , Nowadays, few people study , It is also easy to be ignored , Often use an open source algorithm library, such as Opencv perhaps Matlab Calibrate the toolbox directly , But in reality, stereovision Engineering 、 When commercializing , Open source tools because of their low accuracy 、 Low flexibility and not recommended for direct use , Enterprises often develop camera calibration algorithms by themselves . Camera calibration is the core module of stereo vision , It is quite necessary to master its theory , It is very helpful for us to deeply understand stereo vision technology , It has the effect of opening mountains and roads .

Camera calibration , The purpose is to determine the camera Internal parameter matrix K K K、 External parameter matrix R , t R,t R,t and Distortion coefficient d ( k 1 , k 2 , k 3 , p 1 , p 2 ) d(k_1,k_2,k_3,p_1,p_2) d(k1,k2,k3,p1,p2). Let's not consider the distortion coefficient for the moment ( We will discuss it later ), As we know from the previous chapter , Image coordinates p p p And world coordinates P w P_w Pw Between , Establish projection relationship through internal and external parameters , The formula is as follows :

λ p = K [ R t ] [ P w 1 ] (1) \lambda p=K\left[\begin{matrix}R&t\end{matrix}\right] \left[\begin{matrix}P_w\\1\end{matrix}\right] \tag 1 λp=K[Rt][Pw1](1)

The so-called calibration , That is, the process of fitting a parametric model from a large number of observations , And the parameter model fitted here is known , Therefore, we should try our best to explore a scheme that can easily obtain a large number of observations , If some other geometric constraints are satisfied between the observed values, it is more helpful to solve the specific single parameter values .

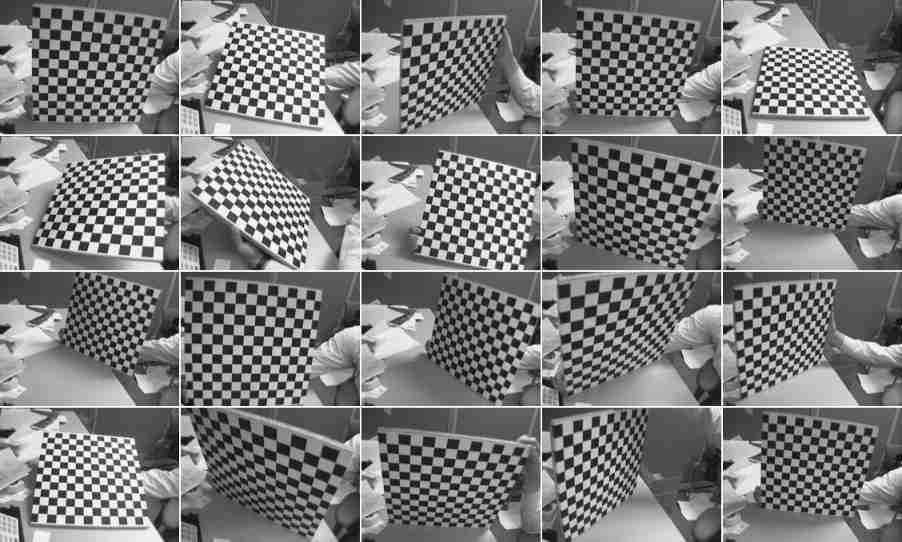

What we are talking about today Zhang Formula calibration method 1 That is, it provides a convenient scheme to obtain a large number of observations , At the same time, the observed values also meet a class of obvious geometric constraints ( Plane constraint ), Internal and external parameters can be directly solved . Its operation mode is very simple , Just shoot a plane with a calibration plate pattern , Camera calibration can be completed , The difficulty of calibration is greatly reduced , If we don't pursue high precision , Print a checkerboard calibration board pattern and paste it on a nearly flat cardboard to complete the calibration , Speed up the introduction and popularization of stereo vision , Far reaching , It is an absolute classic in the field of camera calibration .

This article will take you to know more about Zhang Camera calibration method , Mastering this article is very necessary for mastering the theory of stereo vision and engineering practice .

Implementation method

Zhang The formula calibration method can be widely used , One important reason is that its implementation method is very simple , It can be completed without professional process .

First step , Design a sheet with obvious corner features , And the pattern of two-dimensional coordinates of each corner is known as the calibration pattern , There are three common patterns :

|  |  |

Regular pattern design can easily calculate the two-dimensional coordinates of corners in the pattern , Take checkerboard , The number of pixels between corners is fixed , Suppose the coordinates of the upper left corner are ( 0 , 0 ) (0,0) (0,0), Then the pixel coordinates of other corners can be calculated by the offset of the grid , And a known DPI Calibration board image of , After printing, the two-dimensional space coordinates of each corner are also completely known ( Convert into space size through pixels ).

The second step , Place the calibration plate pattern on a plane in some way . For example, the simplest way is to print out the original size of the calibration chart , Then find an approximately flat plate , Paste the printed calibration pattern onto the tablet ; A more professional and high-precision way is to find professional manufacturers to make high-precision flat plates ( Such as ceramic plate ) And the calibration pattern is engraved on the flat plate by some process . The purpose of this step is to make the corners of the calibration pattern lie on a plane .

such , The two-dimensional coordinates described in the first step can be converted into the third dimension Z Z Z The coordinates are equal to 0 Three dimensional coordinates of ( Place the origin of the world coordinate system at a corner of the calibration plate ,Z The axis is perpendicular to the calibration plate ).

The third step , Move the camera to N(N>=3) Shoot the pattern of the calibration board with different poses .

Step four , Extract the corners of the calibration plate pattern taken in the previous step , Calculate the calibration parameters .

The above is the implementation steps of camera calibration , In conclusion , There is a set of corner points with known spatial coordinates on a plane calibration plate , The camera takes the corner pattern under multiple different poses and extracts the pixel coordinates of the corner , You can solve the internal and external parameters of the camera . This summary contains 2 An important knowledge point :

- The spatial coordinates of the corners in the calibration pattern are known , And they are all on the same plane , Z Z Z The coordinates are equal to 0

- The camera needs to take corner patterns in multiple different poses and extract pixel coordinates

You can see Zhang The formula calibration method is indeed an easy method to implement , The calibration theory contained therein , It's very valuable , For beginners of stereo vision , It is necessary to master its theory , Please also be sure to read the rest of this article .

Theoretical basis

Definition

In order to keep consistent with the formula in the paper , Let's change the naming habits in the first two blogs , Suppose the pixel coordinates of a point on the image are m = [ u , v ] T \text m=[u,v]^T m=[u,v]T, The three-dimensional coordinates of a point in space are M = [ X , Y , Z ] T \text M=[X,Y,Z]^T M=[X,Y,Z]T, The homogeneous expressions of the two are m ~ = [ u , v , 1 ] T , M ~ = [ X , Y , Z , 1 ] T \widetilde{\text m}=[u,v,1]^T,\widetilde{\text M}=[X,Y,Z,1]^T m=[u,v,1]T,M=[X,Y,Z,1]T. The projection formula of the title of the article can be expressed as :

s m ~ = A [ R t ] M ~ (2) s\widetilde{\text m}=\text A\left[\begin{matrix}\text R&\text t\end{matrix}\right]\widetilde{\text M} \tag 2 sm=A[Rt]M(2)

among , s s s Is the scale factor , R , t \text R,\text t R,t External parameter matrix , Used to convert world coordinate system coordinates into camera coordinate system coordinates , The former is the rotation matrix , The latter is the translation vector . A \text A A Is the camera's internal parameter matrix , Concrete ,

A = [ α γ u 0 0 β v 0 0 0 1 ] A=\left[\begin{matrix}\alpha&\gamma&u_0\\0&\beta&v_0\\0&0&1\end{matrix}\right] A=⎣⎡α00γβ0u0v01⎦⎤

among , ( u 0 , v 0 ) (u_0,v_0) (u0,v0) Is the coordinate of the image principal point , α , β \alpha,\beta α,β They are images u u u Axis and v v v Focal length in axial direction ( Pixel unit ) value , γ \gamma γ Is the tilt factor of the pixel axis .

Homography matrix

In the implementation method , We must be able to pay attention to a very critical message : The calibration pattern is placed on a plane . Its purpose is to make the corners in the calibration pattern lie on a spatial plane , So when the camera takes corner imaging , There is a homography transformation relationship between the space plane and the image plane , Through a homography matrix H \text H H Convert the spatial coordinates of corners into image coordinates .

In general , We place the world coordinate system on a corner of the calibration plate , And let Z Z Z The axis is perpendicular to the calibration plate , At this point Z Z Z The coordinates will all be equal to 0, Then the three-dimensional coordinates can be expressed as [ X , Y , 0 , 1 ] T [X,Y,0,1]^T [X,Y,0,1]T. Also define the rotation matrix R \text R R Of the i i i The column vectors are r i \text r_i ri, The formula s m ~ = A [ R t ] M ~ s\widetilde{\text m}=\text A\left[\begin{matrix}\text R&\text t\end{matrix}\right]\widetilde{\text M} sm=A[Rt]M Can be expressed as

s [ u v 1 ] = A [ r 1 r 2 r 3 t ] [ X Y 0 1 ] = A [ r 1 r 2 t ] [ X Y 1 ] (3) s\left[\begin{matrix}u\\v\\1\end{matrix}\right]=\text A\left[\begin{matrix}\text r_1&\text r_2&\text r_3&\text t\end{matrix}\right]\left[\begin{matrix}X\\Y\\0\\1\end{matrix}\right]=\text A\left[\begin{matrix}\text r_1&\text r_2&\text t\end{matrix}\right]\left[\begin{matrix}X\\Y\\1\end{matrix}\right] \tag 3 s⎣⎡uv1⎦⎤=A[r1r2r3t]⎣⎢⎢⎡XY01⎦⎥⎥⎤=A[r1r2t]⎣⎡XY1⎦⎤(3)

As mentioned earlier , Homography matrix can be used between calibration plane and image plane H \text H H To transform , That is to say

s m ~ = H M ~ (4) s\widetilde{\text m}=\text H\widetilde{\text M}\tag 4 sm=HM(4)

Due to the space point Z Z Z Coordinate for 0, So in the above formula M ~ = [ X , Y , 1 ] T \widetilde{\text M}=[X,Y,1]^T M=[X,Y,1]T

Combined (3), Available

H = λ A [ r 1 r 2 t ] (5) \text H=\lambda \text A\left[\begin{matrix}\text r_1&\text r_2&\text t\end{matrix}\right] \tag 5 H=λA[r1r2t](5)

H \text H H It's a 3 × 3 3\times 3 3×3 Matrix , λ \lambda λ Is an arbitrary scalar ( λ \lambda λ Because of the scale invariance of homogeneous coordinates , You can also argue that λ \lambda λ Is equal to 1).

Constraints on internal parameters

Bearing on the , We define H = [ h 1 h 2 h 3 ] \text H=\left[\begin{matrix}\text h_1&\text h_2&\text h_3\end{matrix}\right] H=[h1h2h3], Then there are

[ h 1 h 2 h 3 ] = λ A [ r 1 r 2 t ] (6) \left[\begin{matrix}\text h_1&\text h_2&\text h_3\end{matrix}\right]=\lambda \text A\left[\begin{matrix}\text r_1&\text r_2&\text t\end{matrix}\right] \tag 6 [h1h2h3]=λA[r1r2t](6)

Because of rotation R \text R R A matrix is an orthogonal matrix , r 1 \text r_1 r1 and r 2 \text r_2 r2 It's a standard orthogonal basis , That is, they are not only perpendicular to each other , And the mold length is 1. The meet r 1 T r 2 = 0 \text r_1^T\text r_2=0 r1Tr2=0, r 1 T r 1 = r 2 T r 2 = 1 \text r_1^T\text r_1=\text r_2^T\text r_2=1 r1Tr1=r2Tr2=1.

And the above formula can be deduced r 1 = 1 λ A − 1 h 1 \text r_1=\frac 1 \lambda \text A^{-1}\text h_1 r1=λ1A−1h1, r 2 = 1 λ A − 1 h 2 \text r_2=\frac 1 \lambda \text A^{-1}\text h_2 r2=λ1A−1h2.

It's easy to get :

h 1 T A − T A − 1 h 2 = 0 h 1 T A − T A − 1 h 1 = h 2 T A − T A − 1 h 2 (7) \begin{aligned} \text h_1^T\text A^{-T}\text A^{-1}\text h_2&=0\\ ~\\ \text h_1^T\text A^{-T}\text A^{-1}\text h_1&=\text h_2^T\text A^{-T}\text A^{-1}\text h_2 \end{aligned} \tag 7 h1TA−TA−1h2 h1TA−TA−1h1=0=h2TA−TA−1h2(7)

It can be seen from the above formula that , Homography matrix H \text H H And internal parameter matrix A \text A A The elements of satisfy the constraints of two linear equations . If we can calculate the homography matrix , Intuitively, it feels that the internal parameter matrix can be solved by solving the linear equation . Is it possible to be specific ? Let's move on .

Camera parameter solution

This section will take you to the specific solution of camera parameters , Answer the questions left over from the previous section , That is, whether it can pass the formula (7) To solve camera parameters .

This section will be divided into 3 Parts of , The first part introduces the direct by formula (7) Closed form solution formula for solving camera parameters ; The second part introduces the maximum likelihood solution of camera parameters ; The third part introduces the solution of distortion parameters after considering camera distortion .

Closed solution

First define B = A − T A − 1 = [ B 11 B 12 B 13 B 12 B 22 B 23 B 13 B 23 B 33 ] \text B=\text A^{-T}\text A^{-1}=\left[\begin{matrix}B_{11}&B_{12}&B_{13}\\B_{12}&B_{22}&B_{23}\\B_{13}&B_{23}&B_{33}\end{matrix}\right] B=A−TA−1=⎣⎡B11B12B13B12B22B23B13B23B33⎦⎤.

Concrete , You can calculate that B \text B B Each element of :

B = [ 1 α 2 − γ α 2 β v 0 γ − u 0 β α 2 β − γ α 2 β γ 2 α 2 β 2 + 1 β 2 − γ ( v 0 γ − u 0 β ) α 2 β 2 − v 0 β 2 v 0 γ − u 0 β α 2 β − γ ( v 0 γ − u 0 β ) α 2 β 2 − v 0 β 2 ( v 0 γ − u 0 β ) 2 α 2 β 2 + v 0 2 β 2 + 1 ] (8) \text B=\left[\begin{matrix}\frac 1 {\alpha^2}&-\frac \gamma {\alpha^2\beta}&\frac {v_0\gamma-u_0\beta}{\alpha^2\beta}\\-\frac \gamma {\alpha^2\beta}&\frac {\gamma^2}{\alpha^2\beta^2}+\frac 1 {\beta^2}&-\frac {\gamma(v_0\gamma-u_0\beta)}{\alpha^2\beta^2}-\frac {v_0} {\beta^2}\\\frac {v_0\gamma-u_0\beta}{\alpha^2\beta}&-\frac {\gamma(v_0\gamma-u_0\beta)}{\alpha^2\beta^2}-\frac {v_0} {\beta^2}&\frac {(v_0\gamma-u_0\beta)^2}{\alpha^2\beta^2}+\frac {v_0^2}{\beta^2}+1\end{matrix}\right] \tag 8 B=⎣⎢⎡α21−α2βγα2βv0γ−u0β−α2βγα2β2γ2+β21−α2β2γ(v0γ−u0β)−β2v0α2βv0γ−u0β−α2β2γ(v0γ−u0β)−β2v0α2β2(v0γ−u0β)2+β2v02+1⎦⎥⎤(8)

( It is suggested that students should work out the formula (8), Although it looks complicated , But the derivation is actually very simple )

obviously , B \text B B It's a symmetric matrix , We can use one 6 The vector of dimension :

b = [ B 11 B 12 B 22 B 13 B 23 B 33 ] T (9) \text b=\left[\begin{matrix}B_{11}&B_{12}&B_{22}&B_{13}&B_{23}&B_{33}\end{matrix}\right]^T \tag 9 b=[B11B12B22B13B23B33]T(9)

Define homography matrix H \text H H Of the i i i The column vector is h i = [ h i 1 h i 2 h i 3 ] T \text h_i=\left[\begin{matrix}h_{i1}&h_{i2}&h_{i3}\end{matrix}\right]^T hi=[hi1hi2hi3]T, Then there are

h i T B h j = v i j T b (10) \text h_i^T\text B\text h_j=\text v_{ij}^T\text b \tag {10} hiTBhj=vijTb(10)

among , v i j = [ h i 1 h j 1 h i 1 h j 2 + h i 2 h j 1 h i 2 h j 2 h i 3 h j 1 + h i 1 h j 3 h i 3 h j 2 + h i 2 h j 3 h i 3 h j 3 ] T \text v_{ij}=\left[\begin{matrix}h_{i1}h_{j1}&h_{i1}h_{j2}+h_{i2}h_{j1}&h_{i2}h_{j2}&h_{i3}h_{j1}+h_{i1}h_{j3}&h_{i3}h_{j2}+h_{i2}h_{j3}&h_{i3}h_{j3}\end{matrix}\right]^T vij=[hi1hj1hi1hj2+hi2hj1hi2hj2hi3hj1+hi1hj3hi3hj2+hi2hj3hi3hj3]T.

successively , The formula (7) Can be converted to :

[ v 12 T ( v 11 − v 22 ) T ] b = 0 (11) \left[\begin{matrix}\text v_{12}^T\\(\text v_{11}-\text v_{22})^T\end{matrix}\right]\text b=0 \tag {11} [v12T(v11−v22)T]b=0(11)

This is a typical system of linear equations , The number of rows of the coefficient matrix is 2, solve 6 Dimension vector b \text b b. By formula (4), When the camera is 1 After shooting the pattern of the calibration board in three positions , The pixel coordinates of corners are extracted , The corresponding relationship between the world coordinate system and the pixel coordinate system of all corners can be obtained , Then the homography transformation matrix under the current pose is solved by the least square method of linear equations H \text H H, We can get the formula (11) The concrete expression of .

But the formula (11) The coefficient matrix of is only 2 That's ok , Demand solution 6 Dimension vector b \text b b It's not enough. . So we need a camera in n n n Shoot the calibration pattern in three positions , obtain n n n A homography matrix , And the number of lines is 2 n 2n 2n The coefficient matrix of , When n > = 3 n>=3 n>=3 when , It can be solved 6 Dimension vector b \text b b. That is, at least 3 The camera calibration can only be completed with pictures . The final total equations can be expressed as :

Vb = 0 (12) \text V\text b=0 \tag {12} Vb=0(12)

V \text V V It's a 2 n × 6 2n\times6 2n×6 The coefficient matrix of .

Solve the formula (12) Common methods , One is matrix V T V \text V^T\text V VTV The eigenvector corresponding to the minimum eigenvalue of is the solution of the equation , The second is the coefficient matrix V \text V V Singular value decomposition V = U S V \text V=USV V=USV, Decomposition matrix V V V The third column of is the solution of the equation .

It should be noted that , The formula (12) Is scale equivalent , Solved b \text b b Multiplying by any multiple is still the correct solution , So by the b \text b b Matrix of composition B \text B B Not strictly satisfied B = A − T A − 1 \text B=\text A^{-T}\text A^{-1} B=A−TA−1, But there is an arbitrary scale factor λ \lambda λ, Satisfy B = λ A − T A − 1 \text B=\lambda\text A^{-T}\text A^{-1} B=λA−TA−1. By derivation , The formula can be used by (13) To calculate all camera internal parameters :

v 0 = ( B 12 B 13 − B 11 B 23 ) / ( B 11 B 22 − B 12 2 ) λ = B 33 − [ B 13 2 + v 0 ( B 12 B 13 − B 11 B 23 ) ] / B 11 α = λ / B 11 β = λ B 11 / ( B 11 B 22 − B 12 2 ) γ = − B 12 α 2 β / λ u 0 = γ v 0 / β − B 13 α 2 / λ (13) \begin{aligned} v_0&=(B_{12}B_{13}-B_{11}B_{23})/(B_{11}B_{22}-B_{12}^2)\\ \lambda&=B_{33}-[B_{13}^2+v_0(B_{12}B_{13}-B_{11}B_{23})]/B_{11}\\ \alpha&=\sqrt {\lambda/B_{11}}\\ \beta&=\sqrt{\lambda B_{11}/(B_{11}B_{22}-B_{12}^2)}\\ \gamma&=-B_{12}\alpha^2\beta/\lambda\\ u_0&=\gamma v_0/\beta -B_{13}\alpha^2/\lambda \end{aligned} \tag {13} v0λαβγu0=(B12B13−B11B23)/(B11B22−B122)=B33−[B132+v0(B12B13−B11B23)]/B11=λ/B11=λB11/(B11B22−B122)=−B12α2β/λ=γv0/β−B13α2/λ(13)

When A \text A A After solving , External parameters under each pose R , t \text R,\text t R,t You can use the formula (14) Calculation :

r 1 = λ A − 1 h 1 r 2 = λ A − 1 h 2 r 3 = r 1 × r 2 t = λ A − 1 h 3 (14) \begin{aligned} \text r_1&=\lambda \text A^{-1} \text h_1\\ \text r_2&=\lambda \text A^{-1} \text h_2\\ \text r_3&=\text r_1\times \text r_2\\ \text t&=\lambda \text A^{-1}\text h_3 \end{aligned}\tag {14} r1r2r3t=λA−1h1=λA−1h2=r1×r2=λA−1h3(14)

among , λ = 1 / ∣ ∣ A − 1 h 1 ∣ ∣ = 1 / ∣ ∣ A − 1 h 2 ∣ ∣ \lambda=1/||\text A^{-1}\text h_1||=1/||\text A^{-1}\text h_2|| λ=1/∣∣A−1h1∣∣=1/∣∣A−1h2∣∣.

It should be noted that , Because of the noise , Calculated rotation matrix Q = [ r 1 , r 2 , r 3 ] \text Q=[\text r_1,\text r_2,\text r_3] Q=[r1,r2,r3] Does not satisfy orthogonality , Further calculations and Q \text Q Q The nearest orthogonal matrix R \text R R, It can be calculated by singular value decomposition . The best orthogonal matrix R \text R R The matrix should be satisfied R − Q \text R-\text Q R−Q Of Frobenius Norm minimum , That is to solve the following problem :

min R ∣ ∣ R − Q ∣ ∣ F 2 subject to R T R = I (15) \min_\text R||\text R-\text Q||_F^2 \ \ \ \ \text{subject to} \ \text R^T\text R=\text I \tag {15} Rmin∣∣R−Q∣∣F2 subject to RTR=I(15)

because

∣ ∣ R − Q ∣ ∣ F 2 = t r a c e ( ( R − Q ) T ( R − Q ) ) = 3 + t r a c e ( Q T Q ) − 2 t r a c e ( R T Q ) \begin{aligned} ||\text R-\text Q||_F^2&=trace((\text R-\text Q)^T(\text R-\text Q))\\ &=3+trace(\text Q^T\text Q)-2trace(\text R^T\text Q) \end{aligned} ∣∣R−Q∣∣F2=trace((R−Q)T(R−Q))=3+trace(QTQ)−2trace(RTQ)

therefore , Turn the problem into a solution t r a c e ( R T Q ) trace(\text R^T\text Q) trace(RTQ) maximal R \text R R.

We define the matrix Q \text Q Q The singular value of is decomposed into US V T \text U\text S\text V^T USVT, among S = d i a g ( σ 1 , σ 2 , σ 3 ) \text S=diag(\sigma_1,\sigma_2,\sigma_3) S=diag(σ1,σ2,σ3). If we define an orthogonal matrix Z = V T R T U \text Z=\text V^T\text R^T\text U Z=VTRTU, Then there are

t r a c e ( R T Q ) = t r a c e ( R T US V T ) = t r a c e ( V T R T US ) = t r a c e ( ZS ) = ∑ i = 1 3 z i i σ i ≤ ∑ i = 1 3 σ i \begin{aligned} trace(\text R^T\text Q)&=trace(\text R^T\text U\text S\text V^T)=trace(\text V^T\text R^T\text U\text S)\\ &=trace(\text Z\text S)=\sum_{i=1}^3z_{ii}\sigma_{i}\le\sum_{i=1}^3\sigma_i \end{aligned} trace(RTQ)=trace(RTUSVT)=trace(VTRTUS)=trace(ZS)=i=1∑3ziiσi≤i=1∑3σi

obviously , When Z = I \text Z=\text I Z=I when , t r a c e ( R T Q ) trace(\text R^T\text Q) trace(RTQ) To the maximum , Available R = U V T \text R=\text U\text V^T R=UVT.

above , All internal and external parameters are solved , The whole closed solution is completed .

Maximum likelihood estimation

The solution with the minimum algebraic distance can be obtained by the closed solution , The actual geometric meaning of each parameter is not taken into account , Because of the noise , The solution is not very accurate . We can obtain a more accurate solution by maximum likelihood estimation .

If we observe the calibration plate n n n In the shot image m m m A point is right . Suppose that all points have independent equal scale noise , Then the maximum likelihood estimation minimizes the following expression :

∑ i = 1 n ∑ j = 1 m ∣ ∣ m i j − m ^ ( A , R i , t i , M j ) ∣ ∣ 2 (16) \sum_{i=1}^n \sum_{j=1}^m ||\text m_{ij} - \hat {\text m} (\text A,\text R_i,\text t_i,\text M_j)||^2 \tag {16} i=1∑nj=1∑m∣∣mij−m^(A,Ri,ti,Mj)∣∣2(16)

among , m ^ ( A , R i , t i , M j ) \hat {\text m}(\text A,\text R_i,\text t_i,\text M_j) m^(A,Ri,ti,Mj) It's a space point M j \text M_j Mj In the image i i i The point of projection on , By formula (2) Calculation . Rotation matrix R \text R R Through three-dimensional vector r \text r r To express , r \text r r It is parallel to the rotation axis and the die length is the rotation angle , R \text R R and r \text r r It can be converted to each other through rodry format formula .

type (16) Is a nonlinear expression , So this is a nonlinear minimization problem , It can be used Levenberg-Marquardt Algorithm to solve . Presumably, some students know that solving this kind of problem requires a relatively accurate initialization value , This value can be obtained by using the closed solution obtained in the previous section .

In engineering practice , We usually use ceres-solver Library to handle this part .

Camera distortion

up to now , We haven't considered camera distortion , All derivations are based on the ideal case without distortion , The actual situation is that the camera will inevitably have more or less distortion , There are mainly two kinds of : Radial distortion and tangential distortion . In general , Will consider 3 Radial distortion of the term k 1 , k 2 , k 3 k_1,k_2,k_3 k1,k2,k3 and 2 Tangential distortion of the term p 1 , p 2 p_1,p_2 p1,p2. stay Zhang In the formula calibration method , Only considered 2 Radial distortion of the term k 1 , k 2 k_1,k_2 k1,k2, And in practice , We will consider more , The same principle , And so on .

Definition ( u , v ) (u,v) (u,v) Is the ideal pixel coordinate , ( u ˘ , v ˘ ) (\breve u,\breve v) (u˘,v˘) Is the actually observed pixel coordinates , Consider 2 After radial distortion , Both satisfy the following formula :

u ˘ = u + ( u − u 0 ) [ k 1 ( x 2 + y 2 ) + k 2 ( x 2 + y 2 ) 2 ] v ˘ = v + ( v − v 0 ) [ k 1 ( x 2 + y 2 ) + k 2 ( x 2 + y 2 ) 2 ] (17) \begin{aligned} \breve u&=u+(u-u_0)[k_1(x^2+y^2)+k_2(x^2+y^2)^2]\\ \breve v&=v+(v-v_0)[k_1(x^2+y^2)+k_2(x^2+y^2)^2] \tag {17} \end{aligned} u˘v˘=u+(u−u0)[k1(x2+y2)+k2(x2+y2)2]=v+(v−v0)[k1(x2+y2)+k2(x2+y2)2](17)

among , x = u − u 0 x=u-u_0 x=u−u0, y = v − v 0 y=v-v_0 y=v−v0.

type (17) It's a system of linear equations , solve k 1 , k 2 k_1,k_2 k1,k2 It seems to be a simple thing , But in fact, there is a problem , That is, it is impossible to obtain the ideal coordinates without distortion ( u , v ) (u,v) (u,v), It needs to know the accurate internal and external parameters through the projection formula (2) To solve , Students may ask : In the first two sections, we solved the internal and external parameters !? Don't forget to , The camera distortion is not considered in the front , The solution of internal and external parameters is actually inaccurate ( The observed value is regarded as the internal and external parameters of distortion free coordinates , It's biased ). So this has become a contradiction between chicken and egg .

Then simply do not expect to calculate the exact distortion through the linear equation , The internal and external parameters obtained from the closed solution are substituted into the formula (2) Find the approximate ideal coordinates ( u , v ) (u,v) (u,v), Thus, the formula (17) Establish linear equations to solve approximate k 1 , k 2 k_1,k_2 k1,k2.

then , We establish a new maximum likelihood estimation expression :

∑ i = 1 n ∑ j = 1 m ∣ ∣ m i j − m ˘ ( A , k 1 , k 2 , R i , t i , M j ) ∣ ∣ 2 (18) \sum_{i=1}^n \sum_{j=1}^m ||\text m_{ij} - \breve {\text m}(\text A,k_1,k_2,\text R_i,\text t_i,\text M_j)||^2 \tag {18} i=1∑nj=1∑m∣∣mij−m˘(A,k1,k2,Ri,ti,Mj)∣∣2(18)

Similarly, all internal and external parameters and distortion coefficients are solved by nonlinear solution method . The initial value of the distortion coefficient is solved by the method described above .

actually , Because the distortion coefficient is very small , Therefore, the initial values of the distortion coefficients are directly set to 0 It's OK, too , There is no need to solve linear equations .

summary

Next to the whole Zhang Make a summary of the calibration process :

- Print the calibration pattern and paste it on a plane , Call it a calibration plate .

- By moving the camera or the calibration plate, multiple calibration plate images are taken in different positions and attitudes ( Number of images >=3).

- Detect feature points on all images ( Corner or center point ).

- Use the closed solution to solve all internal and external parameters .

- Approximate distortion coefficients are solved by linear equations ( Or directly assign a value of 0).

- Accurate internal and external parameters and distortion coefficients are calculated through nonlinear optimization .

The above is Zhang All theoretical explanations of the formula calibration method , I hope students can be enlightened after reading this article , And be able to understand , It is handy in practical engineering application .

stay Zhang In the original text of formula calibration method , There are also some high-level knowledge points worth learning that are not reflected in this article , Including key formulas (7) The geometric interpretation of , And the discussion of degradation , Interested students can continue to read the original text to understand .

In the next article, we will bring another method of camera calibration :DLT Direct linear transformation .

Homework

Here are some exercises for you , We can deepen our understanding through practice :

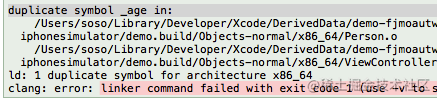

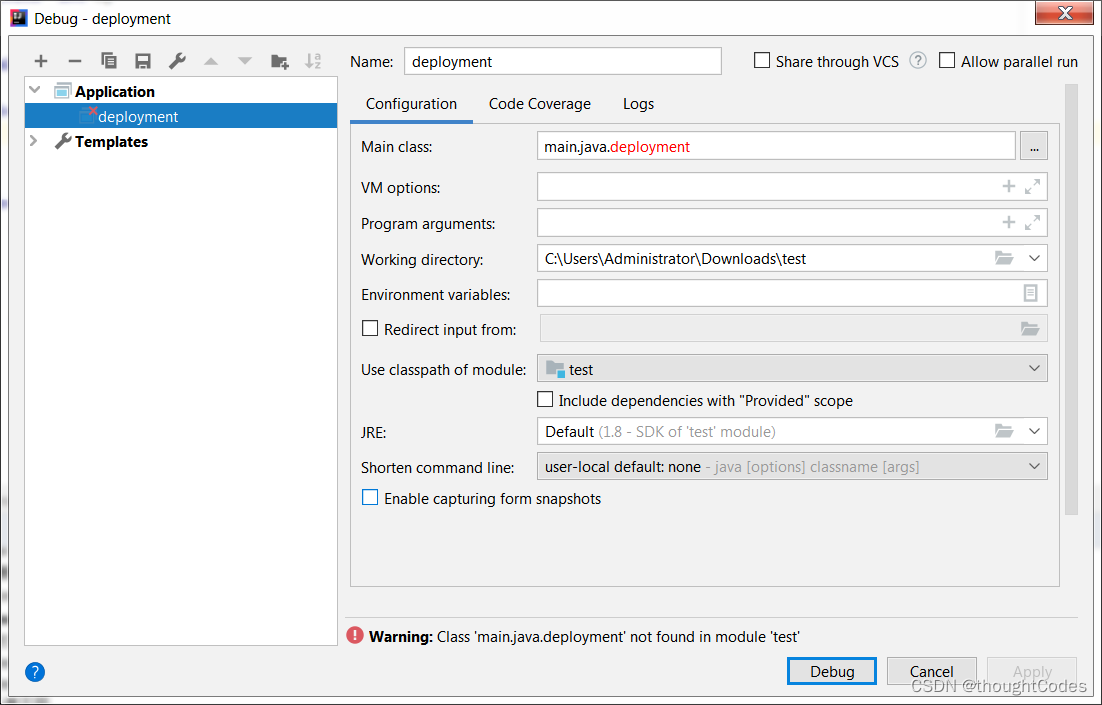

1 adopt opencv The interface provided by the open source library completes camera calibration , You can use opencv Images provided , You can also use your own image .

2 Higher order is , You can be independent opencv Library to write a camera calibration algorithm ? Or just use opencv To detect the corner coordinates , Other steps are implemented by yourself .

Please refer to the address :https://github.com/ethan-li-coding/StereoV3DCode [ The code is constantly updated , If you are interested, you can star and watch, This code is temporarily missing ]

reference

- [1] Zhang Z . A Flexible New Technique for Camera Calibration[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2000, 22(11):1330-1334.边栏推荐

- Kubedm series-00-overview

- Illustration of eight classic pointer written test questions

- Guess riddles (9)

- Understanding rotation matrix R from the perspective of base transformation

- 696. Count binary substring

- Ecmascript6 introduction and environment construction

- Guess riddles (8)

- Add discount recharge and discount shadow ticket plug-ins to the resource realization applet

- Use arm neon operation to improve memory copy speed

- Guess riddles (4)

猜你喜欢

The combination of deep learning model and wet experiment is expected to be used for metabolic flux analysis

Numpy pit: after the addition of dimension (n, 1) and dimension (n,) array, the dimension becomes (n, n)

容易混淆的基本概念 成员变量 局部变量 全局变量

Run menu analysis

Confusing basic concepts member variables local variables global variables

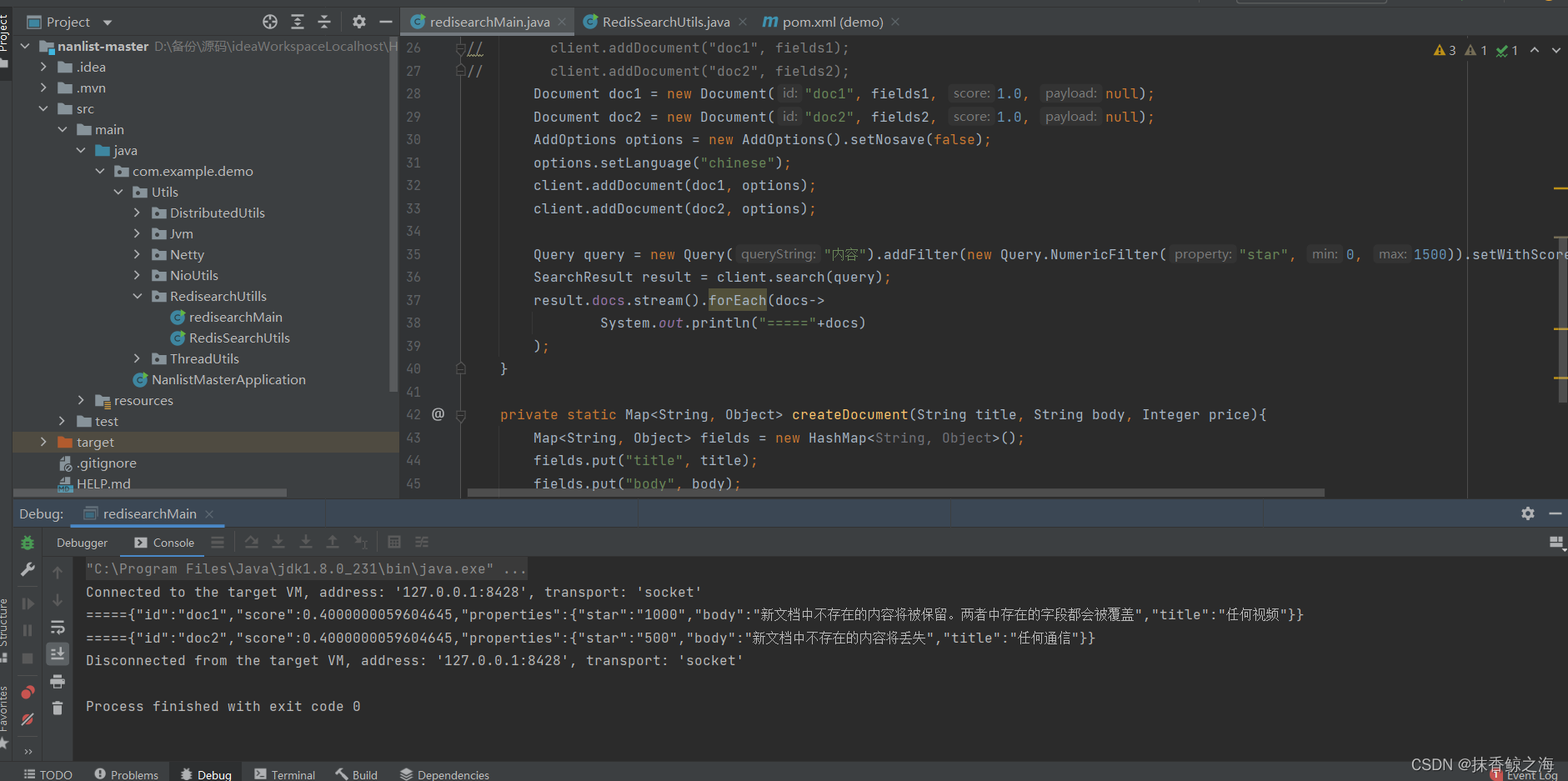

Redis实现高性能的全文搜索引擎---RediSearch

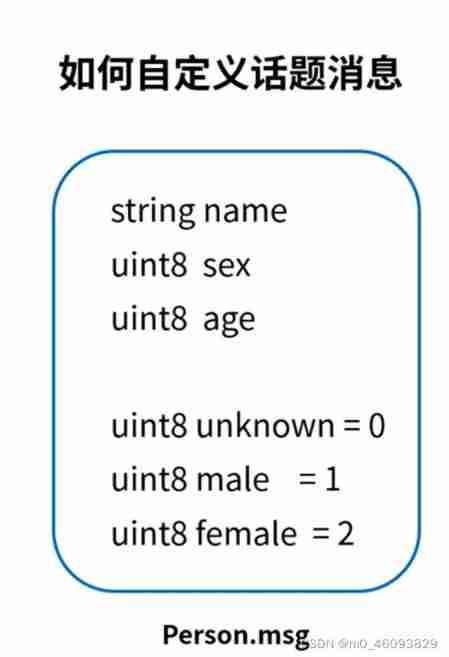

ROS learning 4 custom message

嗨 FUN 一夏,与 StarRocks 一起玩转 SQL Planner!

Illustration of eight classic pointer written test questions

It cold knowledge (updating ing~)

随机推荐

Codeforces round 684 (Div. 2) e - green shopping (line segment tree)

Introduction Guide to stereo vision (1): coordinate system and camera parameters

Illustration of eight classic pointer written test questions

C# LINQ源码分析之Count

Programming implementation of ROS learning 5-client node

Hello everyone, welcome to my CSDN blog!

容易混淆的基本概念 成员变量 局部变量 全局变量

Halcon affine transformations to regions

Ecmascript6 introduction and environment construction

[formation quotidienne - Tencent Selection 50] 557. Inverser le mot III dans la chaîne

Pearson correlation coefficient

Count of C # LINQ source code analysis

Business modeling of software model | overview

Shift operation of complement

【日常训练】1200. 最小绝对差

Guess riddles (11)

Introduction Guide to stereo vision (2): key matrix (essential matrix, basic matrix, homography matrix)

Halcon color recognition_ fuses. hdev:classify fuses by color

皮尔森相关系数

Install the CPU version of tensorflow+cuda+cudnn (ultra detailed)