
kubeadm Series 00: Overview

2022-07-05 08:44:00 【runzhliu】


Overview

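Kubernetes 1.24 is the release that removes dockershim (deprecated since 1.20). This post describes installing a Kubernetes 1.24 cluster with containerd 1.6.6 as the CRI runtime, mainly through the package manager. The official documentation also covers the installation procedure.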

| Software | Version | Notes |
|---|---|---|
| kubernetes | 1.24.1 | |
| containerd | 1.6.6 | |
| runc | 1.1.2 | |
| CentOS | 8 Stream | |
| Kernel | 5.18.5-1.el8.elrepo.x86_64 | |

Configuring the yum repo file

With 1.24 you may run into an odd problem during installation. Turning off these two parameters, gpgcheck=0 and repo_gpgcheck=0, is enough to get past it.

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

# A mirror inside China works as well

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Machine initialization

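On a public cloud, what needs configuring depends on the software and images the provider preinstalls on its machines, so the commands below are not necessarily complete. If some software or setting is missing, the later kubeadm init run will flag it anyway.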
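Because the starting state differs from one provider to another, it can help to check what is already in place before changing anything. The following is a minimal sketch, not part of the original post: check_tunable and the PROC_ROOT override are hypothetical names introduced here for illustration, and the function only reads values, it never changes them.

```shell
#!/usr/bin/env bash
# Report whether a kernel tunable currently holds the expected value.
# PROC_ROOT is a hypothetical override so the function can be pointed at a
# fake directory tree; on a real machine it stays at /proc/sys.
PROC_ROOT="${PROC_ROOT:-/proc/sys}"

check_tunable() {
  local key="$1" want="$2" file got
  # net.ipv4.ip_forward -> net/ipv4/ip_forward
  file="$PROC_ROOT/$(printf '%s' "$key" | tr . /)"
  if [ ! -r "$file" ]; then
    echo "MISSING $key"
    return 1
  fi
  got="$(cat "$file")"
  if [ "$got" = "$want" ]; then
    echo "OK $key=$got"
  else
    echo "BAD $key=$got (want $want)"
    return 1
  fi
}
```

Calling check_tunable net.ipv4.ip_forward 1 and check_tunable net.bridge.bridge-nf-call-iptables 1 covers the two kernel values this section sets. A MISSING result for the bridge key usually means the br_netfilter module has not been loaded yet.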

# Set SELinux in permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

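# Stop and disable the firewall

systemctl stop firewalld && systemctl disable firewalld

# Load kernel modules first: /proc/sys/net/bridge only exists once br_netfilter is loaded

modprobe br_netfilter

modprobe overlay

# Enable IP forwarding and make bridged traffic visible to iptables

sysctl -w net.ipv4.ip_forward=1

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables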

# Time synchronization

yum install ntpdate -y

# Handy for troubleshooting IPVS issues

yum install -y ipset ipvsadm

Software dependencies

Without an explicit version, yum install kubeadm picks the latest one available, so it is better to install a pinned version with the commands below.
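To keep the three packages on the same version, the package names can be generated from a single version string. This is a small sketch under assumptions: k8s_packages and list_kubeadm_versions are hypothetical helper names, and the "-0" suffix simply mirrors the RPM release number used in the commands of this post.

```shell
#!/usr/bin/env bash
# Build the versioned package names for one Kubernetes release.
# The "-0" suffix is the RPM release number used by the packages in this post.
k8s_packages() {
  local version="$1" pkg out=""
  for pkg in kubelet kubeadm kubectl; do
    out="$out${out:+ }$pkg-$version-0"
  done
  echo "$out"
}

# List every kubeadm version the configured repo offers before choosing one.
# --disableexcludes is needed because the repo file excludes these packages.
list_kubeadm_versions() {
  yum list --showduplicates kubeadm --disableexcludes=kubernetes
}
```

With this in place, sudo yum install -y $(k8s_packages 1.24.1) --disableexcludes=kubernetes expands to the same install command shown in this section.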

# Install 1.24.1

sudo yum install -y kubelet-1.24.1-0 kubeadm-1.24.1-0 kubectl-1.24.1-0 --disableexcludes=kubernetes

# Install 1.21.7

sudo yum install -y kubelet-1.21.7-0 kubeadm-1.21.7-0 kubectl-1.21.7-0 --disableexcludes=kubernetes

# Remove 1.24.1

sudo yum remove -y kubelet-1.24.1-0 kubeadm-1.24.1-0 kubectl-1.24.1-0 --disableexcludes=kubernetes

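# Remove 1.21.7

sudo yum remove -y kubelet-1.21.7-0 kubeadm-1.21.7-0 kubectl-1.21.7-0 --disableexcludes=kubernetes

In addition, the packages below are pulled in as dependencies when kubelet/kubeadm/kubectl are installed. Watch their versions too and avoid large version gaps. When installing through the package manager, these versions are generally already matched.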

cri-tools.x86_64 0:1.24.0-0

kubernetes-cni.x86_64 0:0.8.7-0

socat.x86_64 0:1.7.3.2-2.el7

kubelet.service

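Take a look at the kubelet.service unit file. It is fairly ordinary, but note that in a kubeadm-initialized cluster the kubelet is deployed as a systemd service by default, unlike components such as kube-apiserver and etcd, which run as static Pods.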
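Once a control plane exists, the split is easy to observe: the kubelet appears as a systemd unit, while the other components appear as manifest files in the kubelet's static Pod directory. A minimal sketch, assuming the default manifest directory /etc/kubernetes/manifests; list_static_pods is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the names of the static Pod manifests in a directory, one per line.
list_static_pods() {
  local dir="${1:-/etc/kubernetes/manifests}" f
  for f in "$dir"/*.yaml; do
    # If the directory is empty or missing, the glob stays literal: skip it.
    [ -e "$f" ] || continue
    basename "$f" .yaml
  done
}
```

Running systemctl is-enabled kubelet next to list_static_pods shows both sides: one systemd unit for the kubelet, and one manifest per control-plane component.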

cat /etc/systemd/system/multi-user.target.wants/kubelet.service

[Unit]

Description=kubelet: The Kubernetes Node Agent

Documentation=https://kubernetes.io/docs/

Wants=network-online.target

After=network-online.target

[Service]

ExecStart=/usr/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

kubeadm init

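kubeadm init actually performs many configuration checks, for example detecting which CRI runtime is in use. Before running it, you may also want to do some preparation such as setting the hostname.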

# Set the hostname

hostnamectl set-hostname master

Image issues

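On public clouds in mainland China, image downloads tend to hang. You can run the command below first to confirm. If ps -ef shows the crictl process below, it will certainly never manage to pull the image from inside China, so the configuration needs to be reconsidered.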
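One configuration change that can help is pointing kubeadm at a mirror registry through its --image-repository flag. The sketch below is an illustration under assumptions rather than the author's setup: the mirror address is only an example to be replaced with one you trust, and mirror_image and pull_from_mirror are hypothetical helper names.

```shell
#!/usr/bin/env bash
# ASSUMPTION: this mirror is only an example; substitute one you trust.
MIRROR="${MIRROR:-registry.aliyuncs.com/google_containers}"

# Map an upstream image reference onto the mirror by keeping only the final
# "name:tag" component, for example
# k8s.gcr.io/kube-apiserver:v1.24.1 -> $MIRROR/kube-apiserver:v1.24.1
mirror_image() {
  echo "$MIRROR/${1##*/}"
}

# Pull the control-plane images through the mirror instead of k8s.gcr.io.
pull_from_mirror() {
  kubeadm config images pull --image-repository "$MIRROR" --kubernetes-version "$1"
}
```

For example, pull_from_mirror 1.24.1 pre-pulls the images for this post's version. kubeadm init accepts the same --image-repository flag, so the choice of registry can be kept consistent between the two commands.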

kubeadm config images pull --kubernetes-version 1.24.1

# Pull with crictl

/usr/bin/crictl -r unix:///var/run/containerd/containerd.sock pull k8s.gcr.io/kube-apiserver:v1.24.1

Common containerd configuration

# Both endpoints must be changed; this can also be done directly in the kubeadm init configuration file

crictl config runtime-endpoint unix:///run/containerd/containerd.sock

crictl config image-endpoint unix:///run/containerd/containerd.sock

# Test it

crictl pull docker.io/library/redis:alpine3.13

# Switch to a mirror inside China

Listing images

Note that ctr needs the namespace to be specified when listing images

ctr --namespace k8s.io images list

Checking logs

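If anything goes wrong during installation, read the output printed by kubeadm init carefully, and check the logs of the kubelet and containerd services. The commands below may be useful for troubleshooting.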
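When the journal is long, narrowing it to error lines can speed things up. The following is a rough sketch, not a complete classifier: only_errors is a hypothetical helper that relies on the kubelet's klog line prefix (E or F followed by month and day) and on the level= field that containerd writes.

```shell
#!/usr/bin/env bash
# Keep only lines that look like errors, reading log text from stdin.
# kubelet logs through klog, whose error and fatal lines start with E or F
# followed by the month and day (for example "E0705"); containerd marks
# severity with level=error or level=fatal.
only_errors() {
  grep -E '(^|[[:space:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}|level=(error|fatal)'
}
```

A typical use would be journalctl -u kubelet --no-pager --since "10 min ago" | only_errors, and the same pipe works for the containerd unit.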

journalctl -xeu containerd --no-pager -f

journalctl -xeu kubelet --no-pager -f

Modifying certificates

For the concrete steps, refer to the related blog post.

Execution

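My analysis of kubeadm mainly draws on two releases, Kubernetes 1.21.7 and Kubernetes 1.24.1. If questions come up while reading, you can download the source of both for reference. Unless stated otherwise, the code referred to is Kubernetes 1.24.1.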

The kubeadm init log below has some personal details removed and comments added for the individual steps. Related information can also be printed with kubeadm init --help.
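The bracketed prefix on each log line names the step that produced it, so the order of steps can be read off a saved log mechanically. A small sketch, assuming the output was saved to a file such as init.log (a hypothetical name); phases is likewise a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the bracketed tags of a kubeadm init log in order of first
# appearance, reading the log from stdin. Lines without a lowercase
# [tag] prefix, such as comments or indented warnings, are ignored.
phases() {
  sed -n 's/^\[\([[:lower:]-]*\)\].*/\1/p' | awk '!seen[$0]++'
}
```

For example, kubeadm init 2>&1 | tee init.log followed by phases < init.log lists the steps in execution order. Most of these tags correspond to subcommands of kubeadm init phase, though the names do not always match exactly.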

# Kubernetes version being created

[init] Using Kubernetes version: v1.24.1

# The preflight phase starts here

[preflight] Running pre-flight checks

[WARNING SystemVerification]: missing optional cgroups: blkio

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

# The certificate phase starts here

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [ip-172-31-90-126 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.31.90.126]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [ip-172-31-90-126 localhost] and IPs [172.31.90.126 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [ip-172-31-90-126 localhost] and IPs [172.31.90.126 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

# The kubeconfig phase

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

# Start the kubelet

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

# The control-plane startup phase

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

# Health check

[apiclient] All control plane components are healthy after 16.503764 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

# Apply the required labels and taints to the control-plane node

[mark-control-plane] Marking the node ip-172-31-90-126 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node ip-172-31-90-126 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

# Create the bootstrap token

[bootstrap-token] Using token: qlk4br.83yi47aqacj3cwzh

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

# The addon installation phase

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Continuing from the flow above, below is the log printed after a successful installation. It prompts you to set up your kubeconfig.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

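kubeadm join 172.31.90.126:6443 --token qlk4br.83yi47aqacj3cwzh \
--discovery-token-ca-cert-hash sha256:cc121a0e581abbdedcbad370077c46e11da9d6ea60a201dd54be4c70893f98f4

As for how to understand kubeadm, my personal view is that it does a lot of script-like work. It benefits from Go's fairly rich set of system-level packages, which make such calls convenient. In fact, all of these checks and installation steps could be written perfectly well in shell scripts. When I worked with Kubernetes 1.8 and earlier, deployment was done with scripts written by the ops team, and in essence there is not much difference. Writing it in Go, however, adds some extensibility and robustness, which is a consideration at the language level.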

边栏推荐

- Basic number theory - factors

- 猜谜语啦(10)

- Redis implements a high-performance full-text search engine -- redisearch

- How can fresh students write resumes to attract HR and interviewers

- Cinq détails de conception du régulateur de tension linéaire

- 每日一题——输入一个日期,输出它是该年的第几天

- 【三层架构及JDBC总结】

- Esphone retrofits old fans

- Shift operation of complement

- Mathematical modeling: factor analysis

猜你喜欢

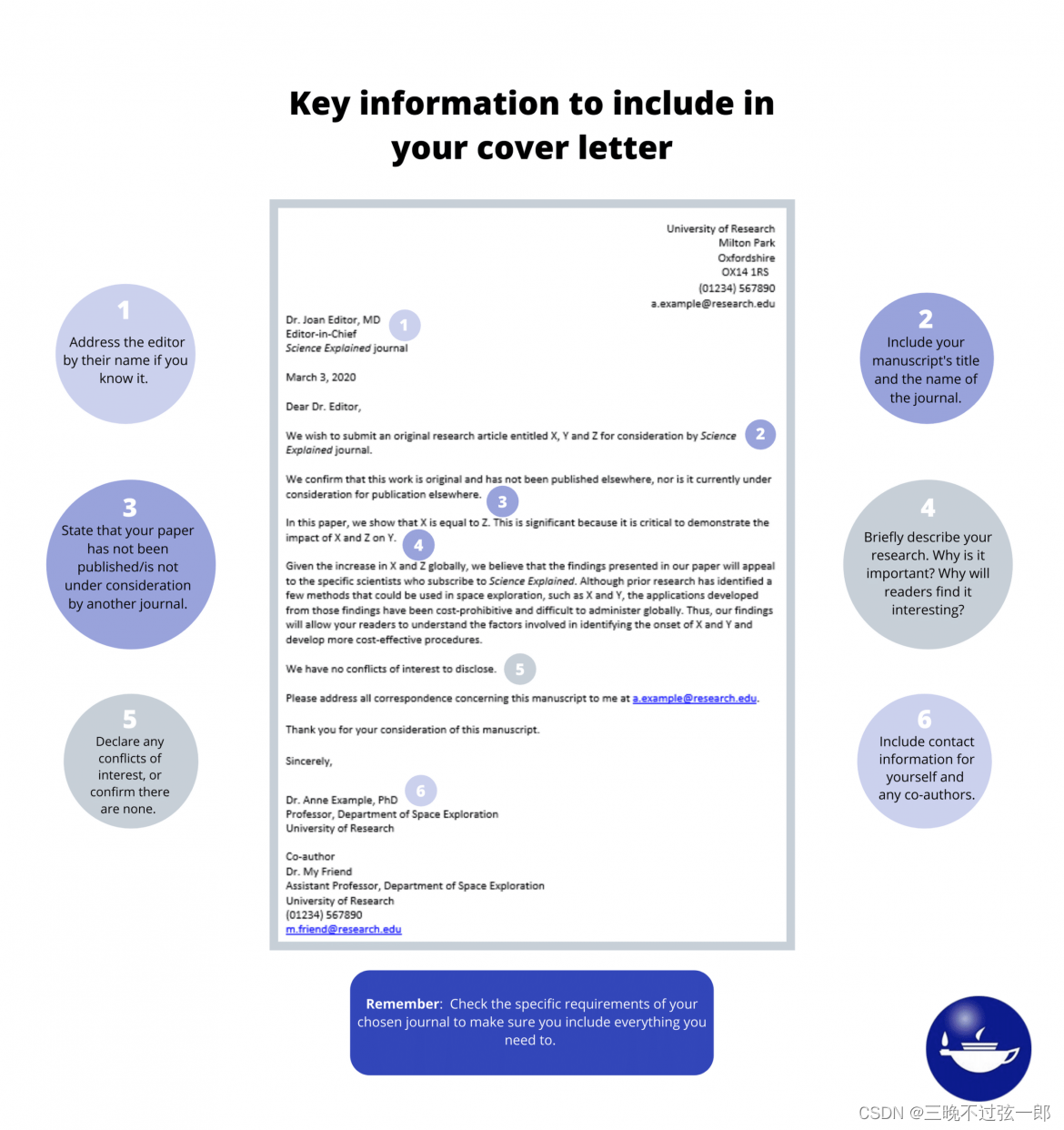

How to write cover letter?

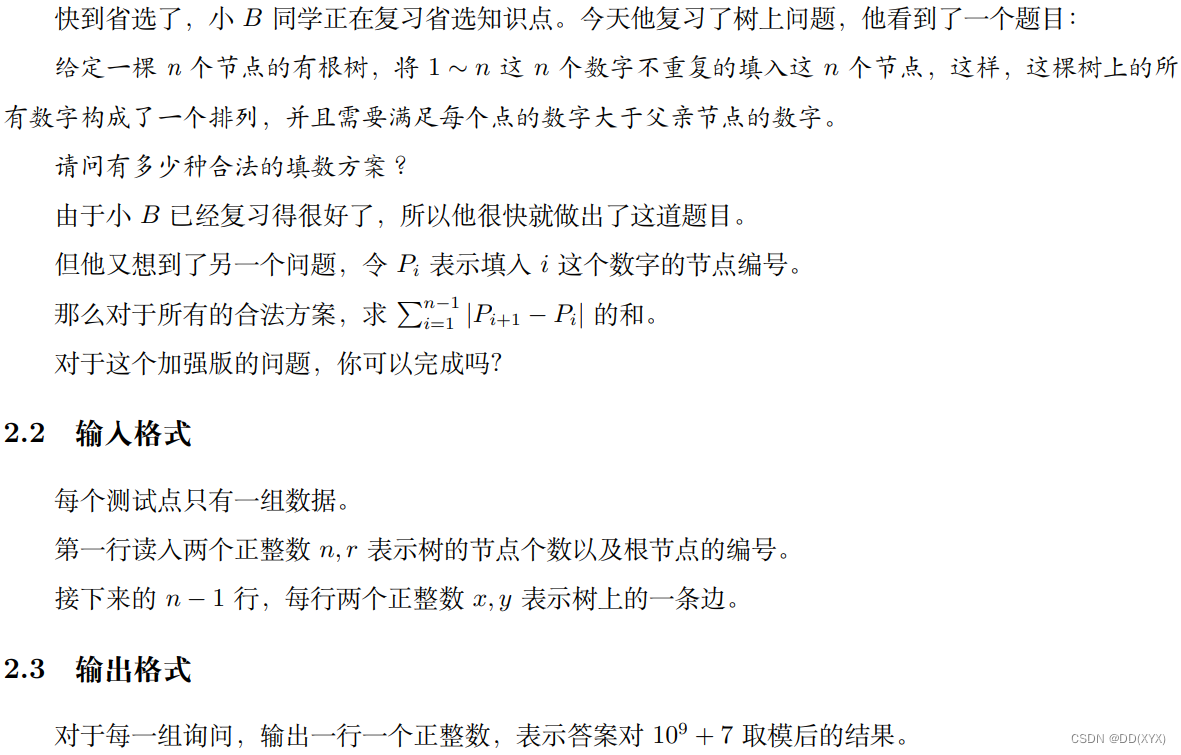

【NOI模拟赛】汁树(树形DP)

My university

Example 005: three numbers sorting input three integers x, y, Z, please output these three numbers from small to large.

Halcon shape_ trans

Lori remote control LEGO motor

Halcon blob analysis (ball.hdev)

Meizu Bluetooth remote control temperature and humidity access homeassistant

Explore the authentication mechanism of StarUML

![[nas1] (2021cvpr) attentivenas: improving neural architecture search via attentive sampling (unfinished)](/img/3b/c94b8466370f4461875c85b4f66860.png)

[nas1] (2021cvpr) attentivenas: improving neural architecture search via attentive sampling (unfinished)

随机推荐

Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding

Arduino+a4988 control stepper motor

Redis implements a high-performance full-text search engine -- redisearch

Example 001: the number combination has four numbers: 1, 2, 3, 4. How many three digits can be formed that are different from each other and have no duplicate numbers? How many are each?

Digital analog 2: integer programming

Meizu Bluetooth remote control temperature and humidity access homeassistant

特征工程

Lori remote control commissioning record

Numpy 小坑:维度 (n, 1) 和 维度 (n, ) 数组相加运算后维度变为 (n, n)

Guess riddles (142)

Old Wang's esp8266 and old Wu's ws2818 light strip

Business modeling of software model | overview

Halcon color recognition_ fuses. hdev:classify fuses by color

Guess riddles (3)

图解八道经典指针笔试题

轮子1:QCustomPlot初始化模板

Guess riddles (8)

Basic number theory - fast power

An enterprise information integration system

L298N module use