
ShuffleNet v2 network structure reproduction (PyTorch version)

2022-08-04 08:02:00 【Diffie Herman】

Reproducing the ShuffleNet v2 network structure

```python
import torch
from torch import nn
from torchsummary import summary

# ---------------------------- ShuffleBlock start -------------------------------

# Channel shuffle: reorder channels so that information is exchanged across groups
def channel_shuffle(x, groups):
    batchsize, num_channels, height, width = x.size()
    channels_per_group = num_channels // groups

    # reshape: (B, C, H, W) -> (B, groups, C // groups, H, W)
    x = x.view(batchsize, groups, channels_per_group, height, width)
    # swap the group axis and the per-group axis; view() below needs contiguous memory
    x = torch.transpose(x, 1, 2).contiguous()

    # flatten back to (B, C, H, W)
    x = x.view(batchsize, -1, height, width)
    return x


# Stem: 3x3 stride-2 Conv + BN + ReLU, then a 3x3 stride-2 max pool (4x downsampling)
class CBRM(nn.Module):
    def __init__(self, c1, c2):  # ch_in, ch_out
        super(CBRM, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(c1, c2, kernel_size=3, stride=2, padding=1, bias=False),
            nn.BatchNorm2d(c2),
            nn.ReLU(inplace=True),
        )
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

    def forward(self, x):
        return self.maxpool(self.conv(x))


class Shuffle_Block(nn.Module):
    def __init__(self, inp, oup, stride):
        super(Shuffle_Block, self).__init__()

        if not (1 <= stride <= 3):
            raise ValueError('illegal stride value')
        self.stride = stride

        branch_features = oup // 2
        # with stride 1 the input is split in half, so inp must equal 2 * branch_features
        assert (self.stride != 1) or (inp == branch_features << 1)

        if self.stride > 1:
            # branch1 (downsampling blocks only): 3x3 depthwise conv -> 1x1 conv
            self.branch1 = nn.Sequential(
                self.depthwise_conv(inp, inp, kernel_size=3, stride=self.stride, padding=1),
                nn.BatchNorm2d(inp),
                nn.Conv2d(inp, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
                nn.BatchNorm2d(branch_features),
                nn.ReLU(inplace=True),
            )

        # branch2: 1x1 conv -> 3x3 depthwise conv -> 1x1 conv
        self.branch2 = nn.Sequential(
            nn.Conv2d(inp if (self.stride > 1) else branch_features,
                      branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.ReLU(inplace=True),
            self.depthwise_conv(branch_features, branch_features, kernel_size=3, stride=self.stride, padding=1),
            nn.BatchNorm2d(branch_features),
            nn.Conv2d(branch_features, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.ReLU(inplace=True),
        )

    @staticmethod
    def depthwise_conv(i, o, kernel_size, stride=1, padding=0, bias=False):
        # groups=i gives every input channel its own filter (depthwise convolution)
        return nn.Conv2d(i, o, kernel_size, stride, padding, bias=bias, groups=i)

    def forward(self, x):
        if self.stride == 1:
            x1, x2 = x.chunk(2, dim=1)  # split along dim 1 (channels)
            out = torch.cat((x1, self.branch2(x2)), dim=1)
        else:
            out = torch.cat((self.branch1(x), self.branch2(x)), dim=1)

        out = channel_shuffle(out, 2)
        return out


class ShuffleNetV2(nn.Module):
    def __init__(self):
        super(ShuffleNetV2, self).__init__()
        # comments give the output H x W for a 640x640 input
        self.MobileNet_01 = nn.Sequential(
            CBRM(3, 32),                 # 160x160
            Shuffle_Block(32, 128, 2),   # 80x80
            Shuffle_Block(128, 128, 1),  # 80x80
            Shuffle_Block(128, 256, 2),  # 40x40
            Shuffle_Block(256, 256, 1),  # 40x40
            Shuffle_Block(256, 512, 2),  # 20x20
            Shuffle_Block(512, 512, 1),  # 20x20
        )

    def forward(self, x):
        x = self.MobileNet_01(x)
        return x


if __name__ == '__main__':
    shufflenetv2 = ShuffleNetV2()
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    model = shufflenetv2.to(device)
    # input_size is the (C, H, W) of one sample; pass the real device type so this also runs on CPU
    summary(model, (3, 640, 640), batch_size=1, device=device.type)
    # print(shufflenetv2)
```
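The effect of `channel_shuffle` is easiest to see on channel indices alone. The plain-Python sketch below uses a hypothetical helper `shuffle_order` (not part of the original code) that computes which input channel lands at each output position after `view(B, groups, C // groups, H, W)`, `transpose(1, 2)` and the final flatten; it assumes `num_channels` is divisible by `groups`, as the reshape requires.

```python
def shuffle_order(num_channels, groups):
    """Return the input-channel index at each output position of channel_shuffle.

    Hypothetical helper: mirrors view(B, groups, C // groups, H, W),
    transpose(1, 2) and the final flatten using plain index arithmetic.
    """
    if num_channels % groups != 0:
        raise ValueError("num_channels must be divisible by groups")
    per_group = num_channels // groups
    # After the transpose, output position j * groups + g holds input channel g * per_group + j
    return [g * per_group + j for j in range(per_group) for g in range(groups)]


if __name__ == "__main__":
    # 6 channels in 2 groups: [0, 1, 2 | 3, 4, 5] -> [0, 3, 1, 4, 2, 5]
    print(shuffle_order(6, 2))

    # In Shuffle_Block, channels 0-3 of an 8-channel `out` come from x1/branch1
    # and channels 4-7 from branch2. After the shuffle, the first half that the
    # next stride-1 block keeps via x.chunk(2, dim=1) mixes both sources.
    first_half = shuffle_order(8, 2)[:4]
    print(first_half)                        # [0, 4, 1, 5]
    print([c for c in first_half if c < 4])  # [0, 1] from the first branch
    print([c for c in first_half if c >= 4]) # [4, 5] from branch2
```

With `groups=2` the shuffle simply interleaves the two concatenated halves, so both halves produced by the next block's `x.chunk(2, dim=1)` contain channels from both branches of the previous block. This is the cross-group information exchange the `channel_shuffle` comment refers to.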

Running the script prints the following `torchsummary` output for an input of shape (1, 3, 640, 640):

```text
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1          [1, 32, 320, 320]             864
       BatchNorm2d-2          [1, 32, 320, 320]              64
              ReLU-3          [1, 32, 320, 320]               0
         MaxPool2d-4          [1, 32, 160, 160]               0
              CBRM-5          [1, 32, 160, 160]               0
            Conv2d-6            [1, 32, 80, 80]             288
       BatchNorm2d-7            [1, 32, 80, 80]              64
            Conv2d-8            [1, 64, 80, 80]           2,048
       BatchNorm2d-9            [1, 64, 80, 80]             128
             ReLU-10            [1, 64, 80, 80]               0
           Conv2d-11          [1, 64, 160, 160]           2,048
      BatchNorm2d-12          [1, 64, 160, 160]             128
             ReLU-13          [1, 64, 160, 160]               0
           Conv2d-14            [1, 64, 80, 80]             576
      BatchNorm2d-15            [1, 64, 80, 80]             128
           Conv2d-16            [1, 64, 80, 80]           4,096
      BatchNorm2d-17            [1, 64, 80, 80]             128
             ReLU-18            [1, 64, 80, 80]               0
    Shuffle_Block-19           [1, 128, 80, 80]               0
           Conv2d-20            [1, 64, 80, 80]           4,096
      BatchNorm2d-21            [1, 64, 80, 80]             128
             ReLU-22            [1, 64, 80, 80]               0
           Conv2d-23            [1, 64, 80, 80]             576
      BatchNorm2d-24            [1, 64, 80, 80]             128
           Conv2d-25            [1, 64, 80, 80]           4,096
      BatchNorm2d-26            [1, 64, 80, 80]             128
             ReLU-27            [1, 64, 80, 80]               0
    Shuffle_Block-28           [1, 128, 80, 80]               0
           Conv2d-29           [1, 128, 40, 40]           1,152
      BatchNorm2d-30           [1, 128, 40, 40]             256
           Conv2d-31           [1, 128, 40, 40]          16,384
      BatchNorm2d-32           [1, 128, 40, 40]             256
             ReLU-33           [1, 128, 40, 40]               0
           Conv2d-34           [1, 128, 80, 80]          16,384
      BatchNorm2d-35           [1, 128, 80, 80]             256
             ReLU-36           [1, 128, 80, 80]               0
           Conv2d-37           [1, 128, 40, 40]           1,152
      BatchNorm2d-38           [1, 128, 40, 40]             256
           Conv2d-39           [1, 128, 40, 40]          16,384
      BatchNorm2d-40           [1, 128, 40, 40]             256
             ReLU-41           [1, 128, 40, 40]               0
    Shuffle_Block-42           [1, 256, 40, 40]               0
           Conv2d-43           [1, 128, 40, 40]          16,384
      BatchNorm2d-44           [1, 128, 40, 40]             256
             ReLU-45           [1, 128, 40, 40]               0
           Conv2d-46           [1, 128, 40, 40]           1,152
      BatchNorm2d-47           [1, 128, 40, 40]             256
           Conv2d-48           [1, 128, 40, 40]          16,384
      BatchNorm2d-49           [1, 128, 40, 40]             256
             ReLU-50           [1, 128, 40, 40]               0
    Shuffle_Block-51           [1, 256, 40, 40]               0
           Conv2d-52           [1, 256, 20, 20]           2,304
      BatchNorm2d-53           [1, 256, 20, 20]             512
           Conv2d-54           [1, 256, 20, 20]          65,536
      BatchNorm2d-55           [1, 256, 20, 20]             512
             ReLU-56           [1, 256, 20, 20]               0
           Conv2d-57           [1, 256, 40, 40]          65,536
      BatchNorm2d-58           [1, 256, 40, 40]             512
             ReLU-59           [1, 256, 40, 40]               0
           Conv2d-60           [1, 256, 20, 20]           2,304
      BatchNorm2d-61           [1, 256, 20, 20]             512
           Conv2d-62           [1, 256, 20, 20]          65,536
      BatchNorm2d-63           [1, 256, 20, 20]             512
             ReLU-64           [1, 256, 20, 20]               0
    Shuffle_Block-65           [1, 512, 20, 20]               0
           Conv2d-66           [1, 256, 20, 20]          65,536
      BatchNorm2d-67           [1, 256, 20, 20]             512
             ReLU-68           [1, 256, 20, 20]               0
           Conv2d-69           [1, 256, 20, 20]           2,304
      BatchNorm2d-70           [1, 256, 20, 20]             512
           Conv2d-71           [1, 256, 20, 20]          65,536
      BatchNorm2d-72           [1, 256, 20, 20]             512
             ReLU-73           [1, 256, 20, 20]               0
    Shuffle_Block-74           [1, 512, 20, 20]               0
================================================================
Total params: 445,824
Trainable params: 445,824
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 4.69
Forward/backward pass size (MB): 270.31
Params size (MB): 1.70
Estimated Total Size (MB): 276.70
----------------------------------------------------------------
```
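The parameter counts and feature-map sizes in this table can be reproduced by hand. Because every convolution uses `bias=False`, a convolution has `(c_in / groups) * c_out * k * k` weights, a `BatchNorm2d(c)` has `2 * c` trainable parameters (weight and bias), and each spatial size follows `floor((n + 2p - k) / s) + 1`. The plain-Python sketch below (helper names such as `block_params` are hypothetical) re-derives the total and the sizes; the MB figures assume 4-byte float32 values and 1 MB = 1024 * 1024 bytes, which reproduces the "Input size" and "Params size" lines in the table.

```python
def conv_params(c_in, c_out, k, groups=1):
    # Weights only: every convolution in the model is built with bias=False
    return (c_in // groups) * c_out * k * k


def bn_params(c):
    # BatchNorm2d (affine=True) learns one weight and one bias per channel
    return 2 * c


def block_params(inp, oup, stride):
    bf = oup // 2  # branch_features in Shuffle_Block
    total = 0
    if stride > 1:
        # branch1: 3x3 depthwise conv + BN, then 1x1 conv + BN
        total += conv_params(inp, inp, 3, groups=inp) + bn_params(inp)
        total += conv_params(inp, bf, 1) + bn_params(bf)
    c_in = inp if stride > 1 else bf
    # branch2: 1x1 conv + BN, 3x3 depthwise conv + BN, 1x1 conv + BN
    total += conv_params(c_in, bf, 1) + bn_params(bf)
    total += conv_params(bf, bf, 3, groups=bf) + bn_params(bf)
    total += conv_params(bf, bf, 1) + bn_params(bf)
    return total


def out_size(n, k, s, p):
    # Output size of a conv / max-pool layer (dilation 1, ceil_mode=False)
    return (n + 2 * p - k) // s + 1


STAGES = [(32, 128, 2), (128, 128, 1), (128, 256, 2),
          (256, 256, 1), (256, 512, 2), (512, 512, 1)]

# CBRM stem: Conv2d(3, 32, 3) + BatchNorm2d(32), then the Shuffle_Blocks
total = conv_params(3, 32, 3) + bn_params(32)
total += sum(block_params(*cfg) for cfg in STAGES)

size = out_size(640, 3, 2, 1)   # stem conv: 640 -> 320
size = out_size(size, 3, 2, 1)  # stem max pool: 320 -> 160
sizes = [size]
for _, _, stride in STAGES:
    size = out_size(size, 3, stride, 1)  # the 3x3 depthwise conv carries the stride
    sizes.append(size)

input_mb = 3 * 640 * 640 * 4 / 1024 ** 2
params_mb = total * 4 / 1024 ** 2

print(f"Total params: {total:,}")  # Total params: 445,824
print(sizes)                       # [160, 80, 80, 40, 40, 20, 20]
print(f"{input_mb:.2f} MB input, {params_mb:.2f} MB params")  # 4.69 MB input, 1.70 MB params
```

The same arithmetic shows where the parameters live: the last two blocks (256 -> 512 channels) account for 338,688 of the 445,824 parameters, almost all of it in their 1x1 convolutions.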

Uncommenting `print(shufflenetv2)` at the end of the script prints the module structure:

```text
ShuffleNetV2(
  (MobileNet_01): Sequential(
    (0): CBRM(
      (conv): Sequential(
        (0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
    )
    (1): Shuffle_Block(
      (branch1): Sequential(
        (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=32, bias=False)
        (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): Conv2d(32, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): ReLU(inplace=True)
      )
      (branch2): Sequential(
        (0): Conv2d(32, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=64, bias=False)
        (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (6): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (7): ReLU(inplace=True)
      )
    )
    (2): Shuffle_Block(
      (branch2): Sequential(
        (0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=64, bias=False)
        (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (6): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (7): ReLU(inplace=True)
      )
    )
    (3): Shuffle_Block(
      (branch1): Sequential(
        (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=128, bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): ReLU(inplace=True)
      )
      (branch2): Sequential(
        (0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=128, bias=False)
        (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (6): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (7): ReLU(inplace=True)
      )
    )
    (4): Shuffle_Block(
      (branch2): Sequential(
        (0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=128, bias=False)
        (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (6): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (7): ReLU(inplace=True)
      )
    )
    (5): Shuffle_Block(
      (branch1): Sequential(
        (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=256, bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): ReLU(inplace=True)
      )
      (branch2): Sequential(
        (0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=256, bias=False)
        (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (7): ReLU(inplace=True)
      )
    )
    (6): Shuffle_Block(
      (branch2): Sequential(
        (0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=256, bias=False)
        (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (7): ReLU(inplace=True)
      )
    )
  )
)
```
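The printed structure makes the two block variants visible: the stride-2 blocks (indices 1, 3, 5) have both `branch1` and `branch2`, while the stride-1 blocks (indices 2, 4, 6) only have `branch2`, because the other half of their channels passes through unchanged. That is why `Shuffle_Block` asserts `inp == 2 * (oup // 2)` when `stride == 1`. If you edit the stage list in `MobileNet_01`, a plain-Python pre-check such as the hypothetical `check_stages` below (not part of the original code) can catch channel or stride mistakes before the model is built. It is only a sketch that assumes the same 3x3, padding-1 depthwise convolution the block uses and a square feature map after the `CBRM` stem.

```python
def check_stages(stem_channels, stem_size, stages):
    """Hypothetical pre-check for a list of (inp, oup, stride) Shuffle_Block configs.

    Mirrors the checks in Shuffle_Block.__init__ and returns the final (C, H, W),
    assuming a square stem_size x stem_size feature map after the CBRM stem.
    """
    channels, size = stem_channels, stem_size
    for i, (inp, oup, stride) in enumerate(stages):
        if inp != channels:
            raise ValueError(f"stage {i}: expected {channels} input channels, got {inp}")
        if not 1 <= stride <= 3:
            raise ValueError(f"stage {i}: illegal stride value {stride}")
        branch_features = oup // 2
        if stride == 1 and inp != 2 * branch_features:
            # x.chunk(2, dim=1) keeps half of the input as-is, so the width cannot change
            raise ValueError(f"stage {i}: stride-1 blocks need inp == 2 * (oup // 2)")
        # Both concatenated halves have branch_features channels, so an odd oup rounds down
        channels = 2 * branch_features
        # The 3x3 depthwise conv with padding=1 carries the block's stride
        size = (size + 2 - 3) // stride + 1
    return channels, size, size


if __name__ == "__main__":
    stages = [(32, 128, 2), (128, 128, 1), (128, 256, 2),
              (256, 256, 1), (256, 512, 2), (512, 512, 1)]
    print(check_stages(32, 160, stages))  # (512, 20, 20) for a 640x640 input
    try:
        check_stages(32, 160, [(32, 128, 2), (128, 256, 1)])
    except ValueError as err:
        print(err)  # stage 1: stride-1 blocks need inp == 2 * (oup // 2)
```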

边栏推荐

- 【CNN基础】转置卷积学习笔记

- 金仓数据库 KDTS 迁移工具使用指南 (7. 部署常见问题)

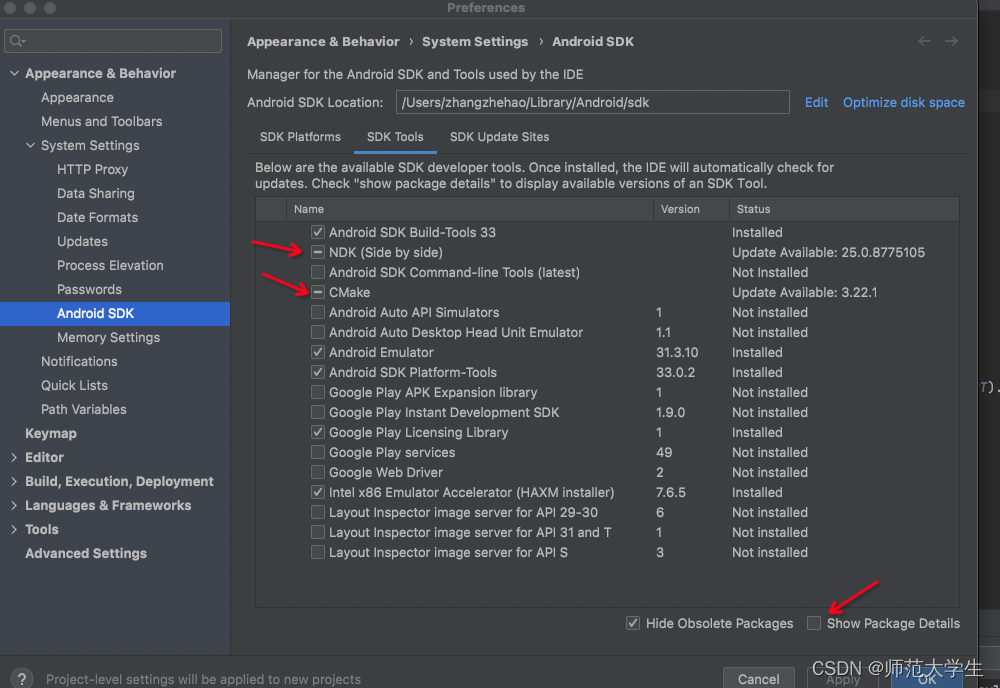

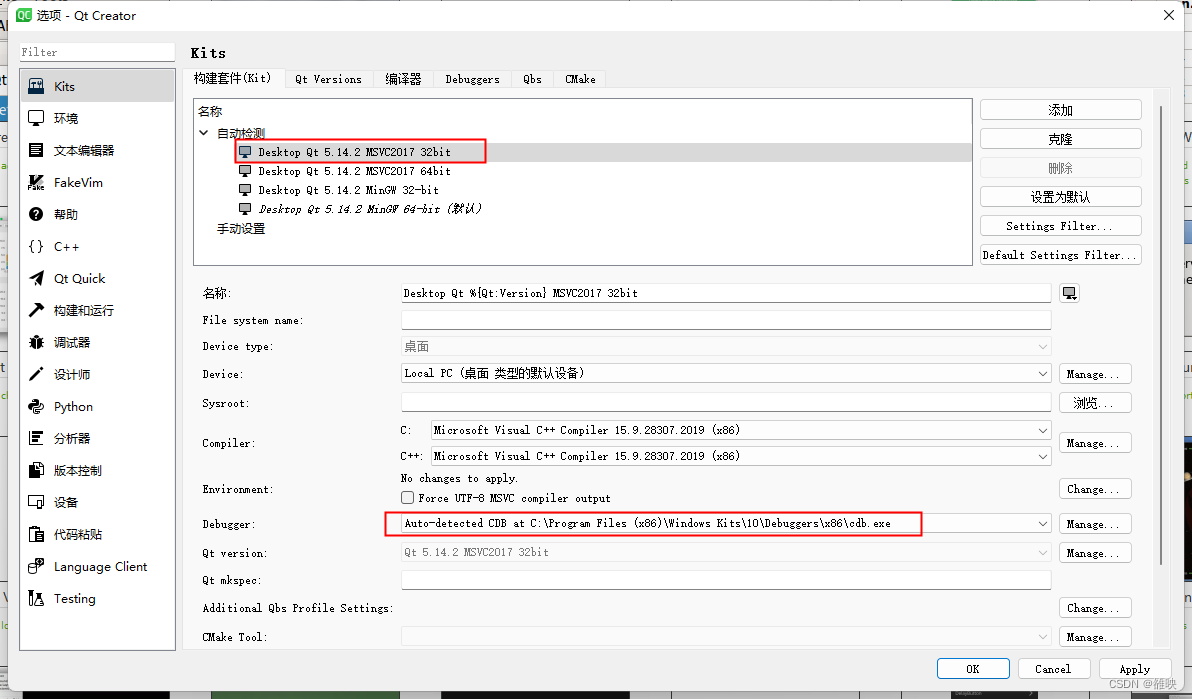

- JNI学习1.环境配置与简单函数实现

- 解决:Hbuilder工具点击发行打包,一直报尚未完成社区身份验证,请点击链接xxxxx,项目xxx发布H5失败的错误。

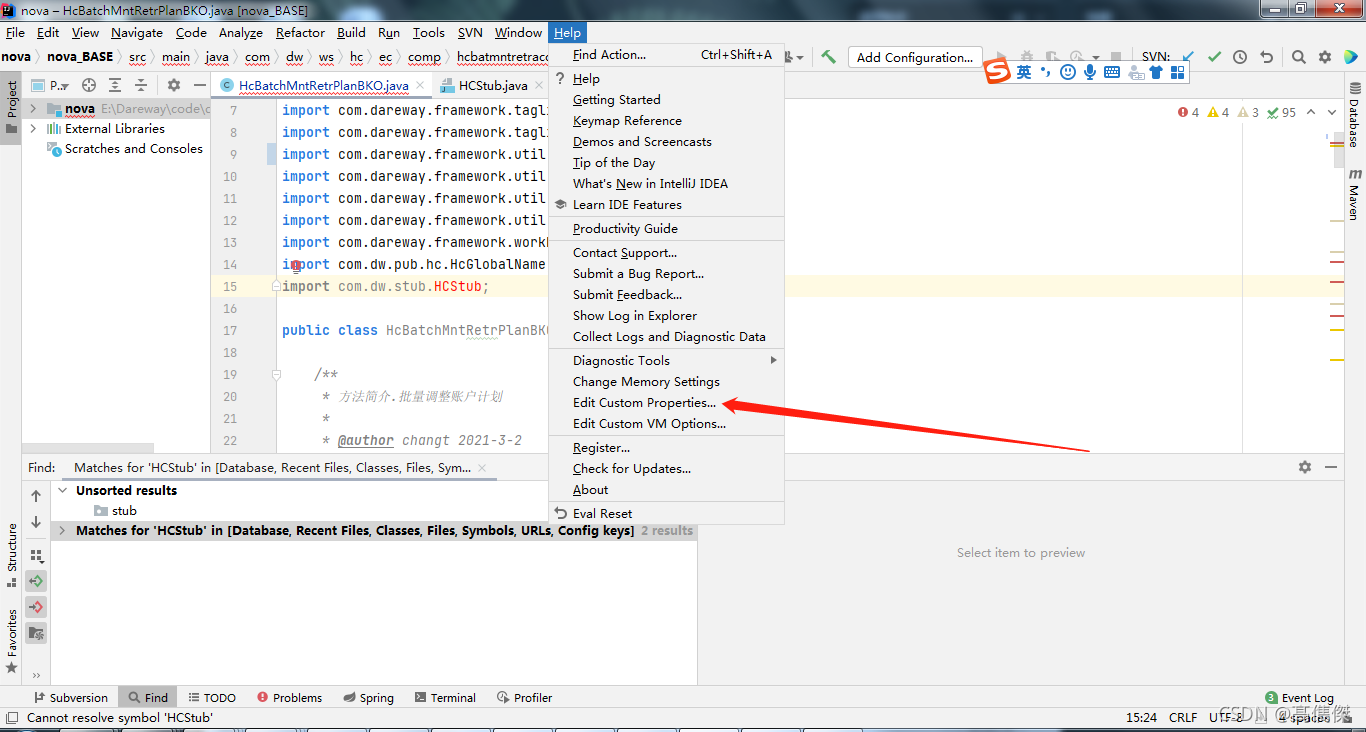

- IDEA引入类报错:“The file size (2.59 MB) exceeds the configured limit (2.56MB)

- The sorting algorithm including selection, bubble, and insertion

- 一天搞定JDBC02:开启事务

- GIS数据与CAD数据间带属性字段互相转换还原工具,解决ArcGIS等软件进行GIS数据转CAD数据无法保留属性字段问题

- 经典递归回溯问题之——解数独(LeetCode 37)

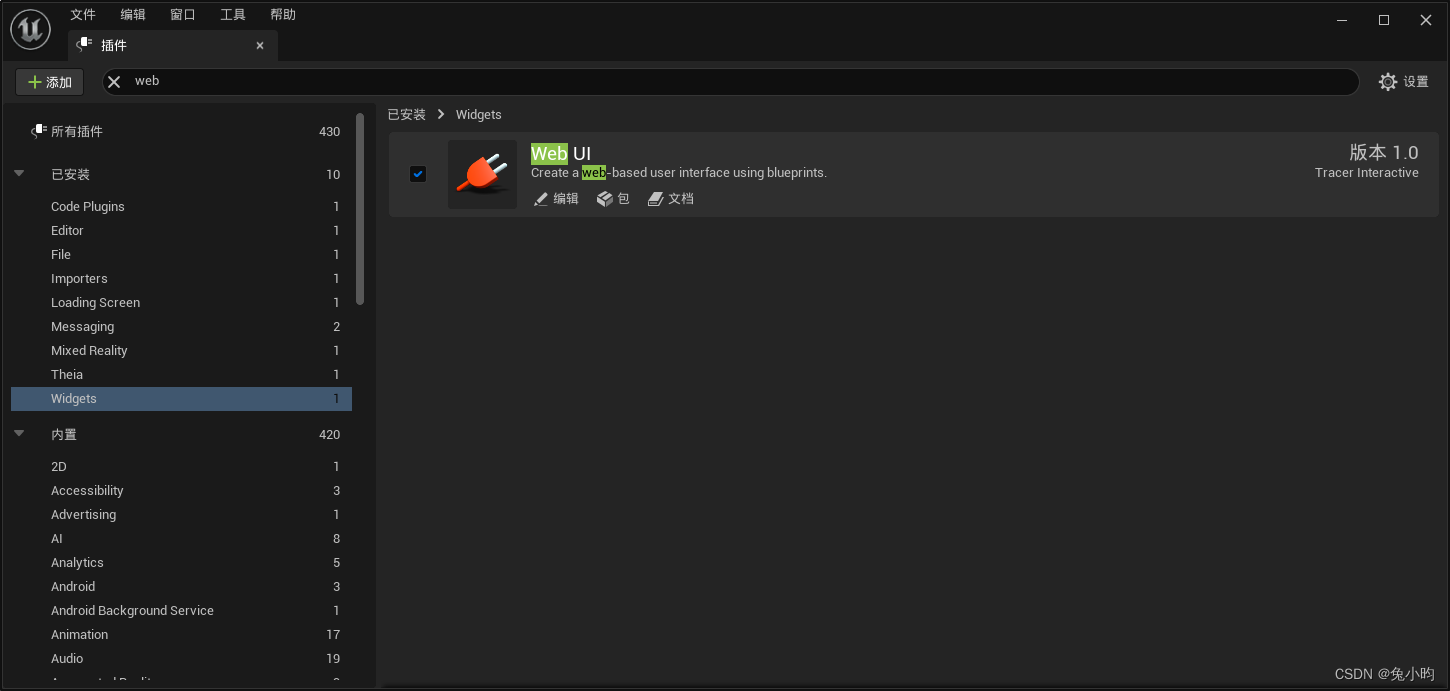

- 【UE虚幻引擎】UE5三步骤实现AI漫游与对话行为

猜你喜欢

随机推荐

IDEA引入类报错:“The file size (2.59 MB) exceeds the configured limit (2.56MB)

YOLOv5应用轻量级通用上采样算子CARAFE

TCP协议详解

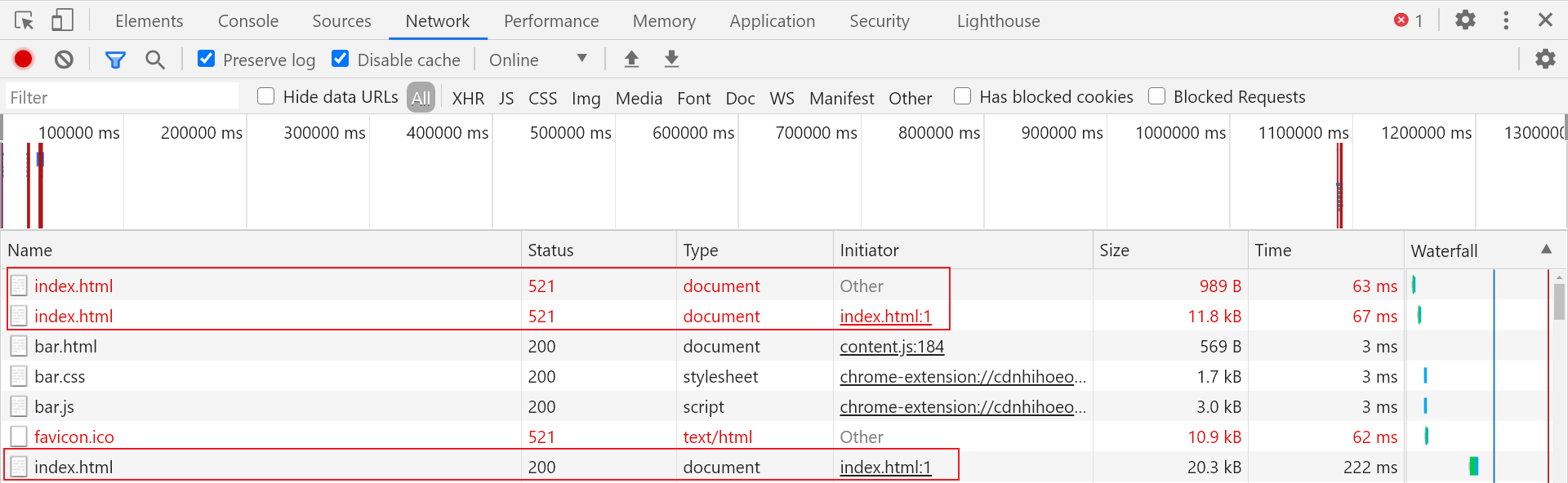

【JS 逆向百例】某网站加速乐 Cookie 混淆逆向详解

js异步变同步、同步变异步

【UE虚幻引擎】UE5三步骤实现AI漫游与对话行为

DWB主题事实及ST数据应用层构建,220803,,

设计信息录入界面,完成人员基本信息的录入工作,

【NOI模拟赛】纸老虎博弈(博弈论SG函数,长链剖分)

字节跳动岗位薪酬体系曝光,看完我真的酸了...

解决报错: YarnScheduler: Initial job has not accepted any resources

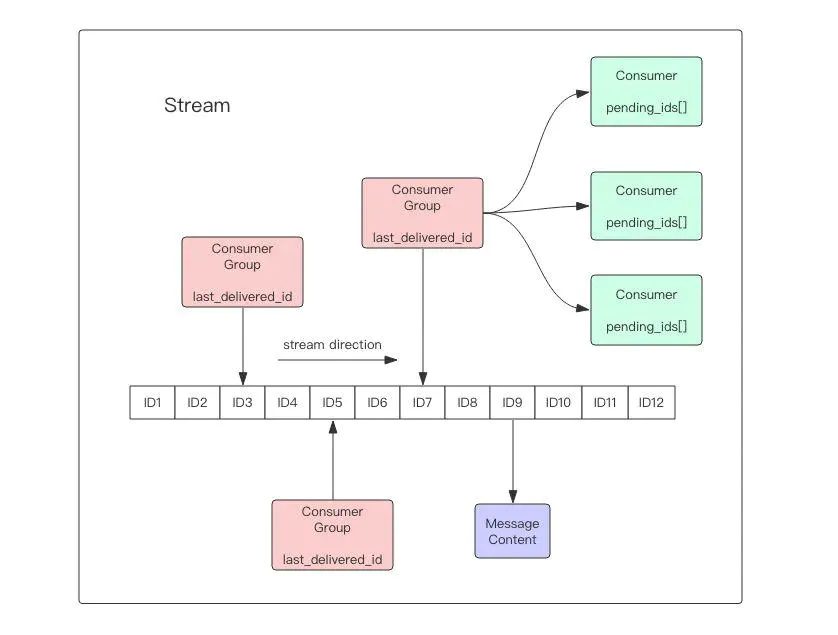

Redis分布式锁的应用

IntelliJ新建一个类或者包的快捷键是什么?

RT-Thread Studio学习(十一)IIC

MYSQL JDBC图书管理系统

「PHP基础知识」转换数据类型

form表单提交到数据库储存

卷积神经网络CNN

经典二分法查找的进阶题目——LeetCode33 搜索旋转排序数组

unity2D横版游戏教程7-敌人AI死亡效果