当前位置:网站首页>Storage space modifier in CUDA

Storage space modifier in CUDA

2022-07-02 06:28:00 【Little Heshang sweeping the floor】

Variable Memory Space Specifiers

The variable memory space specifier indicates the memory location of the variable on the device .

Nothing described in this section is declared in the device code __device__、__shared__ and __constant__ Automatic variables of memory space specifiers usually reside in registers . however , In some cases , The compiler may choose to place it in local memory , This may have adverse performance consequences , Such as Device memory access Described in .

1 __device__

__device__ The memory space specifier declares a variable residing on the device .

At most one of the other memory space specifiers defined in the next three sections can be associated with __device__ Use it together , To further indicate which memory space the variable belongs to . If they don't exist , Then the variable :

- Resides in the global memory space ,

- Has the ability to create it CUDA The life cycle of context ,

- Each device has a different object ,

- From all threads and hosts in the grid through the runtime library (

cudaGetSymbolAddress() / cudaGetSymbolSize() / cudaMemcpyToSymbol() / cudaMemcpyFromSymbol()) visit .

2. __constant__

__constant__ Memory space specifier , Optional and __device__ Use it together , Declare a variable :

- Resides in constant memory space ,

- Has the ability to create it CUDA The life cycle of context ,

- Each device has a different object ,

- From all threads and hosts in the grid through the runtime library (

cudaGetSymbolAddress() / cudaGetSymbolSize() / cudaMemcpyToSymbol() / cudaMemcpyFromSymbol()) visit .

3 __shared__

__shared__ Memory space specifier , Optional and __device__ Use it together , Declare a variable :

- Resides in the shared memory space of the thread block ,

- Have a life cycle of blocks ,

- Each block has a different object ,

- It can only be accessed from all threads in the block ,

- No fixed address .

When declaring variables in shared memory as external arrays , for example :

extern __shared__ float shared[];

The size of the array is determined at startup ( see also Perform configuration ). All variables declared in this way start at the same address in memory , Therefore, the layout of variables in the array must be explicitly managed by offsets . for example , If you want to be equivalent to ,

short array0[128];

float array1[64];

int array2[256];

Arrays can be declared and initialized in the following ways :

extern __shared__ float array[];

__device__ void func() // __device__ or __global__ function

{

short* array0 = (short*)array;

float* array1 = (float*)&array0[128];

int* array2 = (int*)&array1[64];

}

Please note that , Pointers need to be aligned with the type they point to , So the following code doesn't work , because array1 Not aligned to 4 Bytes .

extern __shared__ float array[];

__device__ void func() // __device__ or __global__ function

{

short* array0 = (short*)array;

float* array1 = (float*)&array0[127];

}

surface 4 Lists the alignment requirements for built-in vector types .

4. managed

__managed__ Memory space specifier , Optional and __device__ Use it together , Declare a variable :

- Can be referenced from device and host code , for example , You can get its address , It can also be read or written directly from the device or host function .

- With application lifecycle .

For more details , see also__managed__Memory space specifier .

5. restrict

nvcc adopt __restrict__ Keywords support restricted pointers .

C99 Restricted pointers are introduced in , To alleviate the presence of c The problem of aliasing in type languages , This problem suppresses various optimizations from code reordering to common subexpression elimination .

The following is an example affected by the aliasing problem , Using restricted pointers can help the compiler reduce the number of instructions :

void foo(const float* a,

const float* b,

float* c)

{

c[0] = a[0] * b[0];

c[1] = a[0] * b[0];

c[2] = a[0] * b[0] * a[1];

c[3] = a[0] * a[1];

c[4] = a[0] * b[0];

c[5] = b[0];

...

}

The effect here is to reduce the number of memory accesses and calculations . This is due to “ cache ” The load is balanced by the increased register pressure caused by the common sub expression .

Due to register pressure in many CUDA Code is a key problem , Therefore, due to the reduced occupancy , Using a restricted pointer will affect CUDA Code has a negative performance impact .

边栏推荐

- 阿里云MFA绑定Chrome浏览器

- 10 erreurs classiques de MySQL

- Sentinel rules persist to Nacos

- Tensorrt command line program

- ShardingSphere-JDBC篇

- Singleton mode compilation

- 华为MindSpore开源实习机试题

- LeetCode 90. Subset II

- DeprecationWarning: .ix is deprecated. Please use.loc for label based indexing or.iloc for positi

- CUDA中的Warp matrix functions

猜你喜欢

It is said that Kwai will pay for the Tiktok super fast version of the video? How can you miss this opportunity to collect wool?

Decryption skills of encrypted compressed files

Linear DP (split)

稀疏数组(非线性结构)

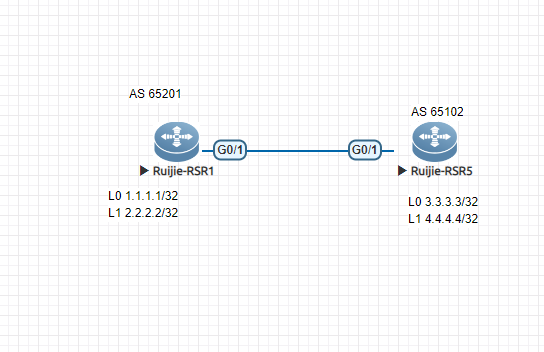

Ruijie ebgp configuration case

Log (common log framework)

【张三学C语言之】—深入理解数据存储

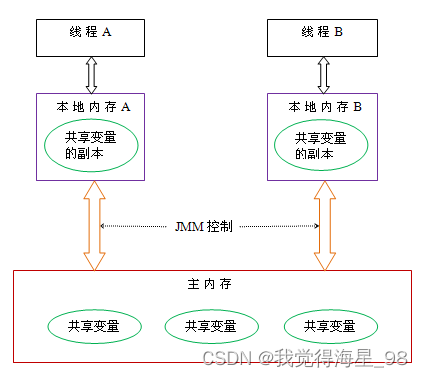

深入了解JUC并发(二)并发理论

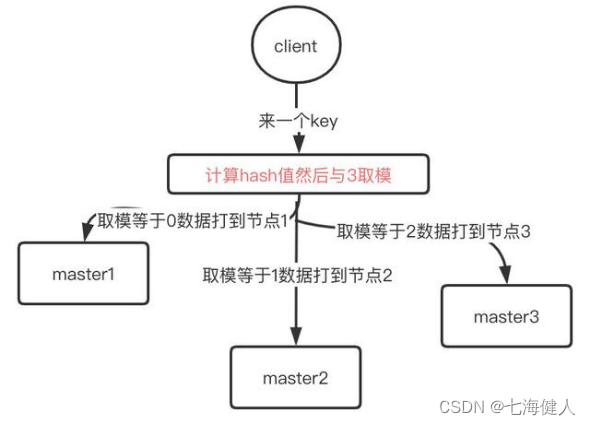

Redis——Cluster数据分布算法&哈希槽

Monitoring uplink of VRRP

随机推荐

AWD学习

日期时间API详解

ShardingSphere-JDBC篇

记录一次RDS故障排除--RDS容量徒增

MySQL的10大经典错误

DeprecationWarning: .ix is deprecated. Please use.loc for label based indexing or.iloc for positi

Redis——热点key问题

Golang -- map capacity expansion mechanism (including source code)

日志(常用的日志框架)

LeetCode 90. Subset II

Tensorrt command line program

LeetCode 40. Combined sum II

Amazon AWS data Lake Work Pit 1

构建学习tensorflow

FE - Weex 使用简单封装数据加载插件为全局加载方法

The Chinese word segmentation task is realized by using traditional methods (n-gram, HMM, etc.), neural network methods (CNN, LSTM, etc.) and pre training methods (Bert, etc.)

unittest.TextTestRunner不生成txt测试报告

数据科学【八】:SVD(一)

Does the assignment of Boolean types such as tag attribute disabled selected checked not take effect?

TensorRT的功能