Duplex contextual relation network for polyp segmentation

2022-06-28 19:20:00 【sigmoidAndRELU】

Colonoscopy image segmentation paper reading

The overall structure of the paper

Paper title : Duplex Contextual Relation Network for Polyp Segmentation (ISBI 2022)

Authors' affiliation : Beijing University of Posts and Telecommunications

Authors : Zijin Yin et al.

Code address : https://github.com/PRIS-CV/DCRNet/blob/master/lib/DCRNet.py

Abstract

Automatic polyp segmentation in colonoscopy images plays a key role in the early diagnosis of colorectal cancer (CRC). However, the diversity of polyp appearance greatly increases the difficulty of accurate segmentation. Existing work mainly focuses on learning context information within a single image and fails to exploit the visual patterns that polyps share across images. This paper explores context dependencies from the perspective of the whole dataset and proposes a duplex contextual relation network (DCRNet) that captures both within-image and cross-image context. Based on these two kinds of similarity, the features of each input region can be enhanced by embedding contextual regions. To store the region features embedded from previous images during training, an episodic memory is designed and operated as a queue. The method is evaluated on EndoScene, Kvasir-SEG and the recently released large-scale PICCOLO dataset. Experimental results show that DCRNet outperforms state-of-the-art methods on widely used evaluation metrics.

Contributions :

1. Enhance the features of each region by embedding contextual regions;

2. Design an episodic memory that is operated as a queue;

3. Propose DCRNet;

4. The model performs well on several colonoscopy polyp datasets.

Introduction

In the diagnosis and treatment of colon cancer, analysing polyp regions is a key step, and polypectomy is a direct way to prevent and treat early colorectal cancer. Colonoscopy images show the patient's colon clearly, but localising and segmenting polyps is still difficult for two reasons: 1. polyps vary widely in appearance; 2. the boundary between a polyp and the colonic mucosa is often blurry. As shown in the figure:

From the images we can see that some polyps are obvious, as in (a) and (b), where the raised part is the polyp. The one in (d) is very pronounced, while the one in (c) is inconspicuous and easy to miss without looking carefully.

Related work

A brief overview of existing work:

1. Multi-scale feature extraction networks: ACSNet (MICCAI 2020) combines context information with local details to cope with the diversity of polyp features.

PraNet aggregates multi-scale features, extracts a contour map from local features, and refines the segmentation map through upsampling.

2. Auxiliary information to constrain the segmentation result: SFANet (MICCAI 2019) uses region-boundary constraints together with selective feature aggregation to improve segmentation accuracy.

Key point: these methods all seem to look for segmentation features within a single image. Doesn't that ignore the implicit similarity between lesions in different images, which could guide how each image is segmented? If so, such a model can only segment obvious lesions well. Implicitly grouping polyp images by type, so that easy images are segmented simply while complex or inconspicuous ones get special treatment, makes a lot of sense!

So this paper introduces a mechanism called episodic memory!

Supporting evidence: the paper "Content-based medical image retrieval of CT images of liver lesions using manifold learning" demonstrated the value of retrieving other images when assessing radiological lesions.

Related results: the same idea has been used in metric learning.

Therefore, this paper adopts the idea and studies feature relations both across images and within an image, from the perspective of the whole dataset.

Summary of the work :

1. Intra-image contextual relation module

2. Cross-image (external) contextual relation module

Both modules are plug-and-play.

Model structure

First, the network framework diagram. The network consists of three parts: an encoder, a decoder, and a feature-processing module at the bottleneck.

The encoder-decoder used in the paper is a UNet based on ResNet34, so there are no more details here. Straight to the main part!

Internal context

    import torch
    from torch.nn import Conv2d, Module, Parameter, Softmax


    class PAM_Module(Module):
        """Position attention module."""
        # Ref from SAGAN

        def __init__(self, in_dim):
            super(PAM_Module, self).__init__()
            self.chanel_in = in_dim

            # 1x1 convolutions: query/key are reduced to C/8 channels, value keeps C
            self.query_conv = Conv2d(in_channels=in_dim, out_channels=in_dim//8, kernel_size=1)
            self.key_conv = Conv2d(in_channels=in_dim, out_channels=in_dim//8, kernel_size=1)
            self.value_conv = Conv2d(in_channels=in_dim, out_channels=in_dim, kernel_size=1)
            self.gamma = Parameter(torch.zeros(1))  # learnable residual weight, starts at 0

            self.softmax = Softmax(dim=-1)

        def forward(self, x):
            """
            inputs:
                x: input feature maps (B x C x H x W)
            returns:
                out: attention value + input feature
                attention: B x (HxW) x (HxW)
            """
            m_batchsize, C, height, width = x.size()
            # proj_query: B x (HxW) x C', proj_key: B x C' x (HxW)
            proj_query = self.query_conv(x).view(m_batchsize, -1, width*height).permute(0, 2, 1)
            proj_key = self.key_conv(x).view(m_batchsize, -1, width*height)
            # energy[b, i, j]: similarity between positions i and j
            energy = torch.bmm(proj_query, proj_key)
            attention = self.softmax(energy)
            proj_value = self.value_conv(x).view(m_batchsize, -1, width*height)

            # weighted sum of values over all positions j, for every position i
            out = torch.bmm(proj_value, attention.permute(0, 2, 1))
            out = out.view(m_batchsize, C, height, width)

            out = self.gamma*out + x
            return out

The author's comments in this code are quite detailed. The module builds a relation between every pair of pixel positions in the current image and then uses that relation to reweight the value-projected input features. A residual connection is kept as usual, scaled by the learnable `gamma`. That's it.
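To make the weighting step concrete, here is a minimal, dependency-free sketch of the same computation on a handful of position vectors. It is an illustration only, not the author's module: the helper names `softmax` and `position_attention` are made up for this example, and identity projections are assumed in place of the learned 1x1 query/key/value convolutions.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def position_attention(feats, gamma):
    """feats: list of N position vectors, each a list of C floats.

    Assumption: query, key and value are the features themselves
    (the real module applies learned 1x1 convolutions first).
    Returns (out, attn) with out[i] = feats[i] + gamma * sum_j attn[i][j] * feats[j].
    """
    n = len(feats)
    channels = len(feats[0])
    # energy[i][j]: dot-product similarity between positions i and j
    energy = [[sum(a * b for a, b in zip(feats[i], feats[j])) for j in range(n)]
              for i in range(n)]
    # each row is normalised over j, like Softmax(dim=-1) on the energy matrix
    attn = [softmax(row) for row in energy]
    out = []
    for i in range(n):
        context = [sum(attn[i][j] * feats[j][c] for j in range(n))
                   for c in range(channels)]
        # residual connection scaled by gamma
        out.append([x + gamma * v for x, v in zip(feats[i], context)])
    return out, attn


if __name__ == "__main__":
    demo = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    enhanced, weights = position_attention(demo, gamma=0.5)
    print(enhanced)
    print(weights)
```

With `gamma = 0`, which is the initial value in `PAM_Module`, the output equals the input, so the attention branch is blended in gradually as `gamma` is learned.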

External context (the first time I have seen this, and worth a close look)

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    # ResNet34Unet, conv1d and conv2d are not defined in this excerpt;
    # they are assumed to come from the linked repository.


    class DCRNet(ResNet34Unet):
        def __init__(self,
                     bank_size=20,
                     num_classes=1,
                     num_channels=3,
                     is_deconv=False,
                     decoder_kernel_size=3,
                     pretrained=True,
                     feat_channels=512
                     ):
            super().__init__(num_classes=1,
                             num_channels=3,
                             is_deconv=False,
                             decoder_kernel_size=3,
                             pretrained=True)

            self.bank_size = bank_size
            self.register_buffer("bank_ptr", torch.zeros(1, dtype=torch.long))  # memory bank pointer
            self.register_buffer("bank", torch.zeros(self.bank_size, feat_channels, num_classes))  # memory bank
            self.bank_full = False

            # =====Attentive Cross Image Interaction==== #
            self.feat_channels = feat_channels
            self.L = nn.Conv2d(feat_channels, num_classes, 1)
            self.X = conv2d(feat_channels, 512, 3)
            self.phi = conv1d(512, 256)
            self.psi = conv1d(512, 256)
            self.delta = conv1d(512, 256)
            self.rho = conv1d(256, 512)
            self.g = conv2d(512 + 512, 512, 1)

            # =========Dual Attention========== #
            self.sa_head = PAM_Module(feat_channels)

            # =========Attention Fusion========= #
            self.fusion = nn.Conv2d(feat_channels, feat_channels, 1)

        # ==Initiate the pointer of bank buffer== #
        def init(self):
            self.bank_ptr[0] = 0
            self.bank_full = False

        @torch.no_grad()  # very important: the bank update must not be tracked by autograd
        def update_bank(self, x):
            ptr = int(self.bank_ptr)
            batch_size = x.shape[0]
            vacancy = self.bank_size - ptr
            if batch_size >= vacancy:
                self.bank_full = True
            # only as many entries as fit before the end of the buffer are stored
            pos = min(batch_size, vacancy)
            self.bank[ptr:ptr+pos] = x[0:pos].clone()
            # update pointer
            ptr = (ptr + pos) % self.bank_size
            self.bank_ptr[0] = ptr

        def down(self, x):
            e1 = self.encoder1(x)
            e2 = self.encoder2(e1)
            e3 = self.encoder3(e2)
            e4 = self.encoder4(e3)
            return e4, e3, e2, e1

        def up(self, feat, e3, e2, e1, x):
            center = self.center(feat)
            d4 = self.decoder4(torch.cat([center, e3], 1))
            d3 = self.decoder3(torch.cat([d4, e2], 1))
            d2 = self.decoder2(torch.cat([d3, e1], 1))
            d1 = self.decoder1(torch.cat([d2, x], 1))

            f1 = self.finalconv1(d1)
            f2 = self.finalconv2(d2)
            f3 = self.finalconv3(d3)
            f4 = self.finalconv4(d4)

            # upsample the deeper side outputs to the resolution of f1
            f4 = F.interpolate(f4, scale_factor=8, mode='bilinear', align_corners=True)
            f3 = F.interpolate(f3, scale_factor=4, mode='bilinear', align_corners=True)
            f2 = F.interpolate(f2, scale_factor=2, mode='bilinear', align_corners=True)
            return f4, f3, f2, f1

        def region_representation(self, input):
            X = self.X(input)
            L = self.L(input)
            aux_out = L
            batch, n_class, height, width = L.shape
            l_flat = L.view(batch, n_class, -1)
            # M = B * N * HW
            M = torch.softmax(l_flat, -1)
            channel = X.shape[1]
            # X_flat = B * C * HW
            X_flat = X.view(batch, channel, -1)
            # f_k = B * C * N
            f_k = (M @ X_flat.transpose(1, 2)).transpose(1, 2)
            return aux_out, f_k, X_flat, X

        def attentive_interaction(self, bank, X_flat, X):
            batch, n_class, height, width = X.shape
            # query = S * C
            query = self.phi(bank).squeeze(dim=2)
            # key: = B * C * HW
            key = self.psi(X_flat)
            # logit = HW * S * B (cross image relation)
            logit = torch.matmul(query, key).transpose(0, 2)
            # attn = HW * S * B
            attn = torch.softmax(logit, 2)  # softmax over dim 2, i.e. the B axis of the HW * S * B tensor
            # delta = S * C
            delta = self.delta(bank).squeeze(dim=2)
            # attn_sum = B * C * HW
            attn_sum = torch.matmul(attn.transpose(1, 2), delta).transpose(1, 2)
            # x_obj = B * C * H * W
            X_obj = self.rho(attn_sum).view(batch, -1, height, width)
            concat = torch.cat([X, X_obj], 1)
            out = self.g(concat)
            return out

        def forward(self, x, flag='train'):
            batch_size = x.shape[0]

            # === Stem === #
            x = self.firstconv(x)
            x = self.firstbn(x)
            x = self.firstrelu(x)
            x_ = self.firstmaxpool(x)

            # === Encoder === #
            e4, e3, e2, e1 = self.down(x_)

            # === Attentive Cross Image Interaction === #
            aux_out, patch, feats_flat, feats = self.region_representation(e4)

            if flag == 'train':
                # training: push the current batch into the bank, then attend over its valid part
                self.update_bank(patch)
                ptr = int(self.bank_ptr)
                if self.bank_full:
                    feature_aug = self.attentive_interaction(self.bank, feats_flat, feats)
                else:
                    feature_aug = self.attentive_interaction(self.bank[0:ptr], feats_flat, feats)
            elif flag == 'test':
                # testing: only the region features of the current batch are used
                feature_aug = self.attentive_interaction(patch, feats_flat, feats)

            # === Dual Attention === #
            sa_feat = self.sa_head(e4)

            # === Fusion === #
            feats = sa_feat + feature_aug

            # === Decoder === #
            f4, f3, f2, f1 = self.up(feats, e3, e2, e1, x)
            aux_out = F.interpolate(aux_out, scale_factor=32, mode='bilinear', align_corners=True)
            return aux_out, f4, f3, f2, f1
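The pointer arithmetic in `update_bank` is easier to follow in isolation. The sketch below mirrors that logic with plain Python lists instead of tensors. The class `MemoryBank` and its `valid` method are hypothetical names introduced for this illustration, and the behaviour is inferred from the excerpt above rather than from any additional documentation.

```python
class MemoryBank:
    """Fixed-size queue of region features, mirroring update_bank's pointer logic."""

    def __init__(self, size):
        self.size = size
        self.slots = [None] * size   # plays the role of the `bank` buffer
        self.ptr = 0                 # plays the role of `bank_ptr`
        self.full = False            # plays the role of `bank_full`

    def update(self, batch):
        vacancy = self.size - self.ptr
        if len(batch) >= vacancy:
            self.full = True
        # As in the excerpt: entries that do not fit before the end of the
        # buffer are dropped, they are not wrapped around to the front.
        pos = min(len(batch), vacancy)
        self.slots[self.ptr:self.ptr + pos] = batch[:pos]
        self.ptr = (self.ptr + pos) % self.size

    def valid(self):
        """Entries used for attention: the whole bank once it has filled, else the written prefix."""
        return self.slots if self.full else self.slots[:self.ptr]


if __name__ == "__main__":
    bank = MemoryBank(size=5)
    for batch in (["a", "b"], ["c", "d"], ["e", "f"], ["g", "h"]):
        bank.update(batch)
        print(batch, "->", bank.valid(), "ptr =", bank.ptr)
```

Once the bank has filled, new batches overwrite the oldest slots starting from index 0, which is what makes the memory behave like a queue of recent region features.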

Experimental analysis

The experimental part mainly includes the following aspects :

| Dataset name | Number of images | Train | Valid | Test |
|---|---|---|---|---|
| EndoScene | 912 | 548 | 182 | 182 |
| Kvasir-SEG | 1000 | 600 | 200 | 200 |
| PICCOLO | 3433 | 2203 | 897 | 333 |
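As a quick sanity check on the split sizes in the table, the snippet below verifies that train, valid and test add up to the stated totals and prints the resulting percentages. The helper name `split_ratios` is only for illustration; the numbers are copied from the table.

```python
# (total, train, valid, test) as listed in the dataset table
splits = {
    "EndoScene": (912, 548, 182, 182),
    "Kvasir-SEG": (1000, 600, 200, 200),
    "PICCOLO": (3433, 2203, 897, 333),
}


def split_ratios(total, train, valid, test):
    """Return the train/valid/test shares in percent, rounded to one decimal."""
    assert train + valid + test == total, "split sizes must add up to the total"
    return tuple(round(100 * n / total, 1) for n in (train, valid, test))


if __name__ == "__main__":
    for name, row in splits.items():
        print(name, split_ratios(*row))
```

Kvasir-SEG is an exact 60/20/20 split, while the other two datasets deviate from it to different degrees.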

| GPU | Learning rate | Epochs | Batch size | Memory bank size |
|---|---|---|---|---|
| NVIDIA RTX 2080Ti | 1e-4 | 150 | 4 | 20 (Kvasir-SEG) / 40 (EndoScene and PICCOLO) |

The visualisations and the tables both show that the model is effective.

It also improves noticeably on the two classical models it is compared with, which supports the model design and the soundness of the intra-image and cross-image context reasoning.

Discussion

The biggest highlight of this paper is the external memory. As for the overall architecture, the idea worth learning is this kind of implicit classification, which is also the mechanism behind the so-called external context module!
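The core idea of the external memory can be sketched conceptually in a few lines: every pixel feature attends over the region features stored in memory, and the attended context is concatenated to the pixel feature. This is a simplified illustration under stated assumptions, not the author's implementation: `cross_image_context` is a hypothetical helper, identity projections stand in for the learned `phi`, `psi`, `delta`, `rho` and `g` layers, and the attention is normalised over the memory slots, which may differ from the exact tensor layout in `attentive_interaction`.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_image_context(pixels, memory):
    """pixels: N pixel features; memory: S stored region features (all of length C).

    Assumption: raw dot products are used as similarity scores.
    Returns N vectors of length 2C: [pixel feature, attended memory context].
    """
    enhanced = []
    for p in pixels:
        # how strongly this pixel relates to each stored region feature
        weights = softmax([dot(p, m) for m in memory])
        # weighted average of the memory entries
        context = [sum(w * m[c] for w, m in zip(weights, memory))
                   for c in range(len(p))]
        enhanced.append(p + context)  # list concatenation, like torch.cat on channels
    return enhanced


if __name__ == "__main__":
    pixels = [[1.0, 0.0], [0.0, 1.0]]
    memory = [[5.0, 0.0], [0.0, 5.0]]
    for row in cross_image_context(pixels, memory):
        print(row)
```

Each pixel pulls in mostly the memory entry it resembles, which is the "implicit classification" effect described above: similar-looking regions from other images reinforce each other.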

Shamelessly asking for a like and a bookmark. Thank you for your support!!!

边栏推荐

- C语言-函数知识点

- Live app system source code, automatically playing when encountering video dynamically

- try except 添加辅助新列

- math_ Proving common equivalent infinitesimal & Case & substitution

- Collection of real test questions

- new String(“hello“)之后,到底创建了几个对象?

- 从设计交付到开发,轻松畅快高效率!

- matlab 受约束的 Delaunay 三角剖分

- pd. Difference between before and after cut interval parameter setting

- Building tin with point cloud

猜你喜欢

First day of new work

C#连接数据库完成增删改查操作

Technical methodology of new AI engine under the data infrastructure upgrade window

数据基础设施升级窗口下,AI 新引擎的技术方法论

Hands on Teaching of servlet use (1)

Render function parsing

Summary of the use of qobjectcleanuphandler in QT

new String(“hello“)之后,到底创建了几个对象?

应用实践 | 10 亿数据秒级关联,货拉拉基于 Apache Doris 的 OLAP 体系演进(附 PPT 下载)

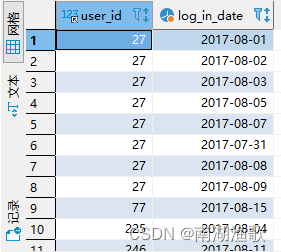

sql面试题:求连续最大登录天数

随机推荐

SQL Server2019 新建 SQL Server身份验证用户名 并登录

[unity3d] emission (raycast) physical ray (Ray)

1 goal, 3 fields, 6 factors and 9 links of digital transformation

深度学习需要多强的数学基础?

Are there any regular and safe foreign exchange dealers in China?

There are thousands of roads. Why did this innovative storage company choose this one?

About Critical Values

[C #] explain the difference between value type and reference type

Find out the users who log in for 7 consecutive days and 30 consecutive days

月环比sql实现

微博评论的高性能高可用计算架构方案

Graduation project - Design and development of restaurant management game based on unity (with source code, opening report, thesis, defense PPT, demonstration video and database)

Opengauss kernel: analysis of SQL parsing process

Ffmpeg usage in video compression processing

Live app system source code, automatically playing when encountering video dynamically

Paper 3 vscode & texlive & sumatrapdf create a perfect tool for writing papers

3D rotatable particle matrix

Sound network releases lingfalcon Internet of things cloud platform, which can build sample scenarios in one hour

多测师肖sirapp中riginal error: Could not extract PIDs from ps output. PIDS: [], Procs: [“bad pid

Bayesian inference problem, MCMC and variational inference