
Neural Network Study Notes 3 - LSTM Long Short-Term Memory Network

2022-07-30 10:40:00 【Oreos are delicious】

Contents

1.1 The general structure of the recurrent neural network

1.2 Time Delay Neural Network (TDNN)

For the whole post, the principle and the code implementation are best consumed together with the video; it tastes better that way.

Video: LSTM Long Short-Term Memory Network from Principle to Programming Implementation (Bilibili)

Code link

Link: https://pan.baidu.com/s/1lrtbjpgpegiAGLOLLZcYoA

Extraction code: 5lt3

Before getting to LSTM, we first need to understand the general family of networks it belongs to: recurrent neural networks.

1. Recurrent Neural Networks

1.1 The general structure of the recurrent neural network

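A recurrent neural network (RNN) is a class of neural networks with short-term memory. In an RNN, a neuron can receive information not only from other neurons but also from itself, which gives the network a structure containing loops.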
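In formula form, a minimal sketch of the simple RNN update, assuming the usual textbook notation (these symbol names are assumptions, not taken from the post's figures): x_t is the input at time t, h_t the hidden state, z_t the net input of the hidden neurons, U the recurrent weight matrix, W the input weight matrix, b the bias, and f an activation such as tanh.

```latex
\begin{aligned}
z_t &= U\,h_{t-1} + W\,x_t + b,\\
h_t &= f(z_t).
\end{aligned}
```

The U h_{t-1} term is the "information from itself": the hidden layer feeds its own previous output back in as an input at the next step.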

1.2 Time Delay Neural Network (TDNN)

How does a recurrent neural network form short-term memory?

Answer: by adding an extra delay unit that stores the network's history (which can include inputs, outputs, hidden states, and so on).
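A minimal sketch of such a delay unit, assuming it stores the last K inputs (storing outputs or hidden states works the same way). At each time step the layer sees the current input stacked with the delayed ones. The sizes d, T, K, the output size 4 and the random data are hypothetical.

```matlab
% Delay line (TDNN-style): at time t the layer sees x_t, x_{t-1}, ..., x_{t-K}.
% Assumption: d, T, K, the output size and the random data are illustrative only.
d = 3;              % input dimension
T = 6;              % sequence length
K = 2;              % number of delayed steps kept in the buffer
X = rand(d, T);     % one input sequence, one column per time step

buffer = zeros(d, K + 1);          % columns hold x_t, x_{t-1}, ..., x_{t-K}
stacked = zeros(d * (K + 1), T);   % stacked inputs, one column per time step
for t = 1:T
    buffer = [X(:, t), buffer(:, 1:K)];   % shift the history, push the newest input
    stacked(:, t) = buffer(:);            % concatenate current and delayed inputs
end

% A TDNN layer then applies ordinary (non-recurrent) weights to the stacked vector.
Wd = rand(4, d * (K + 1));   % hypothetical output size 4
Y = tanh(Wd * stacked);      % 4 x T outputs
```

Before enough history exists (t <= K), the missing delayed inputs are simply zeros.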

1.3 Unrolling in time

The figure shows intuitively how the network unrolls over time and how earlier time steps influence later ones.
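Unrolled, the network is just a loop that reuses the same weights at every step. Here is a minimal sketch of the forward pass, assuming the recurrence z_t = U*h_{t-1} + W*x_t + b and h_t = tanh(z_t). The sizes and random weights are hypothetical.

```matlab
% Unrolled forward pass of a simple RNN over T time steps.
% Assumptions: sizes, the tanh nonlinearity and the random weights are hypothetical.
d = 3;                   % input dimension
n = 5;                   % number of hidden units
T = 4;                   % sequence length
X = rand(d, T);          % input sequence, one column per time step
U = 0.5 * randn(n, n);   % recurrent (hidden-to-hidden) weights, shared over time
W = 0.5 * randn(n, d);   % input-to-hidden weights, shared over time
b = zeros(n, 1);         % bias

H = zeros(n, T + 1);     % H(:, 1) is h_0 = 0; H(:, t + 1) is h_t
Z = zeros(n, T);         % net inputs z_t
for t = 1:T
    Z(:, t) = U * H(:, t) + W * X(:, t) + b;   % uses the previous hidden state
    H(:, t + 1) = tanh(Z(:, t));
end
hT = H(:, end);          % final state: depends on x_1, ..., x_T through U
```

Each earlier input reaches hT only through the chain of U multiplications, which is exactly what the backpropagation below has to undo step by step.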

1.4 Backpropagation


δ_{t,k} = ∂L_t/∂z_k is the derivative of the loss at time step t with respect to z_k, the net input of the hidden neurons at time step k.
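Assume the simple RNN z_k = U h_{k-1} + W x_k + b and h_k = f(z_k). This is the standard textbook form and not necessarily the exact notation of the missing figure. Under that assumption, δ_{t,k} can be computed backwards in time from δ_{t,t}, which comes directly from the output layer at step t. This is the usual backpropagation-through-time recursion:

```latex
\begin{aligned}
\delta_{t,k} &= \frac{\partial \mathcal{L}_t}{\partial z_k}
  = \frac{\partial h_k}{\partial z_k}\,
    \frac{\partial z_{k+1}}{\partial h_k}\,
    \frac{\partial \mathcal{L}_t}{\partial z_{k+1}}
  = \operatorname{diag}\!\big(f'(z_k)\big)\,U^{\top}\,\delta_{t,k+1},\\
\delta_{t,k} &= \operatorname{diag}\!\big(f'(z_k)\big)U^{\top}\,
  \operatorname{diag}\!\big(f'(z_{k+1})\big)U^{\top}\cdots
  \operatorname{diag}\!\big(f'(z_{t-1})\big)U^{\top}\,\delta_{t,t},\\
\frac{\partial \mathcal{L}_t}{\partial U} &= \sum_{k=1}^{t} \delta_{t,k}\,h_{k-1}^{\top},
\qquad
\frac{\partial \mathcal{L}}{\partial U} = \sum_{t=1}^{T}\sum_{k=1}^{t} \delta_{t,k}\,h_{k-1}^{\top}.
\end{aligned}
```

The second line is the key one. Going back t - k steps multiplies t - k factors of the form diag(f'(z)) U^T.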

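1.5 Vanishing and exploding gradients

Because δ_{t,k} is obtained from δ_{t,t} by repeatedly multiplying by the same kind of factor (the derivative of the activation times the transposed recurrent weight matrix), its magnitude can shrink or grow exponentially as the distance t - k increases. These are the vanishing gradient and exploding gradient problems. Common remedies:

Exploding gradients: weight decay; gradient clipping.

Vanishing gradients: adjust the model, for example by using a gated architecture such as the LSTM described next.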
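A quick numerical sketch of both effects and of one remedy. Part (a) uses hypothetical scalar factors gamma as stand-ins for the diag(f'(z)) U^T term. Part (b) clips a gradient by its L2 norm. As far as I know, this is what MATLAB's 'GradientThreshold' option does to each learnable parameter's gradient under its default 'l2norm' method. The threshold and vectors are illustrative.

```matlab
% (a) Repeatedly multiplying by the same factor over 50 time steps.
% Assumption: scalar gamma values stand in for diag(f'(z)) * U'.
steps = 0:50;
vanish  = 0.9 .^ steps;   % gamma = 0.9: shrinks toward 0 (vanishing)
explode = 1.1 .^ steps;   % gamma = 1.1: grows without bound (exploding)
fprintf('after 50 steps: %.4g (gamma = 0.9), %.4g (gamma = 1.1)\n', ...
        vanish(end), explode(end));

% (b) Gradient clipping by L2 norm: if ||g|| > threshold, rescale g to that norm.
clipByNorm = @(g, threshold) g * min(1, threshold / max(norm(g), eps));
g = [3; 4];                             % hypothetical gradient with norm 5
gClipped = clipByNorm(g, 1);            % approximately [0.6; 0.8], norm 1
gSmall = clipByNorm([0.1; 0.2], 1);     % norm already below 1: left unchanged
```

Clipping bounds the size of an exploding update but does nothing for vanishing gradients. That is why the model itself is changed for those.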

2. LSTM gating principle

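Below is a minimal sketch of one LSTM time step in the standard formulation. It has an input gate, a forget gate, an output gate, a candidate state cTilde, the cell state c and the hidden state h. The sizes, the random weights and the forget-gate bias of 1 are hypothetical.

The key line is the additive cell update c = f .* cPrev + i .* cTilde. Memory is carried forward by element-wise scaling rather than by repeated multiplication with a weight matrix, so gradients can survive over many more time steps.

```matlab
% One LSTM time step. Each gate has its own input weights W*, recurrent
% weights U* and bias b*. Assumption: sizes and weights are hypothetical.
d = 3;                                 % input size
n = 4;                                 % number of hidden units
sigmoid = @(a) 1 ./ (1 + exp(-a));
x = rand(d, 1);                        % current input x_t
hPrev = zeros(n, 1);                   % previous hidden state h_{t-1}
cPrev = rand(n, 1);                    % previous cell state c_{t-1}

Wi = randn(n, d); Ui = randn(n, n); bi = zeros(n, 1);   % input gate
Wf = randn(n, d); Uf = randn(n, n); bf = ones(n, 1);    % forget gate (hypothetical bias 1)
Wo = randn(n, d); Uo = randn(n, n); bo = zeros(n, 1);   % output gate
Wc = randn(n, d); Uc = randn(n, n); bc = zeros(n, 1);   % candidate memory

ig = sigmoid(Wi * x + Ui * hPrev + bi);      % input gate: how much new content to write
fg = sigmoid(Wf * x + Uf * hPrev + bf);      % forget gate: how much old memory to keep
og = sigmoid(Wo * x + Uo * hPrev + bo);      % output gate: how much memory to expose
cTilde = tanh(Wc * x + Uc * hPrev + bc);     % candidate memory content

c = fg .* cPrev + ig .* cTilde;              % new cell state (additive update)
h = og .* tanh(c);                           % new hidden state
```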

3. MATLAB implementation

(1) Load the sequence data

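Load the speech training data. XTrain is a cell array containing 270 sequences of dimension 12 and varying length. YTrain is a categorical vector of the labels "1", "2", ..., "9", corresponding to the nine speakers. The entries of XTrain are matrices with 12 rows (one row per feature) and a varying number of columns (one column per time step).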

[XTrain,YTrain] = japaneseVowelsTrainData;

XTrain(1:5)

(2) Visualize the first time series in a plot. Each line corresponds to a feature.

figure

plot(XTrain{1}')

xlabel("Time Step")

title("Training Observation 1")

numFeatures = size(XTrain{1},1);

legend("Feature " + string(1:numFeatures),'Location','northeastoutside')

(3) Prepare the data for padding

During training, by default, the software splits the training data into mini-batches and pads the sequences so that they have the same length. Too much padding can have a negative impact on network performance.

To prevent adding too much padding during training, you can sort the training data by sequence length and choose a mini-batch size so that the sequences in each mini-batch have similar lengths. The figure below shows the effect of padding the sequences before and after sorting.
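To see why sorting helps, here is a small sketch that counts the padded time steps when each mini-batch is padded to its longest sequence, which is what 'SequenceLength','longest' does. The twelve sequence lengths and the mini-batch size of 4 are made up for illustration. The count also assumes the number of sequences is a multiple of the mini-batch size.

```matlab
% Padded time steps per mini-batch = (longest length in the batch) - (each length).
% Assumption: hypothetical lengths; numel(lengths) is a multiple of miniBatchSize.
lengths = [7 25 9 22 8 20 11 24 10 21 12 23];   % hypothetical sequence lengths
miniBatchSize = 4;

batches  = @(L) reshape(L, miniBatchSize, []);           % one column per mini-batch
padCount = @(L) sum(sum(max(batches(L), [], 1) - batches(L)));

padUnsorted = padCount(lengths);        % 96 padded time steps
padSorted   = padCount(sort(lengths));  % 32 padded time steps
```

With these numbers, sorting cuts the padding to a third. With the real data, the savings depend on how spread out the lengths are.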

Get the sequence length for each observation.

numObservations = numel(XTrain); % number of training sequences

sequenceLengths = zeros(1,numObservations); % preallocate the length vector

for i=1:numObservations

sequence = XTrain{i};

sequenceLengths(i) = size(sequence,2); % number of time steps (columns) in this sequence

end

(4) Sort the data by sequence length. Sorting keeps sequences of similar length together in the same mini-batch.

[sequenceLengths,idx] = sort(sequenceLengths);

XTrain = XTrain(idx);

YTrain = YTrain(idx);

(5) View the sorted sequence lengths in a bar chart.

figure

bar(sequenceLengths)

ylim([0 30])

xlabel("Sequence")

ylabel("Length")

title("Sorted Data")

(6) Choose a mini-batch size of 27 so that it divides the training data evenly and reduces the amount of padding in the mini-batches. The figure below illustrates the padding added to the sequences. Because there are 270 training sequences in total, this gives exactly ten mini-batches per epoch.
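The arithmetic behind this choice is simple. A mini-batch size divides the training set evenly only if it is a divisor of 270. Here is a short sketch that lists those divisors; the variable names are illustrative.

```matlab
% Mini-batch sizes that split 270 training sequences into equal mini-batches.
nTrain = 270;                                   % number of training sequences (from the text)
candidates = 1:nTrain;
evenSizes = candidates(mod(nTrain, candidates) == 0);
% evenSizes = [1 2 3 5 6 9 10 15 18 27 30 45 54 90 135 270]
numMiniBatches = nTrain / 27;                   % 10 mini-batches per epoch
```

Among these, 27 is a moderate size that still keeps each mini-batch's lengths close together after sorting.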

miniBatchSize = 27;

(7) Define the LSTM network architecture

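Define the LSTM network architecture. Specify the input size as 12 (the dimension of the input data). Specify a bidirectional LSTM layer with 100 hidden units that outputs the last element of the sequence. Finally, specify nine classes by including a fully connected layer of size 9, followed by a softmax layer and a classification layer.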

If you have access to the full sequence at prediction time, you can use a bidirectional LSTM layer in the network. A bidirectional LSTM layer learns from the complete sequence at each time step. If you do not have access to the full sequence at prediction time, for example when forecasting values or predicting one time step at a time, use an LSTM layer instead.
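Conceptually, a bidirectional layer runs one recurrent pass forward in time and another over the reversed sequence. It then stacks the two hidden states at each step, so every output column depends on the whole sequence. The sketch below uses plain tanh RNN cells in place of LSTM cells to keep it short, and the sizes and weights are hypothetical. The stacking is also why a bilstmLayer with 100 hidden units outputs 200 values per time step.

```matlab
% Bidirectional recurrence sketch. Assumption: simple tanh RNN cells stand in
% for LSTM cells; sizes and random weights are hypothetical.
d = 2; n = 3; T = 5;
X = rand(d, T);                         % input sequence, one column per time step
Wf = randn(n, d); Uf = randn(n, n);     % forward-direction weights
Wb = randn(n, d); Ub = randn(n, n);     % backward-direction weights
Hf = zeros(n, T); Hb = zeros(n, T);

h = zeros(n, 1);
for t = 1:T                  % forward pass: Hf(:, t) summarizes x_1 .. x_t
    h = tanh(Wf * X(:, t) + Uf * h);
    Hf(:, t) = h;
end

h = zeros(n, 1);
for t = T:-1:1               % backward pass: Hb(:, t) summarizes x_t .. x_T
    h = tanh(Wb * X(:, t) + Ub * h);
    Hb(:, t) = h;
end

Hbi = [Hf; Hb];              % 2n x T: each column sees past and future context
```

The backward pass needs x_T before it can produce anything. That is why a bidirectional layer is only usable when the full sequence is available.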

inputSize = 12;

numHiddenUnits = 100;

numClasses = 9;

layers = [ ...

sequenceInputLayer(inputSize) % input layer: 12 features per time step

bilstmLayer(numHiddenUnits,'OutputMode','last') % bidirectional LSTM; 'last' outputs only the final time step (sequence-to-label classification)

fullyConnectedLayer(numClasses) % fully connected layer: one output per class

softmaxLayer % softmax: converts the outputs into class probabilities

classificationLayer]; % cross-entropy loss for classification

Now, specify the training options. Specify the solver as 'adam', the gradient threshold as 1, and the maximum number of epochs as 100. To reduce the padding in the mini-batches, choose a mini-batch size of 27. To pad the data to the length of the longest sequence, specify the sequence length as 'longest'. To make sure the data remains sorted by sequence length, specify that the data is never shuffled.

Because the mini-batches are small and the sequences are short, this network is better suited to training on the CPU, so specify 'ExecutionEnvironment' as 'cpu'. To train on a GPU (if available), set 'ExecutionEnvironment' to 'auto' (the default).
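For reference, here is a sketch of what the 'adam' solver does on each iteration. It keeps moving averages of the gradient (m) and of the squared gradient (v), corrects their start-up bias, and scales each step by mHat ./ sqrt(vHat). This is the textbook Adam update, run on a hypothetical toy loss sum(theta.^2).

The hyperparameters are set to what I understand to be the trainingOptions defaults for 'adam': InitialLearnRate 0.001, GradientDecayFactor 0.9, SquaredGradientDecayFactor 0.999 and Epsilon 1e-8. MATLAB's internal implementation may differ in details.

```matlab
% Textbook Adam update with bias correction on a toy loss sum(theta.^2).
% Assumption: hyperparameters chosen to match the documented 'adam' defaults.
lr = 1e-3;           % learning rate
beta1 = 0.9;         % decay rate of the gradient moving average
beta2 = 0.999;       % decay rate of the squared-gradient moving average
epsilon = 1e-8;      % small constant to avoid division by zero

theta = [0.5; -0.3];          % hypothetical parameters
theta0 = theta;               % keep the starting point for comparison
m = zeros(size(theta));       % first moment estimate
v = zeros(size(theta));       % second moment estimate
for k = 1:3
    g = 2 * theta;                          % gradient of sum(theta.^2)
    m = beta1 * m + (1 - beta1) * g;        % moving average of gradients
    v = beta2 * v + (1 - beta2) * g.^2;     % moving average of squared gradients
    mHat = m / (1 - beta1^k);               % bias correction
    vHat = v / (1 - beta2^k);
    theta = theta - lr * mHat ./ (sqrt(vHat) + epsilon);
end
```

Early in training, each step moves every parameter by roughly the learning rate, whatever the gradient's scale. Here that takes theta to about [0.497; -0.297] after three steps.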

maxEpochs = 100; % maximum number of training epochs

miniBatchSize = 27; % mini-batch size

options = trainingOptions('adam', ... % Adam solver: uses moving averages of the gradient and of the squared gradient

'ExecutionEnvironment','cpu', ... % 'cpu', 'gpu', or 'auto'

'GradientThreshold',1, ... % gradient clipping: a parameter gradient whose L2 norm exceeds 1 is rescaled to norm 1

'MaxEpochs',maxEpochs, ... % maximum number of epochs

'MiniBatchSize',miniBatchSize, ... % sequences per iteration, used to estimate the loss gradient and update the weights

'SequenceLength','longest', ... % pad each mini-batch to its longest sequence

'Shuffle','never', ... % keep the length-sorted order

'Verbose',0, ... % 1 (true) prints training progress in the Command Window, 0 (false) does not

'Plots','training-progress'); % plot the training progress

Shuffle usage: the option for data shuffling, specified as one of the following:

'once': shuffle the training and validation data once before training.

'never': do not shuffle the data.

'every-epoch': shuffle the training data before each training epoch, and shuffle the validation data before each network validation. If the mini-batch size does not evenly divide the number of training samples, trainNetwork discards the training data that does not fit into the final complete mini-batch of each epoch. To avoid discarding the same data every epoch, set the Shuffle training option to 'every-epoch'.
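Here is a small sketch of the discarding rule, with hypothetical sizes (10 samples, mini-batch size 4). With 'never', the same trailing samples are dropped every epoch. A fresh permutation per epoch, as with 'every-epoch', lets different samples be dropped each time. In this post's setup 270 / 27 divides evenly, so nothing is dropped and 'never' is safe.

```matlab
% Which samples are left out when the mini-batch size does not divide the data?
% Assumption: 10 samples and a mini-batch size of 4 are hypothetical.
numSamples = 10;
miniBatchSize = 4;
numFull = floor(numSamples / miniBatchSize);        % 2 complete mini-batches
numDropped = numSamples - numFull * miniBatchSize;  % 2 samples dropped per epoch

% 'never': fixed order, so the same samples are dropped every epoch.
orderNever = 1:numSamples;
droppedNever = orderNever(numFull * miniBatchSize + 1:end);   % always [9 10]

% 'every-epoch': a new permutation each epoch, so the dropped samples change.
seen = false(1, numSamples);          % samples used at least once so far
for epoch = 1:20
    order = randperm(numSamples);
    used = order(1:numFull * miniBatchSize);
    seen(used) = true;
end
```

After a few epochs of shuffling, every sample has almost certainly been used, whereas with a fixed order samples 9 and 10 would never be seen.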

(8) Train the LSTM network

Use trainNetwork to train the LSTM network with the specified training options.

net = trainNetwork(XTrain,YTrain,layers,options);

(9) Test the LSTM network

Load the test set and classify the sequences by speaker.

Load the speech test data. XTest is a cell array containing 370 sequences of dimension 12 and varying length. YTest is a categorical vector of the labels "1", "2", ..., "9", corresponding to the nine speakers.

[XTest,YTest] = japaneseVowelsTestData;

XTest(1:3)

The LSTM network net was trained using mini-batches of sequences of similar length. Make sure the test data is organized in the same way by sorting it by sequence length.

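numObservationsTest = numel(XTest); % number of test sequences

sequenceLengthsTest = zeros(1,numObservationsTest); % preallocate the length vector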

for i=1:numObservationsTest

sequence = XTest{i};

sequenceLengthsTest(i) = size(sequence,2);

end

[sequenceLengthsTest,idx] = sort(sequenceLengthsTest);

XTest = XTest(idx);

YTest = YTest(idx);

Classify the test data. To reduce the amount of padding introduced during classification, set the mini-batch size to 27. To apply the same padding as for the training data, specify the sequence length as 'longest'.

miniBatchSize = 27;

YPred = classify(net,XTest, ...

'MiniBatchSize',miniBatchSize, ...

'SequenceLength','longest');

(10) Calculate the classification accuracy of the predictions.

acc = sum(YPred == YTest)./numel(YTest)
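Beyond the single accuracy number, per-class accuracy shows which speakers get confused. Below is a minimal sketch in which small hypothetical numeric label vectors stand in for YPred and YTest. For the real categorical labels, confusionchart(YTest,YPred) gives the full picture.

```matlab
% Overall and per-class accuracy from true vs. predicted labels.
% Assumption: yTrue / yPred are hypothetical stand-ins for YTest / YPred.
yTrue = [1 1 2 2 2 3 3 3 3];
yPred = [1 2 2 2 3 3 3 3 1];

acc = sum(yPred == yTrue) / numel(yTrue);    % 6 correct out of 9

classes = unique(yTrue);                     % [1 2 3]
perClassAcc = zeros(size(classes));
for k = 1:numel(classes)
    mask = (yTrue == classes(k));            % samples whose true class is classes(k)
    perClassAcc(k) = mean(yPred(mask) == classes(k));
end
% perClassAcc = [0.5 0.6667 0.75]
```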

- BERT预训练模型系列总结

- kubernetes的一些命令

- Re16:读论文 ILDC for CJPE: Indian Legal Documents Corpus for Court Judgment Prediction and Explanation

- By building a sequence table - teach you to calculate time complexity and space complexity (including recursion)

- The thread pool method opens the thread -- the difference between submit() and execute()

- Determine whether a tree is a complete binary tree - video explanation!!!

- spark udf 接受并处理 null值.

- Multithreading--the usage of threads and thread pools

- 第2章 常用安全工具

- Neural Ordinary Differential Equations

猜你喜欢

BERT pre-training model series summary

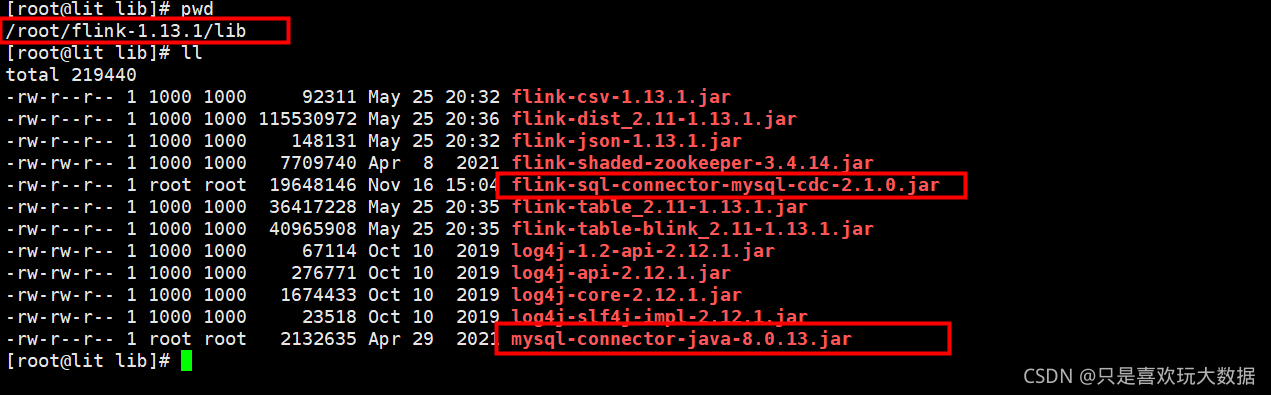

Flink_CDC construction and simple use

图像去噪——Neighbor2Neighbor: Self-Supervised Denoising from Single Noisy Images

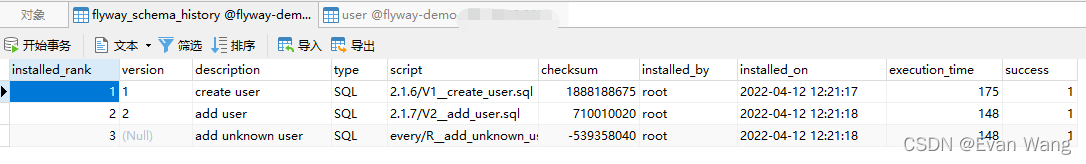

Quick Start Tutorial for flyway

JCL learning

Re19:读论文 Paragraph-level Rationale Extraction through Regularization: A case study on European Court

新一代开源免费的终端工具,太酷了

【HMS core】【FAQ】HMS Toolkit Typical Questions Collection 1

多线程--线程和线程池的用法

Re19: Read the paper Paragraph-level Rationale Extraction through Regularization: A case study on European Court

随机推荐

Always remember: one day you will emerge from the chrysalis

Scrapy爬虫之网站图片爬取

Online target drone prompt.ml

wsl操作

nacos实战项目中的配置

Meikle Studio-Look at the actual combat notes of Hongmeng device development six-wireless networking development

图像去噪——Neighbor2Neighbor: Self-Supervised Denoising from Single Noisy Images

Verilog之数码管译码

In the robot industry professionals, Mr Robot industry current situation?

MFCC转音频,效果不要太逗>V<!

Flask's routing (app.route) detailed

PyQt5 - draw text on window

shell script

The thread pool method opens the thread -- the difference between submit() and execute()

New in GNOME: Warn users when Secure Boot is disabled

Re15: Read the paper LEVEN: A Large-Scale Chinese Legal Event Detection Dataset

105. Construct binary tree from preorder and inorder traversal sequence (video explanation!!)

Beijing suddenly announced big news in the Metaverse

Re19: Read the paper Paragraph-level Rationale Extraction through Regularization: A case study on European Court

(***Key points***) Flink common memory problems and tuning guide (1)