Redis cache and existing problems--cache penetration, cache avalanche, cache breakdown and solutions

2022-08-05 08:07:00 【Wind wind】

Redis cache

A cache is a buffer for data exchange: a temporary store for data that generally offers high read and write performance.

Why use a Redis cache? The I/O speed of an ordinary disk-based database is often too slow for business needs, so Redis (an in-memory database) can be used as cache middleware that holds frequently accessed data from the database. This speeds up data access enough to meet business needs.

Using a Redis cache can reduce backend load, improve read and write efficiency, and shorten access time.

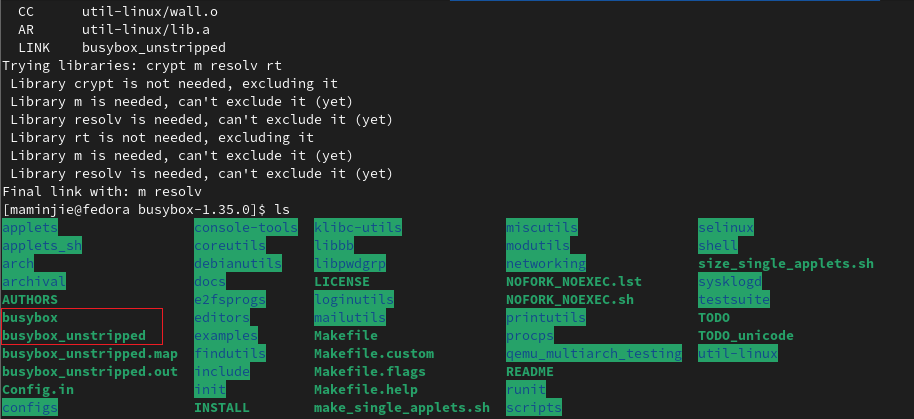

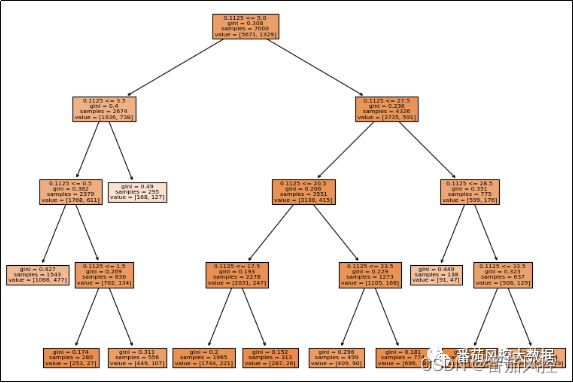

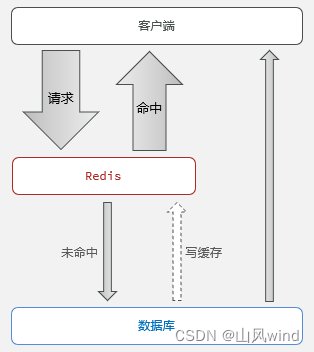

Redis is generally used as a cache in the following way:

When a client requests data, it first queries Redis. If the data is in Redis, it is returned directly. If not, the request goes to the database, the returned data is written to Redis so that the next access can be served from the cache, and the data is returned to the client.
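The read path described above can be sketched as follows. This is a minimal illustration, not a definitive implementation: `TTLCache` is a hypothetical in-memory stand-in for Redis, and `Database` and `get_with_cache` are names invented for this example.

```python
import time


class TTLCache:
    """Hypothetical in-memory stand-in for Redis with per-key expiry."""

    def __init__(self):
        self._data = {}  # key -> (value, expires_at or None)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and time.monotonic() >= expires_at:
            del self._data[key]  # the entry has expired, treat it as a miss
            return None
        return value

    def set(self, key, value, ttl=None):
        expires_at = None if ttl is None else time.monotonic() + ttl
        self._data[key] = (value, expires_at)


class Database:
    """Hypothetical stand-in for the disk-based database; counts its reads."""

    def __init__(self, rows):
        self.rows = rows
        self.reads = 0

    def query(self, key):
        self.reads += 1
        return self.rows.get(key)


def get_with_cache(cache, db, key, ttl=60):
    value = cache.get(key)
    if value is not None:
        return value               # cache hit: return directly
    value = db.query(key)          # cache miss: ask the database
    if value is not None:
        cache.set(key, value, ttl)  # store it for the next access
    return value
```

With this sketch, the second request for the same key is served from the cache and does not reach the database.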

Cache Update Policy

- Low consistency requirements: rely on the memory eviction mechanism built into Redis.

- High consistency requirements:

Read operation: if the cache hits, return directly; if the cache misses, query the database, write the result to the cache, and set a timeout.

Write operation: write to the database first, then update the cache, and make sure the database operation and the cache operation are atomic.
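The write order can be sketched as below. The sketch assumes a single process, where a `threading.Lock` stands in for whatever actually provides atomicity in a real deployment (for example a transaction or a distributed lock); `UserStore` and its plain-dict `db` and `cache` are hypothetical.

```python
import threading


class UserStore:
    """Hypothetical wrapper around a database and a cache (plain dicts here)."""

    def __init__(self):
        self.db = {}
        self.cache = {}
        # Assumption: one in-process lock stands in for a transaction
        # or a distributed lock.
        self._lock = threading.Lock()

    def write(self, key, value):
        with self._lock:
            self.db[key] = value     # 1. write the database first
            self.cache[key] = value  # 2. then update the cache

    def read(self, key):
        with self._lock:
            if key in self.cache:
                return self.cache[key]   # cache hit
            value = self.db.get(key)     # cache miss: query the database
            if value is not None:
                # A real cache would also set a timeout on this entry.
                self.cache[key] = value
            return value
```

Because both steps run under the same lock, a reader in this sketch never observes a database value newer than the cached one.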

Notes on using Redis cache

Cache penetration

Cache penetration means that the data requested by the client exists in neither the cache nor the database, so the cache can never take effect and every such request reaches the database. If such requests are sent continuously, they put heavy pressure on the database.

Solution:

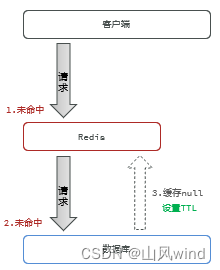

1. Cache empty objects

For data that does not exist, also create a cache entry in Redis with an empty value, and give it a relatively short TTL.

Advantages: Simple implementation and easy maintenance;

Disadvantages: extra memory consumption; possible short-term data inconsistency.
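A minimal sketch of caching empty objects, under stated assumptions: `TTLCache` and `CountingDB` are hypothetical in-memory stand-ins for Redis and the database, `NULL_MARKER` is an invented sentinel, and the TTL values are arbitrary.

```python
import time

NULL_MARKER = ""  # hypothetical sentinel meaning "known not to exist"


class TTLCache:
    """Hypothetical in-memory stand-in for Redis with per-key expiry."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None or time.monotonic() >= entry[1]:
            self._data.pop(key, None)  # drop missing or expired entries
            return None
        return entry[0]

    def set(self, key, value, ttl):
        self._data[key] = (value, time.monotonic() + ttl)


class CountingDB:
    """Hypothetical database stand-in that counts how often it is read."""

    def __init__(self, rows):
        self.rows = rows
        self.reads = 0

    def find(self, key):
        self.reads += 1
        return self.rows.get(key)


def lookup(cache, db, key, ttl=1800, null_ttl=120):
    cached = cache.get(key)
    if cached is not None:
        # Either a real value or the marker for "does not exist".
        return None if cached == NULL_MARKER else cached
    value = db.find(key)
    if value is None:
        cache.set(key, NULL_MARKER, null_ttl)  # cache the miss with a short TTL
        return None
    cache.set(key, value, ttl)
    return value
```

Repeated requests for the same missing key reach the database only once until the short TTL elapses, which is also where the short-term inconsistency comes from: a row created during that window stays invisible until the marker expires.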

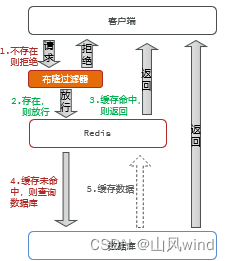

2. Bloom filter

Use a Bloom filter to check whether the requested data exists before the request reaches Redis. If the filter reports that it does not exist, the request is rejected immediately.

Advantages: low memory usage.

Disadvantages: complex implementation; possible false positives (the filter may report that data exists when it does not).
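To make the idea concrete, here is a small Bloom filter sketch. It is an illustration only: the class, its sizes, and the SHA-256 based hashing scheme are assumptions made for this example, not what any particular Redis setup uses.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter; sizes and hashing scheme are illustrative."""

    def __init__(self, size_bits=8192, num_hashes=4):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item):
        # Derive several bit positions by salting one hash function.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        # False means "definitely absent"; True means "possibly present".
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


def handle_request(bloom, key, fetch):
    """Reject keys the filter rules out; otherwise continue to cache/database."""
    if not bloom.might_contain(key):
        return None  # rejected before reaching Redis or the database
    return fetch(key)
```

A key that was added is never rejected. A key that was never added is usually rejected, but can occasionally pass because of a false positive, so the lookup behind the filter must still handle missing data.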

3. Other approaches

- Validate the basic format of the request data;

- Strengthen user permission checks;

- Apply rate limiting to hotspot parameters.

Cache Avalanche

A cache avalanche occurs when a large number of cache keys expire at the same time, or the Redis service goes down, so that a large number of requests reach the database and put it under heavy pressure. The solutions follow from these two causes.

Solution:

- Add a random value to the TTL of different keys;

- Use Redis Cluster to improve service availability;

- Add degradation and rate-limiting policies to the cache service;

- Add multi-level caching to the business.
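The first item, random TTL values, can be sketched in a few lines. The function name and the concrete numbers are assumptions chosen for illustration.

```python
import random


def ttl_with_jitter(base_ttl, max_jitter, rng=random):
    """Return base_ttl plus a random offset in [0, max_jitter] seconds."""
    return base_ttl + rng.randint(0, max_jitter)


# Keys loaded in the same batch get different expiry times, so they do
# not all become invalid at the same moment.
rng = random.Random(42)  # seeded only to make the example repeatable
ttls = {f"product:{i}": ttl_with_jitter(1800, 300, rng) for i in range(100)}
```

Each TTL falls between 1800 and 2100 seconds, which spreads the expirations of the batch over a five-minute window.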

Cache breakdown

Cache breakdown, also called the hot key problem, occurs when a key that is accessed with high concurrency and is expensive to rebuild suddenly expires. The flood of requests for that key then hits the database all at once.

Solution:

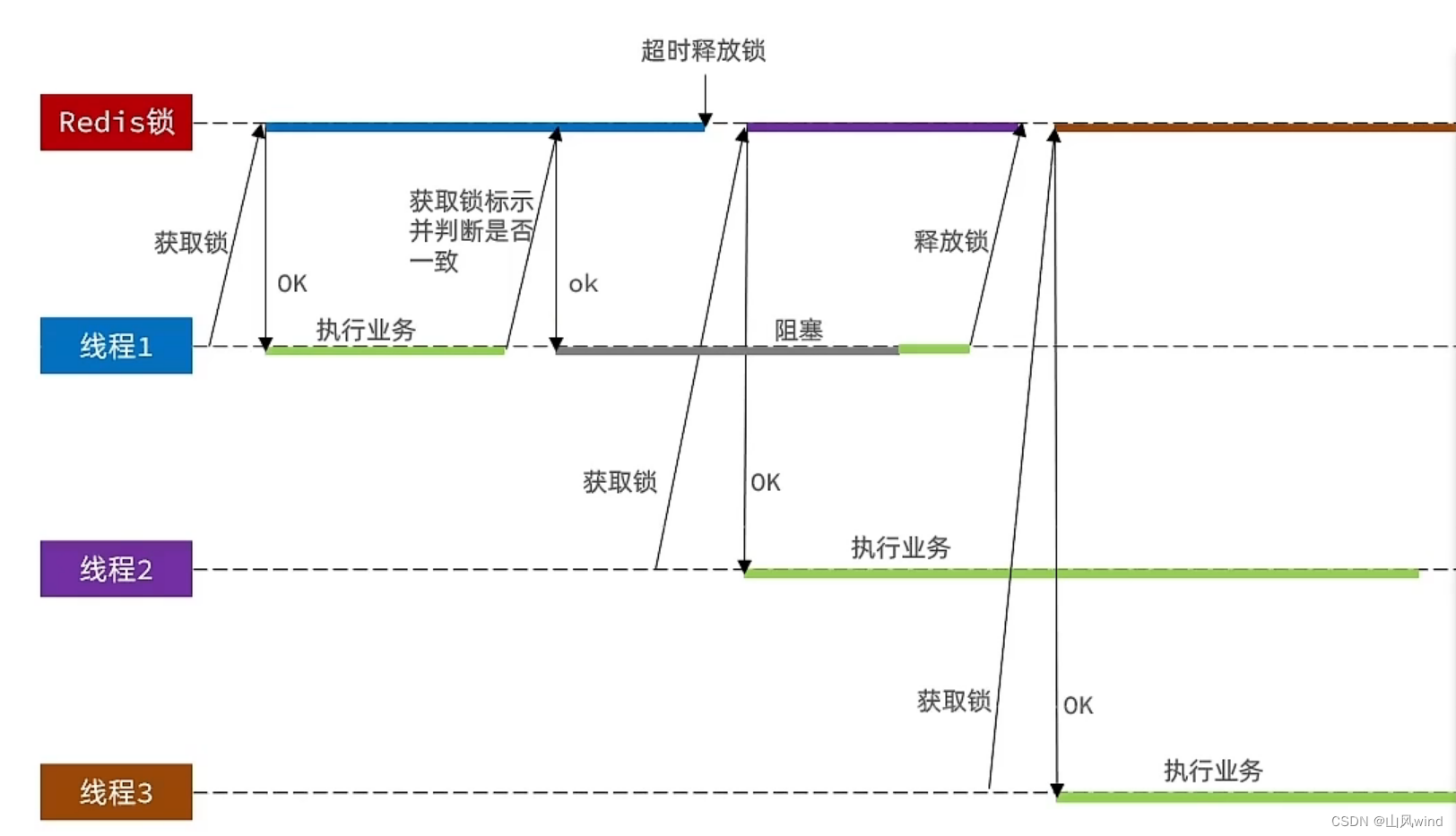

1. Mutual exclusion lock

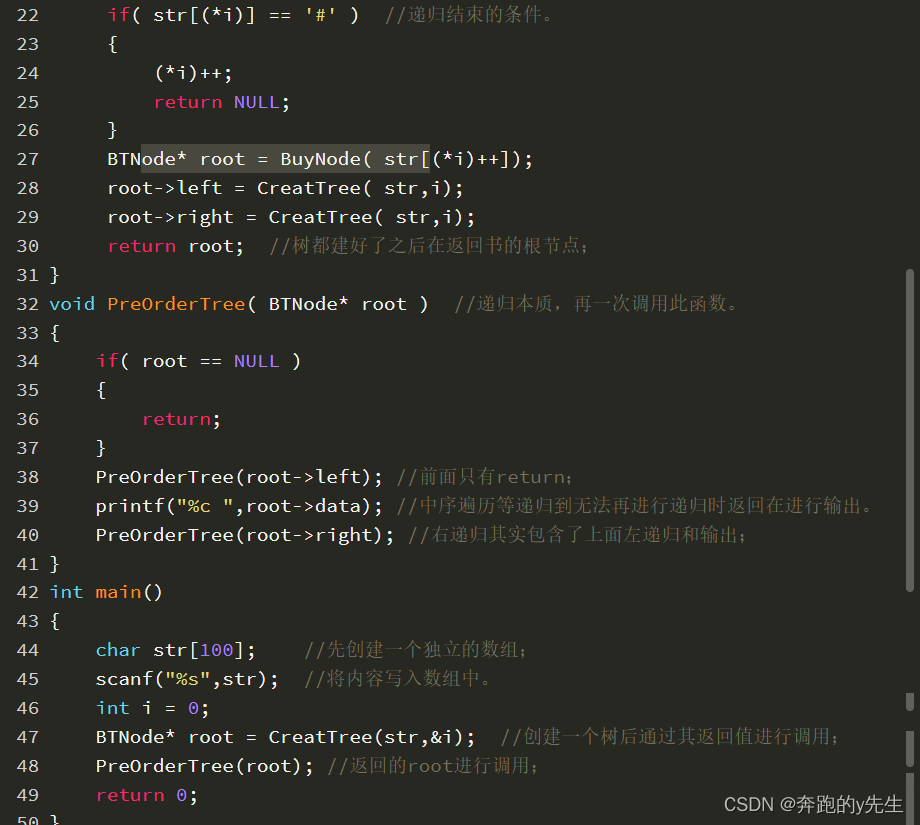

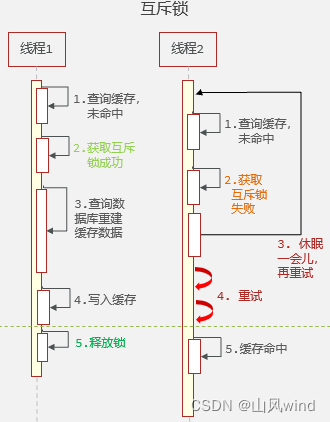

When a hotspot key suddenly expires, many requests hit the database at the same time, which has a huge impact on it. Only one request actually needs to query the database and rebuild the cache; the others can read the cache once it has been rebuilt. This can be achieved with a mutex: lock the cache rebuild so that only one thread performs it while the other threads wait.

Advantages: Simple implementation; no extra memory consumption; good consistency.

Disadvantages: slower performance because threads must wait; risk of deadlock.
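A single-process sketch of the mutex approach follows. It uses `threading.Lock` and a plain dict in place of Redis, and `HotKeyCache` and `loader` are hypothetical names. Across several application instances the lock would have to be a distributed one, for example a lock key taken with the Redis `SET` command and its `NX` option plus an expiry.

```python
import threading


class HotKeyCache:
    """Rebuilds a missing key under a mutex so that only one thread loads it."""

    def __init__(self, loader):
        self._loader = loader  # hypothetical function that queries the database
        self._cache = {}
        self._lock = threading.Lock()

    def get(self, key):
        value = self._cache.get(key)
        if value is not None:
            return value  # cache hit: no locking needed
        with self._lock:  # other threads wait here during the rebuild
            # Check again: another thread may have rebuilt the entry
            # while this one was waiting for the lock.
            value = self._cache.get(key)
            if value is None:
                value = self._loader(key)
                self._cache[key] = value
        return value
```

The `with` block releases the lock even if the loader raises, which addresses one common source of the deadlock risk mentioned above. A distributed lock needs an expiry for the same reason.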

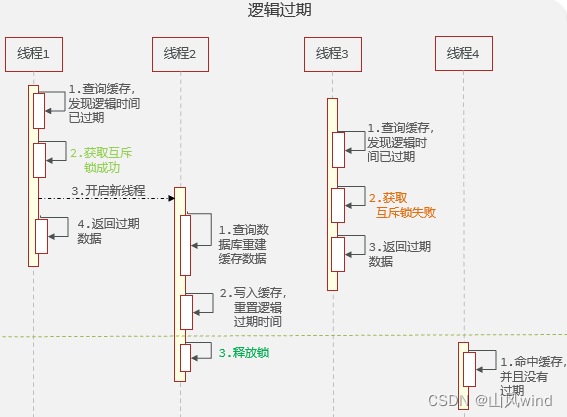

2. Logical expiration: the hotspot key never actually expires; a logical expiration time is stored with it instead.

The cached hotspot key has no real expiry, but it carries a logical expiration time. When the data is queried, the logical expiration time is checked to decide whether the cache needs to be rebuilt. The rebuild is still protected by a mutex so that only one thread performs it, and it runs asynchronously in a separate thread. Other threads do not need to wait; they simply return the old data.

Advantages: threads do not need to wait, so performance is better.

Disadvantages: No consistency guarantee (weak consistency); additional memory consumption; complex implementation.
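The logical expiration scheme can be sketched as below, again in a single process with a plain dict standing in for Redis. `LogicalExpiryCache`, `loader`, and `preload` are hypothetical names, and the sketch assumes that hot keys are loaded into the cache ahead of time.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class LogicalExpiryCache:
    """Entries never expire physically; a stored timestamp marks them stale."""

    def __init__(self, loader, logical_ttl):
        self._loader = loader  # hypothetical function that queries the database
        self._ttl = logical_ttl
        self._entries = {}  # key -> (value, logical_expire_at)
        self._rebuild_lock = threading.Lock()
        self._executor = ThreadPoolExecutor(max_workers=1)

    def preload(self, key):
        value = self._loader(key)
        self._entries[key] = (value, time.monotonic() + self._ttl)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None  # assumption: hot keys are preloaded
        value, expire_at = entry
        if time.monotonic() >= expire_at:
            # Logically expired: the thread that wins the lock schedules an
            # asynchronous rebuild; everyone else skips straight to returning.
            if self._rebuild_lock.acquire(blocking=False):
                self._executor.submit(self._rebuild, key)
        return value  # possibly stale, but returned without waiting

    def _rebuild(self, key):
        try:
            _, expire_at = self._entries[key]
            if time.monotonic() >= expire_at:  # skip if already refreshed
                self.preload(key)
        finally:
            self._rebuild_lock.release()
```

Callers that arrive while a rebuild is in progress receive the old value, which is the weak consistency noted in the disadvantages.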

边栏推荐

猜你喜欢

随机推荐

uniapp时间组件封装年-月-日-时-分-秒

MongoDB 语法大全

控制器-----controller

漂亮MM和普通MM的区别

Adb 授权过程分析

字符串提取 中文、英文、数字

RedisTemplate: 报错template not initialized; call afterPropertiesSet() before using it

Iptables implementation under the network limited (NTP) synchronization time custom port

Chapter 12 贝叶斯网络

【 LeetCode 】 235. A binary search tree in recent common ancestor

DataFrame在指定位置插入行和列

【每日一题】1403. 非递增顺序的最小子序列

关于MP3文件中找不到TAG标签的问题

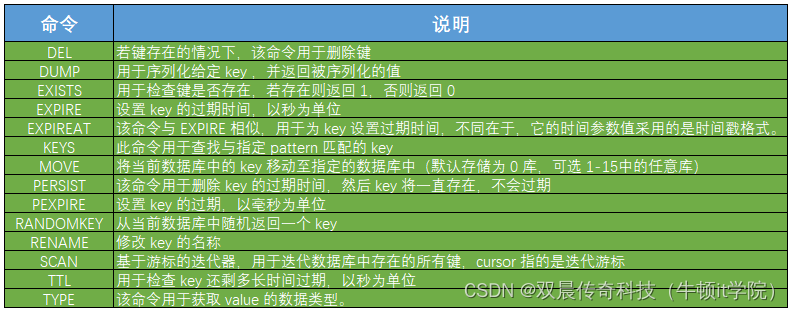

Redis数据库学习

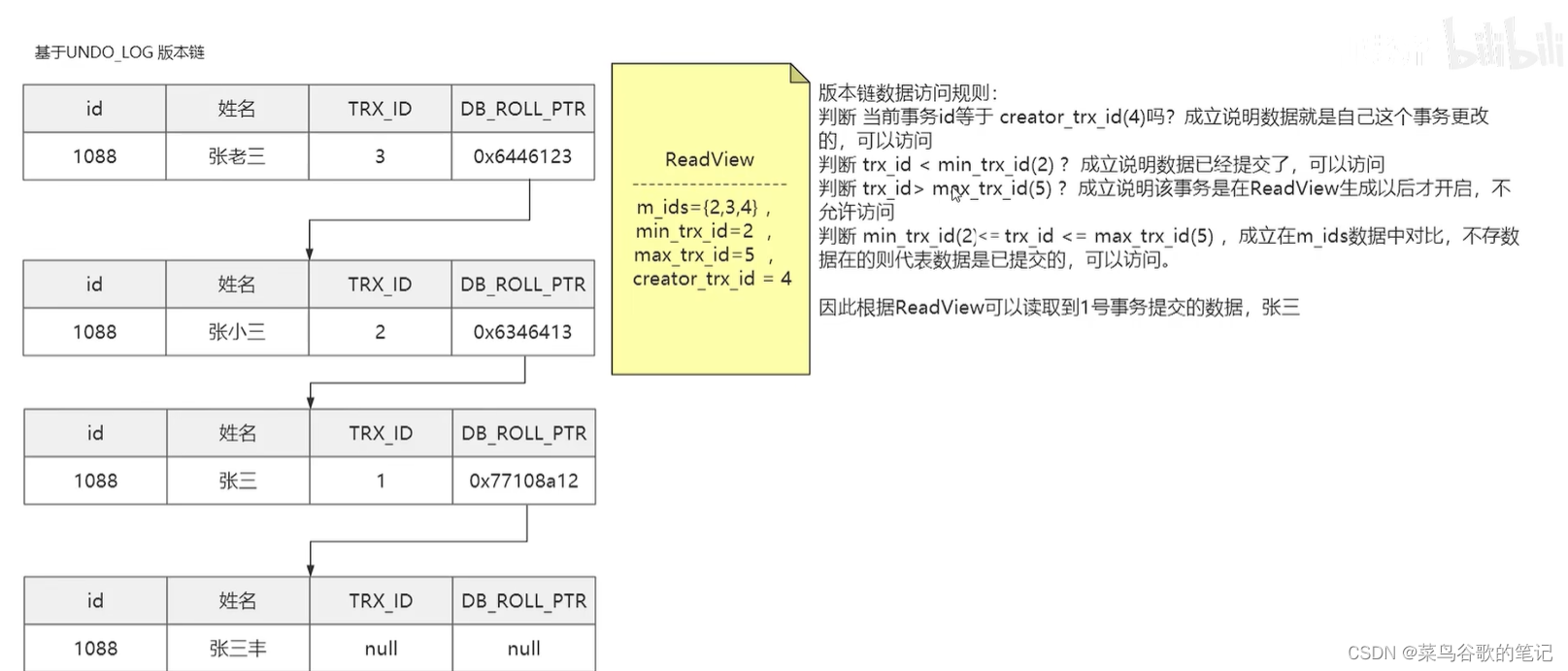

MVCC of Google's Fragmented Notes (Draft)

unity urp 渲染管线顶点偏移的实现

Use of thread pool (combined with Future/Callable)

MobileNetV1架构解析

向美国人学习“如何快乐”

VXE-Table融合多语言