[ml] Li Hongyi III: gradient descent & Classification (Gaussian distribution)

2022-07-02 23:26:00 【Exotic moon】

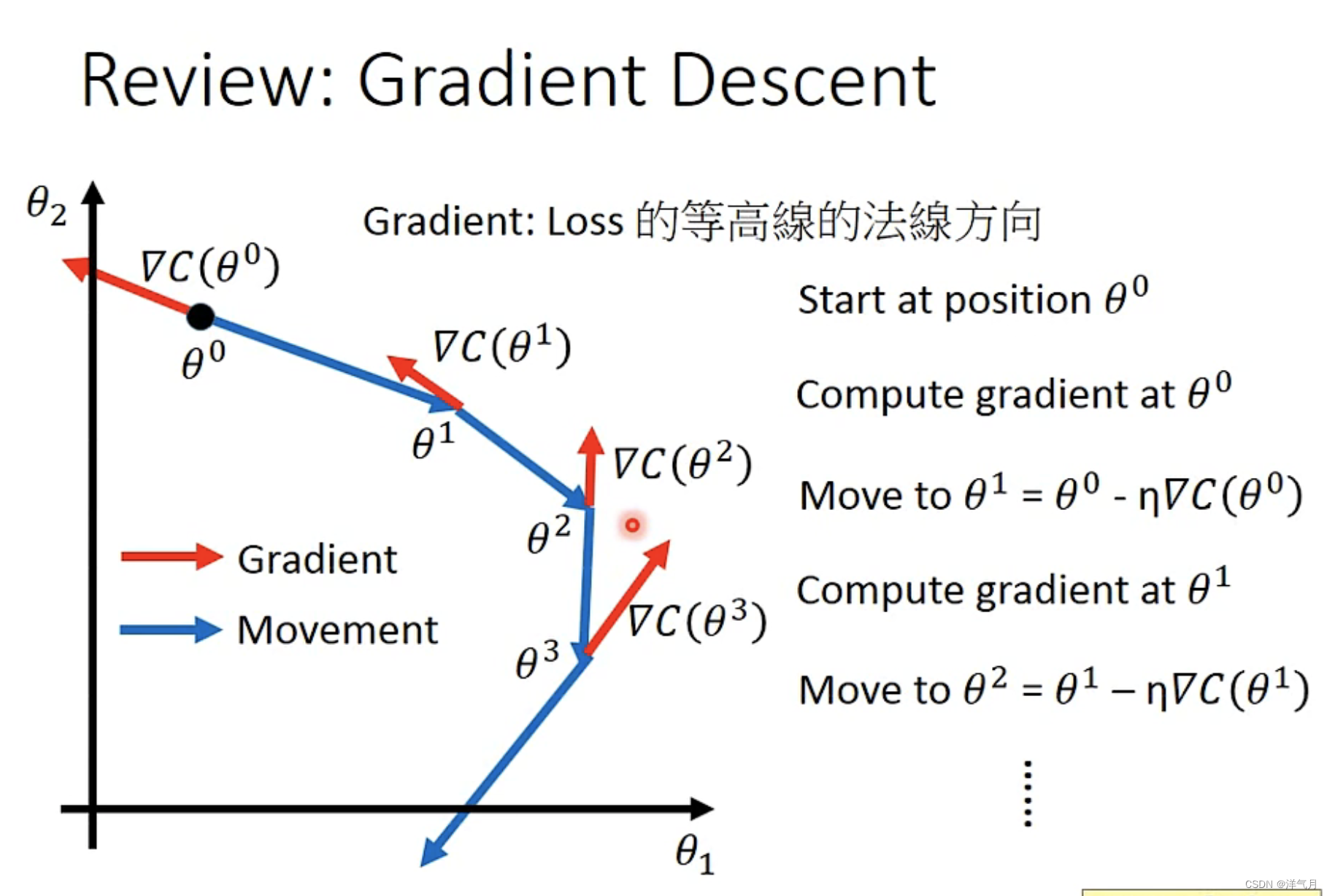

The gradient direction is the normal of the contour line, perpendicular to its tangent:

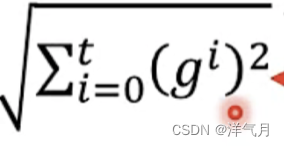

At every step, compute the gradient at the current point and move against it to get a new point; then compute the gradient at the new point and step again, and so on.
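The loop described above can be sketched as follows. This is a minimal illustration, not the lecture's code: the toy loss, the function names `gradient_descent` and `toy_grad`, and the learning rate are all assumptions chosen for the example.

```python
def gradient_descent(grad, w0, lr=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = list(w0)
    for _ in range(steps):
        g = grad(w)                                   # gradient at the current point
        w = [wi - lr * gi for wi, gi in zip(w, g)]    # move to the new point
    return w

# Hypothetical toy loss L(w) = (w0 - 3)^2 + (w1 + 1)^2 with its minimum at (3, -1)
def toy_grad(w):
    return [2 * (w[0] - 3), 2 * (w[1] + 1)]

w_final = gradient_descent(toy_grad, [0.0, 0.0])
print(w_final)
```

With a learning rate that is too large the same loop diverges, which is why the step size needs tuning.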

When doing gradient descent, tune the learning rate carefully.

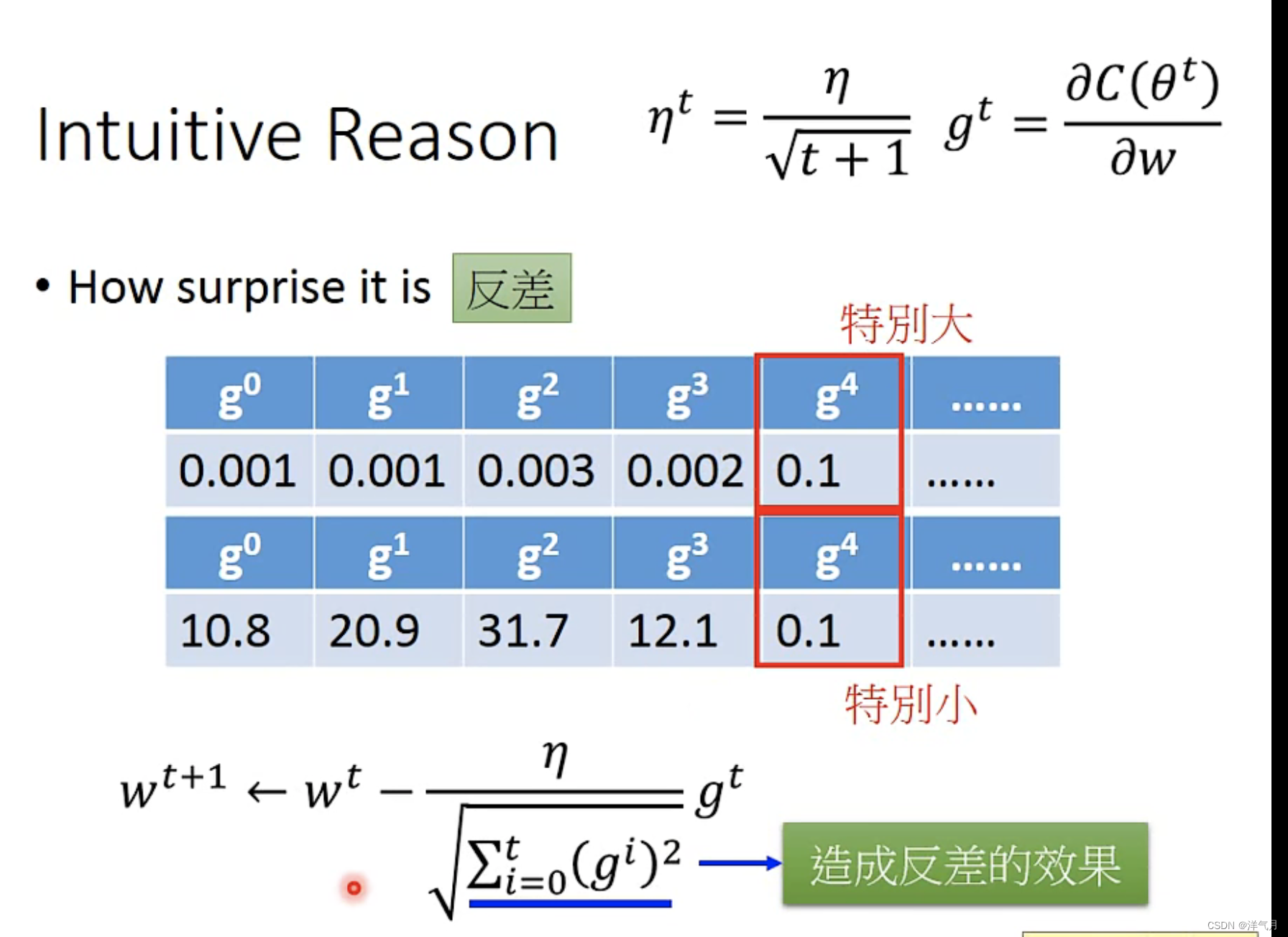

It is better to give every parameter its own learning rate; Adagrad is recommended.

Adagrad: divide the learning rate of each parameter by the root mean square of all its past derivative values.

Explanation:

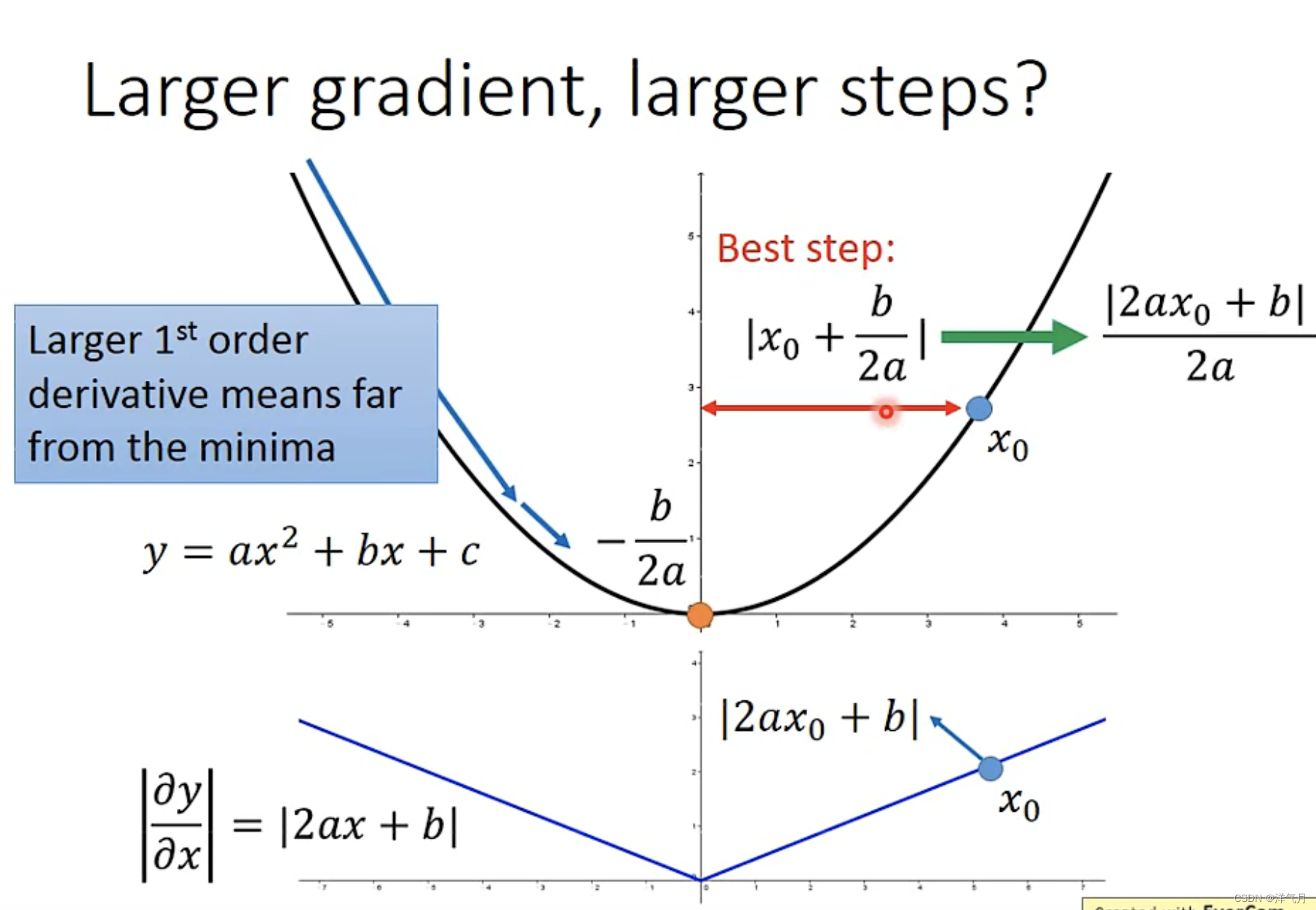

When only one parameter is considered :

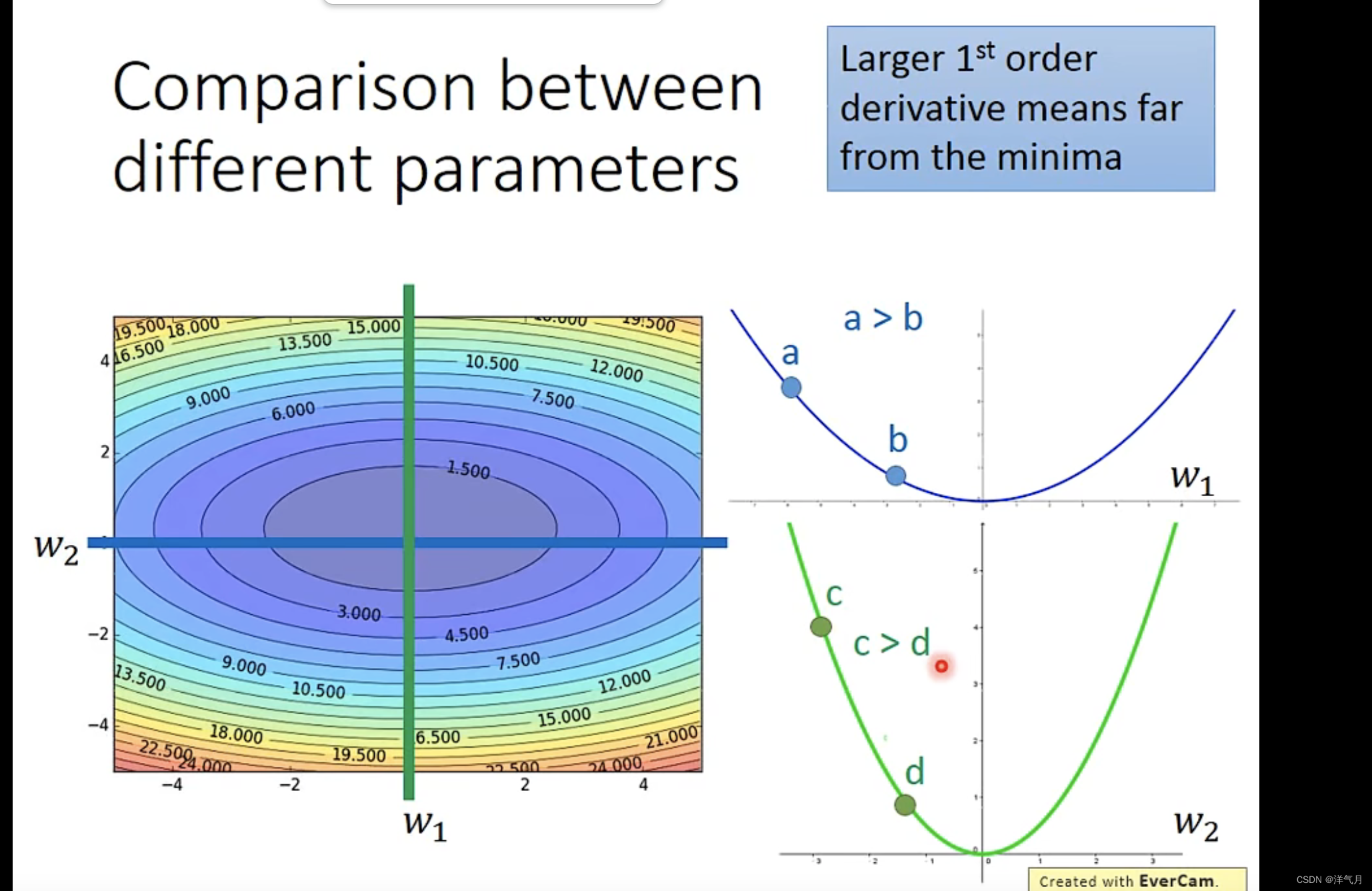

When several parameters are considered, the argument above does not necessarily hold:

Looking only at w1 (blue): a is farther from the lowest point than b, and its derivative is larger.

Looking only at w2 (green): c is farther from the lowest point than d, and its derivative is larger.

But this breaks down when the two are combined: the derivative at a is clearly smaller than the derivative at c, yet a is farther from the lowest point. So across parameters, a larger derivative does not mean a larger distance.

The right approach is to use the first derivative divided by the second derivative to estimate the distance from the lowest point.
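For a single-variable quadratic this ratio gives the distance to the minimum exactly. The sketch below checks that on a hypothetical parabola y = a·x² + b·x + c with a > 0; the function name `best_step` and the coefficients are illustrative assumptions.

```python
def best_step(a, b, x0):
    """Distance from x0 to the minimum of y = a*x^2 + b*x + c (requires a > 0)."""
    first = 2 * a * x0 + b    # first derivative at x0
    second = 2 * a            # second derivative (constant for a quadratic)
    return abs(first) / second

a, b, x0 = 2.0, -8.0, 5.0
x_min = -b / (2 * a)          # analytic minimum of the parabola
distance = abs(x0 - x_min)    # true distance from x0 to the minimum
step = best_step(a, b, x0)
print(step, distance)
```

The second derivative is what makes derivatives of different parameters comparable: a steep bowl has a large first derivative even close to its minimum.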

In the Adagrad update, the learning rate is a constant and the gradient is the first derivative; the root of the sum of squared past gradients stands in for the second derivative, because computing the actual second derivative would be too expensive.
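A minimal Adagrad sketch under these assumptions: each parameter keeps its own running sum of squared gradients, and the step is the constant learning rate times the gradient divided by the square root of that sum. The small `eps` term, the toy loss, and all names are illustrative additions, not taken from the lecture.

```python
import math

def adagrad(grad, w0, lr=1.0, steps=200, eps=1e-8):
    """Adagrad: per-parameter step size lr / sqrt(sum of squared past gradients)."""
    w = list(w0)
    sq_sum = [0.0] * len(w)               # running sum of squared gradients
    for _ in range(steps):
        g = grad(w)
        for i, gi in enumerate(g):
            sq_sum[i] += gi * gi
            # eps (an assumption) avoids division by zero if a gradient is exactly 0
            w[i] -= lr / (math.sqrt(sq_sum[i]) + eps) * gi
    return w

# Hypothetical loss with very different curvature per parameter:
# L(w) = (w0 - 3)^2 + 100 * (w1 + 1)^2
def steep_grad(w):
    return [2 * (w[0] - 3), 200 * (w[1] + 1)]

w_ada = adagrad(steep_grad, [0.0, 0.0])
print(w_ada)
```

Because the gradient is divided by its own history, the steep direction and the flat direction end up with comparable effective step sizes.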

Stochastic gradient descent :

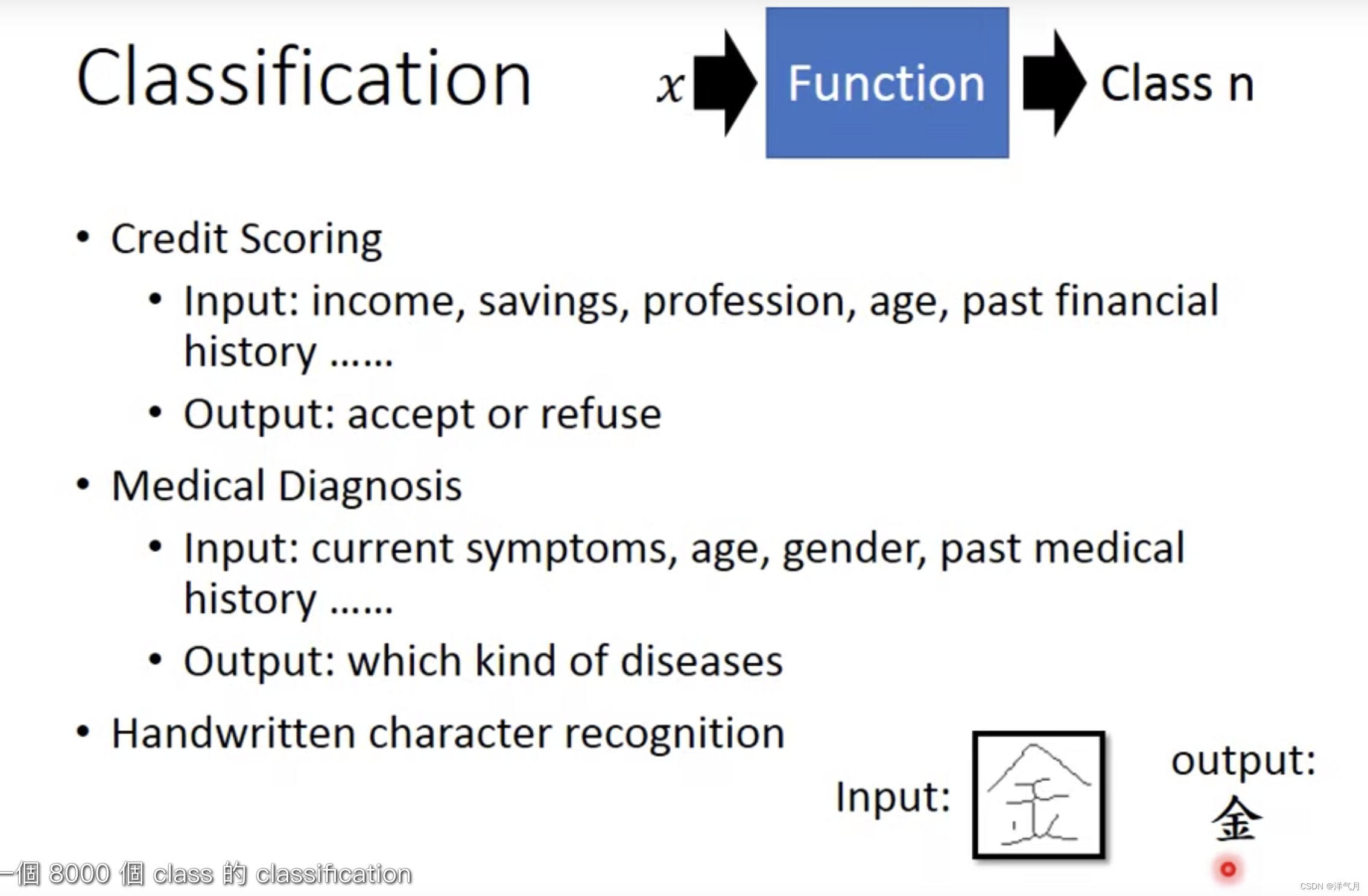
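As a rough sketch of the idea, stochastic gradient descent updates the parameters after looking at one example at a time instead of summing the loss over all examples first. The linear model, the noise-free toy data, and the name `sgd_linear` are assumptions for illustration.

```python
import random

def sgd_linear(xs, ys, lr=0.02, epochs=500, seed=0):
    """Fit y = w*x + b with one parameter update per training example."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)                    # visit the examples in random order
        for i in order:
            err = (w * xs[i] + b) - ys[i]     # error on this single example
            w -= lr * 2 * err * xs[i]         # gradient of err^2 with respect to w
            b -= lr * 2 * err                 # gradient of err^2 with respect to b
    return w, b

# Hypothetical data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = sgd_linear(xs, ys)
print(w, b)
```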

Use a vector to describe an input (a Pokémon).

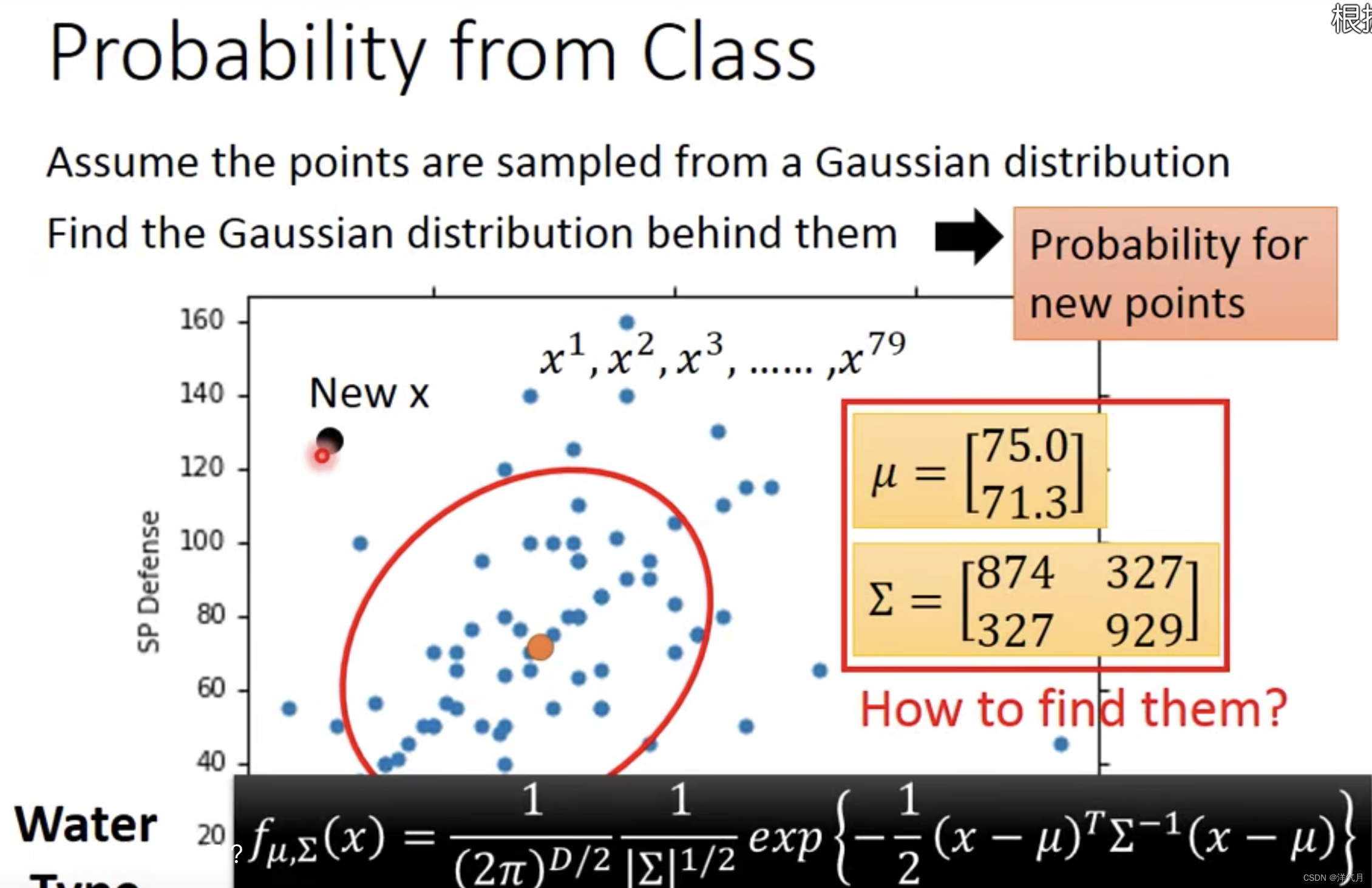

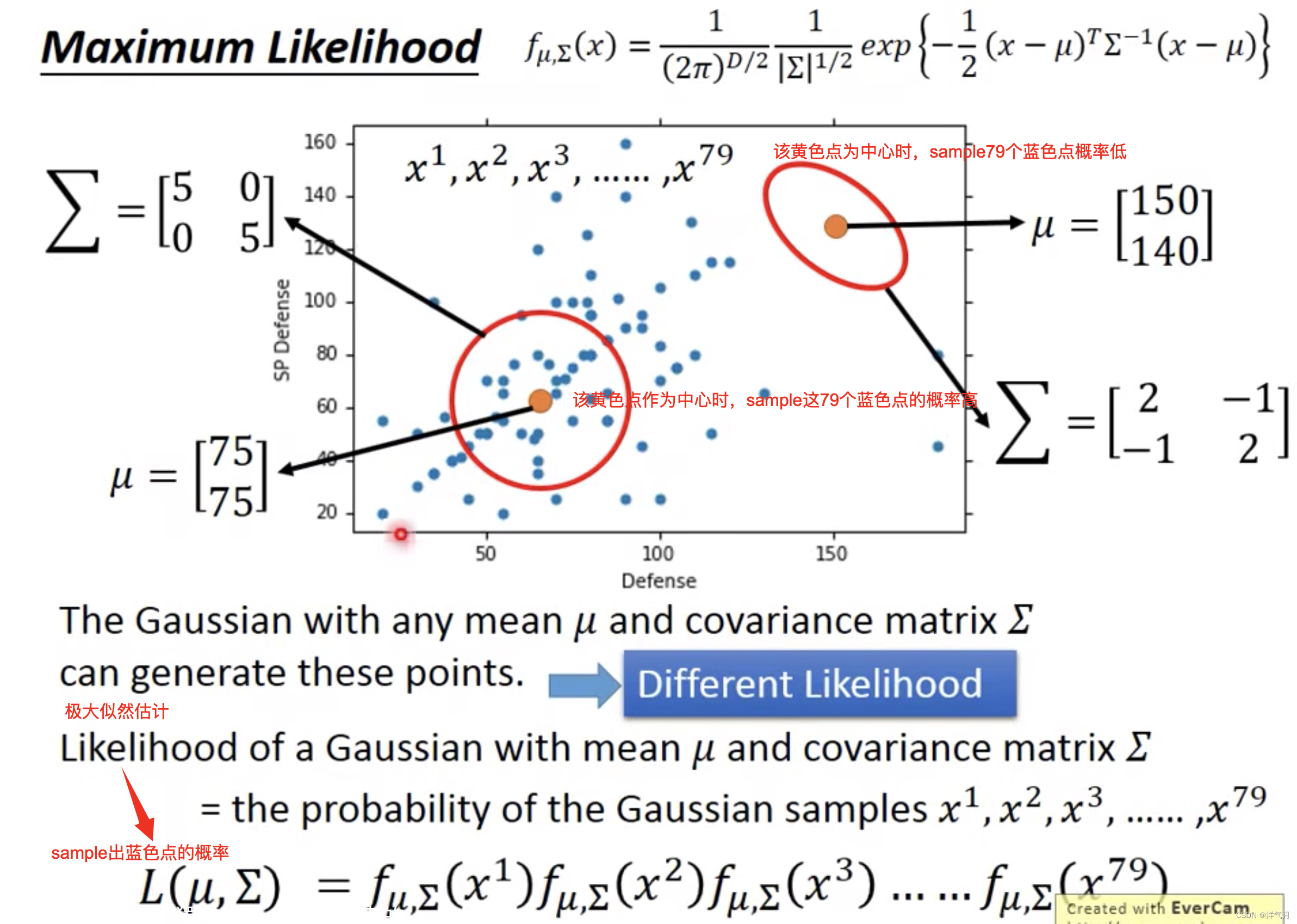

Maximum likelihood estimation: from the observed results, infer which source distribution most probably produced them.

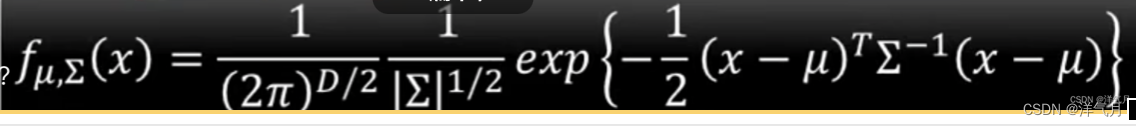

Gaussian distribution: think of it as a function, and assume every point is sampled from this Gaussian distribution.

The closer a point is to the yellow center, the higher the probability of sampling it.

Because the blue points are sampled independently, the probability that the Gaussian centered on the yellow point samples all 79 blue points is the product of their individual probabilities.

To find the maximum likelihood solution, take the average of the points: for a Gaussian (normal) distribution, the sample mean is the mean that maximizes the likelihood.
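A small sketch of this estimate for 2-D points, using only the standard library. The five data points are made up for illustration, and the helper names `fit_gaussian`, `log_density`, and `log_likelihood` are assumptions. The product of probabilities is computed as a sum of logs to avoid underflow.

```python
import math

def fit_gaussian(points):
    """Maximum likelihood mean and covariance for 2-D points."""
    n = len(points)
    mean = [sum(p[k] for p in points) / n for k in range(2)]
    cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n
            for j in range(2)] for i in range(2)]
    return mean, cov

def log_density(x, mean, cov):
    """Log of the 2-D Gaussian density at x."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    # (x - mean)^T cov^{-1} (x - mean), written out with the 2x2 inverse
    quad = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return -math.log(2 * math.pi) - 0.5 * math.log(det) - 0.5 * quad

def log_likelihood(points, mean, cov):
    """Log of the product of the individual densities."""
    return sum(log_density(p, mean, cov) for p in points)

# Hypothetical sample points
points = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
mean, cov = fit_gaussian(points)
ll_best = log_likelihood(points, mean, cov)
ll_shifted = log_likelihood(points, [mean[0] + 1, mean[1]], cov)
print(mean, cov, ll_best, ll_shifted)
```

Moving the mean away from the sample average lowers the likelihood, which is what the comparison at the end shows.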

After finding the Gaussian most likely to have sampled all the blue points, start classifying:

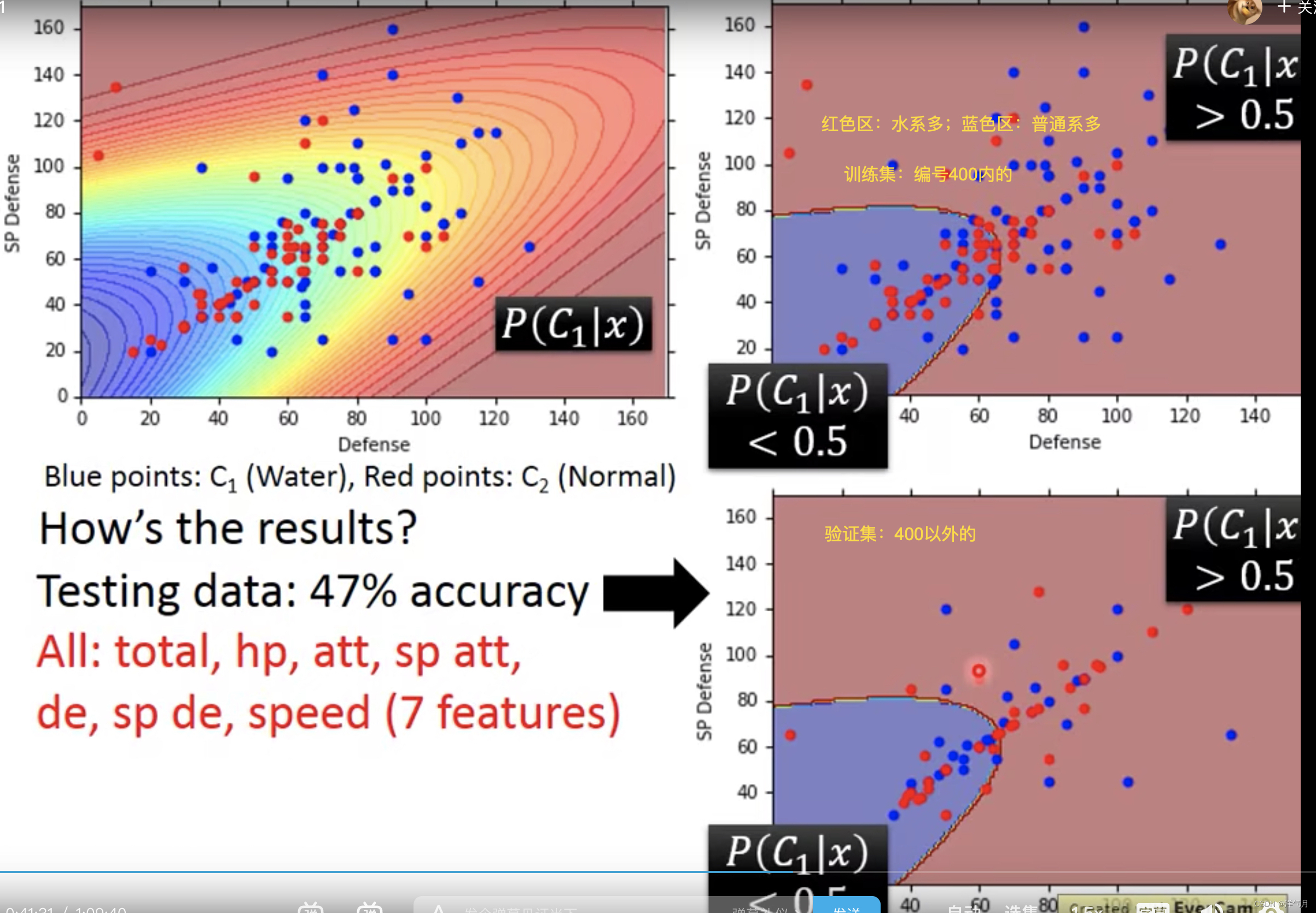

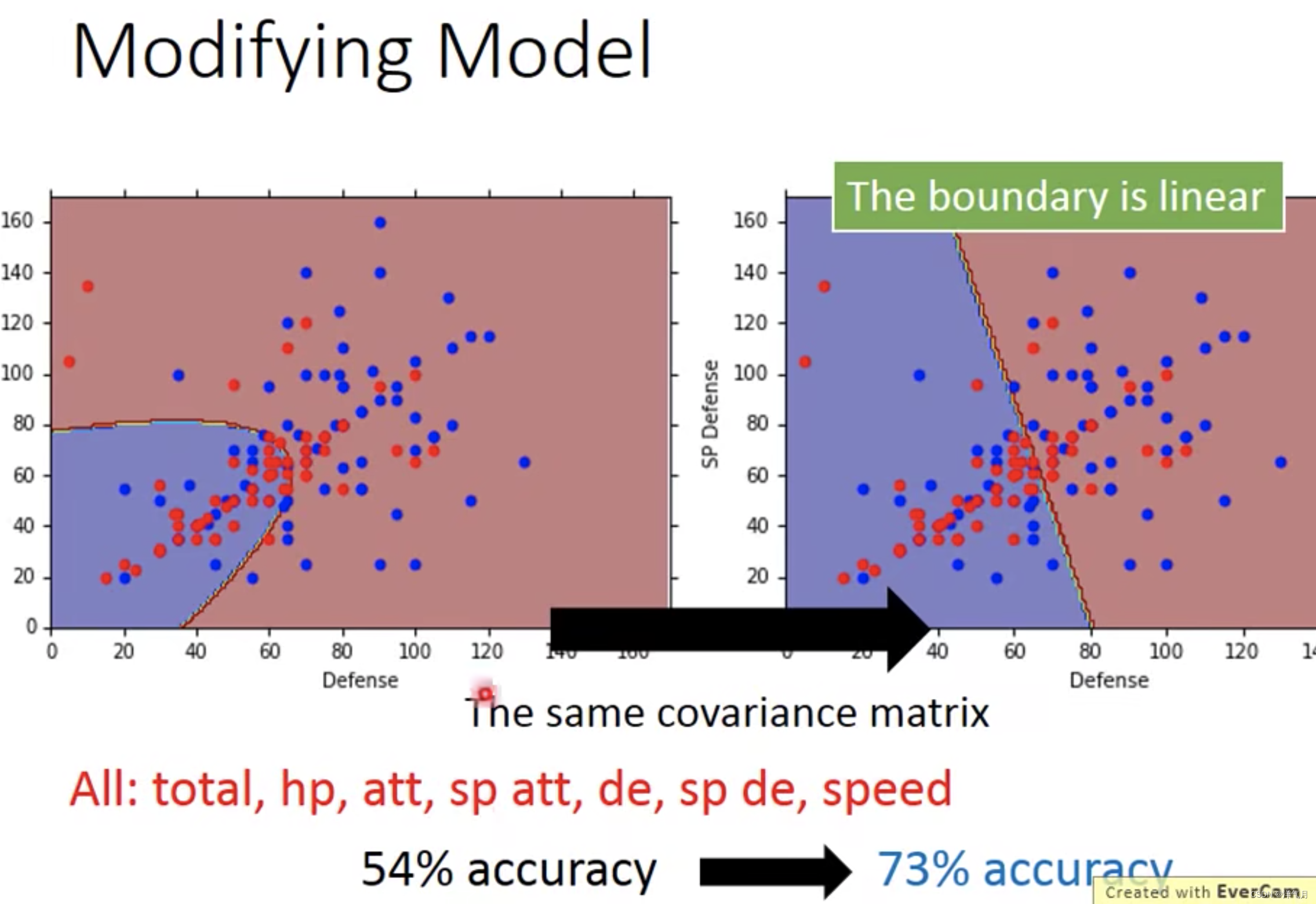

Classifying Water-type versus Normal-type Pokémon this way does not work well: the accuracy is only 47%. What about using more dimensions?

Increase the number of features to 7.

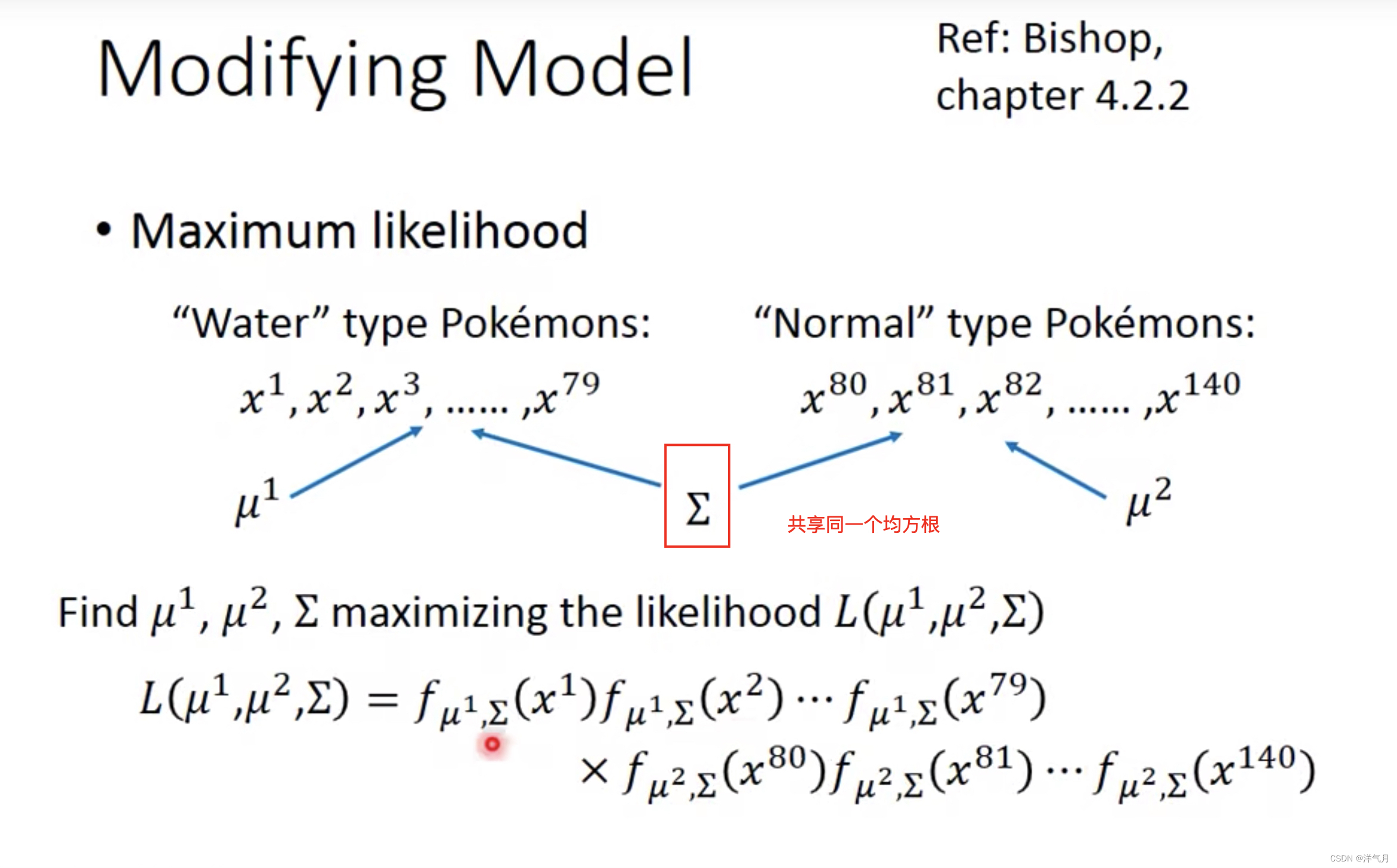

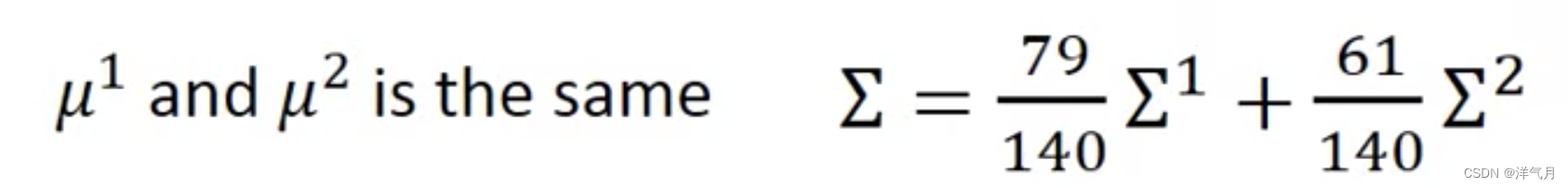

The result with 7 dimensions is still not ideal, so reduce the number of parameters of the two functions.

After the two classes share one covariance matrix, the accuracy improves.
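One common way to share the covariance, sketched here as an assumption about the setup, is to average the two class covariances weighted by their sample counts and then classify with Bayes' rule. The 2-D toy data and every function name below are hypothetical.

```python
import math

def mean_and_cov(points):
    """Per-class maximum likelihood mean and covariance (2-D)."""
    n = len(points)
    mean = [sum(p[k] for p in points) / n for k in range(2)]
    cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n
            for j in range(2)] for i in range(2)]
    return mean, cov

def shared_cov(cov1, n1, cov2, n2):
    """Average of the class covariances, weighted by sample count."""
    return [[(n1 * cov1[i][j] + n2 * cov2[i][j]) / (n1 + n2)
             for j in range(2)] for i in range(2)]

def density(x, mean, cov):
    """2-D Gaussian density at x."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    quad = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(det))

def posterior_class1(x, m1, m2, cov, prior1):
    """P(class 1 | x) by Bayes' rule with one covariance for both classes."""
    p1 = density(x, m1, cov) * prior1
    p2 = density(x, m2, cov) * (1 - prior1)
    return p1 / (p1 + p2)

# Hypothetical training data for two classes
class1 = [(1, 1), (2, 1), (1, 2), (2, 2)]
class2 = [(5, 5), (6, 5), (5, 6), (6, 6), (7, 7)]
m1, c1 = mean_and_cov(class1)
m2, c2 = mean_and_cov(class2)
cov = shared_cov(c1, len(class1), c2, len(class2))
prior1 = len(class1) / (len(class1) + len(class2))
p_near_1 = posterior_class1((1.5, 1.5), m1, m2, cov, prior1)
p_near_2 = posterior_class1((6, 6), m1, m2, cov, prior1)
print(p_near_1, p_near_2)
```

Sharing the matrix means the model stores one covariance instead of two, so there are fewer parameters to overfit.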

Three-step summary:

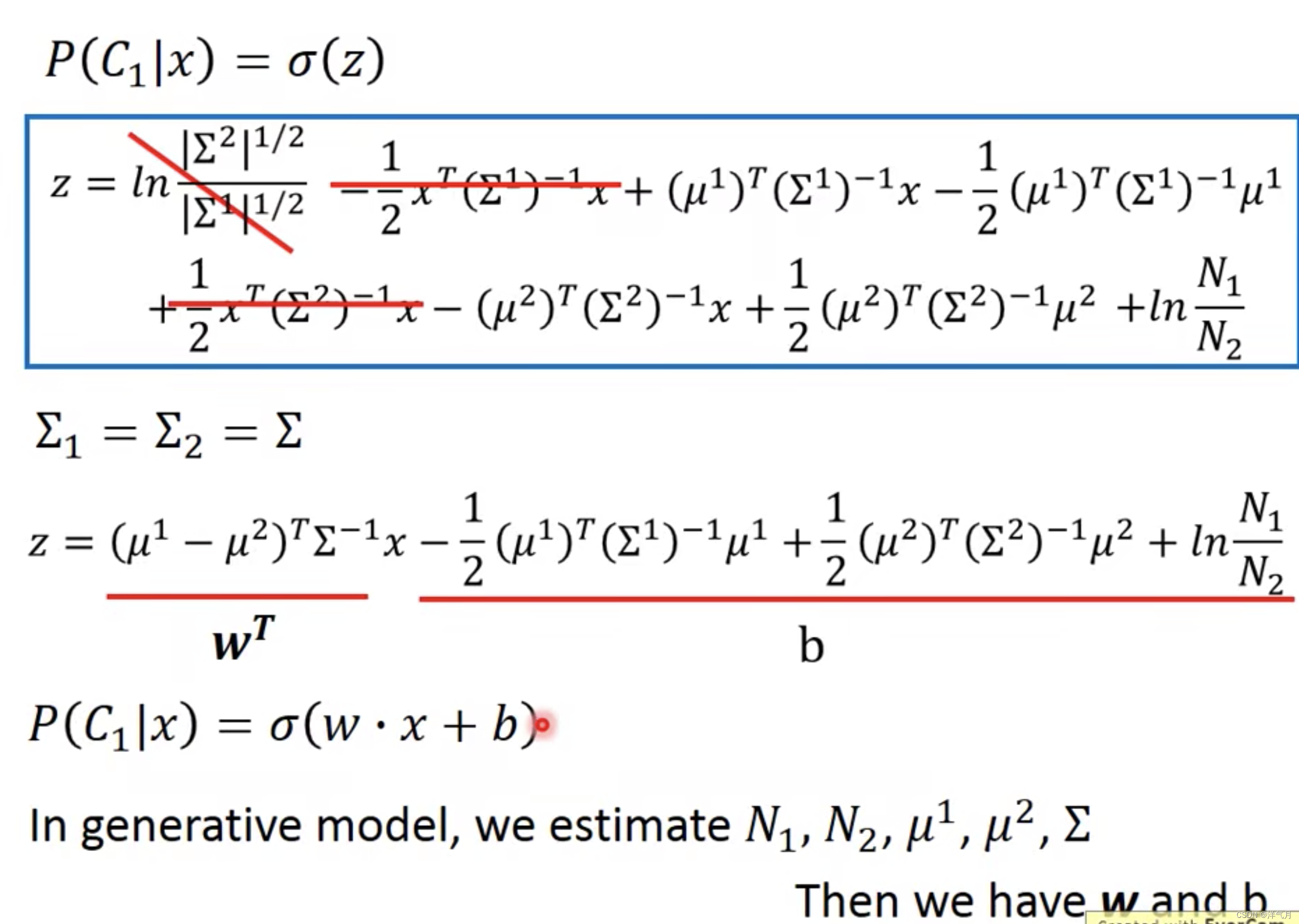

Posterior probability :

Simplifying the formula:
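A standard result, shown here as a sketch in one dimension to keep the algebra short: when both classes use the same variance, the posterior from Bayes' rule equals a sigmoid of a linear function w·x + b. The 1-D restriction, the numeric values, and the function names are assumptions for illustration.

```python
import math

def gaussian_pdf(x, mu, var):
    """1-D Gaussian density."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def posterior_bayes(x, mu1, mu2, var, prior1):
    """P(class 1 | x) computed directly from Bayes' rule."""
    p1 = gaussian_pdf(x, mu1, var) * prior1
    p2 = gaussian_pdf(x, mu2, var) * (1 - prior1)
    return p1 / (p1 + p2)

def posterior_sigmoid(x, mu1, mu2, var, prior1):
    """The same posterior written as sigmoid(w*x + b)."""
    w = (mu1 - mu2) / var
    b = (mu2 ** 2 - mu1 ** 2) / (2 * var) + math.log(prior1 / (1 - prior1))
    return 1 / (1 + math.exp(-(w * x + b)))

# Hypothetical class means, shared variance, and class prior
mu1, mu2, var, prior1 = 1.0, 3.0, 0.5, 0.4
gaps = [abs(posterior_bayes(x, mu1, mu2, var, prior1)
            - posterior_sigmoid(x, mu1, mu2, var, prior1))
        for x in (0.0, 1.0, 2.0, 2.5, 4.0)]
print(max(gaps))
```

The quadratic terms in x cancel because both classes share the variance, which is why the decision boundary becomes linear.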

边栏推荐

- Typical case of data annotation: how does jinglianwen technology help enterprises build data solutions

- Where is the win11 microphone test? Win11 method of testing microphone

- 聊聊内存模型与内存序

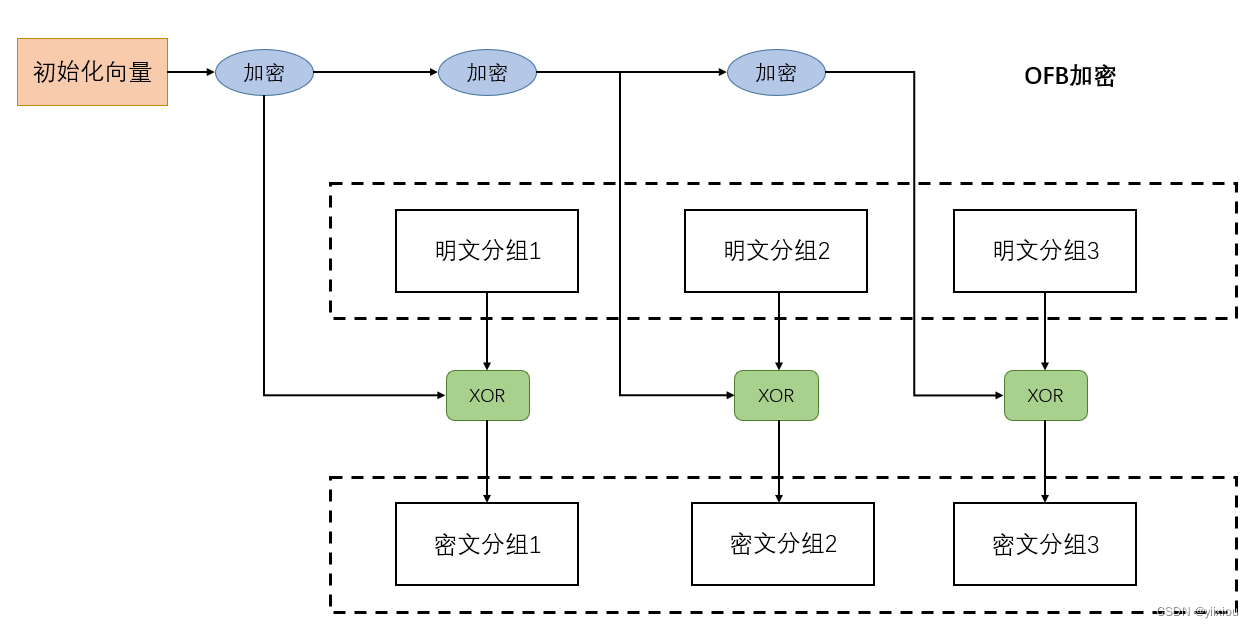

- Cryptographic technology -- key and ssl/tls

- Interface switching based on pyqt5 toolbar button -2

- 非路由组件之头部组件和底部组件书写

- The use of 8255 interface chip and ADC0809

- Loss function~

- 基于FPGA的VGA协议实现

- 【Proteus仿真】51单片机+LCD12864推箱子游戏

猜你喜欢

RuntimeError: no valid convolution algorithms available in CuDNN

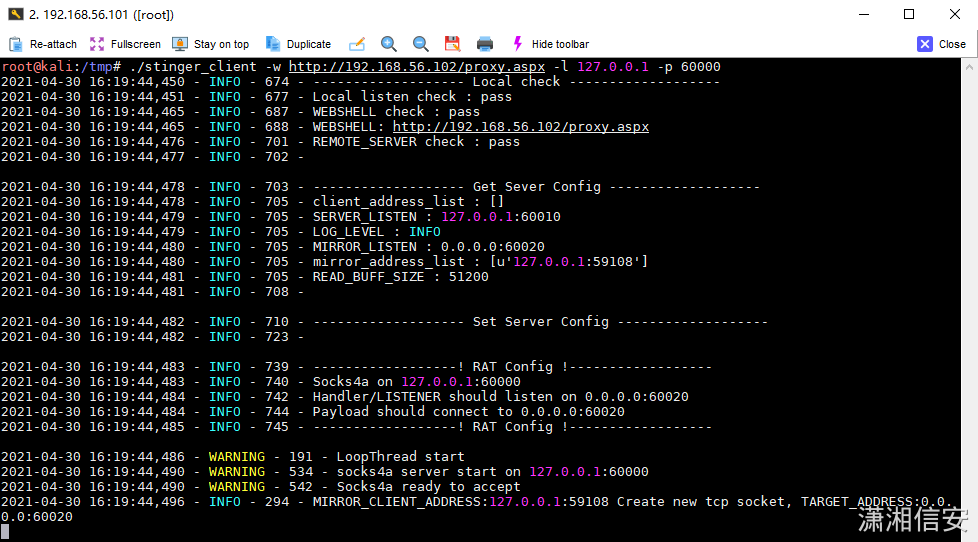

(stinger) use pystinger Socks4 to go online and not go out of the network host

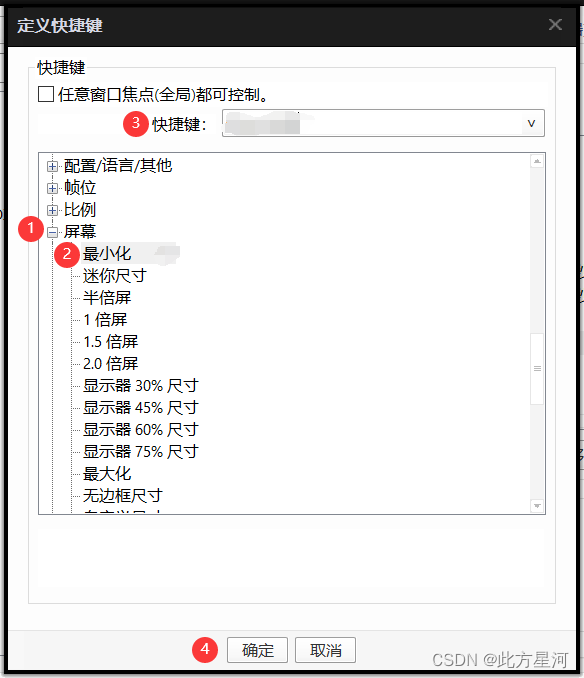

PotPlayer设置最小化的快捷键

公司里只有一个测试是什么体验?听听他们怎么说吧

密码技术---分组密码的模式

Where is the win11 microphone test? Win11 method of testing microphone

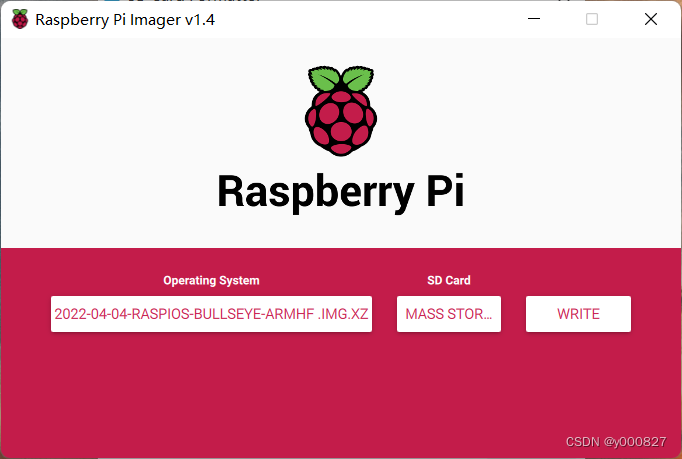

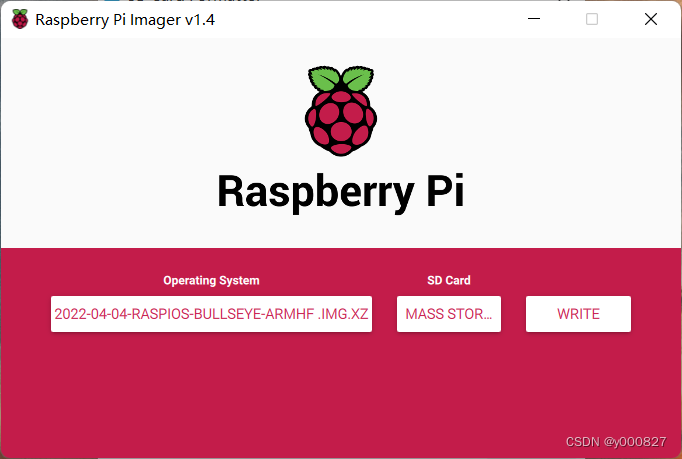

Connexion à distance de la tarte aux framboises en mode visionneur VNC

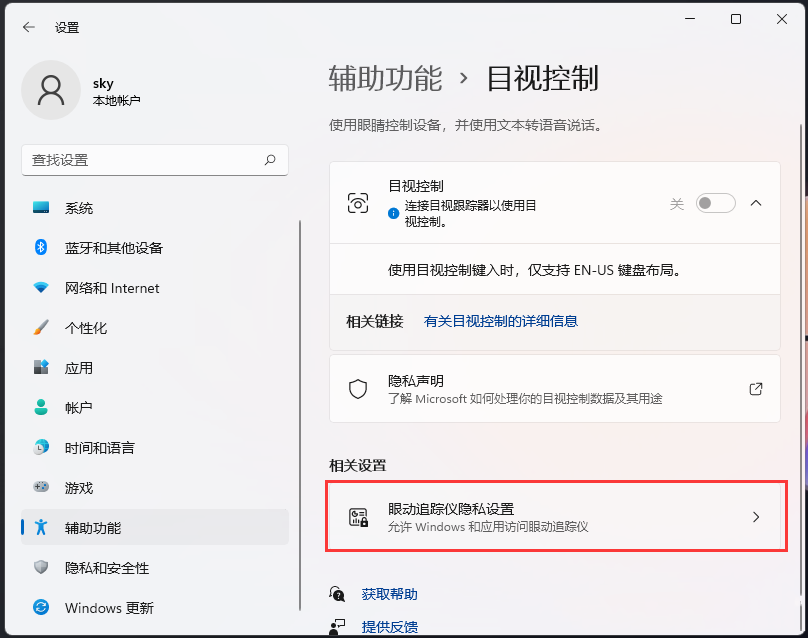

How does win11 turn on visual control? Win11 method of turning on visual control

Pandora IOT development board learning (HAL Library) - Experiment 4 serial port communication experiment (learning notes)

Remote connection of raspberry pie by VNC viewer

随机推荐

RuntimeError: no valid convolution algorithms available in CuDNN

抖音实战~点赞数量弹框

RuntimeError: no valid convolution algorithms available in CuDNN

C MVC creates a view to get rid of the influence of layout

基于FPGA的VGA协议实现

Why does RTOS system use MPU?

C# MVC创建一个视图摆脱布局的影响

Configuration clic droit pour choisir d'ouvrir le fichier avec vs Code

Ping domain name error unknown host, NSLOOKUP / system d-resolve can be resolved normally, how to Ping the public network address?

万物并作,吾以观复|OceanBase 政企行业实践

Temperature measurement and display of 51 single chip microcomputer [simulation]

Tronapi wave field interface - source code without encryption - can be opened twice - interface document attached - packaging based on thinkphp5 - detailed guidance of the author - July 1, 2022 08:43:

@BindsInstance在Dagger2中怎么使用

Brief introduction to common sense of Zhongtai

Quantitative analysis of PSNR, SSIM and RMSE

面试过了,起薪16k

What can I do after buying a domain name?

RecyclerView结合ViewBinding的使用

数字图像处理实验目录

Static file display problem