当前位置:网站首页>Hyper parameter optimization of deep neural networks using Bayesian Optimization

Hyper parameter optimization of deep neural networks using Bayesian Optimization

2022-06-11 19:11:00 【deephub】

In this paper , We will delve into hyperparametric optimization .

For convenience, this article will use Tensorflow It contains Fashion MNIST[1] Data sets . This data set contains... In the training set 60,000 Gray scale image , Include in the test set 10,000 Zhang image . Each picture represents belonging to 10 A piece in one of the categories (“T T-shirt / jacket ”、“ The trousers ”、“ Pullover ” etc. ). So this is a multi class classification problem .

Here is a brief introduction to the steps of preparing a dataset , Because the main content of this paper is the optimization of hyperparameters , So this part is just a brief introduction to the process , In general , The process is as follows :

- Load data .

- Divided into training sets 、 Validation set and test set .

- Change the pixel value from 0–255 Standardize to 0–1 Range .

- One-hot Coding target variable .

#load data

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

# split into train, validation and test sets

train_x, val_x, train_y, val_y = train_test_split(train_images, train_labels, stratify=train_labels, random_state=48, test_size=0.05)

(test_x, test_y)=(test_images, test_labels)

# normalize pixels to range 0-1

train_x = train_x / 255.0

val_x = val_x / 255.0

test_x = test_x / 255.0

#one-hot encode target variable

train_y = to_categorical(train_y)

val_y = to_categorical(val_y)

test_y = to_categorical(test_y)

All our training 、 The shape of the validation and test set is :

print(train_x.shape) #(57000, 28, 28)

print(train_y.shape) #(57000, 10)

print(val_x.shape) #(3000, 28, 28)

print(val_y.shape) #(3000, 10)

print(test_x.shape) #(10000, 28, 28)

print(test_y.shape) #(10000, 10)

Now? , We will use Keras Tuner library [2]: It will help us to easily adjust the super parameters of neural network :

pip install keras-tuner

Keras Tuner need Python 3.6+ and TensorFlow 2.0+

Superparametric adjustment is the basic part of machine learning project . There are two types of superparameters :

- Structural hyperparameters : Define the overall architecture of the model ( For example, the number of hidden cells 、 The layer number )

- Optimizer parameters : Parameters that affect training speed and quality ( For example, learning rate and optimizer type 、 Batch size 、 Number of rounds, etc )

Why do I need a super parameter tuning Library ? We can't try all the possible combinations , See what is the best on the validation set ?

This is definitely not possible because deep neural networks require a lot of time to train , Even for a few days . If you train large models on cloud servers , So every experiment costs a lot of money .

therefore , We need a pruning strategy to limit the search space of super parameters .

keras-tuner Provides Bayesian optimizer . It searches for every possible combination , Instead, the first few are chosen at random . Then according to the performance of these super parameters , Select the next best possible value . Therefore, the choice of each super parameter depends on the previous attempt . Select the next set of superparameters based on the history and evaluate the performance , Until the best combination is found or the maximum number of tests is reached . We can use parameters “max_trials” To configure it .

In addition to the Bayesian optimizer ,keras-tuner Two other common methods are also provided :RandomSearch and Hyperband. We will discuss them at the end of this article .

The next step is to adjust the super parameters of our network applications . We try two network architectures , Standard multilayer perceptron (MLP) And convolution neural network (CNN).

First let's look at the baseline MLP What is the model :

model_mlp = Sequential()

model_mlp.add(Flatten(input_shape=(28, 28)))

model_mlp.add(Dense(350, activation='relu'))

model_mlp.add(Dense(10, activation='softmax'))

print(model_mlp.summary())

model_mlp.compile(optimizer="adam",loss='categorical_crossentropy')

The tuning process requires two main approaches :

hp.Int(): Set the range of the super parameter , Its value is an integer - for example , The number of hidden cells in the dense layer :

model.add(Dense(units = hp.Int('dense-bot', min_value=50, max_value=350, step=50))

hp.Choice(): Provide a set of values for the superparameter —— for example ,Adam or SGD As the best optimizer ?

hp_optimizer=hp.Choice('Optimizer', values=['Adam', 'SGD'])

In our MLP Example , We tested the following super parameters :

- Number of hidden layers :1-3

- The size of the first dense layer :50–350

- The size of the second and third dense layers :50–350

- Dropout:0、0.1、0.2

- Optimizer :SGD(nesterov=True,momentum=0.9) or Adam

- Learning rate :0.1、0.01、0.001

The code is as follows :

model = Sequential()

model.add(Dense(units = hp.Int('dense-bot', min_value=50, max_value=350, step=50), input_shape=(784,), activation='relu'))

for i in range(hp.Int('num_dense_layers', 1, 2)):

model.add(Dense(units=hp.Int('dense_' + str(i), min_value=50, max_value=100, step=25), activation='relu'))

model.add(Dropout(hp.Choice('dropout_'+ str(i), values=[0.0, 0.1, 0.2])))

model.add(Dense(10,activation="softmax"))

hp_optimizer=hp.Choice('Optimizer', values=['Adam', 'SGD'])

if hp_optimizer == 'Adam':

hp_learning_rate = hp.Choice('learning_rate', values=[1e-1, 1e-2, 1e-3])

elif hp_optimizer == 'SGD':

hp_learning_rate = hp.Choice('learning_rate', values=[1e-1, 1e-2, 1e-3])

nesterov=True

momentum=0.9

Here we need to pay attention to the 5 Yes for loop : Let the model determine the depth of the network !

Last , It's running . Please note that we mentioned max_trials Parameters .

model.compile(optimizer = hp_optimizer, loss='categorical_crossentropy', metrics=['accuracy'])

tuner_mlp = kt.tuners.BayesianOptimization(

model,

seed=random_seed,

objective='val_loss',

max_trials=30,

directory='.',

project_name='tuning-mlp')

tuner_mlp.search(train_x, train_y, epochs=50, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

We get the results

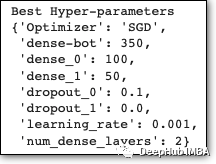

This process runs out of iterations , About need 1 Hours to complete . We can also print the best super parameters of the model using the following command :

best_mlp_hyperparameters = tuner_mlp.get_best_hyperparameters(1)[0]

print("Best Hyper-parameters")

best_mlp_hyperparameters.values

Now we can retrain our model with the optimal hyperparameters :

model_mlp = Sequential()

model_mlp.add(Dense(best_mlp_hyperparameters['dense-bot'], input_shape=(784,), activation='relu'))

for i in range(best_mlp_hyperparameters['num_dense_layers']):

model_mlp.add(Dense(units=best_mlp_hyperparameters['dense_' +str(i)], activation='relu'))

model_mlp.add(Dropout(rate=best_mlp_hyperparameters['dropout_' +str(i)]))

model_mlp.add(Dense(10,activation="softmax"))

model_mlp.compile(optimizer=best_mlp_hyperparameters['Optimizer'], loss='categorical_crossentropy',metrics=['accuracy'])

history_mlp= model_mlp.fit(train_x, train_y, epochs=100, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

perhaps , We can retrain our model with these parameters :

model_mlp=tuner_mlp.hypermodel.build(best_mlp_hyperparameters)

history_mlp=model_mlp.fit(train_x, train_y, epochs=100, batch_size=32,

validation_data=(dev_x, dev_y), callbacks=callback)

Then test the accuracy

mlp_test_loss, mlp_test_acc = model_mlp.evaluate(test_x, test_y, verbose=2)

print('\nTest accuracy:', mlp_test_acc)

# Test accuracy: 0.8823

Compared with the model test accuracy of the baseline :

The baseline MLP Model :86.6 % The best MLP Model :88.2 %. The difference in test accuracy is about 3%!

Let's use the same process , take MLP Change it to CNN, This allows you to test more parameters .

First , This is our baseline model :

model_cnn = Sequential()

model_cnn.add(Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))

model_cnn.add(MaxPooling2D((2, 2)))

model_cnn.add(Flatten())

model_cnn.add(Dense(100, activation='relu'))

model_cnn.add(Dense(10, activation='softmax'))

model_cnn.compile(optimizer="adam", loss='categorical_crossentropy', metrics=['accuracy'])

Baseline model Contains convolution and pooling layers . For tuning , We will test the following :

- Convolution 、MaxPooling and Dropout Layer of “ block ” Count

- In each block Conv Filter size of the layer :32、64

- A valid or identical fill on the transition layer

- The size of the hidden layer of the last frontal layer :25-150, multiply 25

- Optimizer :SGD(nesterov=True, momentum =0.9) or Adam

- Learning rate :0.01、0.001

model = Sequential()

model = Sequential()

model.add(Input(shape=(28, 28, 1)))

for i in range(hp.Int('num_blocks', 1, 2)):

hp_padding=hp.Choice('padding_'+ str(i), values=['valid', 'same'])

hp_filters=hp.Choice('filters_'+ str(i), values=[32, 64])

model.add(Conv2D(hp_filters, (3, 3), padding=hp_padding, activation='relu', kernel_initializer='he_uniform', input_shape=(28, 28, 1)))

model.add(MaxPooling2D((2, 2)))

model.add(Dropout(hp.Choice('dropout_'+ str(i), values=[0.0, 0.1, 0.2])))

model.add(Flatten())

hp_units = hp.Int('units', min_value=25, max_value=150, step=25)

model.add(Dense(hp_units, activation='relu', kernel_initializer='he_uniform'))

model.add(Dense(10,activation="softmax"))

hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3])

hp_optimizer=hp.Choice('Optimizer', values=['Adam', 'SGD'])

if hp_optimizer == 'Adam':

hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3])

elif hp_optimizer == 'SGD':

hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3])

nesterov=True

momentum=0.9

Like before , We let the network determine its depth . The maximum number of iterations is set to 100:

model.compile( optimizer=hp_optimizer,loss='categorical_crossentropy', metrics=['accuracy'])

tuner_cnn = kt.tuners.BayesianOptimization(

model,

objective='val_loss',

max_trials=100,

directory='.',

project_name='tuning-cnn')

give the result as follows :

The resulting hyperparameter

Finally, we train our CNN Model :

model_cnn = Sequential()

model_cnn.add(Input(shape=(28, 28, 1)))

for i in range(best_cnn_hyperparameters['num_blocks']):

hp_padding=best_cnn_hyperparameters['padding_'+ str(i)]

hp_filters=best_cnn_hyperparameters['filters_'+ str(i)]

model_cnn.add(Conv2D(hp_filters, (3, 3), padding=hp_padding, activation='relu', kernel_initializer='he_uniform', input_shape=(28, 28, 1)))

model_cnn.add(MaxPooling2D((2, 2)))

model_cnn.add(Dropout(best_cnn_hyperparameters['dropout_'+ str(i)]))

model_cnn.add(Flatten())

model_cnn.add(Dense(best_cnn_hyperparameters['units'], activation='relu', kernel_initializer='he_uniform'))

model_cnn.add(Dense(10,activation="softmax"))

model_cnn.compile(optimizer=best_cnn_hyperparameters['Optimizer'],

loss='categorical_crossentropy',

metrics=['accuracy'])

print(model_cnn.summary())

history_cnn= model_cnn.fit(train_x, train_y, epochs=50, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

Check the accuracy of the test set :

cnn_test_loss, cnn_test_acc = model_cnn.evaluate(test_x, test_y, verbose=2)

print('\nTest accuracy:', cnn_test_acc)

# Test accuracy: 0.92

With baseline CNN The accuracy of model test is compared with that of :

- The baseline CNN Model :90.8 %

- The best CNN Model :92%

We see an improvement in the performance of the optimization model !

In addition to accuracy , We can also see that the optimization effect is very good , because :

In each case, a non-zero Dropout value , Even if we provide zero Dropout. This is to be expected , because Dropout Is a mechanism to reduce over fitting . Interestingly , first-class CNN Architecture is the standard CNN, The number of filters increases gradually in each layer . This is to be expected , Because with the increase of subsequent layers , Patterns become more complex ( This is also the result that we have been proved when studying various models and papers ) More filters are needed to capture these pattern combinations .

The above example also shows Keras Tuner It's using Tensorflow A very useful tool for optimizing deep neural networks .

We also said above that the Bayesian optimizer is selected in this paper . But there are two other options :

RandomSearch: Randomly select some of them to avoid exploring the entire search space of the hyperparameter . however , It is not guaranteed that the best superparameters will be found

Hyperband: Choose a random combination of some super parameters , And only use them to train a few models epoch. Then use these super parameters to train the model , Until all is used up epoch And choose the best .

Last dataset address and keras_tuner The documentation for is as follows

https://avoid.overfit.cn/post/c3f904fab4f84914b8a1935f8670582f

author :Nikos Kafritsas

边栏推荐

- PyMySQL利用游标操作数据库方法封装!!!

- cf:C. Restoring the Duration of Tasks【找规律】

- 求数据库设计毕业信息管理

- BottomSheetDialog 使用详解,设置圆角、固定高度、默认全屏等

- Cf:f. shifting string [string rearrangement in specified order + grouping into rings (cutting connected components) + minimum same moving cycle of each group + minimum common multiple]

- Financial bank_ Introduction to collection system

- Cf:g. count the trains [sortedset + bisect + simulation maintaining strict decreasing sequence]

- 让我们的坦克欢快的动起来吧

- collect. stream(). Use of the collect() method

- 今天睡眠质量记录60分

猜你喜欢

Review of software testing technology

Replace the backbone of target detection (take the fast RCNN as an example)

![leetcode:926. Flip the string to monotonically increasing [prefix and + analog analysis]](/img/e8/a43b397155c6957b142dd0feb59885.png)

leetcode:926. Flip the string to monotonically increasing [prefix and + analog analysis]

The Economist: WTO MC12 restarts the digital economy and becomes the core engine of global economic recovery and growth

What is the workflow of dry goods MapReduce?

The US inflation rate reached a 41 year high of 8.6%! High inflation fever? The stock and encryption markets fell first!

Cf:d. black and white stripe

cf:B. Array Decrements【模拟】

【Multisim仿真】利用运算放大器产生方波、三角波发生器

记录一下phpstudy配置php8.0和php8.1扩展redis

随机推荐

01. Telecommunications_ Field business experience

Visual slam lecture notes-10-2

MySQL in-depth and complete learning - stage 1 - overview of learning

開發中必備的文件的上傳與下載

cf:C. Restoring the Duration of Tasks【找规律】

KMP!你值得拥有!!! 直接运行直接跑!

动态爆炸效果

5g communication test manual based on Ti am5728 + artix-7 FPGA development board (dsp+arm)

Internet_ Business Analysis Overview

[Multisim Simulation] using operational amplifier to generate sawtooth wave

Longest strictly increasing subsequence

Judge whether it is a balanced binary tree

Expandable type of system

一款自适应的聊天网站-匿名在线聊天室PHP源码

《经济学人》:WTO MC12重启 数字经济成为全球经济复苏和增长的核心引擎

Crop disease detection using image processing technology and convolutional neural network (CNN)

Project management of workflow and business service on SAP BTP

美国通胀率8.6%创41年历史新高!通胀高烧不退?股票、加密市场先跌为敬!

手把手教你学会FIRST集和FOLLOW集!!!!吐血收藏!!保姆级讲解!!!

High concurrency architecture design