当前位置:网站首页>Hands on deep learning (38) -- realize RNN from scratch

Hands on deep learning (38) -- realize RNN from scratch

2022-07-04 09:37:00 【Stay a little star】

List of articles

【 explain 】: Here is the data of two books , They are all the data mentioned in Li Mu's God book . Because the code encapsulated by Mu Shen is loaded 《Time machine》, So I'm going to cover the first two Blog The code of is copied here again , Used to load 《 Star Wars: 》 Data sets . The following content is a reproduction of Mu Shen's curriculum and code . I will mark the knowledge points in yellow .

%matplotlib inline

import math

import torch

from torch import nn

from torch.nn import functional as F

from d2l import torch as d2l

import re

import collections

import random

1. Data loading and conversion

1.1 load Time machine This book

# Load data

batch_size,num_steps = 32,35

train_iter,vocab = d2l.load_data_time_machine(batch_size,num_steps)

# Encode each marked number independently

# The index for 0 and 2 The unique heat code of is as follows

F.one_hot(torch.tensor([0,2]),len(vocab))

tensor([[1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0],

[0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0]])

The small batch shape we sample each time is ( Batch size , Time steps ).one_hot Coding converts such small batches into three-dimensional tensors , The last dimension is equal to the size of the vocabulary (len(vocab)). We often replace the input dimension , In order to obtain the shape ( Time steps , Batch size , Vocabulary size ) Output . This will make it easier for us to pass through the outermost dimension , Update the hidden status of small batches step by step .( Imagine the data on each time step when we train later , The transposed data is convenient for us python The read , It has certain computational acceleration effect )

X = torch.arange(10).reshape((2, 5))

F.one_hot(X.T, 28).shape

torch.Size([5, 2, 28])

1.2 Read 《 Star Wars: 》 Book data set

Manually modify the previous code a little . For convenience of viewing, there is no encapsulation

def read_book(dir):

""" The pretreatment operation here is violent , Eliminate punctuation marks and special characters , There's only... Left 26 Letters and spaces """

with open(dir,'r') as f:

lines = f.readlines()

return [re.sub('[^A-Za-z]+',' ',line).strip().lower() for line in lines]

def tokenize(lines,token='word'):

if token=="word":

return [line.split() for line in lines]

elif token =="char":

return [list(line) for line in lines]

else:

print("Error: Unknown token type :"+token)

lines = read_book('./data/RNN/36-0.txt')

def count_corpus(tokens):

""" Count the frequency of tags : there tokens yes 1D List or 2D list """

if len(tokens) ==0 or isinstance(tokens[0],list):

# take tokens Flatten into a list filled with tags

tokens = [token for line in tokens for token in line]

return collections.Counter(tokens)

class Vocab:

""" Build a text vocabulary """

def __init__(self,tokens=None,min_freq=0,reserved_tokens=None):

if tokens is None:

tokens=[]

if reserved_tokens is None:

reserved_tokens = []

# Sort according to frequency

counter = count_corpus(tokens)

self.token_freqs = sorted(counter.items(),key=lambda x:x[1],reverse=True)

# Index of unknown tag is 0

self.unk , uniq_tokens = 0, ['<unk>']+reserved_tokens

uniq_tokens += [token for token,freq in self.token_freqs

if freq >= min_freq and token not in uniq_tokens]

self.idx_to_token,self.token_to_idx = [],dict() # Find the tag according to the index and find the index according to the tag

for token in uniq_tokens:

self.idx_to_token.append(token)

self.token_to_idx[token] = len(self.idx_to_token)-1

def __len__(self):

return len(self.idx_to_token)

def __getitem__(self,tokens):

""" Switch to one by one item For the output """

if not isinstance(tokens,(list,tuple)):

return self.token_to_idx.get(tokens,self.unk)

return [self.__getitem__(token) for token in tokens]

def to_tokens(self,indices):

""" If it is a single index Direct output , If it is list perhaps tuple Iterative output """

if not isinstance(indices,(list,tuple)):

return self.idx_to_token[indices]

return [self.idx_to_token[index] for index in indices]

def load_corpus_book(max_tokens=-1):

""" return book Tag index list and glossary in dataset """

lines = read_book('./data/RNN/36-0.txt')

tokens = tokenize(lines,'char')

vocab = Vocab(tokens)

# Because every text line in the dataset , Not necessarily a sentence or paragraph

# So flatten all text lines into a list

corpus = [vocab[token] for line in tokens for token in line]

if max_tokens >0:

corpus = corpus[:max_tokens]

return corpus,vocab

def seq_data_iter_random(corpus,batch_size,num_steps):

""" Use random sampling to generate a small batch of quantum sequences """

# The sequence is partitioned from a random offset , The random range includes `num_steps - 1`

corpus = corpus[random.randint(0, num_steps - 1):]

# subtract 1, Because we need to consider labels

num_subseqs = (len(corpus) - 1) // num_steps

# The length is `num_steps` The starting index of the subsequence of

initial_indices = list(range(0, num_subseqs * num_steps, num_steps))

# In the iterative process of random sampling ,

# From two adjacent 、 Random 、 Subsequences in a small batch are not necessarily adjacent to the original sequence

random.shuffle(initial_indices)

def data(pos):

# Return from `pos` The length at the beginning of the position is `num_steps` Sequence

return corpus[pos:pos + num_steps]

num_batches = num_subseqs // batch_size

for i in range(0, batch_size * num_batches, batch_size):

# ad locum ,`initial_indices` Random start index containing subsequences

initial_indices_per_batch = initial_indices[i:i + batch_size]

X = [data(j) for j in initial_indices_per_batch]

Y = [data(j + 1) for j in initial_indices_per_batch]

yield torch.tensor(X), torch.tensor(Y)

def seq_data_iter_sequential(corpus,batch_size,num_steps):

""" Use sequential partitioning to generate a small batch of quantum sequences """

# Divide the sequence from the random offset

offset = random.randint(0,num_steps)

num_tokens = ((len(corpus)- offset -1)//batch_size)*batch_size

Xs = torch.tensor(corpus[offset:offset+num_tokens])

Ys = torch.tensor(corpus[offset+1:offset+num_tokens+1])

Xs,Ys = Xs.reshape(batch_size,-1),Ys.reshape(batch_size,-1)

num_batches = Xs.shape[1]//num_steps

for i in range(0,num_steps*num_batches,num_steps):

X = Xs[:,i:i+num_steps]

Y = Ys[:,i:i+num_steps]

yield X,Y

class SeqDataLoader: #@save

""" An iterator that loads sequence data ."""

def __init__(self, batch_size, num_steps, use_random_iter, max_tokens):

if use_random_iter:

self.data_iter_fn = seq_data_iter_random

else:

self.data_iter_fn = seq_data_iter_sequential

self.corpus, self.vocab = load_corpus_book(max_tokens)

self.batch_size, self.num_steps = batch_size, num_steps

def __iter__(self):

return self.data_iter_fn(self.corpus, self.batch_size, self.num_steps)

# Defined function load_data_book Return both data iterators and vocabularies

def load_data_book(batch_size, num_steps, #@save

use_random_iter=False, max_tokens=10000):

""" Returns the iterator and vocabulary of the time machine dataset ."""

data_iter = SeqDataLoader(batch_size, num_steps, use_random_iter,max_tokens)

return data_iter, data_iter.vocab

# Load data

batch_size,num_steps = 32,35

train_iter,vocab = load_data_book(batch_size,num_steps)

2. Initialize model parameters

num_hiddens Is an adjustable super parameter , When training the network model , The input and output come from the same Thesaurus , So it has the same dimension , Equal to the size of the vocabulary

def get_params(vocab_size,num_hiddens,device):

num_inputs = num_outputs = vocab_size

def normal(shape):

return torch.randn(size=shape,device=device)*0.01

# Parameters of hidden layer

W_xh = normal((num_inputs,num_hiddens)) # Map input to hidden layers

W_hh = normal((num_hiddens,num_hiddens)) # Hidden variables from the previous time to the next time

b_h = torch.zeros(num_hiddens,device=device) # For every hidden variable bias

# Output layer

W_hq = normal((num_hiddens,num_outputs)) # Hide variables to output

b_q = torch.zeros(num_outputs,device=device)

# Additional gradient

params = [W_xh,W_hh,b_h,W_hq,b_q]

for param in params:

param.requires_grad_(True)

return params

3. Cyclic neural network model

In order to define the cyclic neural network model , We first need a function to return the hidden state during initialization . It returns a tensor , All use 0 fill , Its shape is ( Batch size , Number of hidden units ). Using tuples makes it easier to deal with situations where hidden states contain multiple variables .

def init_rnn_state(batch_size,num_hiddens,device):

return (torch.zeros((batch_size,num_hiddens),device=device),)

Use rnn The function defines how to calculate the hidden state and output in a time step . Please note that , The recurrent neural network model passes through the outermost dimension inputs loop , In order to update the hidden status of small batches step by step H. Activate function using tanh function . As described in the chapter of multi-layer perceptron , When elements are evenly distributed over real numbers ,tanh The average value of is 0

def rnn(inputs, state, params):

# `inputs` The shape of the :(` Number of time steps `, ` Batch size `, ` Vocabulary size `)

W_xh, W_hh, b_h, W_hq, b_q = params

H, = state # H Is the hidden state of the previous moment

outputs = []

# `X` The shape of the :(` Batch size `, ` Vocabulary size `)

for X in inputs:

""" Traverse the data in chronological order """

H = torch.tanh(torch.mm(X, W_xh) + torch.mm(H, W_hh) + b_h)

Y = torch.mm(H, W_hq) + b_q

outputs.append(Y)

return torch.cat(outputs, dim=0), (H,)

# Integrate all the above functions with one class , And store RNN Model parameters of

class RNNModelScratch: #@save

""" A recurrent neural network model implemented from scratch """

def __init__(self, vocab_size, num_hiddens, device, get_params,

init_state, forward_fn):

self.vocab_size, self.num_hiddens = vocab_size, num_hiddens

self.params = get_params(vocab_size, num_hiddens, device)

self.init_state, self.forward_fn = init_state, forward_fn

def __call__(self, X, state):

X = F.one_hot(X.T, self.vocab_size).type(torch.float32)

return self.forward_fn(X, state, self.params)

def begin_state(self, batch_size, device):

return self.init_state(batch_size, self.num_hiddens, device)

# Check whether the output shape is correct , Ensure that the dimension of the hidden state remains unchanged

num_hiddens = 512

net = RNNModelScratch(len(vocab),num_hiddens,d2l.try_gpu(),get_params,init_rnn_state,rnn)

state = net.begin_state(X.shape[0],d2l.try_gpu())

Y,new_state = net(X.to(d2l.try_gpu()),state)

Y.shape, len(new_state), new_state[0].shape

(torch.Size([10, 28]), 1, torch.Size([2, 512]))

The output shape is ( Time steps * Batch size , Vocabulary size ), The shape of the hidden state remains unchanged , namely ( Batch size , Number of hidden units )

4. forecast

Define prediction functions to generate user provided prefix New characters after ,prefix Is a string containing multiple characters . stay prefix When you loop through these starting characters , Constantly pass the hidden state to the next time step , Without generating any output .

This is known as " Preheat period "(warm-up), During this period, the model will update itself ( for example , Update hidden status ), But no prediction .

After the forecast period , The hidden state is better than the initial value .

def predict(prefix,num_preds,net,vocab,device):

""" stay prefix New characters are generated later """

state = net.begin_state(batch_size=1,device=device)

outputs = [vocab[prefix[0]]]

get_input = lambda: torch.tensor([outputs[-1]], device=device).reshape((1, 1))

for y in prefix[1:]: # Preheat period

_,state = net(get_input(),state)

outputs.append(vocab[y])

for _ in range(num_preds): # Conduct `num_preds` Next step prediction

y,state = net(get_input(),state)

outputs.append(int(y.argmax(dim=1).reshape(1)))

return "".join([vocab.idx_to_token[i] for i in outputs])

predict('time traveller ', 10, net, vocab, d2l.try_gpu())

'time traveller awmjvgtmjv'

5. Gradient cut

For length is T T T Sequence , We calculate these in iterations T T T Gradient over time steps , Thus, a length of O ( T ) \mathcal{O}(T) O(T) Matrix multiplication chain . When T T T large , It may cause numerical instability , For example, the gradient may explode or disappear . therefore , Recurrent neural network models often need additional help to stabilize training .

Generally speaking , When solving optimization problems , We take steps to update the model parameters , For example, in vector form x \mathbf{x} x in , Negative gradient in small batches g \mathbf{g} g In the direction of . for example , Use η > 0 \eta > 0 η>0 As a learning rate , In an iteration , We will x \mathbf{x} x Updated to x − η g \mathbf{x} - \eta \mathbf{g} x−ηg. Let's further assume that the objective function f f f To perform well , for example , Liebhiz continuous (Lipschitz continuous) constant L L L. in other words , For any x \mathbf{x} x and y \mathbf{y} y We have :

∣ f ( x ) − f ( y ) ∣ ≤ L ∥ x − y ∥ . |f(\mathbf{x}) - f(\mathbf{y})| \leq L \|\mathbf{x} - \mathbf{y}\|. ∣f(x)−f(y)∣≤L∥x−y∥.

under these circumstances , We can reasonably assume that , If we pass the parameter vector η g \eta \mathbf{g} ηg to update , that :

∣ f ( x ) − f ( x − η g ) ∣ ≤ L η ∥ g ∥ , |f(\mathbf{x}) - f(\mathbf{x} - \eta\mathbf{g})| \leq L \eta\|\mathbf{g}\|, ∣f(x)−f(x−ηg)∣≤Lη∥g∥,

This means that we will not observe more than L η ∥ g ∥ L \eta \|\mathbf{g}\| Lη∥g∥ The change of . This is both a curse and a blessing . On the cursed side , It limits the speed of progress ; And on the blessing side , It limits if we move in the wrong direction , The extent to which things can go wrong .

Sometimes the gradient can be very large , The optimization algorithm may not converge . We can reduce η \eta η Learning rate to solve this problem . But what if we rarely get large gradients ? under these circumstances , This seems totally groundless . A popular alternative is by incorporating gradients g \mathbf{g} g Project back to the ball of a given radius ( for example θ \theta θ) To crop the gradient g \mathbf{g} g. As follows :

g ← min ( 1 , θ ∥ g ∥ ) g . \mathbf{g} \leftarrow \min\left(1, \frac{\theta}{\|\mathbf{g}\|}\right) \mathbf{g}. g←min(1,∥g∥θ)g.

By doing so , We know that the gradient norm will never exceed θ \theta θ, And the updated gradient is completely consistent with g \mathbf{g} g Align with the original direction of the . It also has an ideal side effect , That is, limit any given small batch ( And any given sample ) Effect on parameter vector . This gives the model a certain degree of robustness . Gradient clipping provides a fast way to repair gradient explosion . Although it doesn't completely solve the problem , But it is one of many technologies to alleviate the problem .

Next, we define a function to trim the model implemented from scratch or by advanced API Gradient of the constructed model . Also pay attention to , We calculate the gradient norm of all model parameters .

def grad_clipping(net, theta): #@save

""" Clipping gradient ."""

if isinstance(net, nn.Module):

params = [p for p in net.parameters() if p.requires_grad]

else:

params = net.params

norm = torch.sqrt(sum(torch.sum((p.grad**2)) for p in params))

if norm > theta:

for param in params:

param.grad[:] *= theta / norm

6. Training

Before training the model , Let's define a function to train a model with only one iteration cycle . It trains with us softmax The way of regression model has three differences :

- Different sampling methods for sequential data ( Random sampling and sequential partitioning ) Will result in differences in hidden state initialization .

- We crop the gradient before updating the model parameters . This ensures that even at some point in the training process, the gradient explodes , The model does not diverge .

- We use the degree of confusion to evaluate the model . It ensures the comparability of sequences with different lengths .

To be specific , When using sequential partitions , We only initialize the hidden state at the beginning of each iteration cycle . Due to the next small batch i t h i^\mathrm{th} ith Subsequence samples and current i t h i^\mathrm{th} ith Subsequence samples are adjacent , Therefore, the hidden state at the end of the current small batch will be used to initialize the hidden state at the beginning of the next small batch . such , The sequence history information stored in the hidden state can flow through adjacent subsequences in an iteration cycle . However , Any hidden state calculation depends on all the previous small batches in the same iteration cycle , This makes the gradient calculation complex . In order to reduce the amount of calculation , We separate the gradient before processing any small batch , The gradient calculation of hidden state is always limited to a small batch of time steps .

When using random sampling , We need to reinitialize the hidden state for each iteration cycle , Because each sample is sampled at a random location .

def train_epoch(net, train_iter, loss, updater, device, use_random_iter):

""" The training model has an iterative cycle ."""

state, timer = None, d2l.Timer()

metric = d2l.Accumulator(2) # The sum of training losses , Number of tags

for X, Y in train_iter:

if state is None or use_random_iter:

# Initialize at the first iteration or when random sampling is used `state`

state = net.begin_state(batch_size=X.shape[0], device=device)

else:

if isinstance(net, nn.Module) and not isinstance(state, tuple):

# `state` about `nn.GRU` It's a tensor

state.detach_()

else:

# `state` about `nn.LSTM` Or for the model we implemented from scratch, it's a tensor

for s in state:

s.detach_()

y = Y.T.reshape(-1)

X, y = X.to(device), y.to(device)

y_hat, state = net(X, state)

l = loss(y_hat, y.long()).mean()

if isinstance(updater, torch.optim.Optimizer):

updater.zero_grad()

l.backward()

grad_clipping(net, 1)

updater.step()

else:

l.backward()

grad_clipping(net, 1)

# Because... Has been called `mean` function

updater(batch_size=1)

metric.add(l * y.numel(), y.numel())

return math.exp(metric[0] / metric[1]), metric[1] / timer.stop()

#@save

def train(net, train_iter, vocab, lr, num_epochs, device,use_random_iter=False):

""" Training models """

loss = nn.CrossEntropyLoss()

animator = d2l.Animator(xlabel='epoch', ylabel='perplexity',

legend=['train'], xlim=[10, num_epochs])

# initialization

if isinstance(net, nn.Module):

updater = torch.optim.SGD(net.parameters(), lr)

else:

updater = lambda batch_size: d2l.sgd(net.params, lr, batch_size)

predict_train = lambda prefix: predict(prefix, 50, net, vocab, device)

# Training and forecasting

for epoch in range(num_epochs):

ppl, speed = train_epoch(net, train_iter, loss, updater, device,

use_random_iter)

if (epoch + 1) % 10 == 0:

print(predict_train('time traveller'))

animator.add(epoch + 1, [ppl])

print(f' Confusion {

ppl:.1f}, {

speed:.1f} Mark / second {

str(device)}')

print(predict_train('the planet mars i scarcely'))

print(predict_train('scarcely'))

Now we can train the recurrent neural network model . Because we only use 10000 A sign , So the model needs more iteration cycles to converge better .

num_epochs, lr = 500, 1

train(net, train_iter, vocab, lr, num_epochs, d2l.try_gpu())

Confusion 1.0, 172140.2 Mark / second cuda:0

the planet mars i scarcely need remind the reader revolves about thesun at a

scarcely ore seventh ofthe volume of the earth must have a

Last , Let's check the results of using random sampling .

train(net, train_iter, vocab, lr, num_epochs, d2l.try_gpu(),use_random_iter=True)

Confusion 1.4, 172089.0 Mark / second cuda:0

<unk>he planet <unk>ars<unk> <unk> scarcely one seventh ofthe volume of the earth must have a

scarcely one seventh ofthe volume of the earth must have a

Summary

- We can train a character level language model based on recurrent neural network , Generate text according to the text prefix provided by the user .

- A simple recurrent neural network language model includes input coding 、 Cyclic neural network model and output generation .

- The recurrent neural network model needs state initialization to train , Although random sampling and sequential division use different methods .

- When using sequential partitioning , We need to separate the gradient to reduce the amount of calculation .

- The warm-up period allows the model to update itself before making any predictions ( for example , Get a better hidden state than the initial value ).

- Gradient clipping can prevent gradient explosion , But it can't cope with the disappearance of the gradient .

practice

- It shows that exclusive coding is equivalent to selecting different embedding for each object .

- By adjusting the super parameters ( Such as the number of iteration cycles 、 Number of hidden units 、 Time steps of small batch 、 Learning rate, etc ) To improve confusion .

- How low can you lower ?

- Replace the hot code with learnable embedding . Will this lead to better performance ?

- How does it work in other books , for example Star Wars: ?

- Modify the prediction function , For example, use sampling , Instead of choosing the most likely next character .

- What's going to happen ?

- Bias the model towards more likely outputs , for example , from q ( x t ∣ x t − 1 , … , x 1 ) ∝ P ( x t ∣ x t − 1 , … , x 1 ) α q(x_t \mid x_{t-1}, \ldots, x_1) \propto P(x_t \mid x_{t-1}, \ldots, x_1)^\alpha q(xt∣xt−1,…,x1)∝P(xt∣xt−1,…,x1)α Mid extraction α > 1 \alpha > 1 α>1.

- Run the code in this section without clipping the gradient . What will happen ?

- Change the order division , Make it not separate the hidden state from the calculation diagram . Is there any change in the running time ? How confused is it ?

- use ReLU Replace the activation function used in this section , And repeat the experiment in this section . Do we still need gradient clipping ? Why? ?

边栏推荐

- How does idea withdraw code from remote push

- Implementing expired localstorage cache with lazy deletion and scheduled deletion

- 回复评论的sql

- UML sequence diagram [easy to understand]

- pcl::fromROSMsg报警告Failed to find match for field ‘intensity‘.

- Summary of small program performance optimization practice

- xxl-job惊艳的设计,怎能叫人不爱

- "How to connect the Internet" reading notes - FTTH

- Research Report on the development trend and Prospect of global and Chinese zinc antimonide market Ⓚ 2022 ~ 2027

- el-table单选并隐藏全选框

猜你喜欢

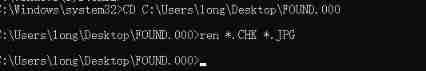

How to batch change file extensions in win10

mmclassification 标注文件生成

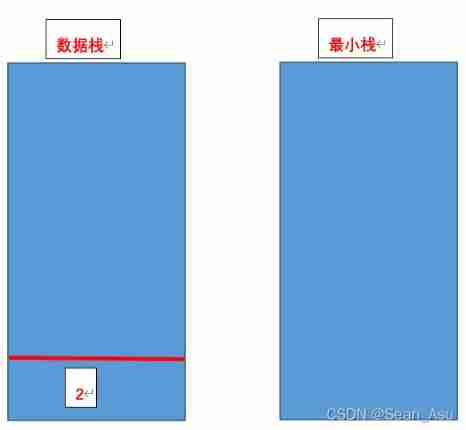

Sword finger offer 30 contains the stack of Min function

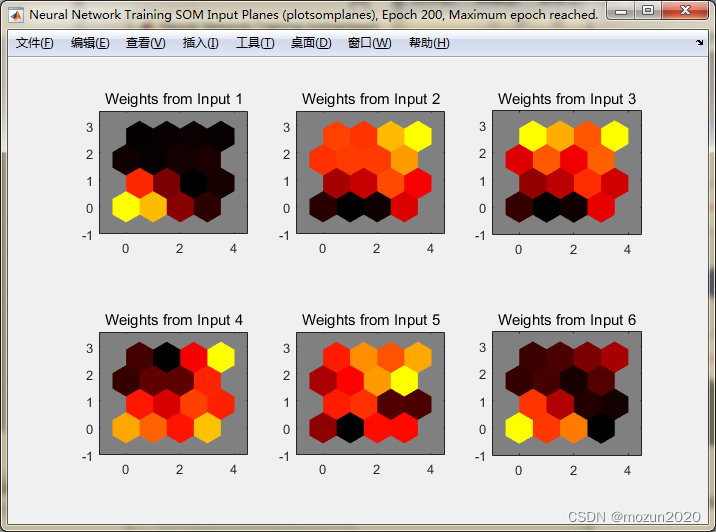

MATLAB小技巧(25)竞争神经网络与SOM神经网络

2022-2028 global elastic strain sensor industry research and trend analysis report

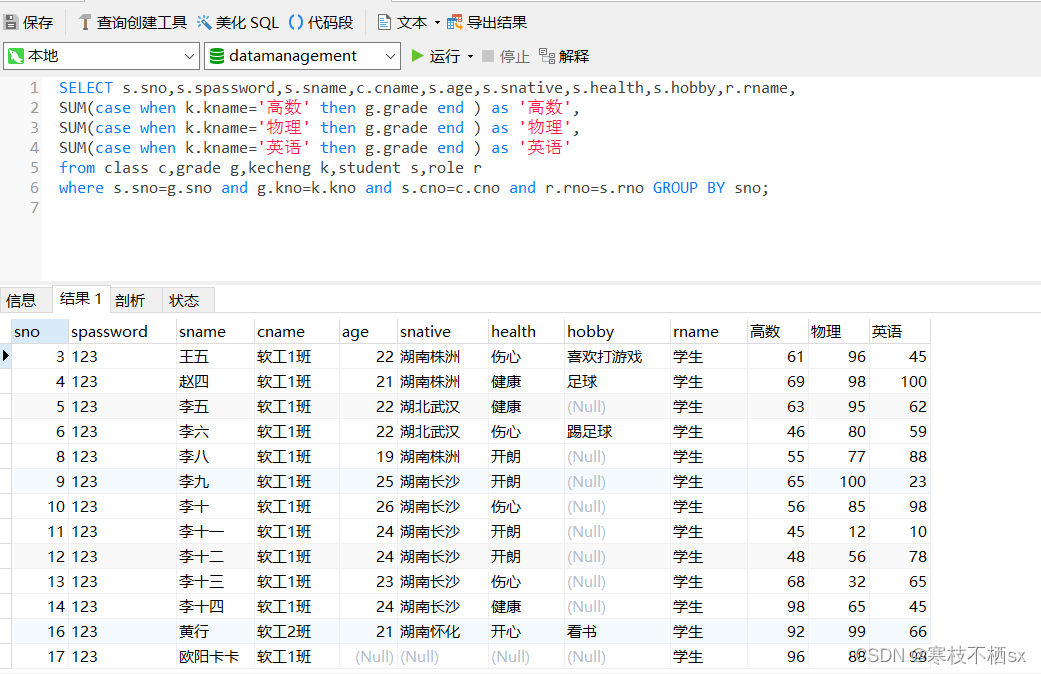

回复评论的sql

How does idea withdraw code from remote push

el-table单选并隐藏全选框

At the age of 30, I changed to Hongmeng with a high salary because I did these three things

2022-2028 global seeder industry research and trend analysis report

随机推荐

HMS core helps baby bus show high-quality children's digital content to global developers

如何编写单元测试用例

C # use gdi+ to add text with center rotation (arbitrary angle)

Opencv environment construction (I)

Daughter love in lunch box

Report on investment analysis and prospect trend prediction of China's MOCVD industry Ⓤ 2022 ~ 2028

2022-2028 global intelligent interactive tablet industry research and trend analysis report

什么是uid?什么是Auth?什么是验证器?

Luogu deep foundation part 1 Introduction to language Chapter 4 loop structure programming (2022.02.14)

"How to connect the Internet" reading notes - FTTH

Write a jison parser (7/10) from scratch: the iterative development process of the parser generator 'parser generator'

2022-2028 global tensile strain sensor industry research and trend analysis report

QTreeView+自定义Model实现示例

The 14th five year plan and investment risk analysis report of China's hydrogen fluoride industry 2022 ~ 2028

2022-2028 global special starch industry research and trend analysis report

H5 audio tag custom style modification and adding playback control events

"How to connect the network" reading notes - Web server request and response (4)

2022-2028 global optical transparency industry research and trend analysis report

Golang 类型比较

《网络是怎么样连接的》读书笔记 - 认识网络基础概念(一)