PyTorch training process was interrupted

2022-07-05 11:17:00 【IMQYT】

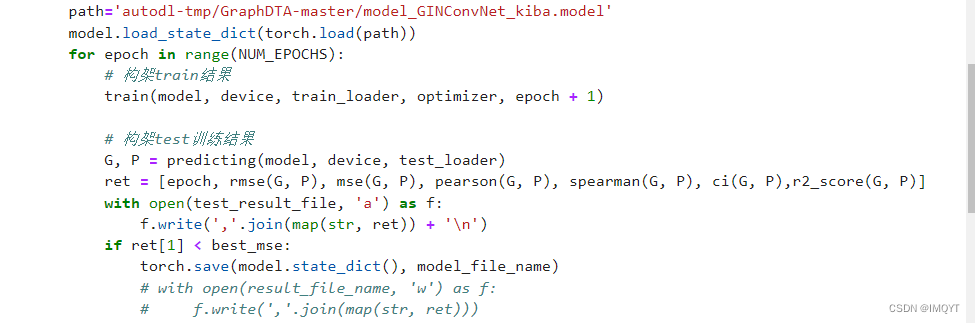

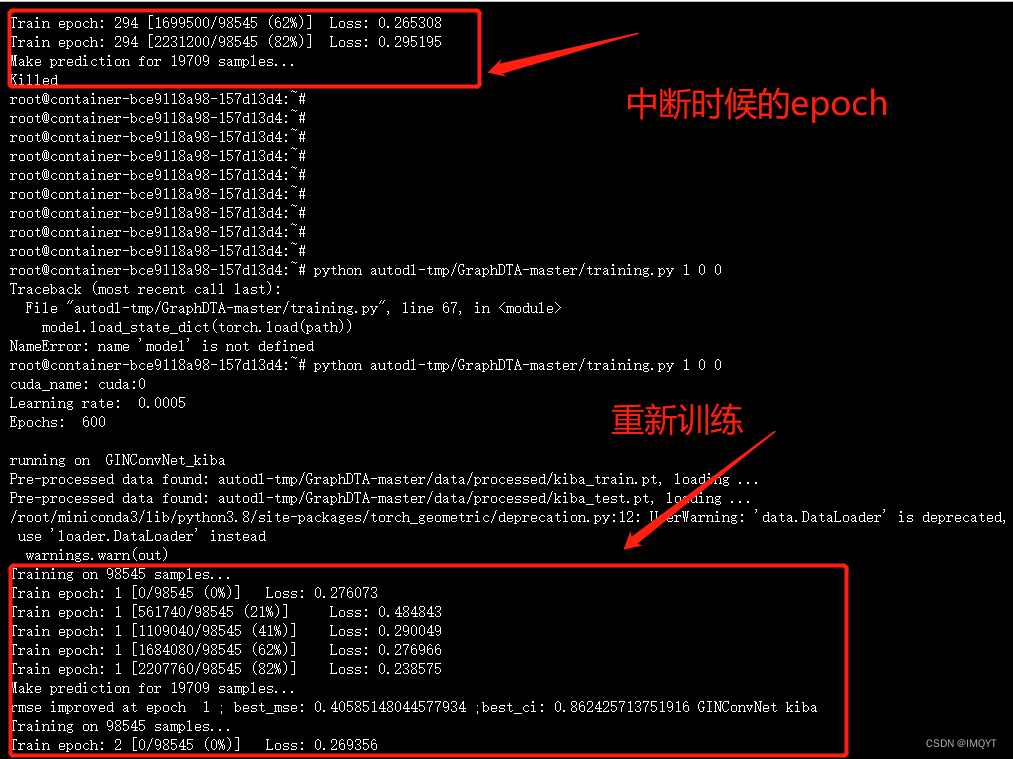

I was scared to death. A training run that had been going for three days was killed, by my own hand, and I almost cried. Had a whole week of server rental money been wasted? Had all that time been wasted? Could it be salvaged? It was my first time dealing with this, and my code runs very slowly (on an RTX A5000, which by rights should not be slow, but there is a lot of data). To avoid wasting time on logging I/O, I wrote no logs at all; only the model was saved. My hands were already shaking.

Enough talk. How can it be remedied?

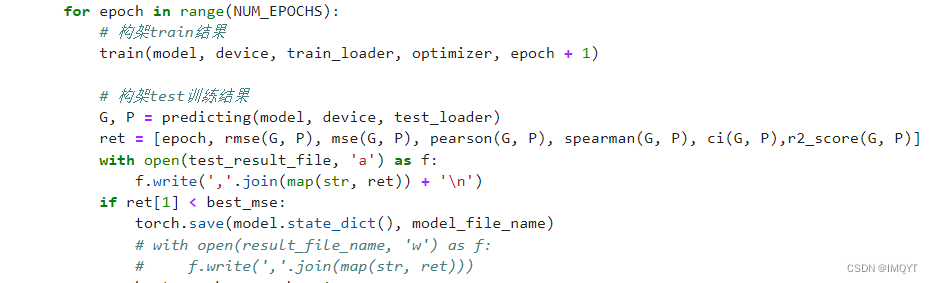

My code saved only the model, with torch.save. Nothing else was saved, not even the epoch number. After reading through a lot of other people's experiences, I finally found a remedy.
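In hindsight, the missing epoch number and optimizer state are what made recovery awkward. The following is a minimal sketch (not the training script's own code) of saving a fuller checkpoint with `torch.save`. The key names `epoch`, `model_state_dict` and `optimizer_state_dict`, the file name, and the toy `nn.Linear` model are illustrative assumptions.

```python
import torch
import torch.nn as nn


def save_checkpoint(path, model, optimizer, epoch):
    """Save what is needed to resume training, not just the weights.

    The key names are an illustrative convention, not a PyTorch requirement.
    """
    torch.save({
        'epoch': epoch,                                  # last fully completed epoch
        'model_state_dict': model.state_dict(),          # learned weights
        'optimizer_state_dict': optimizer.state_dict(),  # e.g. Adam's running averages
    }, path)


# Toy stand-ins for the real network and optimizer (hypothetical)
model = nn.Linear(4, 1)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Call this at the end of each epoch, or every few epochs to limit disk I/O
save_checkpoint('checkpoint_demo.pt', model, optimizer, epoch=294)
```

Overwriting a single checkpoint file keeps disk usage constant; writing one file per epoch lets you roll back further at the cost of more space.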

Reload the model

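```python
import torch
path = 'autodl-tmp/GraphDTA-master/model_GINConvNet_kiba.model'
# `model` must already be built with the same architecture used in training
model.load_state_dict(torch.load(path))
```

With this, what the model had learned is back, and the loss carries on from where it was.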
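Loading works the same way for a richer checkpoint. As a hedged sketch, a helper like the hypothetical `load_for_resume` below restores weights from either a bare `state_dict` (what this run saved) or a dict checkpoint using the assumed keys `model_state_dict`, `optimizer_state_dict` and `epoch`, and reports the last completed epoch when the file records one. With a bare `state_dict`, optimizer state such as Adam's moment estimates is not restored, so the optimizer starts fresh.

```python
import torch
import torch.nn as nn


def load_for_resume(path, model, optimizer=None, map_location='cpu'):
    """Restore a model (and optionally an optimizer) from `path`.

    Returns the last completed epoch stored in the file, or None when the file
    is a bare state_dict and the epoch has to be supplied by hand.
    """
    ckpt = torch.load(path, map_location=map_location)
    if 'model_state_dict' in ckpt:  # dict checkpoint (assumed key names)
        model.load_state_dict(ckpt['model_state_dict'])
        if optimizer is not None and 'optimizer_state_dict' in ckpt:
            optimizer.load_state_dict(ckpt['optimizer_state_dict'])
        return ckpt.get('epoch')
    model.load_state_dict(ckpt)  # bare state_dict: weights only
    return None


# Demo: a weights-only file, like one written by torch.save(model.state_dict(), path)
original = nn.Linear(4, 1)
torch.save(original.state_dict(), 'weights_only_demo.pt')

restored = nn.Linear(4, 1)
last_epoch = load_for_resume('weights_only_demo.pt', restored)
print(last_epoch)  # None: the epoch was never saved, so set it manually (294 here)
```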

From this I could see that the loss really did carry on from the 294 epochs already trained, and the predicted values likewise continued from the results after epoch 294. Fortunately, I got it back. But there was one problem: the epoch counter appeared to start again from 1, so would it now train for another 600 epochs? Remember to change the total number of epochs: 600 - 294 = 306. Although the console shows the count starting again at 1, the retraining will finish after 306 epochs. Done.
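The arithmetic above can also be built into the loop so that the printed epoch numbers continue the original run instead of restarting at 1. This is a minimal sketch, assuming the loop variable is only used for numbering and logging; `TOTAL_EPOCHS` and `COMPLETED` are illustrative names holding the figures from this run.

```python
TOTAL_EPOCHS = 600  # total planned for the whole run
COMPLETED = 294     # epochs finished before the process was killed

# Continue the numbering at 295 instead of restarting at 1
epochs = range(COMPLETED + 1, TOTAL_EPOCHS + 1)
print(len(epochs), epochs[0], epochs[-1])  # 306 295 600

for epoch in epochs:
    pass  # train and evaluate one epoch here; `epoch` matches the original numbering
```

If a learning-rate scheduler is used, it would likewise restart from its initial state unless its own state was saved too.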

边栏推荐

- -26374 and -26377 errors during coneroller execution

- COMSOL--三维图形的建立

- Process control

- Operators

- MFC pet store information management system

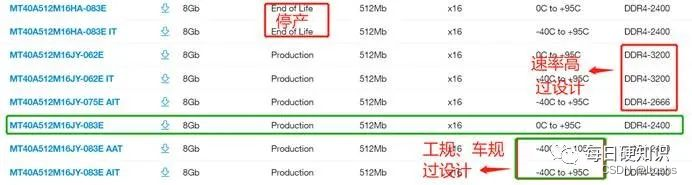

- Detailed explanation of DDR4 hardware schematic design

- Array

- go语言学习笔记-初识Go语言

- Advanced scaffold development

- Go language learning notes - first acquaintance with go language

猜你喜欢

2022 t elevator repair operation certificate examination questions and answers

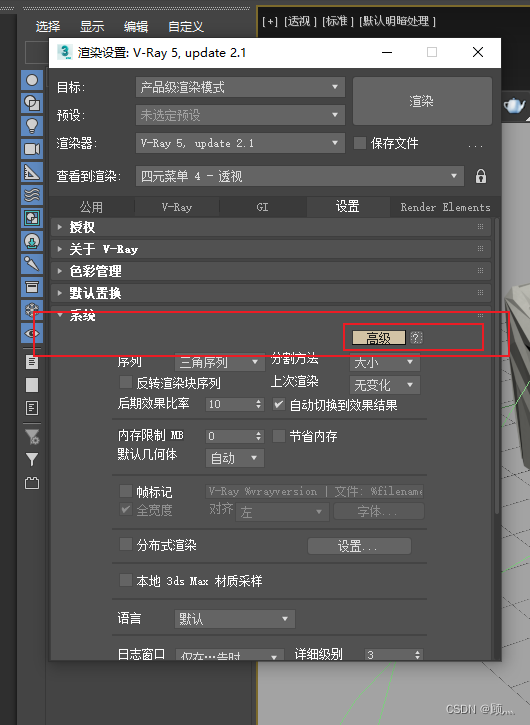

How to close the log window in vray5.2

2022 mobile crane driver examination question bank and simulation examination

Intelligent metal detector based on openharmony

Go language learning notes - first acquaintance with go language

DDR4硬件原理图设计详解

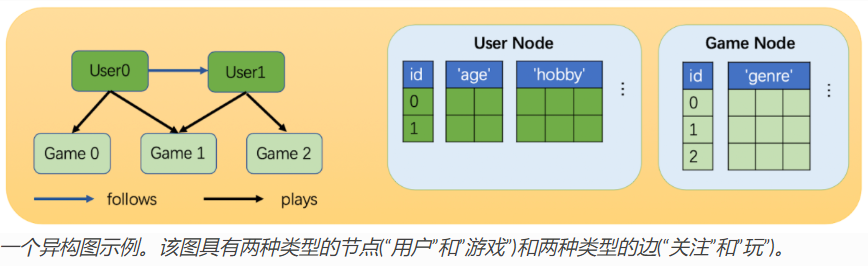

Some understandings of heterogeneous graphs in DGL and the usage of heterogeneous graph convolution heterographconv

How to make full-color LED display more energy-saving and environmental protection

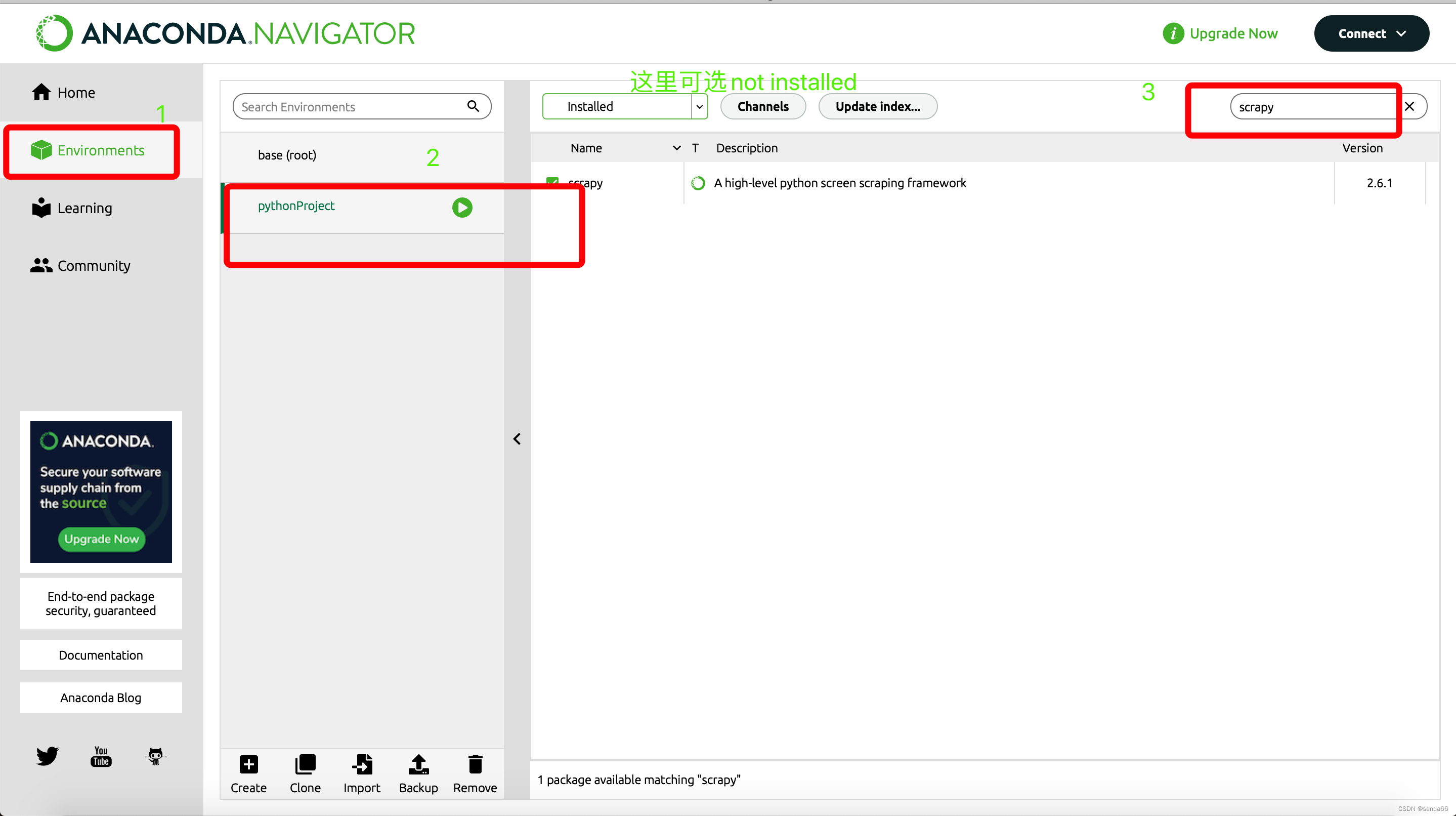

Modulenotfounderror: no module named 'scratch' ultimate solution

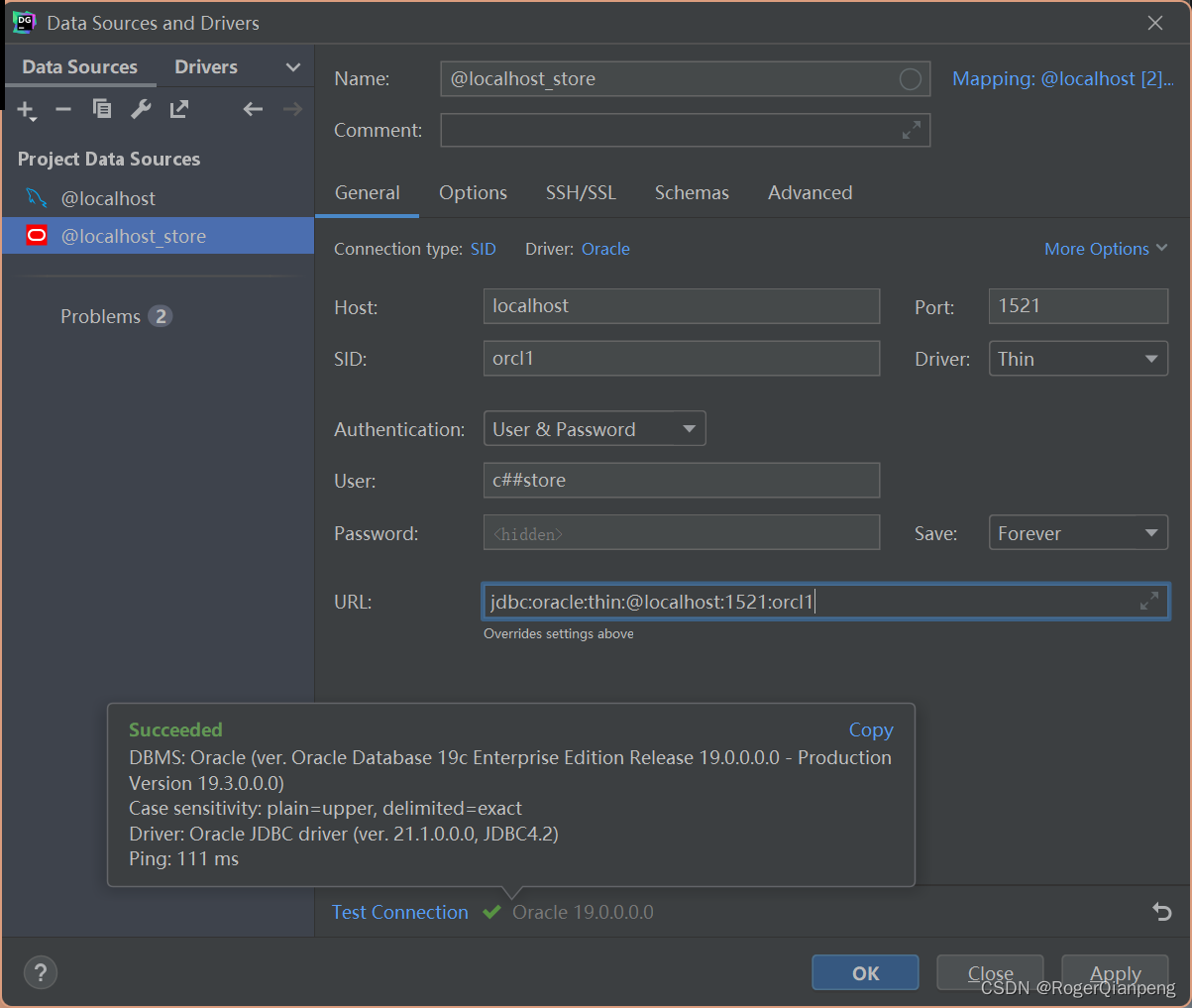

【Oracle】使用DataGrip连接Oracle数据库

随机推荐

uniapp

【DNS】“Can‘t resolve host“ as non-root user, but works fine as root

Scaffold development foundation

Advanced scaffold development

Technology sharing | common interface protocol analysis

解决readObjectStart: expect { or n, but found N, error found in #1 byte of ...||..., bigger context ..

A mining of edu certificate station

Three suggestions for purchasing small spacing LED display

32: Chapter 3: development of pass service: 15: Browser storage media, introduction; (cookie,Session Storage,Local Storage)

go语言学习笔记-初识Go语言

高校毕业求职难?“百日千万”网络招聘活动解决你的难题

LSTM applied to MNIST dataset classification (compared with CNN)

How to make full-color LED display more energy-saving and environmental protection

一次edu证书站的挖掘

When using gbase 8C database, an error is reported: 80000502, cluster:%s is busy. What's going on?

[there may be no default font]warning: imagettfbbox() [function.imagettfbbox]: invalid font filename

力扣(LeetCode)185. 部门工资前三高的所有员工(2022.07.04)

comsol--三维图形随便画----回转

Bracket matching problem (STL)

spark调优(一):从hql转向代码