[Multi-task optimization] DWA, DTP, Gradnorm (CVPR 2019, ECCV 2018, ICML 2018)

2022-08-01 20:00:00 【chad_lee】

Optimization of multi-task learning models

With multiple tasks come multiple losses. A common MTL model simply adds the losses of all tasks directly:

$$L=\sum_{i} L_{i}$$

This approach has an obvious problem: different tasks have different label distributions, and their losses also differ in magnitude, so the whole model can easily be dominated by the tasks whose losses are especially large. The simplest remedy is to weight the losses with manually designed weights:

$$L=\sum_{i} w_{i} * L_{i}$$

With this approach, however, the weights stay fixed throughout training, while the appropriate weights may differ between training stages. Dynamic weights take the form:

$$L=\sum_{i} w_{i}(t, \theta) * L_{i}$$

where $t$ is the training step and $\theta$ denotes the other parameters of the model. However, dynamic weighting is not necessarily better than carefully hand-designed weights.

Some methods for designing $w_{i}(t, \theta)$:

《End-to-End Multi-Task Learning with Attention》 CVPR 2019

Dynamic Weight Averaging (DWA), proposed in the CVPR 2019 paper 《End-to-End Multi-Task Learning with Attention》, is defined by the following core formulas:

$$\begin{gathered} r_{n}(t-1)=\frac{L_{n}(t-1)}{L_{n}(t-2)} \\ w_{i}(t)=\frac{N \exp \left(r_{i}(t-1) / T\right)}{\sum_{n} \exp \left(r_{n}(t-1) / T\right)} \end{gathered}$$

$L_{n}(t-1)$ is the training loss of task $n$ at step $t-1$, so $r_{n}(t-1)$ measures how fast that loss is decreasing: the smaller $r_{n}(t-1)$, the faster the task is training (it has started to converge, and it is finished once its loss reaches 0).

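$w_i(t)$ is the weight of task $i$'s loss. Intuitively, the faster a task's loss converges, the smaller its weight. The factor $N$ (the number of tasks) makes the weights sum to $N$, and the temperature $T$ controls how evenly the weights are spread: a larger $T$ pushes all weights closer to 1.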
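
The DWA formulas can be sketched in a few lines of plain Python. This is a minimal illustration, not the paper's code: the function name `dwa_weights` is hypothetical, the default `T = 2.0` is an assumption, and using $r_n = 1$ (uniform weights) when fewer than two past losses exist is an assumed warm-up rule.

```python
import math
from typing import List, Optional, Sequence


def dwa_weights(
    loss_prev: Optional[Sequence[float]],   # L_n(t-1) for every task n
    loss_prev2: Optional[Sequence[float]],  # L_n(t-2) for every task n
    num_tasks: int,
    temperature: float = 2.0,               # T (assumed default)
) -> List[float]:
    """Hypothetical helper: DWA loss weights w_i(t) for the current step."""
    if loss_prev is None or loss_prev2 is None:
        # Assumed warm-up: r_n = 1 for all tasks, which gives w_i = 1
        ratios = [1.0] * num_tasks
    else:
        # r_n(t-1) = L_n(t-1) / L_n(t-2): how fast each task's loss is falling
        ratios = [a / b for a, b in zip(loss_prev, loss_prev2)]
    # Softmax over r_n / T, scaled by N so that the weights sum to N
    exps = [math.exp(r / temperature) for r in ratios]
    total = sum(exps)
    return [num_tasks * e / total for e in exps]


# Task 0's loss halved (fast), task 1's loss barely moved (slow)
print(dwa_weights([0.5, 0.9], [1.0, 1.0], num_tasks=2))
```

In this example task 1, whose loss is falling more slowly, receives the larger weight, and the two weights sum to 2.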

《Dynamic task prioritization for multitask learning》 ECCV 2018

DTP (Dynamic Task Prioritization) defines the weights as:

$$w_{i}(t)=-\left(1-k_{i}(t)\right)^{\gamma_{i}} \log \left(k_{i}(t)\right)$$

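$k_i(t)$ is the value of a KPI (key performance indicator) for task $i$ at step $t$, taking values between 0 and 1; for a classification task, for example, the KPI can be the accuracy on the training set. It reflects how well the model currently fits the task. $\gamma_i$ is a manually tuned focusing parameter. The intuition is similar to focal loss: the better the model performs on a task (the higher its KPI), the smaller the weight that task receives.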
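
A minimal sketch of the DTP weight formula in plain Python, assuming the per-task KPIs are already available as numbers in [0, 1]. The function name `dtp_weights` and the `eps` clamp (which keeps $\log k_i$ finite at 0 or 1) are hypothetical additions, and any smoothing of the KPI over time is omitted.

```python
import math
from typing import List, Sequence


def dtp_weights(
    kpis: Sequence[float],    # k_i(t) in [0, 1], e.g. training accuracy per task
    gammas: Sequence[float],  # gamma_i, hand-tuned per task
    eps: float = 1e-7,        # hypothetical clamp so that log(k) stays finite
) -> List[float]:
    """Hypothetical helper: focal-loss-style DTP weights w_i(t)."""
    weights = []
    for k, gamma in zip(kpis, gammas):
        k = min(max(k, eps), 1.0 - eps)
        # w_i(t) = -(1 - k_i)^gamma_i * log(k_i): high KPI -> small weight
        weights.append(-((1.0 - k) ** gamma) * math.log(k))
    return weights


# Task 0 is already well fit (KPI 0.9), task 1 is not (KPI 0.5)
print(dtp_weights([0.9, 0.5], [2.0, 2.0]))
```

Here task 1 gets a weight more than a hundred times larger than task 0; increasing $\gamma_i$ suppresses the weight of well-fit tasks even more strongly.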

《Gradnorm: Gradient normalization for adaptive loss balancing in deep multitask networks》 ICML 2018

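GradNorm is the most influential of the three. Its core idea is more elaborate than those of DWA and DTP; its main goals are:

- to consider not only how fast each loss converges, but also to keep the magnitudes of the losses themselves as close as possible;

- to make different tasks train at similar speeds (which is tied to their gradients).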

Starting from the parameterized dynamic weighting $L=\sum_{i} w_{i}(t, \theta) * L_{i}$, the authors additionally define a gradient loss with respect to the training weights $w_i(t,\theta)$ (that is, a separate loss used to optimize the weights of the training losses).

$w_i(t)$ is initialized to 1 (or to hyperparameter values) and then optimized with the gradient loss.

First compute the L2 norm of the gradient of task $i$'s weighted loss at step $t$, together with its average over all tasks:

$$\begin{gathered} G_{W}^{(i)}(t)=\left\|\nabla_{W}\left(w_{i}(t) L_{i}(t)\right)\right\|_{2} \\ \bar{G}_{W}(t)=\mathrm{AVG}\left(G_{W}^{(i)}(t)\right) \end{gathered}$$

Here $W$ is a subset of the model parameters, namely the parameters to which Gradient Normalization is applied; typically the last shared layer of the model is chosen, which keeps the extra computation small. Next, compute the training rate of each task's loss:

$$\begin{gathered} \tilde{L}_{i}(t)=L_{i}(t) / L_{i}(0) \\ r_{i}(t)=\tilde{L}_{i}(t) / \mathrm{AVG}\left(\tilde{L}_{i}(t)\right) \end{gathered}$$

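$r_{i}(t)$ measures the (inverse) training rate of task $i$: the larger $r_{i}(t)$, the more slowly the task is training. This is close to the idea behind DWA, except that GradNorm divides by the loss at the first step, $L_i(0)$, rather than by the loss at the previous step as DWA does.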
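
The two quantities above can be illustrated in plain Python, assuming the per-task gradients on the shared parameters $W$ have already been computed and flattened into lists (in a real model they would come from an autograd framework). Both function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def weighted_grad_norms(
    task_grads: Sequence[Sequence[float]],  # flattened grad_W L_i(t) for each task i
    weights: Sequence[float],               # current loss weights w_i(t)
) -> Tuple[List[float], float]:
    """Hypothetical helper: G_W^(i)(t) for every task and their average."""
    norms = []
    for w, grad in zip(weights, task_grads):
        # w_i is a scalar, so ||grad_W(w_i L_i)||_2 = |w_i| * ||grad_W L_i||_2
        norms.append(abs(w) * math.sqrt(sum(x * x for x in grad)))
    return norms, sum(norms) / len(norms)


def inverse_training_rates(
    initial_losses: Sequence[float],  # L_i(0)
    current_losses: Sequence[float],  # L_i(t)
) -> List[float]:
    """Hypothetical helper: r_i(t); values above 1 mean slower than average."""
    # L~_i(t) = L_i(t) / L_i(0)
    loss_ratios = [cur / init for cur, init in zip(current_losses, initial_losses)]
    mean_ratio = sum(loss_ratios) / len(loss_ratios)
    # r_i(t) = L~_i(t) / AVG(L~)
    return [x / mean_ratio for x in loss_ratios]


norms, mean_norm = weighted_grad_norms([[3.0, 4.0], [0.0, 1.0]], [1.0, 1.0])
print(norms, mean_norm)  # [5.0, 1.0] 3.0
print(inverse_training_rates([2.0, 1.0], [0.5, 0.75]))  # [0.5, 1.5]
```

In the second call, task 1 has only reduced its loss to 75% of its initial value while task 0 is at 25%, so task 1 gets $r_1 = 1.5 > 1$, marking it as the slower task.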

The final gradient loss is:

$$L_{\text{grad}}\left(t ; w_{i}(t)\right)=\sum_{i}\left|G_{W}^{(i)}(t)-\bar{G}_{W}(t) *\left[r_{i}(t)\right]^{\alpha}\right|_{1}$$

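- $\bar{G}_{W}(t) *\left[r_{i}(t)\right]^{\alpha}$ is the ideal (target) gradient norm after normalization, and it is treated as a constant when $L_{\text{grad}}$ is differentiated. **This gradient loss is used only to update $w_{i}(t)$.** After each update, the weights $w_{i}(t)$ are also renormalized so that $\sum_{i} w_{i}(t)=N$, where $N$ is the number of tasks.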

- $\alpha$ is a hyperparameter that sets the strength of the restoring force, i.e. how strongly each task's training rate is pulled back toward the average. If the tasks differ greatly in complexity, so that their learning dynamics differ substantially, a larger $\alpha$ should be used to enforce stronger training-rate balancing; conversely, for multiple similar tasks a smaller $\alpha$ is appropriate.

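- From the definition of the gradient loss: the larger $r_i(t)$ (i.e. the more slowly task $i$ is training), the larger its target $\bar{G}_{W}(t) *\left[r_{i}(t)\right]^{\alpha}$, so minimizing the gradient loss pushes that task's gradient norm, and hence $w_i(t)$, upward relative to faster tasks. The term $\left|G_{W}^{(i)}(t)-\bar{G}_{W}(t)\right|$ reflects the difference in magnitude between tasks: the gradient loss grows whether $G_{W}^{(i)}(t)$ is too large or too small.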

- In short, the gradient loss aims for (1) loss magnitudes, as measured through gradient norms, that are close across tasks, and (2) tasks that train at similar speeds (convergence speeds).
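
Putting the pieces together, one GradNorm update of the weights can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the authors' implementation: the function name `gradnorm_weight_step` is hypothetical, the unweighted norms $\|\nabla_W L_i(t)\|_2$ are assumed to be precomputed, `alpha = 1.5` and `lr = 0.025` are illustrative values only, and the clamp that keeps weights positive is my own safeguard. Because $G_W^{(i)}(t) = w_i \|\nabla_W L_i(t)\|_2$ is linear in $w_i$ and the target is held constant, the derivative of $L_{\text{grad}}$ with respect to $w_i$ has the closed form $\operatorname{sign}(G_W^{(i)} - \text{target}_i)\,\|\nabla_W L_i\|_2$; in a real network one would instead let autograd differentiate $L_{\text{grad}}$ and step an optimizer on the $w_i$.

```python
from typing import List, Sequence


def gradnorm_weight_step(
    weights: Sequence[float],         # current w_i(t), assumed positive
    raw_grad_norms: Sequence[float],  # ||grad_W L_i(t)||_2, unweighted
    initial_losses: Sequence[float],  # L_i(0)
    current_losses: Sequence[float],  # L_i(t)
    alpha: float = 1.5,               # restoring-force strength (illustrative)
    lr: float = 0.025,                # step size for the weights (illustrative)
) -> List[float]:
    """Hypothetical helper: one gradient step on L_grad, then renormalization."""
    n = len(weights)
    # G_W^(i)(t) = w_i * ||grad_W L_i(t)||_2 and its average over tasks
    g = [w * gn for w, gn in zip(weights, raw_grad_norms)]
    g_bar = sum(g) / n
    # Relative inverse training rates r_i(t)
    loss_ratios = [c / l0 for c, l0 in zip(current_losses, initial_losses)]
    mean_ratio = sum(loss_ratios) / n
    r = [x / mean_ratio for x in loss_ratios]
    new_weights = []
    for w, gi, gn, ri in zip(weights, g, raw_grad_norms, r):
        # Target G_bar * r_i^alpha is treated as a constant
        target = g_bar * ri ** alpha
        # d/dw_i |w_i * gn - target| = sign(G_i - target) * gn
        diff = gi - target
        sign = (diff > 0) - (diff < 0)
        # Gradient-descent step; the clamp keeps the weight positive (own safeguard)
        new_weights.append(max(w - lr * sign * gn, 1e-8))
    # Renormalize so that sum_i w_i(t) = N
    total = sum(new_weights)
    return [n * w / total for w in new_weights]


# Equal training rates, but task 0 has a 5x larger gradient norm
print(gradnorm_weight_step([1.0, 1.0], [5.0, 1.0], [1.0, 1.0], [0.5, 0.5]))
```

In this example task 0's weight drops below 1 and task 1's rises above 1, moving the two gradient norms toward each other; with equal gradient norms but unequal loss ratios, the slower task (larger $r_i$) would instead gain weight.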

边栏推荐

- 为什么限制了Oracle的SGA和PGA,OS仍然会用到SWAP?

- 密码学的基础:X.690和对应的BER CER DER编码

- 【nn.Parameter()】生成和为什么要初始化

- 【webrtc】sigslot : 继承has_slot 及相关流程和逻辑

- 如何记录分析你的炼丹流程—可视化神器Wandb使用笔记【1】

- 数据库系统原理与应用教程(072)—— MySQL 练习题:操作题 121-130(十六):综合练习

- 小数据如何学习?吉大最新《小数据学习》综述,26页pdf涵盖269页文献阐述小数据学习理论、方法与应用

- 智能硬件开发怎么做?机智云全套自助式开发工具助力高效开发

- 手撸代码,Redis发布订阅机制实现

- deploy zabbix

猜你喜欢

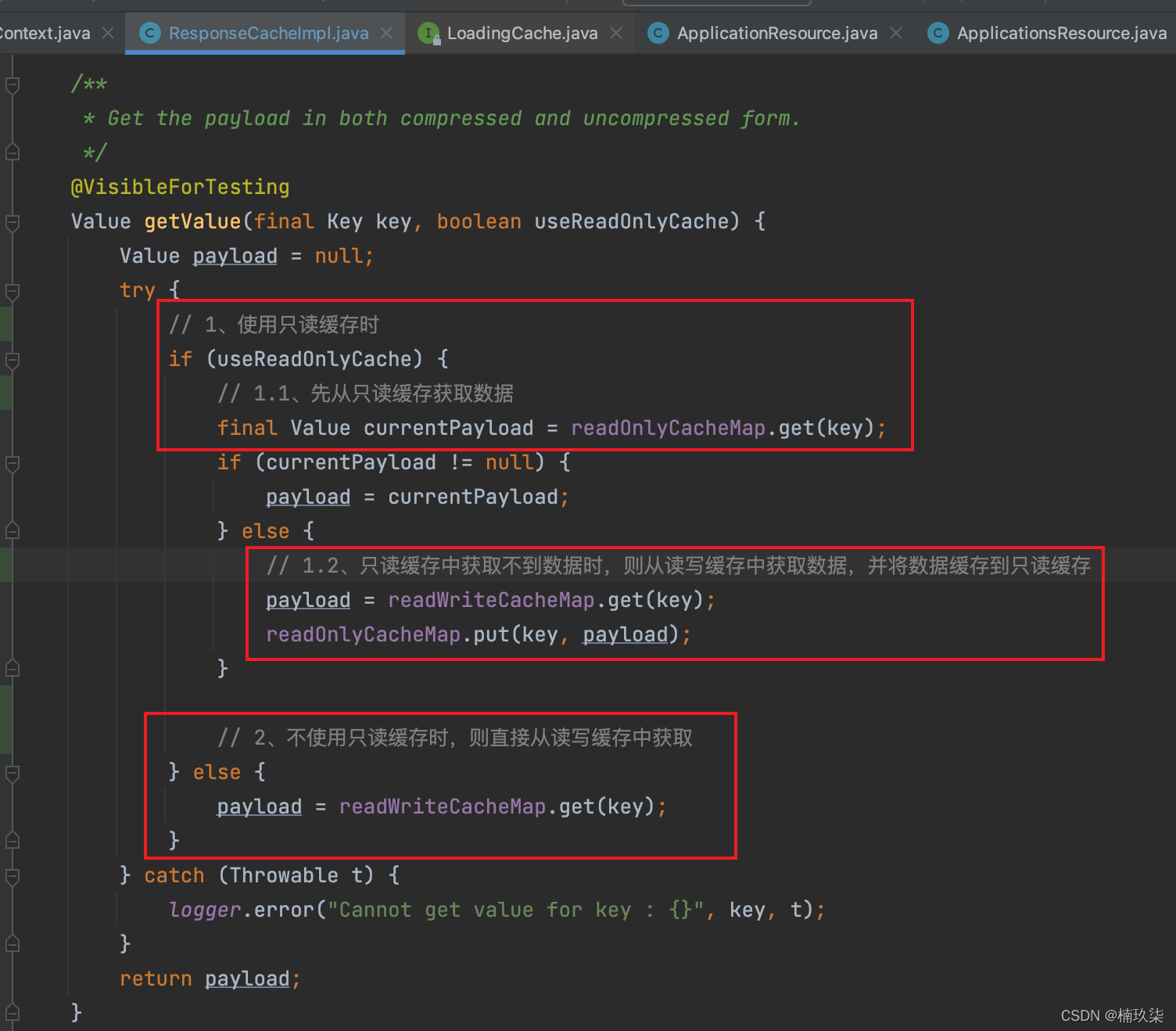

图文详述Eureka的缓存机制/三级缓存

XSS range intermediate bypass

【无标题】

【节能学院】推进农业水价综合改革的意见解读

第59章 ApplicationPart内置依赖注入中间件

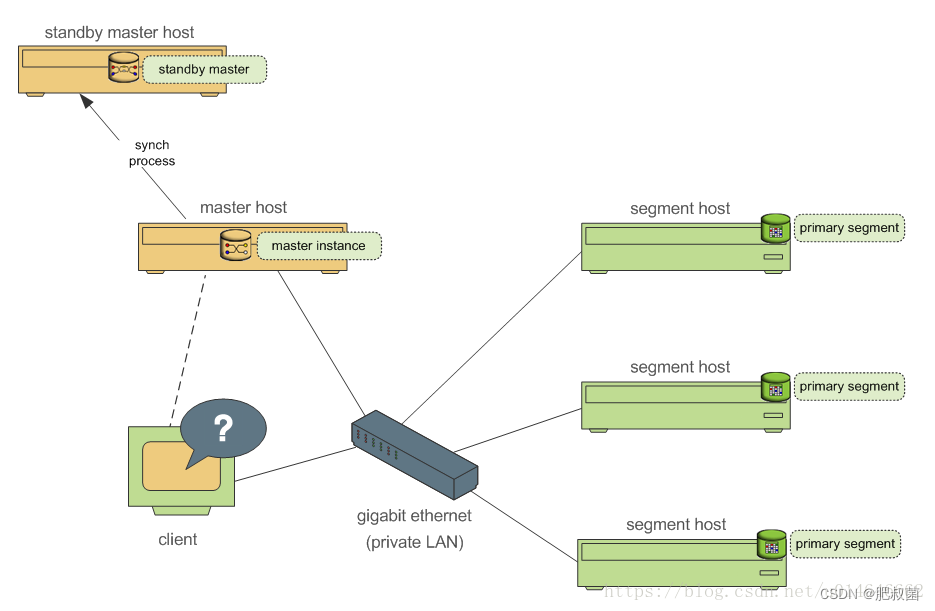

Greenplum Database Source Code Analysis - Analysis of Standby Master Operation Tools

研究生新同学,牛人看英文文献的经验,值得你收藏

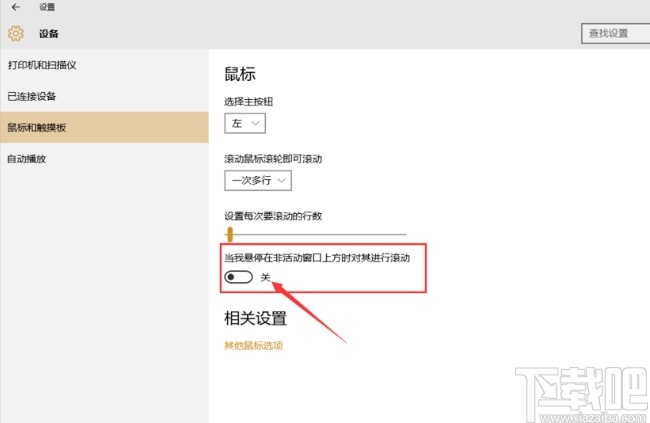

Win10, the middle mouse button cannot zoom in and out in proe/creo

![58: Chapter 5: Develop admin management services: 11: Develop [admin face login, interface]; (not measured) (using Ali AI face recognition) (demonstrated, using RestTemplate to implement interface cal](/img/ab/1c0adeb344329e28010b6ffda5389d.png)

58: Chapter 5: Develop admin management services: 11: Develop [admin face login, interface]; (not measured) (using Ali AI face recognition) (demonstrated, using RestTemplate to implement interface cal

【kali-信息收集】(1.2)SNMP枚举:Snmpwalk、Snmpcheck;SMTP枚举:smtp-user-enum

随机推荐

【ES】ES2021 我学不动了,这次只学 3 个。

卷积神经网络(CNN)mnist数字识别-Tensorflow

【kali-信息收集】(1.3)探测网络范围:DMitry(域名查询工具)、Scapy(跟踪路由工具)

经验共享|在线文档协作:企业文档处理的最佳选择

【节能学院】推进农业水价综合改革的意见解读

Risc-v Process Attack

油猴hook小脚本

洛谷 P2440 木材加工

数据可视化

环境变量,进程地址空间

57:第五章:开发admin管理服务:10:开发【从MongoDB的GridFS中,获取文件,接口】;(从GridFS中,获取文件的SOP)(不使用MongoDB的服务,可以排除其自动加载类)

Database Plus 的云上之旅:SphereEx 正式开源 ShardingSphere on Cloud 解决方案

Compose实战-实现一个带下拉加载更多功能的LazyColumn

CMake教程——Leeds_Garden

【七夕特别篇】七夕已至,让爱闪耀

八百客、销售易、纷享销客各行其道

【无标题】

【软考软件评测师】基于规则说明的测试技术下篇

regular expression

第59章 ApplicationPart内置依赖注入中间件