当前位置:网站首页>WGAN、WGAN-GP、BigGAN

WGAN、WGAN-GP、BigGAN

2022-07-27 10:01:00 【yfy2022yfy】

One 、WGAN summary

WGAN Address of thesis :https://arxiv.org/abs/1701.07875

In this paper , The author studied different measurement methods , To describe the distribution gap between the model generated samples and the confirmed samples , Or say , Different definitions of divergence , After comparison , Think EM It is more suitable for GAN Of , Then on EM The optimization method is defined , The key points of the article are as follows :

- In the second quarter , Analyze with comprehensive profit theory , Contrast EM(Earth Mover) distance , The probability distance from the previous popularity (log(p) form ) The performance of the .

- In the third quarter , Defined Wasserstein-GAN, Used reasonably 、 Efficiently minimize EM distance , The corresponding optimization problem is described theoretically .

- The fourth quarter, , Exhibition WGAN It's solved GAN The main training problems .WGAN There is no need to make sure before training , The network structure of discriminator and generator has been balanced . in addition , Pattern collapse is also mitigated .

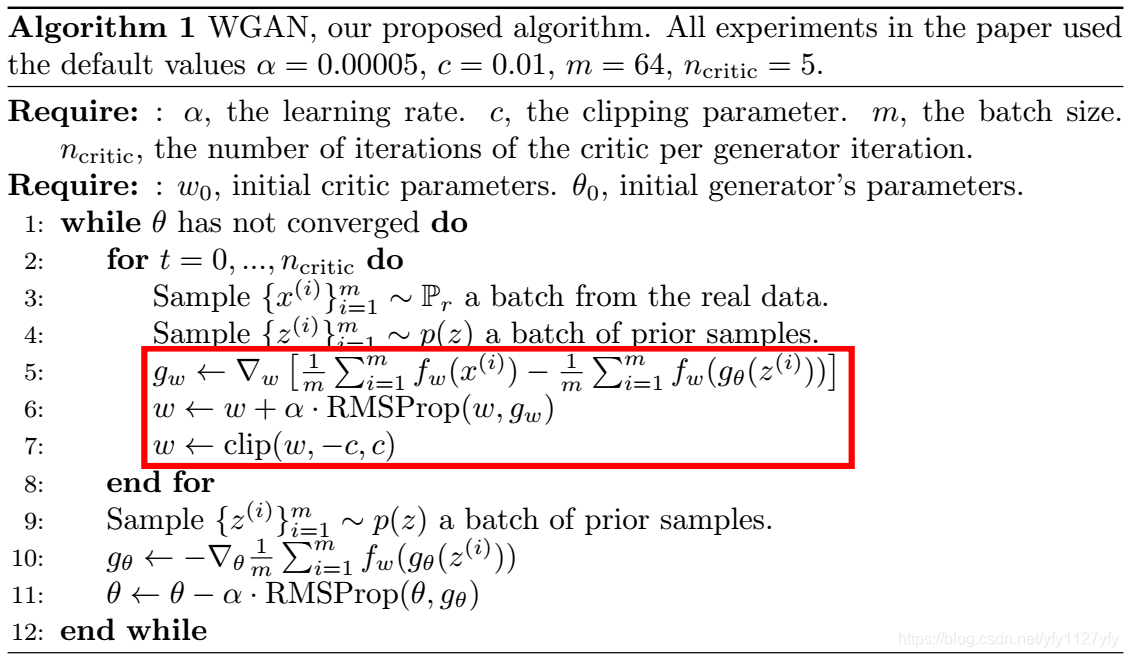

Whole WGAN The algorithm is as follows :

Two 、 WGAN-GP

WGAN-GP Address of thesis :https://arxiv.org/abs/1704.00028

The author of this paper , Find out WGAN Sometimes very poor samples will be produced , Or convergence fails . They found that the main reason came from the weight pruning of the discriminator (Weight Clipping), This book is used to strengthen Lipschitz Constrained . To improve the problem , Another weight pruning method is proposed —— For every input , Punish the gradient after regularization of the corresponding discriminator .

The main contents are as follows :

- 1、 On toy datasets , The problem caused by weight pruning of the discriminator is proved .

- 2、 Gradient penalty is proposed (WGAN-GP), Can solve 1 Problems in .

- 3、 We have confirmed the following progress brought by this improvement :(a). Can train stably with different GAN structure ;(b). Performance improvement higher than weight pruning ;(c). It can generate high-quality graphs ;(d). A character level that does not use discrete sampling GAN Language model .

WGAN -GP The algorithm is as follows , And WGAN Compare the , You can see the difference :

3、 ... and 、BigGAN

BigGAN Address of thesis : https://arxiv.org/abs/1809.11096

at present , From complex data sets ( Such as ImageNet) in , Generate high resolution 、 Diverse samples are still a problem . This paper is trying to train on large-scale pictures GAN, Study ways to improve stability . There is only one method mentioned in the summary —— Orthogonal regularization , After orthogonal regularization , By lowering the generator input z The variance of , It can balance the diversity and fidelity of generated samples . following D It's a discriminator ,G Generator .

Some recent papers aim to improve stability , One idea is to improve the objective function to promote convergence , The other is through constraints D Or regularization , To make up for the borderless loss The negative impact of function , Make sure D In any case, you can give G Provide gradient .

In the third quarter , The author explored the large size GAN How to train , Get big models and big batch Performance improvements .baseline Use SAGAN, to G Input additional classification information , The use of hinge loss function , The optimizer settings are G、D Consistent learning rate , Every two optimizations D Optimize once G. Details are in the annex of the paper C . Adopt two evaluation indexes :Inception Score (IS), The bigger the better ;Frechet Inception Distance (FID), The smaller the better. .

The study found the following improvements :

- batchsize Improve Eightfold , There is a significant improvement ,IS Improve your score 46%. The side effect is , After a few iterations , The generated graph has good details , however , Eventually become unstable , Generate mode collapse . The reasons will be discussed in Section 4 .

- Increased width ( The channel number )50%, The parameters have roughly doubled , This is IS Raised the appointment 21%. Guess is relative to complex data sets , Small model capacity is the bottleneck , Now the capacity is increased . Doubling the depth did not cause an increase at the beginning . This problem will be followed up BigGAN-deep Discussion in .

- Is offering to G In the message , Put the categories c Embedded in condition (conditional)BN Layers will contain a lot of weights . We used Shared embeddedness , Linearly project category information to each layer gains and bias in , This is much faster than using a separate layer for each embedding before .

- G Input noise z when , We start with simple z Initialization layer , Changed to use z Jump to the next layer . stay BigGan in , It's a z Divided into one resolution (?) One piece , Then each block and c Series connection (concatenating) get up . stay BigGan-deep in , It's even simpler , Put... Directly z and c Connected .

- truncation z vector , Resample values that exceed the set threshold , It will improve the quality of a single sample , But it will reduce the overall sample diversity , See the following figure .( Personal understanding , The smaller the threshold , After truncation z The more similar the distribution of . The smaller the input difference , The better the convergence , But the diversity is small ,). But when training large models , truncation z Vectors may cause some oversaturated data , The model cannot completely conform to truncation . To solve this problem , Orthogonal regularization is proposed , Make the model more consistent with truncation ,G Smoother , such z It can be well mapped to the output samples .

The formula of orthogonal regularization is as follows (ps In this paper, the normal distribution is written as N(0,i),i It should be a number defined by oneself ):

![]()

The author did a comparative experiment , The results are as follows , The improvements mentioned above are all reflected :

in addition , No 5 Point in point z Effect of truncation threshold , You can see in the figure below :

There are still some that I haven't seen , To be continued ...

边栏推荐

- Is Damon partgroupdef a custom object?

- Come on, chengxujun

- How to use tdengine sink connector?

- Shell函数、系统函数、basename [string / pathname] [suffix] 可以理解为取路径里的文件名称 、dirname 文件绝对路径、自定义函数

- Understand chisel language. 26. Chisel advanced input signal processing (II) -- majority voter filtering, function abstraction and asynchronous reset

- GO基础知识—数组和切片

- 深度剖析分库分表最强辅助Sharding Sphere

- 加油程序君

- Food safety | are you still eating fermented rice noodles? Be careful these foods are poisonous!

- 刷题《剑指Offer》day03

猜你喜欢

如何在树莓派上安装cpolar内网穿透

Understand chisel language. 26. Chisel advanced input signal processing (II) -- majority voter filtering, function abstraction and asynchronous reset

Food safety | the more you eat junk food, the more you want to eat it? Please keep this common food calorimeter

Looking for a job for 4 months, interviewing 15 companies and getting 3 offers

NFT系统开发-教程

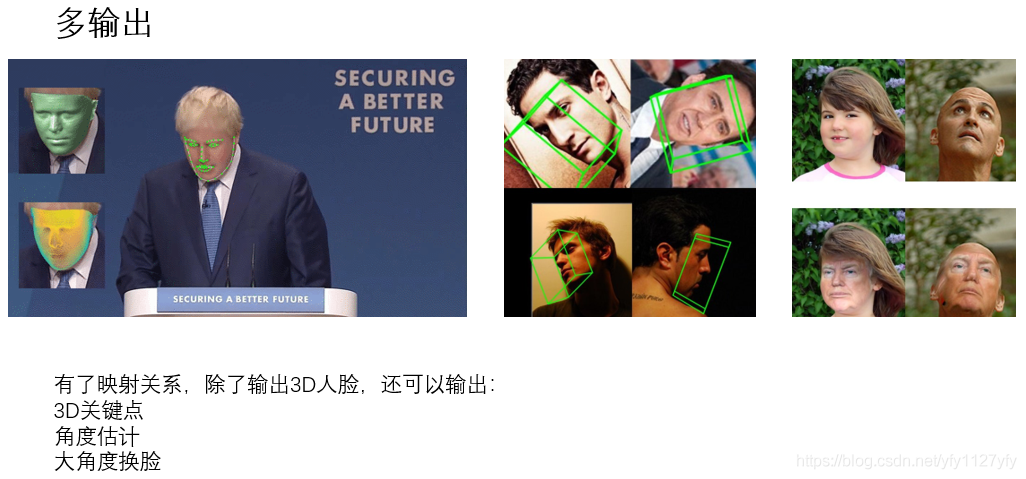

3D人脸重建:Joint 3D Face Reconstruction and Dense Alignment with position Map Regression Network

Shell流程控制(重点)、if 判断、case 语句、let用法、for 循环中有for (( 初始值;循环控制条件;变量变化 ))和for 变量 in 值 1 值 2 值 3… 、while 循环

![[scm] source code management - lock of perforce branch](/img/c6/daead474a64a9a3c86dd140c097be0.jpg)

[scm] source code management - lock of perforce branch

What happens if the MySQL disk is full? I really met you!

MOS drive in motor controller

随机推荐

并发之park与unpark说明

电机控制器中的MOS驱动

深度剖析分库分表最强辅助Sharding Sphere

After one year, the paper was finally accepted by the international summit

Interview JD T5, was pressed on the ground friction, who knows what I experienced?

如何使用TDengine Sink Connector?

Gbase 8A MPP cluster capacity expansion practice

食品安全 | 无糖是真的没有糖吗?这些真相要知道

[cloud native] how can I compete with this database?

XML overview

面试京东 T5,被按在地上摩擦,鬼知道我经历了什么?

Qt 学习(二) —— Qt Creator简单介绍

Shell函数、系统函数、basename [string / pathname] [suffix] 可以理解为取路径里的文件名称 、dirname 文件绝对路径、自定义函数

7/26 thinking +dp+ suffix array learning

Understand chisel language. 27. Chisel advanced finite state machine (I) -- basic finite state machine (Moore machine)

面试必备:虾皮服务端15连问

July training (day 24) - segment tree

NPM common commands

Review summary of engineering surveying examination

July training (day 23) - dictionary tree