[Cloud Native] 2.5 Kubernetes Core Practice (Part 3)

2022-07-02 11:17:00 【Program ape chase】

Hello everyone! Let's pick up where the previous two articles left off.

(I feel like I'm padding things out a little. This is the last article in this series and, just between us, there is a surprise in the next one.) OK, enough chatter, let's get started!

Series column: [Cloud Native Series]

Related articles in this series:

2.2 [Cloud Native] Creating a Cluster with kubeadm

2.3 [Cloud Native] Kubernetes Core Practice (Part 1)

2.4 [Cloud Native] Kubernetes Core Practice (Part 2)

Contents

One. Basic concepts and setting up the NFS environment

1. Set up a network file system

2. Mount NFS in a Deployment

Two. Using PV and PVC

1. Create a PV pool

Three. Extracting configuration with ConfigMap

Four. Secret usage example

One. Basic concepts and setting up the NFS environment

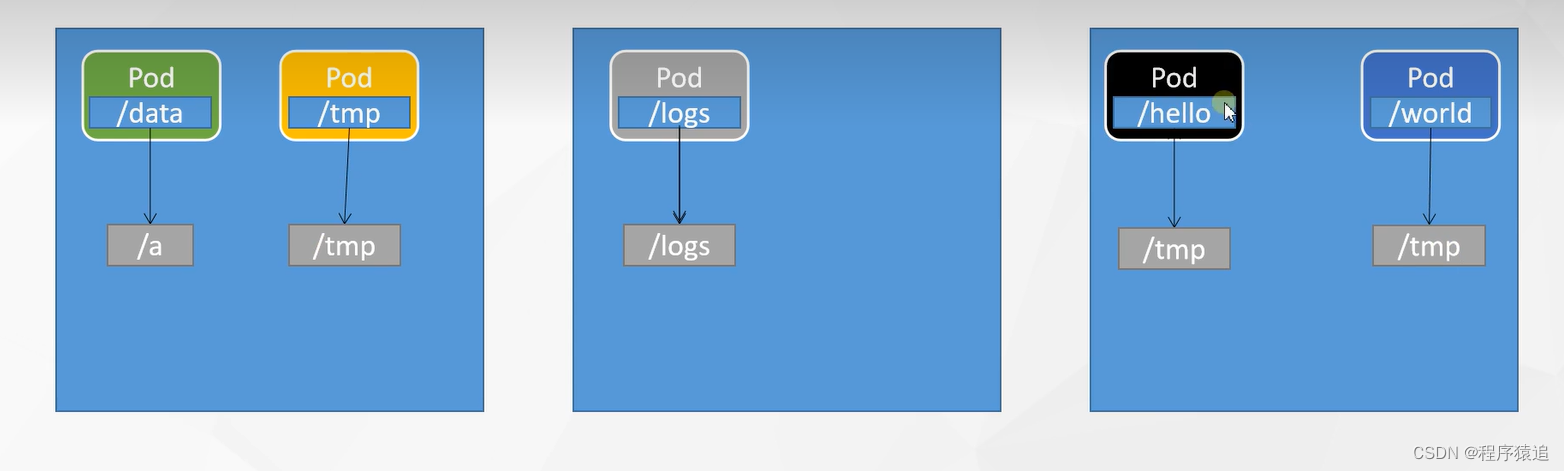

Look at the picture. Over time we end up with all kinds of Pods, and some of their data needs to be kept, and modified, outside the container. For example, we mount a Pod's /data directory onto /a on the node, and do the same for the other Pods.

Now suppose an application on node 3 goes down. It fails over: if self-healing has not succeeded after about 5 minutes, the Pod is moved to node 2. But will the data from node 3 be there on node 2? The answer is no. That is why we pull this data out into a separate layer, the storage layer.

1、 Build a network file system

To build , Everyone must install nfs

All machine installations

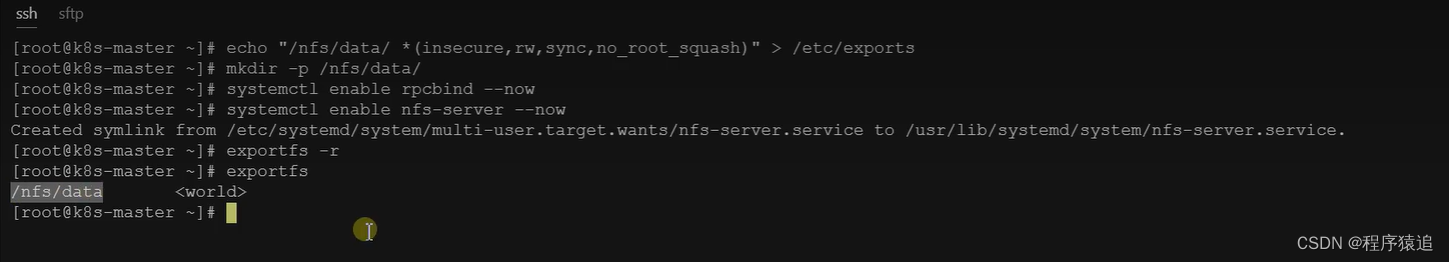

yum install -y nfs-utilsThen set up in the master node nfs Master node

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exportsmkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --nowCheck if the configuration works

exportfs -r

Execute the command to mount nfs Shared directory on the server to the local path /root/nfsmount

mkdir -p /nfs/data

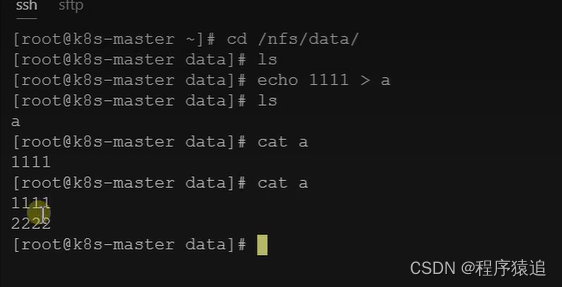

mount -t nfs 172.31.0.4:/nfs/data /nfs/dataWrite test file

echo "hello nfs server" > /nfs/data/test.txt

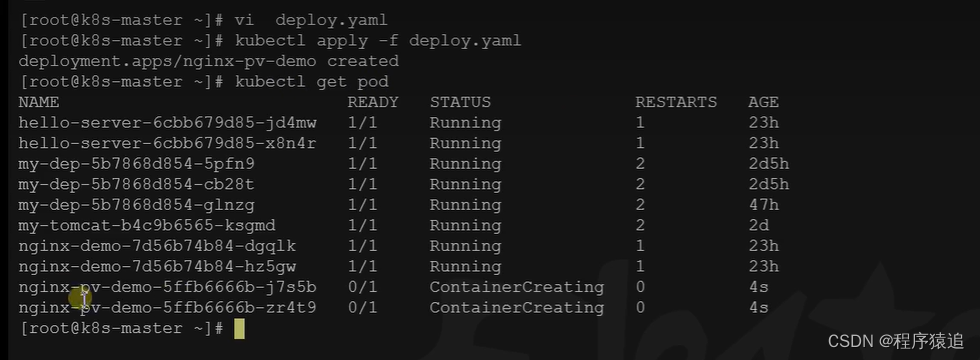

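2. Mount NFS in a Deployment

Apply the following manifest to test it. The directory /nfs/data/nginx-pv must already exist on the NFS server (mkdir -p /nfs/data/nginx-pv), otherwise the Pods cannot mount the volume.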

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-pv-demo
      name: nginx-pv-demo
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-pv-demo
      template:
        metadata:
          labels:
            app: nginx-pv-demo
        spec:
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
          volumes:
          - name: html
            nfs:
              server: 172.31.0.4
              path: /nfs/data/nginx-pv

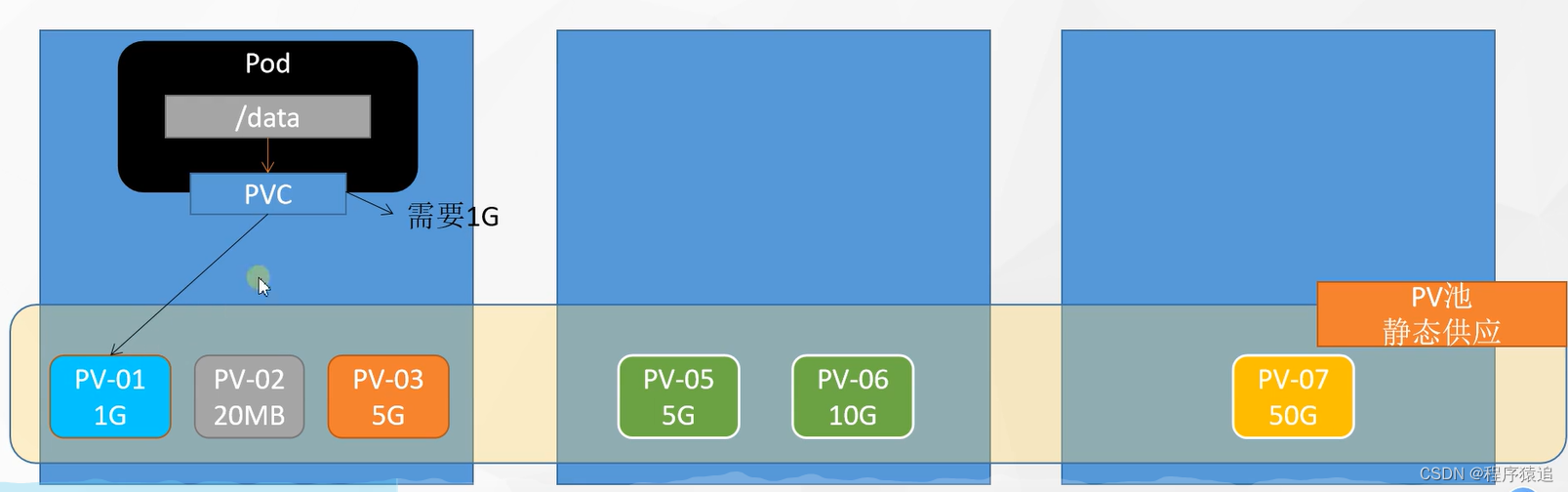

Two. Using PV and PVC

What is a PV? What is a PVC?

PV: Persistent Volume. It stores the data an application needs to persist at a specified location.

PVC: Persistent Volume Claim. It declares the specification of the persistent volume the application wants to use.

For example, suppose we need a 1 GB persistent volume (PV). The PVC is the request our Pod submits for that storage. Once the request has been matched with a PV of suitable capacity, the storage location is determined.

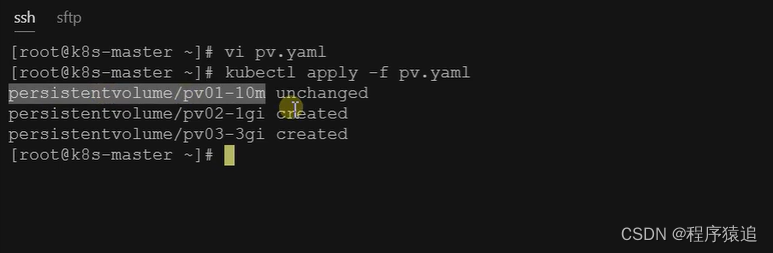

1. Create a PV pool

On the NFS master node:

    mkdir -p /nfs/data/01
    mkdir -p /nfs/data/02
    mkdir -p /nfs/data/03

Create the PVs

(Note: remember to change the server address to your own.)

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv01-10m
    spec:
      capacity:
        storage: 10M
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/01
        server: 172.31.0.4
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv02-1gi
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/02
        server: 172.31.0.4
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv03-3gi
    spec:
      capacity:
        storage: 3Gi
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/03
        server: 172.31.0.4

Once the PVs have been created, create the PVC.
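The article does not show the PVC manifest itself. A minimal sketch of what it could look like is given here, assuming the claim name `nginx-pvc` used by the Deployment in this section and the `nfs` storage class of the PVs above. The `200Mi` request size and the file name `pvc.yaml` are assumptions chosen for illustration. The `kubectl` lines are left as comments because they need access to a running cluster.

```shell
# Write a PVC manifest to pvc.yaml.
# Assumptions: the 200Mi request and the file name are illustrative only.
cat > pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs
EOF

# With cluster access, create the claim and check which PV it bound to:
#   kubectl apply -f pvc.yaml
#   kubectl get pvc,pv
```

With a request of this size, the claim would be expected to bind to pv02-1gi rather than pv01-10m, since 10M is too small to satisfy it and Kubernetes looks for a PV whose capacity, access mode, and storage class all match the claim.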

Create a Deployment whose Pods bind to the PVC:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-deploy-pvc
      name: nginx-deploy-pvc
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-deploy-pvc
      template:
        metadata:
          labels:
            app: nginx-deploy-pvc
        spec:
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
          volumes:
          - name: html
            persistentVolumeClaim:
              claimName: nginx-pvc

Three. Extracting configuration with ConfigMap

Here we mount a ConfigMap into the Pod as a file.

Purpose: extract the application's configuration from the image so that it can be updated automatically.
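The `kubectl create cm redis-conf --from-file=redis.conf` step in this section reads a `redis.conf` file from the current directory. A minimal sketch for preparing that file follows, assuming it holds only the single `appendonly yes` setting that appears in the ConfigMap in this section. The `kubectl` lines are left as comments because they need access to a running cluster.

```shell
# Create the Redis configuration file that --from-file will read.
printf 'appendonly yes\n' > redis.conf

# Show the content that becomes the value of the "redis.conf" key.
cat redis.conf

# With cluster access, create the ConfigMap and inspect the result:
#   kubectl create cm redis-conf --from-file=redis.conf
#   kubectl get cm redis-conf -o yaml
```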

Create the configuration. The Redis configuration is saved to etcd in k8s:

    kubectl create cm redis-conf --from-file=redis.conf

data holds the actual data. key: defaults to the file name. value: the content of the configuration file.

    apiVersion: v1
    data:
      redis.conf: |
        appendonly yes
    kind: ConfigMap
    metadata:
      name: redis-conf
      namespace: default

Create the Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: redis
    spec:
      containers:
      - name: redis
        image: redis
        command:
          - redis-server
          - "/redis-master/redis.conf"  # path of the config file inside the redis container
        ports:
        - containerPort: 6379
        volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-master
          name: config
      volumes:
        - name: data
          emptyDir: {}
        - name: config
          configMap:
            name: redis-conf
            items:
            - key: redis.conf
              path: redis.conf

Check the default configuration:

    kubectl exec -it redis -- redis-cli

    127.0.0.1:6379> CONFIG GET appendonly
    127.0.0.1:6379> CONFIG GET requirepass

Modify the ConfigMap:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: redis-conf
    data:
      redis.conf: |
        maxmemory 2mb
        maxmemory-policy allkeys-lru

Check whether the configuration has been updated:

    kubectl exec -it redis -- redis-cli

    127.0.0.1:6379> CONFIG GET maxmemory
    127.0.0.1:6379> CONFIG GET maxmemory-policy

Check whether the contents of the mounted file have been updated.

After the ConfigMap is modified, the configuration file inside the Pod changes as well.

However, the configuration values reported by Redis have not changed, because the Pod must be restarted before it picks up the updated values from the associated ConfigMap.

Reason: the middleware deployed in our Pod has no hot-reload capability.

Four. Secret usage example

The Secret object type is used to hold sensitive information such as passwords, tokens, and keys. Putting this information in a Secret is safer and more flexible than putting it in a Pod definition or a container image.
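One caveat is worth keeping in mind when reading "safer": by default the values in a Secret are base64-encoded, not encrypted, so anyone who can read the Secret can recover them. A minimal sketch of that round trip in plain shell follows. The value `s3cr3t-token` is made up for illustration and does not come from the article.

```shell
# Hypothetical sensitive value, for illustration only.
plain='s3cr3t-token'

# Secrets keep values base64-encoded under .data; encode the value the same way.
encoded=$(printf '%s' "$plain" | base64)

# Decoding needs no key, which shows base64 is an encoding, not encryption.
decoded=$(printf '%s' "$encoded" | base64 --decode)

echo "encoded: $encoded"
echo "decoded: $decoded"
```

Because of this, who is allowed to read Secrets in a namespace matters as much as where the values are stored.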

    kubectl create secret docker-registry leifengyang-docker \
      --docker-username=leifengyang \
      --docker-password=Lfy123456 \
      --docker-email=[email protected]

    ## Command format
    kubectl create secret docker-registry regcred \
      --docker-server=<your image registry server> \
      --docker-username=<your username> \
      --docker-password=<your password> \
      --docker-email=<your email address>

Reference the Secret from a Pod so that it can pull the private image:

    apiVersion: v1
    kind: Pod
    metadata:
      name: private-nginx
    spec:
      containers:
      - name: private-nginx
        image: leifengyang/guignginx:v1.0
      imagePullSecrets:
      - name: leifengyang-docker

Well, that wraps up our coverage of k8s. Next up is the KubeSphere part.

(Please follow along.) To be continued...

边栏推荐

- Mongodb learning and sorting (condition operator, $type operator, limit() method, skip() method and sort() method)

- What are the software product management systems? Inventory of 12 best product management tools

- Static variables in static function

- QT learning diary 7 - qmainwindow

- 数字化转型挂帅复产复工,线上线下全融合重建商业逻辑

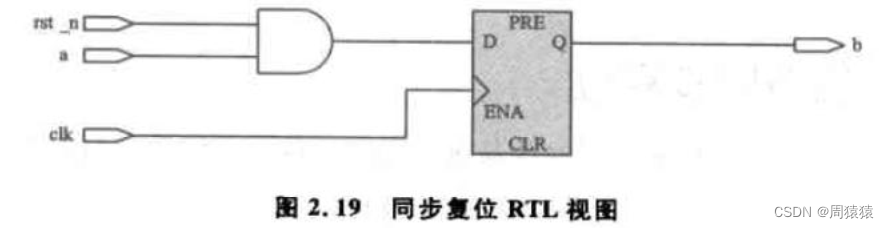

- [play with FPGA learning 2 in simple terms ----- design skills (basic grammar)]

- Native method merge word

- Luogu p1892 [boi2003] Gang (and search for variant anti set)

- V2x SIM dataset (Shanghai Jiaotong University & New York University)

- Special topic of binary tree -- acwing 3540 Binary search tree building (use the board to build a binary search tree and output the pre -, middle -, and post sequence traversal)

猜你喜欢

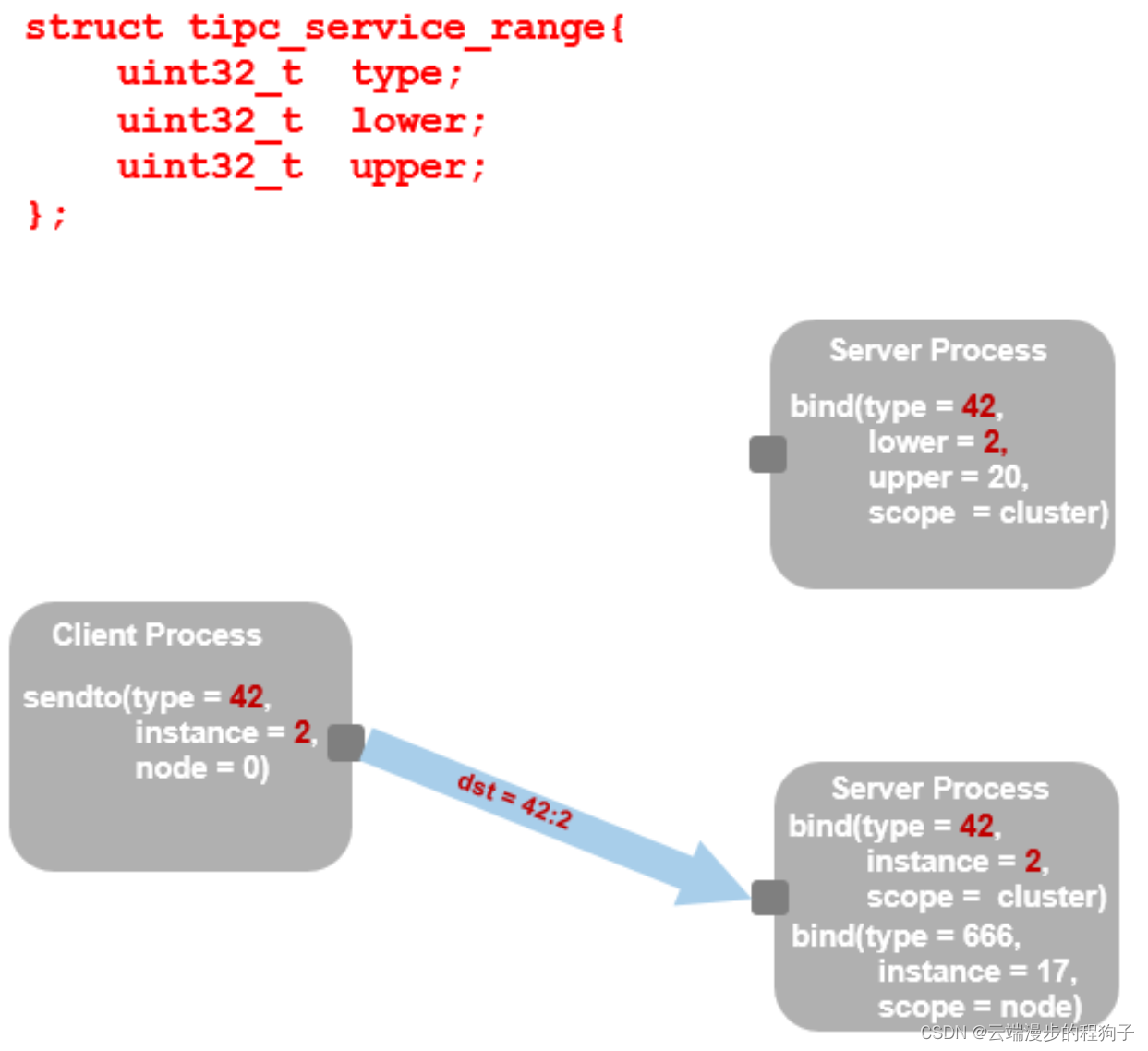

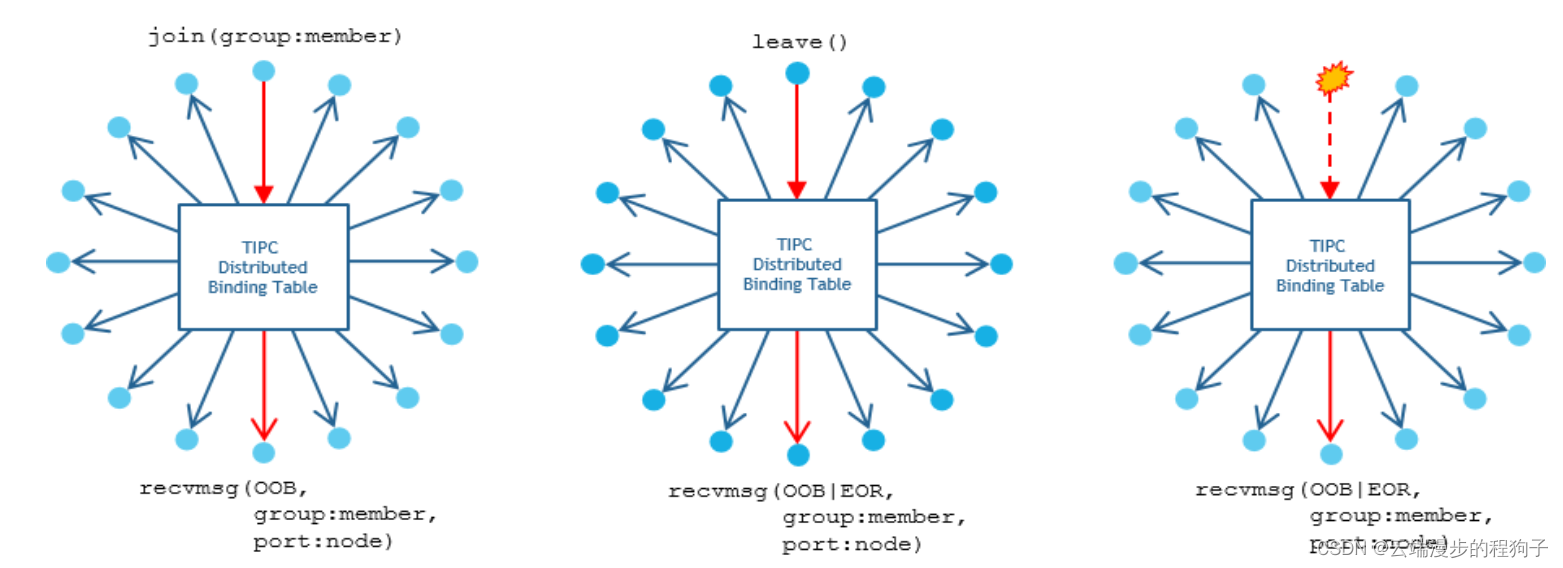

TIPC addressing 2

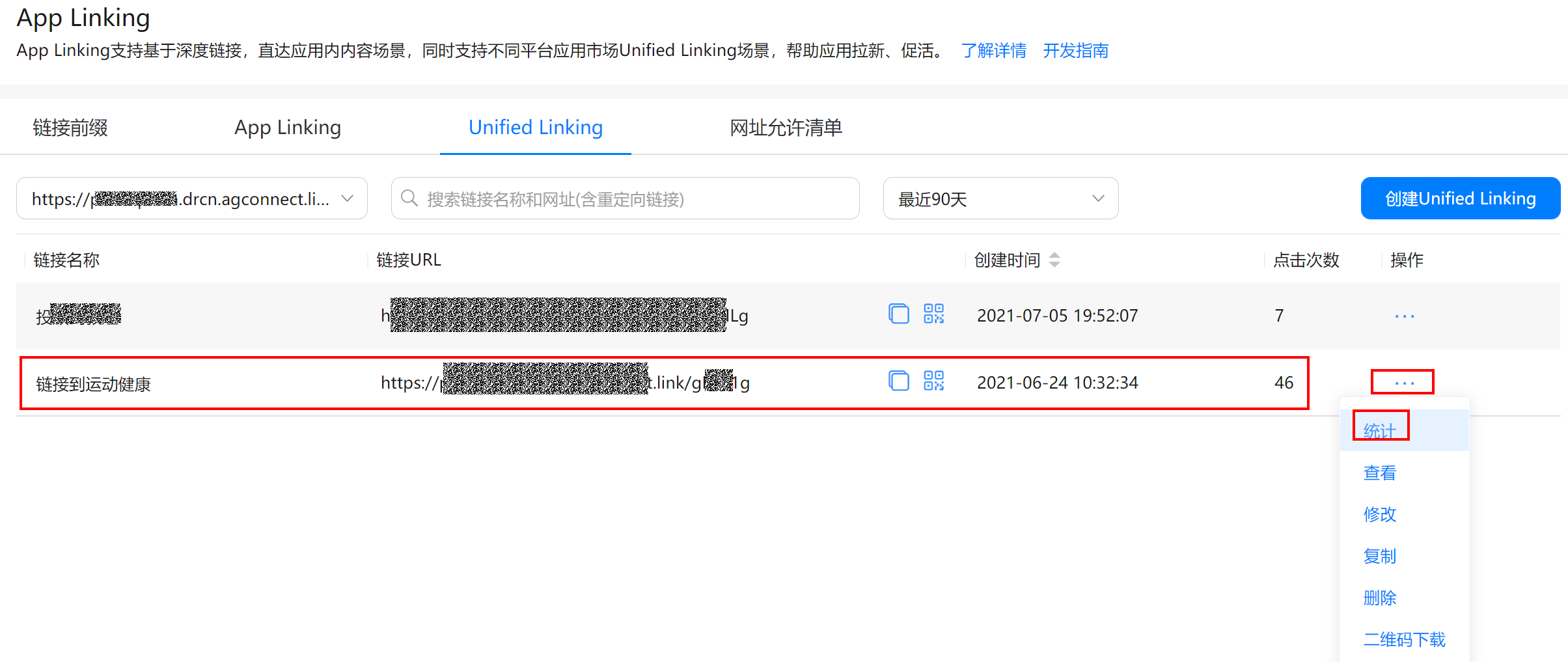

Creation and use of unified links in Huawei applinking

MTK full dump grab

TIPC Service and Topology Tracking4

Huawei game failed to initialize init with error code 907135000

PHP tea sales and shopping online store

解决uniapp列表快速滑动页面数据空白问题

TIPC Cluster5

ImportError: cannot import name ‘Digraph‘ from ‘graphviz‘

【深入浅出玩转FPGA学习5-----复位设计】

随机推荐

函数式接口和方法引用

V2x SIM dataset (Shanghai Jiaotong University & New York University)

Rest (XOR) position and thinking

Calculate the sum of sequences

Win11 arm system configuration Net core environment variable

C file and folder operation

接口调试工具概论

Regular and common formulas

Binary tree topic -- Luogu p3884 [jloi2009] binary tree problem (DFS for binary tree depth BFS for binary tree width Dijkstra for shortest path)

Resources reads 2D texture and converts it to PNG format

tidb-dm报警DM_sync_process_exists_with_error排查

Primary key policy problem

How to implement tabbar title bar with list component

SSRF

QT learning diary 7 - qmainwindow

The working day of the month is calculated from the 1st day of each month

TIPC Service and Topology Tracking4

每月1号开始计算当月工作日

The first white paper on agile practice in Chinese enterprises was released | complete download is attached

TIPC Cluster5