
Clickhouse 20.x distributed table testing and chproxy deployment (II)

2022-07-27 15:58:00 【51CTO】

Tags: clickhouse series

One: ClickHouse 20.x distributed table testing

1.1: Creating a ClickHouse distributed table

Prepare the test data, following the official documentation:

https://clickhouse.com/docs/en/getting-started/example-datasets/metrica

Download the file:

curl https://datasets.clickhouse.com/hits/tsv/hits_v1.tsv.xz | unxz --threads=`nproc` > hits_v1.tsv

# Validate the checksum

md5sum hits_v1.tsv

# Checksum should be equal to: f3631b6295bf06989c1437491f7592cb
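If you want to verify the download from a script instead of comparing `md5sum` output by eye, the same check can be written in Python. This is a minimal sketch. The expected digest is the one quoted above, and the helper names `md5_of_file` and `verify` are illustrative. The file is read in 1 MiB chunks because the uncompressed TSV is several GB.

```python
import hashlib

# Expected checksum for hits_v1.tsv, as quoted above.
EXPECTED_MD5 = "f3631b6295bf06989c1437491f7592cb"


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 of a file, reading it in chunks to keep memory use flat."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected=EXPECTED_MD5):
    """True if the file's MD5 matches the expected hex digest (case-insensitive)."""
    return md5_of_file(path) == expected.strip().lower()
```

Usage: `verify("hits_v1.tsv")` should return `True` for a complete, uncorrupted download.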


1.2: Create the database and distributed tables

Create the local table:

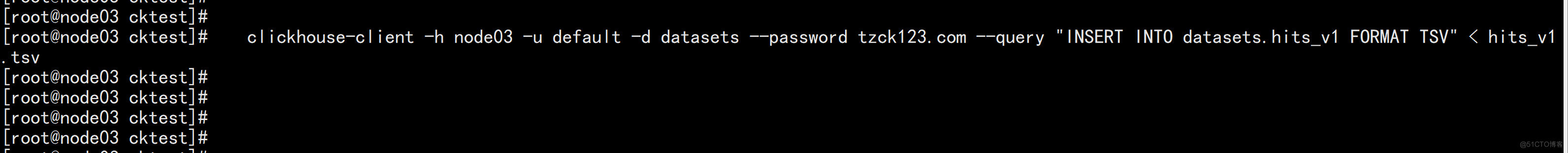

clickhouse-client -h node03 -u default --password tzck123.com

CREATE TABLE datasets.hits_v1 ON CLUSTER tzcluster3s2r02
(
    WatchID UInt64, JavaEnable UInt8, Title String, GoodEvent Int16, EventTime DateTime, EventDate Date, CounterID UInt32,
    ClientIP UInt32, ClientIP6 FixedString(16), RegionID UInt32, UserID UInt64, CounterClass Int8, OS UInt8, UserAgent UInt8,
    URL String, Referer String, URLDomain String, RefererDomain String, Refresh UInt8, IsRobot UInt8,
    RefererCategories Array(UInt16), URLCategories Array(UInt16), URLRegions Array(UInt32), RefererRegions Array(UInt32),
    ResolutionWidth UInt16, ResolutionHeight UInt16, ResolutionDepth UInt8, FlashMajor UInt8, FlashMinor UInt8, FlashMinor2 String,
    NetMajor UInt8, NetMinor UInt8, UserAgentMajor UInt16, UserAgentMinor FixedString(2), CookieEnable UInt8, JavascriptEnable UInt8,
    IsMobile UInt8, MobilePhone UInt8, MobilePhoneModel String, Params String, IPNetworkID UInt32, TraficSourceID Int8,
    SearchEngineID UInt16, SearchPhrase String, AdvEngineID UInt8, IsArtifical UInt8, WindowClientWidth UInt16, WindowClientHeight UInt16,
    ClientTimeZone Int16, ClientEventTime DateTime, SilverlightVersion1 UInt8, SilverlightVersion2 UInt8, SilverlightVersion3 UInt32, SilverlightVersion4 UInt16,
    PageCharset String, CodeVersion UInt32, IsLink UInt8, IsDownload UInt8, IsNotBounce UInt8, FUniqID UInt64, HID UInt32,
    IsOldCounter UInt8, IsEvent UInt8, IsParameter UInt8, DontCountHits UInt8, WithHash UInt8, HitColor FixedString(1), UTCEventTime DateTime,
    Age UInt8, Sex UInt8, Income UInt8, Interests UInt16, Robotness UInt8, GeneralInterests Array(UInt16), RemoteIP UInt32, RemoteIP6 FixedString(16),
    WindowName Int32, OpenerName Int32, HistoryLength Int16, BrowserLanguage FixedString(2), BrowserCountry FixedString(2),
    SocialNetwork String, SocialAction String, HTTPError UInt16, SendTiming Int32, DNSTiming Int32, ConnectTiming Int32,
    ResponseStartTiming Int32, ResponseEndTiming Int32, FetchTiming Int32, RedirectTiming Int32, DOMInteractiveTiming Int32,
    DOMContentLoadedTiming Int32, DOMCompleteTiming Int32, LoadEventStartTiming Int32, LoadEventEndTiming Int32,
    NSToDOMContentLoadedTiming Int32, FirstPaintTiming Int32, RedirectCount Int8, SocialSourceNetworkID UInt8, SocialSourcePage String,
    ParamPrice Int64, ParamOrderID String, ParamCurrency FixedString(3), ParamCurrencyID UInt16, GoalsReached Array(UInt32),
    OpenstatServiceName String, OpenstatCampaignID String, OpenstatAdID String, OpenstatSourceID String,
    UTMSource String, UTMMedium String, UTMCampaign String, UTMContent String, UTMTerm String, FromTag String, HasGCLID UInt8,
    RefererHash UInt64, URLHash UInt64, CLID UInt32, YCLID UInt64, ShareService String, ShareURL String, ShareTitle String,
    ParsedParams Nested(Key1 String, Key2 String, Key3 String, Key4 String, Key5 String, ValueDouble Float64),
    IslandID FixedString(16), RequestNum UInt32, RequestTry UInt8
)
ENGINE = ReplicatedReplacingMergeTree('/clickhouse/tables/{layer}-{shard}/datasets/hits_v1', '{replica}')
PARTITION BY toYYYYMM(EventDate)
ORDER BY (CounterID, EventDate, intHash32(UserID))
SAMPLE BY intHash32(UserID)
SETTINGS index_granularity = 8192
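ReplicatedReplacingMergeTree builds its ZooKeeper path from the `{layer}`, `{shard}` and `{replica}` macros that each server defines in its `<macros>` config section. Nodes whose path expands to the same string are replicas of one shard and replicate each other. The sketch below illustrates that expansion. The per-node macro values are hypothetical, since the article does not show its macros section; they were chosen so that node01 and node05 share a shard, matching the row counts reported below.

```python
import re

# Hypothetical <macros> values for a 3-shard x 2-replica layout (not from the article).
MACROS = {
    "node01": {"layer": "01", "shard": "01", "replica": "node01"},
    "node05": {"layer": "01", "shard": "01", "replica": "node05"},
    "node02": {"layer": "01", "shard": "02", "replica": "node02"},
    "node06": {"layer": "01", "shard": "02", "replica": "node06"},
    "node03": {"layer": "01", "shard": "03", "replica": "node03"},
    "node04": {"layer": "01", "shard": "03", "replica": "node04"},
}

# The two engine arguments used in the CREATE TABLE above.
ZK_PATH = "/clickhouse/tables/{layer}-{shard}/datasets/hits_v1"
REPLICA_NAME = "{replica}"


def expand(template, macros):
    """Replace each {name} with the node's macro value (KeyError if it is undefined)."""
    return re.sub(r"\{(\w+)\}", lambda m: macros[m.group(1)], template)


def replication_groups(macros_by_node):
    """Group replica names by expanded ZooKeeper path: one group per shard."""
    groups = {}
    for _node, macros in sorted(macros_by_node.items()):
        path = expand(ZK_PATH, macros)
        groups.setdefault(path, []).append(expand(REPLICA_NAME, macros))
    return groups


if __name__ == "__main__":
    for path, replicas in replication_groups(MACROS).items():
        print(path, replicas)
```

If two nodes that should be separate shards accidentally got the same `{shard}` value, they would land in the same group and replicate each other's data. This makes the macros worth checking whenever row counts look off.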


Query the local table hits_v1 on each node:

clickhouse-client -h node01 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1"

clickhouse-client -h node02 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1"

clickhouse-client -h node03 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1"

clickhouse-client -h node04 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1"

clickhouse-client -h node05 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1"

clickhouse-client -h node06 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1"


On node01 and node05, the local table hits_v1 holds 1674680 rows.

Next, check how many rows the distributed table hits_v1_all returns. It can be queried from any node:

clickhouse-client -h node01 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1_all"

clickhouse-client -h node02 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1_all"

clickhouse-client -h node03 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1_all"

clickhouse-client -h node04 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1_all"

clickhouse-client -h node05 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1_all"

clickhouse-client -h node06 -u default -d datasets --password tzck123.com --query "select count(1) from datasets.hits_v1_all"


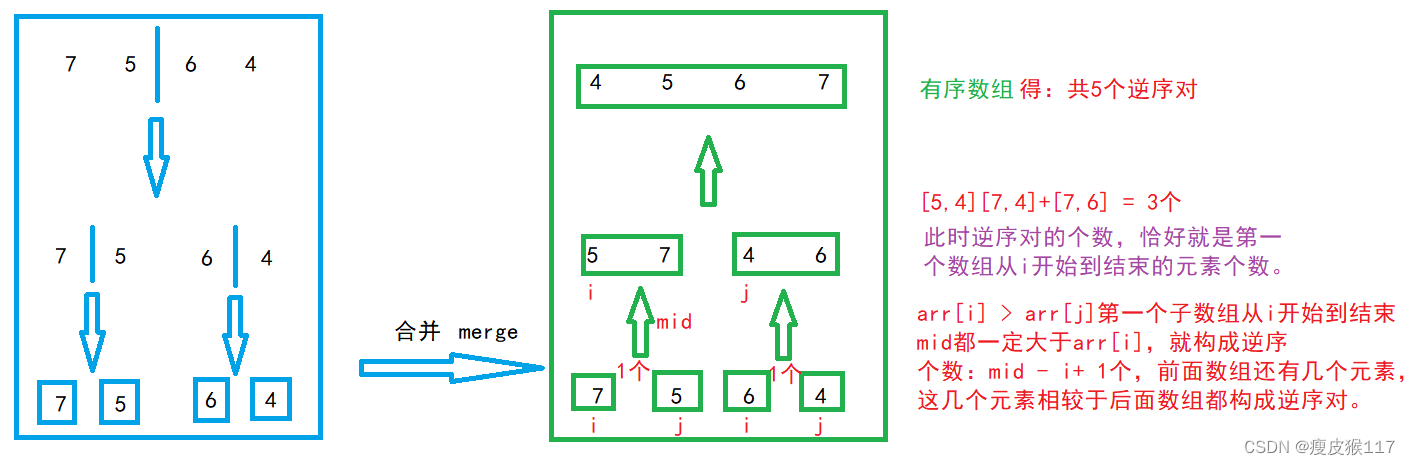

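The distributed table returns 5024040 rows, exactly 3 times 1674680, because the cluster has 3 shards with 2 replicas each: a query on the Distributed table reads one replica from each of the 3 shards and adds up the results.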
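The arithmetic can be made concrete with a small model. This is a sketch, not ClickHouse code. It assumes every shard holds 1674680 rows, which is what the 3x result implies. The dictionary layout and function names are illustrative. It also shows why adding up every local table in the cluster would overcount: each row exists once per replica.

```python
# Hypothetical per-local-table row counts, keyed by shard and then replica.
# Assumes each shard holds 1674680 rows, as the 3x result implies.
ROWS = {
    1: {"replica_a": 1674680, "replica_b": 1674680},
    2: {"replica_a": 1674680, "replica_b": 1674680},
    3: {"replica_a": 1674680, "replica_b": 1674680},
}


def distributed_count(rows_by_shard):
    """Model count() through a Distributed table: one replica per shard, then summed."""
    total = 0
    for replicas in rows_by_shard.values():
        # Replicas of a shard hold the same data, so a single one suffices;
        # this model simply takes the first.
        total += next(iter(replicas.values()))
    return total


def naive_sum_of_all_replicas(rows_by_shard):
    """What you would (wrongly) get by adding up every local table in the cluster."""
    return sum(sum(r.values()) for r in rows_by_shard.values())


if __name__ == "__main__":
    print(distributed_count(ROWS))          # 5024040
    print(naive_sum_of_all_replicas(ROWS))  # 10048080
```

In a real cluster, which replica serves each shard is governed by the `load_balancing` setting. The total is the same either way, as long as the replicas are in sync.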

Based on these tests and the way the cluster behaves, a practical pattern for production ClickHouse is to write to the local tables and read from the distributed table.

For upper-layer load balancing, OpenResty can act as a TCP proxy in front of port 8123 on the ClickHouse nodes.


Two: Using OpenResty to load-balance ClickHouse

OpenResty installation is omitted here; see flyfish's article: https://blog.51cto.com/flyfish225/3108573

OpenResty must be built with the --with-stream module so that it can proxy TCP traffic.

Below is an OpenResty configuration file for reference:

cd /usr/local/openresty/nginx/conf

vim nginx.conf

-----
#user  nobody;
worker_processes  8;

error_log  /usr/local/openresty/nginx/logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

stream {
    log_format proxy '$remote_addr [$time_local] '
                     '$protocol $status $bytes_sent $bytes_received '
                     '$session_time "$upstream_addr" '
                     '"$upstream_bytes_sent" "$upstream_bytes_received" "$upstream_connect_time"';

    access_log /usr/local/openresty/nginx/logs/tcp-access.log proxy;
    open_log_file_cache off;

    include /usr/local/openresty/nginx/conf/conf.d/*.stream;
}

http {
    include       mime.types;
    default_type  application/octet-stream;

    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /usr/local/openresty/nginx/logs/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  60;

    gzip  on;

    server {
        listen       18080;
        server_name  localhost;

        #charset koi8-r;
        #access_log  logs/host.access.log  main;

        location / {
            root   html;
            index  index.html index.htm;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }
}
-----

The key part here is the stream block: it enables OpenResty's TCP proxy module and loads the *.stream files from conf.d.


cd /usr/local/openresty/nginx/conf/conf.d

vim ck_prod.stream

----
upstream ck {
    server 192.168.100.142:8123 weight=25 max_fails=3 fail_timeout=60s;
    server 192.168.100.143:8123 weight=25 max_fails=3 fail_timeout=60s;
    server 192.168.100.144:8123 weight=25 max_fails=3 fail_timeout=60s;
    server 192.168.100.145:8123 weight=25 max_fails=3 fail_timeout=60s;
    server 192.168.100.146:8123 weight=25 max_fails=3 fail_timeout=60s;
}

server {
    listen 18123;
    proxy_pass ck;
}
----
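With the same `weight=25` on every server, this upstream simply rotates new TCP connections across the five nodes. Because this is a stream (TCP) proxy, the unit being balanced is the connection, not the individual HTTP query. The sketch below models the smooth weighted round-robin that nginx uses for weighted upstreams, to show how the weights shape the rotation. It is a simplification. It ignores `max_fails`/`fail_timeout` (after 3 failed attempts within 60s a server is treated as unavailable for 60s) and the effective-weight adjustments nginx makes after errors. `Peer` and `pick` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    addr: str
    weight: int
    current: int = 0  # running score used by smooth weighted round-robin


def pick(peers):
    """Choose the next peer: raise every score by its weight, take the highest,
    then lower the winner's score by the sum of all weights."""
    total = 0
    best = None
    for peer in peers:
        peer.current += peer.weight
        total += peer.weight
        # Strict '>' keeps the earliest peer when scores tie.
        if best is None or peer.current > best.current:
            best = peer
    best.current -= total
    return best.addr


# The ck upstream from ck_prod.stream: five servers, weight=25 each.
UPSTREAM_CK = [Peer(f"192.168.100.{i}:8123", 25) for i in range(142, 147)]

if __name__ == "__main__":
    for _ in range(10):
        print(pick(UPSTREAM_CK))
```

With equal weights, the output cycles .142, .143, .144, .145, .146 and repeats. With unequal weights such as 5/1/1 for servers a/b/c, the same function yields a, a, b, a, c, a, a. The heavy server's turns are interleaved rather than sent five in a row, which is the point of the "smooth" variant.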


Three: About the chproxy proxy

3.1 Introduction to chproxy:

chproxy: a powerful HTTP proxy and load balancer for ClickHouse

chproxy is an HTTP proxy and load balancer for ClickHouse written in Go, with a rich feature set. It is configured with YAML and is a good tool for routing traffic across multiple clusters.

GitHub:

https://github.com/Vertamedia/chproxy

Official website:

https://www.chproxy.org/cn


3.2 Deploying chproxy:

Configure the chproxy proxy:

mkdir /etc/chproxy/

cd /etc/chproxy/

vim chproxy.yml

-----------------
server:
  http:
    listen_addr: ":19000"
    allowed_networks: ["192.168.100.0/24", "192.168.120.0/24"]

users:
  - name: "distributed-write"
    to_cluster: "distributed-write"
    to_user: "default"

  - name: "replica-write"
    to_cluster: "replica-write"
    to_user: "default"

  - name: "distributed-read"
    to_cluster: "distributed-read"
    to_user: "default"
    max_concurrent_queries: 6
    max_execution_time: 1m

clusters:
  - name: "replica-write"
    replicas:
      - name: "replica"
        nodes: ["node01:8123", "node02:8123", "node03:8123", "node04:8123", "node05:8123", "node06:8123"]
    users:
      - name: "default"
        password: "tzck123.com"

  - name: "distributed-write"
    nodes: ["node01:8123", "node02:8123", "node03:8123", "node04:8123", "node05:8123", "node06:8123"]
    users:
      - name: "default"
        password: "tzck123.com"

  - name: "distributed-read"
    nodes: ["node01:8123", "node02:8123", "node03:8123", "node04:8123", "node05:8123", "node06:8123"]
    users:
      - name: "default"
        password: "tzck123.com"

caches:
  - name: "shortterm"
    dir: "/etc/chproxy/cache/shortterm"
    max_size: 150Mb
    expire: 130s
-----------------
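The `users` and `clusters` sections form a two-level mapping. The user a client presents to chproxy (for example `distributed-read`) selects a cluster via `to_cluster` and a ClickHouse account via `to_user`, whose password comes from that cluster's own `users` list. The chproxy README describes node selection within a cluster as "least loaded + round robin". The sketch below models that idea in simplified form. It is not chproxy's code: `Balancer`, `route` and the `active` counter are hypothetical. The `replicas` grouping of `replica-write` is flattened to a plain node list, and limits such as `max_concurrent_queries` are not modeled.

```python
# Mirrors the users section of chproxy.yml above (passwords deliberately left out).
USERS = {
    "distributed-write": {"to_cluster": "distributed-write", "to_user": "default"},
    "replica-write": {"to_cluster": "replica-write", "to_user": "default"},
    "distributed-read": {"to_cluster": "distributed-read", "to_user": "default"},
}

# All three clusters in chproxy.yml point at the same six nodes.
NODES = [f"node0{i}:8123" for i in range(1, 7)]


class Balancer:
    """Hypothetical 'least loaded + round robin' node picker (not chproxy's code)."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.active = {node: 0 for node in self.nodes}  # in-flight queries per node
        self.cursor = 0  # where the next scan starts

    def pick(self):
        n = len(self.nodes)
        # Scan starting at the cursor, so equally loaded nodes take turns.
        order = [self.nodes[(self.cursor + i) % n] for i in range(n)]
        self.cursor = (self.cursor + 1) % n
        # min() returns the first node with the lowest load in scan order.
        return min(order, key=lambda node: self.active[node])


def route(proxy_user, balancers):
    """Map a chproxy user to (cluster, ClickHouse user, node)."""
    cfg = USERS.get(proxy_user)
    if cfg is None:
        raise PermissionError(f"unknown chproxy user: {proxy_user}")
    node = balancers[cfg["to_cluster"]].pick()
    return cfg["to_cluster"], cfg["to_user"], node


if __name__ == "__main__":
    balancers = {name: Balancer(NODES) for name in USERS}
    for _ in range(3):
        print(route("distributed-read", balancers))
```

In this model, a caller would increment `active[node]` when a query starts and decrement it when the query finishes. A busy node is then skipped until it catches up, while idle nodes keep rotating.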

Startup script:

vim chproxy.sh

-------

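#!/bin/bash
cd /etc/chproxy
# Stop a running chproxy instance, if any. pkill -f matches the full command line,
# so it will not hit this script (./chproxy.sh) the way "ps | grep chproxy" can.
pkill -f '/usr/bin/chproxy -config' || true
nohup /usr/bin/chproxy -config=/etc/chproxy/chproxy.yml >> ./chproxy.out 2>&1 &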

-----

chmod +x chproxy.sh

./chproxy.sh


Test chproxy

Query directly on a node:

echo 'select * from system.clusters' | curl 'http://localhost:8123/?user=default&password=tzck123.com' --data-binary @-

Query through the chproxy proxy:

echo 'select * from system.clusters' | curl 'http://192.168.100.120:19000/?user=distributed-read&password=' --data-binary @-

echo 'select * from system.clusters' | curl 'http://192.168.100.120:19000/?user=distributed-write&password=' --data-binary @-
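The same test can be scripted. The sketch below uses only Python's standard library and sends the query as the body of a POST request, which is what `curl --data-binary @-` does. The chproxy address and user names come from the commands above. `build_query` and `run` are illustrative helper names.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# chproxy listen address used in the tests above.
CHPROXY_URL = "http://192.168.100.120:19000/"


def build_query(sql, user, password="", base_url=CHPROXY_URL):
    """Build a POST request equivalent to:
    echo SQL | curl 'BASE_URL?user=USER&password=PASSWORD' --data-binary @-
    """
    url = base_url + "?" + urlencode({"user": user, "password": password})
    return Request(url, data=sql.encode("utf-8"), method="POST")


def run(sql, user, password="", timeout=10):
    """Send the query through chproxy and return the response body as text."""
    with urlopen(build_query(sql, user, password), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Usage, from a host inside one of the `allowed_networks`: `print(run("select * from system.clusters", "distributed-read"))`. If chproxy rejects the request (for example, an unknown user or a disallowed source network), `urlopen` raises `urllib.error.HTTPError`.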
