CVPR 2022 | ManiTrans: Text-Guided Entity-Level Image Manipulation

2022-06-11 11:42:00 [Zhiyuan Community]

This post introduces ManiTrans: Entity-Level Text-Guided Image Manipulation via Token-wise Semantic Alignment and Generation, a joint work by Yanwei Fu's research group at Fudan University and Huawei Noah's Ark Lab. The paper was accepted to CVPR 2022 as an oral presentation.

Authors: Jianan Wang, Guansong Lu, Hang Xu*, Zhenguo Li, Chunjing Xu and Yanwei Fu*

arXiv: https://arxiv.org/abs/2204.04428

Project page: https://jawang19.github.io/manitrans/

Introduction

OpenAI recently released DALL-E 2 (https://openai.com/dall-e-2/), which has drawn wide attention in academia and industry. DALL-E 2 understands a given image well and can make entity-level edits to it based on text. In a similar vein, this post introduces our CVPR 2022 work, which also focuses on text-guided entity-level image manipulation. Inspired by DALL-E [1] and VQGAN [2], we propose ManiTrans, a new framework built on the two-stage image generation approach. ManiTrans can edit the appearance of an entity, generate a new entity that matches the text guidance, and operate on multiple entities at once.

Method

ManiTrans framework

ManiTrans is a two-stage framework consisting of (1) an image autoencoder and (2) a Transformer that models the joint distribution of text and image tokens.

(1) The image autoencoder learns three components: an encoder, a decoder, and an image embedding (codebook). The encoder first downsamples the input image into a feature map; the codebook then quantizes the downsampled feature map; finally, the decoder regenerates the image from the quantized feature map.
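The quantization step can be illustrated with a minimal pure-Python sketch. It assumes the encoder has already produced an H x W grid of D-dimensional feature vectors and that the codebook is a plain list of vectors; all function names are hypothetical, and the real encoder and decoder are neural networks that are not shown. Each feature vector is replaced by the index of its nearest codebook entry (squared Euclidean distance), and the index grid read in raster order is the kind of image token sequence that stage (2) consumes.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_code(vec: Vector, codebook: Sequence[Vector]) -> int:
    """Index of the codebook entry closest to `vec` in squared Euclidean distance."""
    best_idx, best_dist = 0, math.inf
    for idx, code in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(vec, code))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def quantize(feature_map: Sequence[Sequence[Vector]], codebook: Sequence[Vector]) -> List[List[int]]:
    """Replace every vector of an H x W feature map with its codebook index."""
    return [[nearest_code(v, codebook) for v in row] for row in feature_map]


def dequantize(indices: Sequence[Sequence[int]], codebook: Sequence[Vector]) -> List[List[List[float]]]:
    """Look the indices back up; a decoder network would turn this grid into pixels."""
    return [[list(codebook[i]) for i in row] for row in indices]


def to_sequence(indices: Sequence[Sequence[int]]) -> List[int]:
    """Flatten the index grid in raster (row-major) order."""
    return [i for row in indices for i in row]


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
    feature_map = [[[0.1, -0.1], [0.9, 1.2]],
                   [[0.2, 0.8], [1.0, 0.9]]]
    grid = quantize(feature_map, codebook)
    print(grid)               # [[0, 1], [2, 1]]
    print(to_sequence(grid))  # [0, 1, 2, 1]
```

A linear scan over the codebook keeps the sketch readable; a practical implementation would compute all distances at once with a tensor library.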

(2) The Transformer is an autoregressive model: it takes the text token sequence followed by the quantized image index sequence as input and predicts the next element of the sequence. For this stage of training, we design a semantic alignment loss, both to help the Transformer better capture the correspondence between text and image and to account for how the decoder from (1) decodes the generated images.

The semantic alignment loss aims to maximize the similarity between the text and the generated image.
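The two training ingredients of stage (2) can be sketched as follows, under explicit assumptions. `build_training_pair` shows the standard next-token setup for an autoregressive model: text tokens and image indices are concatenated (image indices are offset by the text vocabulary size, an assumed shared-id convention) and the targets are the inputs shifted by one position. `alignment_loss` is a hypothetical stand-in for the semantic alignment loss: it assumes the text and the generated image have already been embedded in a shared space and penalizes low cosine similarity. It is not necessarily the exact formulation used in the paper.

```python
import math
from typing import List, Sequence, Tuple


def build_training_pair(text_ids: Sequence[int], image_ids: Sequence[int],
                        text_vocab_size: int) -> Tuple[List[int], List[int]]:
    """Concatenate text and image tokens, then shift by one position so that
    the model is trained to predict token t+1 from tokens 0..t."""
    # Assumed convention: image codes are placed after the text vocabulary in one id space.
    sequence = list(text_ids) + [text_vocab_size + i for i in image_ids]
    return sequence[:-1], sequence[1:]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def alignment_loss(text_emb: Sequence[float], image_emb: Sequence[float]) -> float:
    """Hypothetical alignment loss: 0 when the embeddings point the same way,
    up to 2 when they point in opposite directions."""
    return 1.0 - cosine_similarity(text_emb, image_emb)


if __name__ == "__main__":
    inputs, targets = build_training_pair([5, 7], [0, 2], text_vocab_size=100)
    print(inputs, targets)                         # [5, 7, 100] [7, 100, 102]
    print(alignment_loss([1.0, 0.0], [0.0, 1.0]))  # 1.0
```

Minimizing `1 - cos` is equivalent to maximizing the similarity described above, which is why this form is used for the illustration.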

Manipulating an entity in an image requires three inputs: one visual input, the original image (image), and two language inputs, the entity to be modified (prompt) and the target text (text). The procedure is as follows:

(a) Segment the entities in the original image;

(b) Based on the similarity between the prompt and each image entity, locate the entity to be modified in the image and the corresponding positions in the index sequence;

(c) Conditioned on the target text, re-predict the image indices that need to change, i.e., the indices determined in (b). When only the entity's appearance needs to change, a grayscale version of the original image is added as an extra condition, providing prior information about the structure of the original entity.
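Steps (b) and (c) can be sketched in pure Python. Everything here is an assumption for illustration: the prompt and the entity segments are represented by precomputed, L2-normalized embedding vectors, the selected entity's binary mask is at pixel resolution, the autoencoder downsamples by an integer `factor`, and `predict_next` is a hypothetical callable standing in for the trained Transformer. Only token positions covered by the entity are re-predicted, left to right; all other tokens are copied from the original image. The grayscale condition for appearance-only edits is not modeled.

```python
from typing import Callable, List, Sequence

# Hypothetical model interface: (text token ids, image token prefix) -> next image token.
Predictor = Callable[[List[int], List[int]], int]


def pick_entity(prompt_emb: Sequence[float], segment_embs: Sequence[Sequence[float]]) -> int:
    """Step (b): index of the segment whose embedding best matches the prompt
    (dot product; assumes embeddings are already L2-normalized)."""
    scores = [sum(p * s for p, s in zip(prompt_emb, emb)) for emb in segment_embs]
    return max(range(len(scores)), key=scores.__getitem__)


def mask_to_token_positions(mask: Sequence[Sequence[int]], factor: int) -> List[int]:
    """Step (b): map a pixel-level mask (H x W) to raster-order token positions.
    A token cell is selected if any pixel inside its factor x factor block is set."""
    grid_h, grid_w = len(mask) // factor, len(mask[0]) // factor
    positions = []
    for gy in range(grid_h):
        for gx in range(grid_w):
            block = (mask[y][x]
                     for y in range(gy * factor, (gy + 1) * factor)
                     for x in range(gx * factor, (gx + 1) * factor))
            if any(block):
                positions.append(gy * grid_w + gx)
    return positions


def repredict(image_tokens: Sequence[int], positions: Sequence[int],
              text_ids: List[int], predict_next: Predictor) -> List[int]:
    """Step (c): regenerate only the selected tokens, each conditioned on the
    target text and every token before it; other tokens are kept unchanged."""
    tokens = list(image_tokens)
    selected = set(positions)
    for i in range(len(tokens)):
        if i in selected:
            tokens[i] = predict_next(text_ids, tokens[:i])
    return tokens


if __name__ == "__main__":
    mask = [[0, 0, 1, 1],
            [0, 0, 1, 1],
            [0, 0, 0, 0],
            [0, 0, 0, 0]]
    positions = mask_to_token_positions(mask, factor=2)
    print(positions)  # [1]
    dummy_model: Predictor = lambda text, prefix: 42  # placeholder for the Transformer
    print(repredict([3, 4, 5, 6], positions, [9], dummy_model))  # [3, 42, 5, 6]
```

Selecting a token cell when any of its pixels is covered errs on the side of regenerating slightly more than the entity itself, which avoids leaving fragments of the old entity at its boundary.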

Results

Figures: multi-entity manipulation; cross-category manipulation on the COCO dataset; cross-category manipulation of birds and flowers on the CUB and Oxford datasets.

If you are interested in details of the model, further results, or analysis, please refer to our paper.

Postscript

In recent years, driven by Transformer architectures, pre-training techniques, and growing computing power, multimodal vision-and-language understanding has developed rapidly and attracted increasing attention. The recent DALL-E 2 work is even more striking and raises our expectations for the future of vision and language. In fact, many directions in this field remain underexplored, and text-guided image manipulation is one of them. Our work is not perfect and leaves room for further improvement, but we hope it represents a step forward for text-guided image manipulation. Finally, we wish everyone success in doing work they find interesting and valuable.

[1] Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. Zero-Shot Text-to-Image Generation. arXiv:2102.12092, 2021.

[2] Patrick Esser, Robin Rombach, and Björn Ommer. Taming Transformers for High-Resolution Image Synthesis. arXiv:2012.09841, 2020.

边栏推荐

- nft数字藏品app系统搭建

- JS 加法乘法错误解决 number-precision

- Processing of uci-har datasets

- Use pydub to modify the bit rate of the wav file, and an error is reported: c:\programdata\anaconda3\lib\site packages\pydub\utils py:170: RuntimeWarning:

- How to form a good habit? By perseverance? By determination? None of them!

- WordPress regenerate featured image plugin: regenerate thumbnails

- Count the top k strings with the most occurrences

- MSF CS OpenSSL traffic encryption

- 为WordPress相关日志插件增加自动缩略图功能

- Node连接MySql数据库写模糊查询接口

猜你喜欢

Enterprise wechat applet pit avoidance guide, welcome to add...

js面试题---箭头函数,find和filter some和every

![my. Binlog startup failure caused by the difference between [mysql] and [mysqld] in CNF](/img/bd/a28e74654c7821b3a9cd9260d2e399.png)

my. Binlog startup failure caused by the difference between [mysql] and [mysqld] in CNF

Use yolov5 to train your own data set and get started quickly

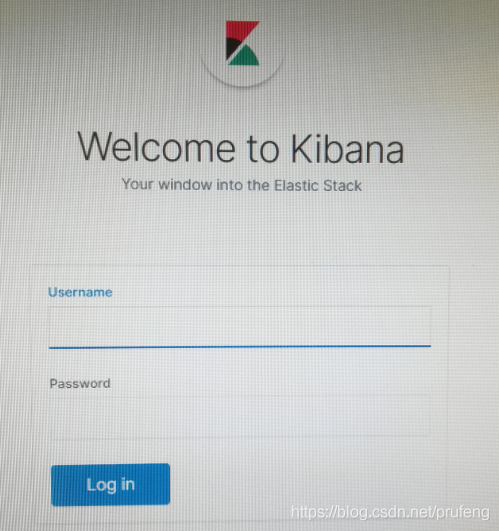

ELK - X-Pack设置用户密码

ELK - ElastAlert最大的坑

Command mode - attack, secret weapon

Display of receiving address list 【 project mall 】

苹果MobileOne: 移动端仅需1ms的高性能骨干

Adapter mode -- can you talk well?

随机推荐

No category parents插件帮你去掉分类链接中的category前缀

WordPress database cache plug-in: DB cache Reloaded

为WordPress相关日志插件增加自动缩略图功能

Web development model selection, who graduated from web development

nft数字藏品app系统搭建

MYCAT sub database and sub table

nft数字藏品系统品台搭建

李飞飞:我更像物理学界的科学家,而不是工程师|深度学习崛起十年

2022 | framework for Android interview -- Analysis of the core principles of binder, handler, WMS and AMS!

[第二章 基因和染色体的关系]生物知识概括–高一生物

WordPress landing page customization plug-in recommendation

File excel export

WordPress登录页面定制插件推荐

WordPress站内链接修改插件:Velvet Blues Update URLs

Typeerror: argument of type "Int 'is not Iterable

JS 加法乘法错误解决 number-precision

文件excel导出

Only when you find your own advantages can you work tirelessly and get twice the result with half the effort!

my. Binlog startup failure caused by the difference between [mysql] and [mysqld] in CNF

MSF CS OpenSSL traffic encryption