
Spark Practice 1: Setting Up a Spark Runtime Environment in Single-Node Local Mode

2022-07-03 13:22:00 【Brother Xing plays with the clouds】

Preface:

Spark itself is written in Scala and runs on the JVM.

Java version: Java 6 or higher.

1 Download Spark

http://spark.apache.org/downloads.html

Choose the version you need. I chose:

http://d3kbcqa49mib13.cloudfront.net/spark-1.1.0-bin-hadoop1.tgz

If you are a hard-working coder, you can also download the source code and build it yourself: http://github.com/apache/spark.

Note: I am running in a Linux environment. If you don't have a Linux machine, you can install Linux in a virtual machine.

2 Extract the archive and enter the directory

```
tar -zvxf spark-1.1.0-bin-hadoop1.tgz
cd spark-1.1.0-bin-hadoop1/
```

3 Start the shell

```
./bin/spark-shell
```

A lot of log output is printed, and finally you get the `scala>` prompt.

4 A first taste

Run the following statements one after another:

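```scala
val lines = sc.textFile("README.md")   // RDD holding the lines of README.md
lines.count()                          // number of lines
lines.first()                          // first line
val pythonLines = lines.filter(line => line.contains("Python"))  // lines mentioning "Python"
```

For example, `lines.first()` printed:

```
scala> lines.first()
res0: String = ## Interactive Python Shel
```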
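The shell statements above behave much like ordinary Scala collection operations. The plain-Scala sketch below (no Spark required) mimics them on a small, hypothetical in-memory list standing in for README.md. Calling `filter` on a `view` is lazy, much as an RDD `filter` is a transformation that does no work until an action such as `count()` or `first()` runs. This is only an analogy: real RDDs are partitioned and evaluated by Spark, not by the Scala collections library.

```scala
// Plain-Scala analogy of the spark-shell session above (no Spark dependency).
// The sample lines are hypothetical stand-ins for the contents of README.md.
object RddAnalogy {
  val lines: Seq[String] = Seq(
    "# Apache Spark",
    "Spark supports Scala, Java and Python.",
    "## Interactive Python Shell",
    "Run ./bin/pyspark to start it."
  )

  // Analogue of lines.count(): the number of elements.
  def count: Int = lines.size

  // Analogue of lines.first(): the first element.
  def first: String = lines.head

  // Analogue of lines.filter(...): a lazy view; nothing is evaluated yet.
  def pythonLines = lines.view.filter(line => line.contains("Python"))

  def main(args: Array[String]): Unit = {
    println(s"count = $count")
    println(s"first = $first")
    // Forcing the view plays the role of running an action on the filtered RDD.
    println(s"python lines = ${pythonLines.size}")
  }
}
```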

--- Explanation: what is `sc`?

`sc` is the SparkContext object that spark-shell creates for you by default.

For example:

```
scala> sc
res13: org.apache.spark.SparkContext = org.apache.spark.SparkContext@<hash>
```

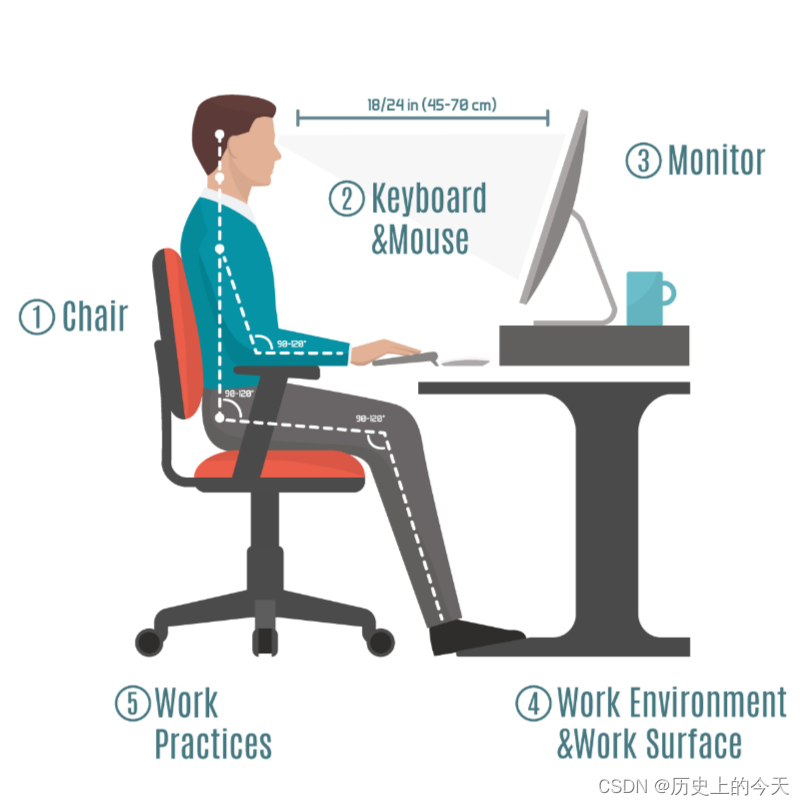

Here we only run locally, but it is worth getting an early look at how a distributed computation is organized.

5 A standalone program

Finally, let's close this section with an example.

To make it run smoothly, just follow the steps below.

The directory structure is as follows:

```
/usr/local/spark-1.1.0-bin-hadoop1/test$ find .
.
./src
./src/main
./src/main/scala
./src/main/scala/example.scala
./simple.sbt
```

`simple.sbt` contains:

```
name := "Simple Project"

version := "1.0"

scalaVersion := "2.10.4"

libraryDependencies += "org.apache.spark" %% "spark-core" % "1.1.0"
```

`example.scala` contains:

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.SparkContext._

object example {
  def main(args: Array[String]): Unit = {
    // Master URL "local" and application name "My App"
    val conf = new SparkConf().setMaster("local").setAppName("My App")
    // Create the context from the configuration above
    val sc = new SparkContext(conf)
    // Shut the context down cleanly before exiting
    sc.stop()
    //System.exit(0)
    //sys.exit()
    println("this system exit ok!!!")
  }
}
```

`local`: the cluster URL, which tells Spark how to connect to a cluster. `local` is a special value meaning that Spark runs on the local machine in a single thread, without connecting to a cluster.

`My App`: the name of the application.
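The master string follows a small convention: `local` runs with one worker thread, `local[K]` with K threads, and `local[*]` with as many threads as the machine has logical cores, while a URL such as `spark://host:7077` points at a real cluster. The helper below is a hypothetical plain-Scala sketch of that convention for illustration only; it is not part of Spark's API, since Spark parses these strings itself when the SparkContext starts.

```scala
// Hypothetical helper illustrating Spark's "local" master URL convention.
// Not Spark code: Spark interprets these strings itself when the context starts.
object MasterUrl {
  private val LocalN = """local\[([1-9][0-9]*)\]""".r

  /** Worker threads implied by a local master string, or None for a cluster URL. */
  def localThreads(master: String): Option[Int] = master match {
    case "local"    => Some(1)
    case "local[*]" => Some(Runtime.getRuntime.availableProcessors())
    case LocalN(n)  => Some(n.toInt)
    case _          => None // e.g. "spark://host:7077" connects to a cluster
  }

  def main(args: Array[String]): Unit =
    Seq("local", "local[4]", "local[*]", "spark://host:7077").foreach { m =>
      println(s"$m -> ${localThreads(m)}")
    }
}
```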

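Then, in the `test` directory, run `sbt package`.

Once the build succeeds, submit the jar. Still in the `test` directory, `spark-submit` sits one level up in the Spark installation:

```
../bin/spark-submit --class "example" ./target/scala-2.10/simple-project_2.10-1.0.jar
```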
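The jar path passed to `spark-submit` comes from `simple.sbt`: sbt lowercases the project name and replaces runs of non-word characters with `-`, then appends the Scala binary version and the project version. The sketch below reproduces that naming rule in plain Scala under the assumption of sbt's default settings and a Scala 2.x version; `JarName` and its functions are hypothetical illustrations, not sbt's own code.

```scala
import java.util.Locale

// Hypothetical sketch of sbt's default output path for `sbt package` (Scala 2.x only).
object JarName {
  // "Simple Project" -> "simple-project"
  def normalize(name: String): String =
    name.toLowerCase(Locale.ENGLISH).replaceAll("""\W+""", "-")

  // "2.10.4" -> "2.10": for Scala 2.x the binary version is major.minor.
  def scalaBinaryVersion(scalaVersion: String): String =
    scalaVersion.split('.').take(2).mkString(".")

  def packagedJarPath(name: String, version: String, scalaVersion: String): String = {
    val bin = scalaBinaryVersion(scalaVersion)
    s"target/scala-$bin/${normalize(name)}_$bin-$version.jar"
  }

  def main(args: Array[String]): Unit =
    println(packagedJarPath("Simple Project", "1.0", "2.10.4"))
}
```

With the values from `simple.sbt` this yields the same `target/scala-2.10/simple-project_2.10-1.0.jar` path used in the command above.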

If everything works, the output ends with the line printed by the program, `this system exit ok!!!`, which shows that it really ran successfully.

The end!

边栏推荐

- Server coding bug

- json序列化时案例总结

- Task6: using transformer for emotion analysis

- MapReduce实现矩阵乘法–实现代码

- Tutoriel PowerPoint, comment enregistrer une présentation sous forme de vidéo dans Powerpoint?

- Kivy教程之 盒子布局 BoxLayout将子项排列在垂直或水平框中(教程含源码)

- R language uses the data function to obtain the sample datasets available in the current R environment: obtain all the sample datasets in the datasets package, obtain the datasets of all packages, and

- MySQL

- Sword finger offer 15 Number of 1 in binary

- 剑指 Offer 14- II. 剪绳子 II

猜你喜欢

![【R】 [density clustering, hierarchical clustering, expectation maximization clustering]](/img/a2/b287a5878761ee22bdbd535cae77eb.png)

【R】 [density clustering, hierarchical clustering, expectation maximization clustering]

The principle of human voice transformer

【历史上的今天】7 月 3 日:人体工程学标准法案;消费电子领域先驱诞生;育碧发布 Uplay

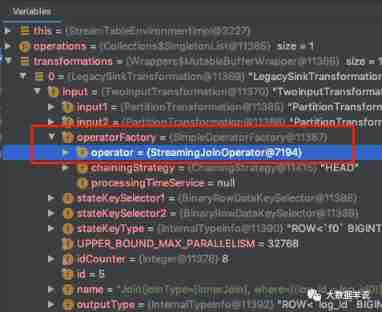

When we are doing flow batch integration, what are we doing?

Image component in ETS development mode of openharmony application development

AI 考高数得分 81,网友:AI 模型也免不了“内卷”!

Libuv库 - 设计概述(中文版)

今日睡眠质量记录77分

Flink SQL knows why (12): is it difficult to join streams? (top)

刚毕业的欧洲大学生,就能拿到美国互联网大厂 Offer?

随机推荐

MySQL functions and related cases and exercises

剑指 Offer 17. 打印从1到最大的n位数

2022-01-27 redis cluster brain crack problem analysis

Cadre de logback

我的创作纪念日:五周年

Today's sleep quality record 77 points

The reasons why there are so many programming languages in programming internal skills

Solve system has not been booted with SYSTEMd as init system (PID 1) Can‘t operate.

Setting up remote links to MySQL on Linux

Mysqlbetween implementation selects the data range between two values

开始报名丨CCF C³[email protected]奇安信:透视俄乌网络战 —— 网络空间基础设施面临的安全对抗与制裁博弈...

今日睡眠质量记录77分

Task6: using transformer for emotion analysis

Flink SQL knows why (17): Zeppelin, a sharp tool for developing Flink SQL

Flink SQL knows why (XV): changed the source code and realized a batch lookup join (with source code attached)

R语言gt包和gtExtras包优雅地、漂亮地显示表格数据:nflreadr包以及gtExtras包的gt_plt_winloss函数可视化多个分组的输赢值以及内联图(inline plot)

Sword finger offer 12 Path in matrix

剑指 Offer 14- II. 剪绳子 II

Road construction issues

AI 考高数得分 81,网友:AI 模型也免不了“内卷”!