Detailed comments on MapReduce instance code on the official website

2022-07-03 15:05:00 【Brother Xing plays with the clouds】

Introduction

1. This article does not introduce MapReduce itself; plenty of introductory material is available online.

2. The example code comes from the official Hadoop MapReduce Tutorial:

http://hadoop.apache.org/docs/current/hadoop-mapreduce-client/hadoop-mapreduce-client-core/MapReduceTutorial.html

Specifically, it is the final version, WordCount v2.0. Compared with org.apache.hadoop.examples.WordCount, it is more complex and complete, which makes it better suited as template code for MapReduce jobs.

3. The purpose of this article is to give developers who write MapReduce jobs a template with detailed comments that they can build on.

--------------------------------------------------------------------------------

Official example code (slightly modified)

WordCount2.java

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.net.URI;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Counter;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;
import org.apache.hadoop.util.StringUtils;

public class WordCount2 {

    // Counter group "MapCounters", containing the counter INPUT_WORDS
    static enum MapCounters { INPUT_WORDS }

    // Counter group "ReduceCounters", containing the counter OUTPUT_WORDS
    static enum ReduceCounters { OUTPUT_WORDS }

    // Alternative: a single group "CountersEnum" holding both INPUT_WORDS and OUTPUT_WORDS
    // static enum CountersEnum { INPUT_WORDS, OUTPUT_WORDS }

    public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1); // map output value
        private Text word = new Text();                              // map output key

        private boolean caseSensitive; // whether matching is case-sensitive; read from the configuration
        private Set<String> patternsToSkip = new HashSet<String>(); // patterns to filter out; read from the skip files
        private Configuration conf;

        /**
         * setup() does just two things:
         * 1. Reads wordcount.case.sensitive from the configuration and assigns it to caseSensitive.
         * 2. Reads wordcount.skip.patterns from the configuration; if it is true, adds every
         *    file returned by getCacheFiles() to the filter.
         */
        @Override
        public void setup(Context context) throws IOException, InterruptedException {
            conf = context.getConfiguration();
            // getBoolean(String name, boolean defaultValue) returns the value of property name,
            // or defaultValue if the property is unset or its value is not a valid boolean.
            // The property can be set on the command line with -D<property>=<value>,
            // e.g. -Dwordcount.case.sensitive=true, or in main() with
            // job.getConfiguration().setBoolean("wordcount.case.sensitive", true).
            caseSensitive = conf.getBoolean("wordcount.case.sensitive", true);
            // wordcount.skip.patterns is true only when -skip is given on the command line (see main())
            if (conf.getBoolean("wordcount.skip.patterns", false)) {
                // getCacheFiles() returns the files added to the distributed cache in main()
                URI[] patternsURIs = Job.getInstance(conf).getCacheFiles();
                for (URI patternsURI : patternsURIs) { // each URI is one skip file
                    Path patternsPath = new Path(patternsURI.getPath());
                    String patternsFileName = patternsPath.getName().toString();
                    parseSkipFile(patternsFileName); // see parseSkipFile(String) below
                }
            }
        }

        /**
         * Adds every line of the given file to the filter.
         *
         * @param fileName name of the localized skip file
         */
        private void parseSkipFile(String fileName) {
            // try-with-resources closes the reader even if reading fails
            try (BufferedReader fis = new BufferedReader(new FileReader(fileName))) {
                String pattern = null;
                // Each line of the skip file is one pattern (a regular expression) to remove, e.g. \!
                while ((pattern = fis.readLine()) != null) {
                    patternsToSkip.add(pattern);
                }
            } catch (IOException ioe) {
                System.err.println("Caught exception while parsing the cached file '"
                        + fileName + "' : " + StringUtils.stringifyException(ioe));
            }
        }

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            // caseSensitive was set in setup(): keep the line as is when case-sensitive,
            // otherwise convert it to lowercase
            String line = (caseSensitive) ? value.toString() : value.toString().toLowerCase();
            // Remove every match of every pattern in patternsToSkip
            for (String pattern : patternsToSkip) {
                line = line.replaceAll(pattern, "");
            }
            // Split the line on the default delimiters " \t\n\r\f"
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
                // getCounter(String groupName, String counterName): the enum's class name
                // is used as the group name and the enum constant as the counter name
                Counter counter = context.getCounter(MapCounters.class.getName(),
                        MapCounters.INPUT_WORDS.toString());
                counter.increment(1);
            }
        }
    }

    /**
     * The Reducer adds nothing new compared with the basic WordCount.
     */
    public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
        private IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
            // Note: this class is also registered as the combiner, so this counter is also
            // incremented while combining and can exceed the number of distinct output words.
            Counter counter = context.getCounter(ReduceCounters.class.getName(),
                    ReduceCounters.OUTPUT_WORDS.toString());
            counter.increment(1);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        GenericOptionsParser optionParser = new GenericOptionsParser(conf, args);
        /*
         * Command line syntax: hadoop command [genericOptions] [application-specific arguments].
         * getRemainingArgs() returns only the [application-specific arguments]. For example, for
         *   $ bin/hadoop jar wc.jar WordCount2 -Dwordcount.case.sensitive=true \
         *       /user/joe/wordcount/input /user/joe/wordcount/output -skip /user/joe/wordcount/patterns.txt
         * getRemainingArgs() returns
         *   /user/joe/wordcount/input /user/joe/wordcount/output -skip /user/joe/wordcount/patterns.txt
         */
        String[] remainingArgs = optionParser.getRemainingArgs();
        // length == 2: input and output paths, e.g.
        //   /user/joe/wordcount/input /user/joe/wordcount/output
        // length == 4: input and output paths plus a skip file, e.g.
        //   /user/joe/wordcount/input /user/joe/wordcount/output -skip /user/joe/wordcount/patterns.txt
        // Any other length is a usage error.
        if (remainingArgs.length != 2 && remainingArgs.length != 4) {
            System.err.println("Usage: wordcount <in> <out> [-skip skipPatternFile]");
            System.exit(2);
        }
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount2.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        List<String> otherArgs = new ArrayList<String>(); // all arguments except -skip and its file
        for (int i = 0; i < remainingArgs.length; ++i) {
            if ("-skip".equals(remainingArgs[i])) {
                // Add the argument after -skip (the skip pattern file URI) to the distributed cache
                job.addCacheFile(new Path(remainingArgs[++i]).toUri());
                // Set wordcount.skip.patterns here; the mapper reads it in setup()
                job.getConfiguration().setBoolean("wordcount.skip.patterns", true);
            } else {
                otherArgs.add(remainingArgs[i]);
            }
        }
        FileInputFormat.addInputPath(job, new Path(otherArgs.get(0)));   // first remaining argument: input path
        FileOutputFormat.setOutputPath(job, new Path(otherArgs.get(1))); // second remaining argument: output path

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
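Several pieces of this template do not depend on a running cluster: the per-line text handling that WordCount v2.0 adds in the mapper (case folding and pattern removal), the summing in IntSumReducer, and the argument check main() is meant to perform (exactly two or four arguments). The following is a minimal sketch, assuming Java 9 or later, that reproduces them in plain Java so the behavior can be tried locally before submitting a job. The class LocalWordCountSketch, its methods, and the sample patterns are hypothetical illustrations, not part of the tutorial or the Hadoop API. In a real job the patterns come from the -skip file, and the grouping by key is done by the framework's shuffle rather than a TreeMap.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.StringTokenizer;
import java.util.TreeMap;

/**
 * Hypothetical helper, not part of the Hadoop tutorial or the Hadoop API.
 * Repeats the per-line logic of TokenizerMapper.map(), the summing of
 * IntSumReducer, and the argument check of main() in plain Java.
 */
public class LocalWordCountSketch {

    /** Same steps as TokenizerMapper.map(): optional lowercasing, pattern removal, tokenizing. */
    static List<String> tokenize(String line, boolean caseSensitive, Set<String> patternsToSkip) {
        String text = caseSensitive ? line : line.toLowerCase();
        for (String pattern : patternsToSkip) {
            text = text.replaceAll(pattern, ""); // each skip entry is a regular expression
        }
        List<String> tokens = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(text); // default delimiters " \t\n\r\f"
        while (itr.hasMoreTokens()) {
            tokens.add(itr.nextToken());
        }
        return tokens;
    }

    /** Emits (word, 1) per token and sums per key, like map() followed by IntSumReducer. */
    static Map<String, Integer> countWords(List<String> lines, boolean caseSensitive,
                                           Set<String> patternsToSkip) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted keys, like the reducer's input
        for (String line : lines) {
            for (String word : tokenize(line, caseSensitive, patternsToSkip)) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    /**
     * Argument handling as intended in main(): exactly 2 or 4 arguments are accepted.
     * Skip files are collected into skipFiles; the remaining paths are returned.
     */
    static List<String> splitArgs(String[] remainingArgs, List<String> skipFiles) {
        if (remainingArgs.length != 2 && remainingArgs.length != 4) {
            throw new IllegalArgumentException("Usage: wordcount <in> <out> [-skip skipPatternFile]");
        }
        List<String> otherArgs = new ArrayList<>();
        for (int i = 0; i < remainingArgs.length; ++i) {
            if ("-skip".equals(remainingArgs[i])) {
                if (i + 1 >= remainingArgs.length) { // guard: -skip must be followed by a file
                    throw new IllegalArgumentException("-skip needs a pattern file");
                }
                skipFiles.add(remainingArgs[++i]);
            } else {
                otherArgs.add(remainingArgs[i]);
            }
        }
        return otherArgs;
    }

    public static void main(String[] args) {
        // Illustrative skip patterns: remove '.', ',', '!' and the word "to"
        Set<String> skip = new LinkedHashSet<>(List.of("\\.", "\\,", "\\!", "to\\b"));
        List<String> lines = List.of("Hello World, Bye World!", "Hello Hadoop, Goodbye to hadoop.");

        System.out.println(countWords(lines, true, skip));
        // prints {Bye=1, Goodbye=1, Hadoop=1, Hello=2, World=2, hadoop=1}

        List<String> skipFiles = new ArrayList<>();
        System.out.println(splitArgs(new String[] {"in", "out", "-skip", "patterns.txt"}, skipFiles));
        // prints [in, out]; skipFiles now holds [patterns.txt]
    }
}
```

Keeping the text handling in static methods like these is one way to unit-test a mapper's logic without Hadoop's test tooling; passing false for caseSensitive merges "Hadoop" and "hadoop" into a single key.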

边栏推荐

- Remote server background hangs nohup

- [graphics] adaptive shadow map

- Global and Chinese market of trimethylamine 2022-2028: Research Report on technology, participants, trends, market size and share

- 远程服务器后台挂起 nohup

- Global and Chinese markets for indoor HDTV antennas 2022-2028: Research Report on technology, participants, trends, market size and share

- 【可能是全中文网最全】pushgateway入门笔记

- Global and Chinese market of optical fiber connectors 2022-2028: Research Report on technology, participants, trends, market size and share

- 官网MapReduce实例代码详细批注

- 【微信小程序】WXSS 模板样式

- Byte practice plane longitude 2

猜你喜欢

【Transform】【实践】使用Pytorch的torch.nn.MultiheadAttention来实现self-attention

How can entrepreneurial teams implement agile testing to improve quality and efficiency? Voice network developer entrepreneurship lecture Vol.03

![[engine development] in depth GPU and rendering optimization (basic)](/img/71/abf09941eb06cd91784df50891fe29.jpg)

[engine development] in depth GPU and rendering optimization (basic)

![Mysql报错:[ERROR] mysqld: File ‘./mysql-bin.010228‘ not found (Errcode: 2 “No such file or directory“)](/img/cd/2e4f5884d034ff704809f476bda288.png)

Mysql报错:[ERROR] mysqld: File ‘./mysql-bin.010228‘ not found (Errcode: 2 “No such file or directory“)

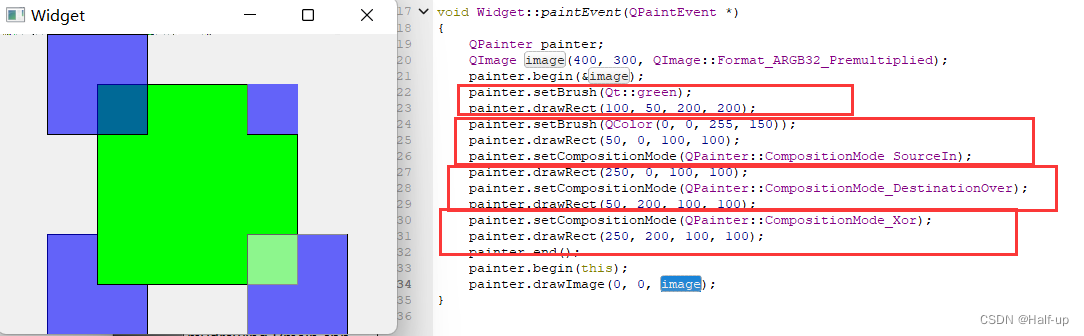

Qt—绘制其他东西

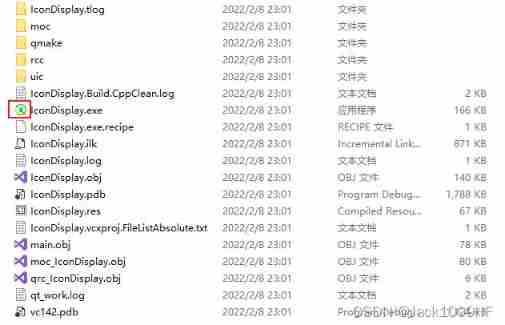

Vs+qt application development, set software icon icon

4-24--4-28

![[ue4] cascading shadow CSM](/img/83/f4dfda3bd5ba0172676c450ba7693b.jpg)

[ue4] cascading shadow CSM

cpu飙升排查方法

Yolov5 series (I) -- network visualization tool netron

随机推荐

4-29——4.32

Fundamentals of PHP deserialization

The method of parameter estimation of user-defined function in MATLAB

Yolov5 advanced nine target tracking example 1

Global and Chinese markets for flexible chips 2022-2028: Research Report on technology, participants, trends, market size and share

QT - draw something else

Yolov5进阶之八 高低版本格式转换问题

.NET六大设计原则个人白话理解,有误请大神指正

What is one hot encoding? In pytoch, there are two ways to turn label into one hot coding

What is machine reading comprehension? What are the applications? Finally someone made it clear

C language fcntl function

Global and Chinese market of transfer case 2022-2028: Research Report on technology, participants, trends, market size and share

[Yu Yue education] scientific computing and MATLAB language reference materials of Central South University

. Net six design principles personal vernacular understanding, please correct if there is any error

[ue4] material and shader permutation

How can entrepreneurial teams implement agile testing to improve quality and efficiency? Voice network developer entrepreneurship lecture Vol.03

Yolov5 advanced 8 format conversion between high and low versions

Apache ant extension tutorial

Using notepad++ to build an arbitrary language development environment

[set theory] inclusion exclusion principle (complex example)