当前位置:网站首页>Acl2022 | decomposed meta learning small sample named entity recognition

Acl2022 | decomposed meta learning small sample named entity recognition

2022-07-07 04:32:00 【zenRRan】

Every day I send you NLP Technical dry cargo !

author | Huiting wind

Company | Beijing University of Posts and telecommunications

Research direction | natural language understanding

come from | PaperWeekly

Paper title :

Decomposed Meta-Learning for Few-Shot Named Entity Recognition

Thesis link :

https://arxiv.org/abs/2204.05751

Code link :

https://github.com/microsoft/vert-papers/tree/master/papers/DecomposedMetaNER

Abstract

Few samples NER The purpose of the system is to identify new named entity classes through a small number of annotation samples . This paper proposes a decomposition meta learning method to solve small samples NER, By decomposing the original problem into two processes: small sample span prediction and small sample entity classification . say concretely , We treat span prediction as a sequence labeling problem and use MAML Algorithm training span predictor comes Find better model initialization parameters and enable the model to quickly adapt to new entities . For entity classification , We have put forward MAML-ProtoNet, One MAML Enhanced prototype network , can Find a good embedding space to better distinguish the span of different entity classes . In more than one benchmark The experiment on shows that , Our method has achieved better results than the previous method .

Intro

NER The purpose is to locate and recognize predefined entity classes in the text span, such as location、organization. In standard supervised learning NER The architecture of medium and deep learning has achieved great success . However , in application ,NER Our model usually needs to quickly adapt to some new and unprecedented entity classes , And it is usually expensive to label a large number of new samples . therefore , Small sample NER In recent years, it has been widely studied .

Before about small samples NER Our research is based on token Level measurement learning , Put each query token Compare metrics with prototypes , Then for each token Assign tags . Many recent studies have shifted to span level measurement learning , Able to bypass token Since the tag and clearly use the representation of phrases .

However, these methods may not be so effective when encountering large field deviations , Because they directly use the learning metrics without adapting the target domain . let me put it another way , These methods do not fully mine the information that supports set data . The current method still has the following limitations :

1. The decoding process requires careful handling of overlapping spans ;

2. Non entity type “O” Usually noise , Because these words have little in common .

Besides , When targeting a different field , The only available information is only a few support samples , Unfortunately , These samples were only used in the process of calculating similarity in the reasoning stage in the previous method .

To address these limitations , This paper presents a decomposition meta learning method , The original problem is decomposed into two processes: span prediction and entity classification . In particular :

1. For small sample span prediction , We regard it as a sequence annotation problem to solve the problem of overlapping span . This process aims to locate named entities and is category independent . Then we only classify the marked spans , This can also eliminate “O” Impact of noise like . When training the span detection module , We used MAML Algorithm to find good model initialization parameters , After updating the sample with a small number of target domain support sets , It can quickly adapt to new entity classes . When the model is updated , The span boundary information of a specific field can be effectively used by the model , So that the model can be better migrated to the target field ;

2. For entity classification , Adopted MAML-ProtoNet To narrow the gap between the source domain and the target domain .

We're in some benchmark We did experiments on , Experiments show that our proposed framework is better than the previous SOTA The model performs better , We also made qualitative and quantitative analysis , Effects of different meta learning strategies on model performance .

Method

This article follows the traditional N-way-K-shot Small sample setting , Examples are shown in the following table (2-way-1-shot):

The following figure shows the overall structure of the model :

2.1 Entity Span Detection

There is no need to classify specific entity classes in the span detection stage , Therefore, the parameters of the model can be shared among different fields . Based on this , We use MAML To promote domain invariant internal representation learning, rather than learning specific domain characteristics . The meta learning model trained in this way is more sensitive to the samples in the target domain , Therefore, only a small number of samples need to be fine tuned to achieve good results without over fitting .

2.1.1 Basic Detector

The base detector is a standard sequence annotation task , use BIOES Annotation strategy , For a sequence of sentences {xi}, Use an encoder to get its context representation h, And then through softmax Generate probability distribution .

▲ fθ: Encoder

▲ A probability distribution

The training error of the model adds a maximum term to the cross entropy loss to alleviate the high loss token The problem of insufficient learning :

▲ Cross entropy loss

Viterbi decoding is used in the reasoning stage , Here we have no training transfer matrix , Simply add some restrictions to ensure that the predicted label does not violate BIOES Annotation rules of .

2.1.2 Meta-Learning Procedure

Specifically speaking, the meta training process , First, randomly sample a group of training episode:

Use the support set for inner-update The process :

among Un representative n Step gradient update , The loss adopts the loss function mentioned above . Then use the updated parameters Θ' Evaluate on the query set , Will a batch In all of the episode Sum of losses , The training goal is to minimize this loss :

Update the original parameters of the model with the above losses Θ, Here we use the first derivative to approximate :

MAML Mathematical derivation reference :MAML

https://zhuanlan.zhihu.com/p/181709693

In the reasoning stage, the cross entropy loss mentioned in the base model is used to fine tune the support set , Then use the fine tuned model on the query set to test .

2.2 Entity Typing

The entity classification module uses the prototype network as the basic model , Use MAML The algorithm enhances the model , Make the model get a more representative embedded space to better distinguish different entity classes .

2.2.1 Basic Model

Here another encoder is used to input token Encoding , Then use the span detection module to output the span x[i,j], All in the span token The representation is averaged to represent the representation of this span :

Follow the setup of the prototype network , Use the summation average of the spans belonging to the same entity class in the support set as the representation of the class prototype :

The training process of the model first uses the support set to calculate the representation of each class prototype , Then for each span in the query set , The probability of belonging to a certain class is calculated by calculating the distance from it to the prototype of that class :

The training goal of the model is a cross entropy loss :

The reasoning stage is simply to calculate which kind of prototype is closest :

2.2.2 MAML Enhanced ProtoNet

The setting of this process and the application in span detection MAML Agreement , Also use MAML Algorithm to find a better initialization parameter , Refer to the above for the detailed process :

The reasoning stage is also consistent with the above , I won't elaborate here .

experiment

3.1 Data sets and settings

In this paper Few-NERD, One for few-shot NER Launched data sets and cross-dataset, Integration of data sets in four different fields . about Few-NERD Use P、R、micro-F1 As an evaluation indicator ,cross-dataset use P、R、F1 As an evaluation indicator . Two independent encoders are used in this paper BERT, Optimizer usage AdamW.

3.2 Main experiment

▲ Few-NERD

▲ Cross-Dataset

3.3 Ablation Experiment

3.4 analysis

For span detection , The author used a fully supervised span detector to carry out the experiment :

The author analyzes , Not fine tuned model predicted Broadway It is a wrong prediction for the new entity class (Broadway Appears in the training data ), Then fine tune the model by using new entity class samples , It can be seen that the model can predict the correct span , however Broadway This span is still predicted . This shows that although the traditional fine tuning can make the model obtain certain new class information , But there is still a big deviation .

Then the author compares MAML Enhanced models and unused MAML Model F1 indicators :

MAML The algorithm can make better use of the data of the supporting set , Find a better initialization parameter , Enable the model to quickly adapt to the new domain .

Then the author analyzes MAML How to improve the prototype network , First, indicators MAML The enhanced prototype network will be improved :

Then the author makes a visual analysis :

As can be seen from the above figure ,MAML The enhanced prototype network can better distinguish various types of prototypes .

Conclusion

This paper presents a two-stage model , Span detection and entity classification for small samples NER Mission , Both stages of the model use meta learning MAML Methods to enhance , Get better initialization parameters , The model can be quickly adapted to the new domain through a small number of samples . This article is also an enlightening article , We can see from the indicators , Meta learning method for small samples NER The task has been greatly improved .

Interpretation and submission of papers , Let your article be more diverse 、 People from different directions see , Don't be drowned in the sea , Maybe you can add a lot of references ~ Add the following wechat comments to your submission “ contribute ” that will do .

Recent articles

EMNLP 2022 and COLING 2022, Which meeting is better ?

A new and easy-to-use software based on Word-Word Relational NER The first mock exam

Ali + Peking University | Simple on gradient mask It has such a magical effect

Download one : Chinese version ! Study TensorFlow、PyTorch、 machine learning 、 Five pieces of deep learning and data structure ! The background to reply 【 A five piece set 】

Download two : NTU pattern recognition PPT The background to reply 【 NTU pattern recognition 】Contribute or exchange learning , remarks : nickname - School ( company )- Direction , Get into DL&NLP Communication group .

There are many directions : machine learning 、 Deep learning ,python, Sentiment analysis 、 Opinion mining 、 Syntactic parsing 、 Machine translation 、 Man-machine dialogue 、 Knowledge map 、 Speech recognition, etc .

Remember the remark

Sorting is not easy to , I'm looking forward to it !边栏推荐

- mpf2_线性规划_CAPM_sharpe_Arbitrage Pricin_Inversion Gauss Jordan_Statsmodel_Pulp_pLU_Cholesky_QR_Jacobi

- The most complete security certification of mongodb in history

- Advertising attribution: how to measure the value of buying volume?

- sscanf,sscanf_s及其相关使用方法「建议收藏」

- 这项15年前的「超前」技术设计,让CPU在AI推理中大放光彩

- leetcode 53. Maximum subarray maximum subarray sum (medium)

- Lecture 3 of "prime mover x cloud native positive sounding, cost reduction and efficiency enhancement lecture" - kubernetes cluster utilization improvement practice

- Data security -- 12 -- Analysis of privacy protection

- The worse the AI performance, the higher the bonus? Doctor of New York University offered a reward for the task of making the big model perform poorly

- Mathematical analysis_ Notes_ Chapter 10: integral with parameters

猜你喜欢

Mathematical analysis_ Notes_ Chapter 10: integral with parameters

这项15年前的「超前」技术设计,让CPU在AI推理中大放光彩

What if the win11 screenshot key cannot be used? Solution to the failure of win11 screenshot key

科兴与香港大学临床试验中心研究团队和香港港怡医院合作,在中国香港启动奥密克戎特异性灭活疫苗加强剂临床试验

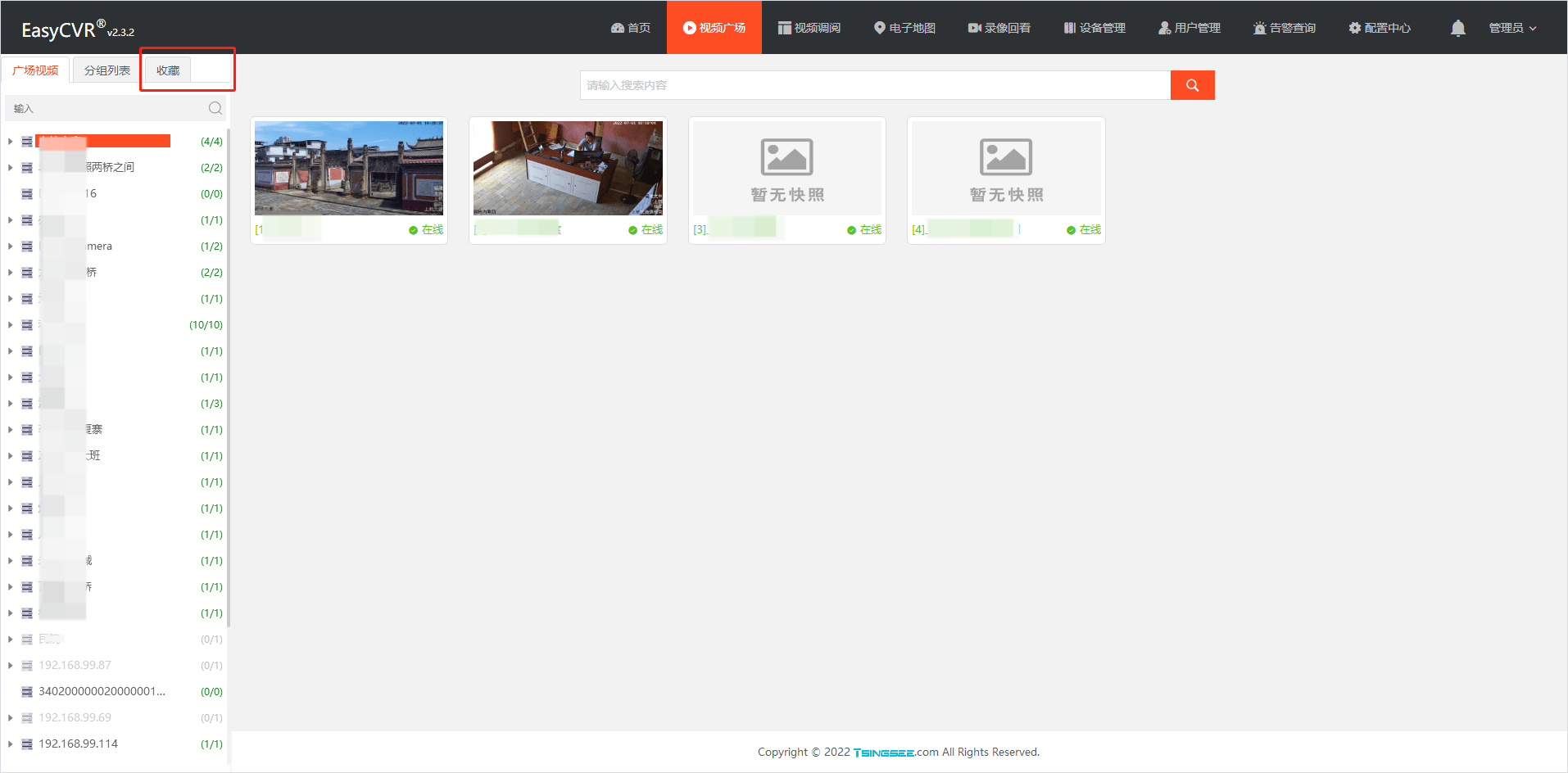

EasyCVR平台接入RTMP协议,接口调用提示获取录像错误该如何解决?

Five years of automated testing, and finally into the ByteDance, the annual salary of 30W is not out of reach

C # use Siemens S7 protocol to read and write PLC DB block

【写给初发论文的人】撰写综述性科技论文常见问题

深耕开发者生态,加速AI产业创新发展 英特尔携众多合作伙伴共聚

Video fusion cloud platform easycvr video Plaza left column list style optimization

随机推荐

[untitled]

Win11控制面板快捷键 Win11打开控制面板的多种方法

What about the collapse of win11 playing pubg? Solution to win11 Jedi survival crash

硬件开发笔记(十): 硬件开发基本流程,制作一个USB转RS232的模块(九):创建CH340G/MAX232封装库sop-16并关联原理图元器件

How to open win11 remote desktop connection? Five methods of win11 Remote Desktop Connection

Complimentary tickets quick grab | industry bigwigs talk about the quality and efficiency of software qecon conference is coming

Digital chemical plant management system based on Virtual Simulation Technology

Restore backup data on GCS with br

见到小叶栀子

Five years of automated testing, and finally into the ByteDance, the annual salary of 30W is not out of reach

How to write a resume that shines in front of another interviewer [easy to understand]

Data security -- 12 -- Analysis of privacy protection

2022 electrician cup question B analysis of emergency materials distribution under 5g network environment

Fix the problem that the highlight effect of the main menu disappears when the easycvr Video Square is clicked and played

2022 electrician cup a question high proportion wind power system energy storage operation and configuration analysis ideas

JS form get form & get form elements

【ArcGIS教程】专题图制作-人口密度分布图——人口密度分析

機器人(自動化)課程的持續學習-2022-

True global ventures' newly established $146million follow-up fund was closed, of which the general partner subscribed $62million to invest in Web3 winners in the later stage

A series of shortcut keys for jetbrain pychar