
Chapter 4: Decision Trees and Random Forests

2022-07-27 03:58:00 【Sang zhiweiluo 0208】

Contents

2 Decision tree learning algorithm

3 Information gain ratio and Gini coefficient

3.2 Discussion of the Gini coefficient

1 Information entropy

1.1 entropy

Entropy can be understood as the expected uncertainty of a probability distribution. The larger the entropy, the more uncertain the distribution, the less "information" it gives us, and the harder it is to make a correct judgment from it. Conversely, the more the probability is concentrated on a single outcome, the more certain that outcome is and the smaller the entropy.

Expression:

$$H(X) = -\sum_{x} p(x) \log p(x)$$
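To make the "more certain means less entropy" statement concrete, here is a minimal Python sketch. The function name `entropy` and the example distributions are illustrative assumptions, not part of the original notes; the logarithm base is 2, so the result is in bits.

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution; terms with p = 0 contribute 0."""
    return sum(-p * math.log(p, base) for p in probs if p > 0)

# Hypothetical distributions over two outcomes
fair_coin = entropy([0.5, 0.5])    # maximally uncertain
biased_coin = entropy([0.9, 0.1])  # mostly predictable
certain = entropy([1.0, 0.0])      # no uncertainty at all

print(fair_coin, biased_coin, certain)
```

The fair coin has the largest entropy of the three, and the fully determined outcome has entropy 0.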

1.2 Joint entropy

The joint entropy is the entropy of the pair (X, Y).

Expression:

$$H(X,Y) = -\sum_{x,y} p(x,y) \log p(x,y)$$

1.3 Conditional entropy

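The conditional entropy H(Y|X) is the entropy of (X, Y) minus the entropy of X alone, that is, the "new" entropy contributed by Y given that X has occurred.

Expression:

$$H(Y|X) = H(X,Y) - H(X)$$

or

$$H(Y|X) = -\sum_{x,y} p(x,y) \log p(y|x)$$

or

$$H(Y|X) = \sum_{x} p(x)\, H(Y|X=x)$$

Derivation:

$$
\begin{aligned}
H(X,Y) - H(X) &= -\sum_{x,y} p(x,y) \log p(x,y) + \sum_{x} p(x) \log p(x) \\
&= -\sum_{x,y} p(x,y) \log p(x,y) + \sum_{x,y} p(x,y) \log p(x) \\
&= -\sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)} \\
&= -\sum_{x,y} p(x,y) \log p(y|x) \\
&= -\sum_{x} p(x) \sum_{y} p(y|x) \log p(y|x) = \sum_{x} p(x)\, H(Y|X=x)
\end{aligned}
$$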
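The identity H(Y|X) = H(X,Y) - H(X) can be checked numerically. The sketch below assumes a small, made-up joint distribution stored as a dictionary; the helper `H` and the variable names are illustrative only.

```python
import math
from collections import defaultdict

def H(probs):
    """Entropy in bits of an iterable of probabilities."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

# Hypothetical joint distribution p(x, y)
joint = {
    ("sunny", "play"): 0.4, ("sunny", "stay"): 0.1,
    ("rainy", "play"): 0.1, ("rainy", "stay"): 0.4,
}

# Marginal p(x), obtained by summing out y
p_x = defaultdict(float)
for (x, _y), p in joint.items():
    p_x[x] += p

h_xy = H(joint.values())
h_x = H(p_x.values())

# Route 1: joint entropy minus the entropy of X
h_y_given_x_chain = h_xy - h_x

# Route 2: average of H(Y | X = x), weighted by p(x)
h_y_given_x_direct = 0.0
for x, px in p_x.items():
    conditional = [p / px for (xx, _y), p in joint.items() if xx == x]
    h_y_given_x_direct += px * H(conditional)

print(h_y_given_x_chain, h_y_given_x_direct)
```

Both routes give the same value up to floating-point rounding.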

1.4 Relative entropy

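Relative entropy is also called discrimination information, Kullback entropy, or Kullback-Leibler (KL) divergence. It is sometimes loosely called cross entropy, but strictly speaking the cross entropy of p and q equals H(p) plus the relative entropy of p with respect to q.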

Let p(x) and q(x) be two probability distributions over the values of X. The relative entropy of p with respect to q is

$$D(p\|q) = \sum_{x} p(x) \log \frac{p(x)}{q(x)} = E_{p}\left[\log \frac{p(X)}{q(X)}\right]$$

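Note: relative entropy measures the "distance" between two probability distributions, although it is not symmetric in p and q. Expanding the definition gives

$$D(p\|q) = \sum_{x} p(x) \log p(x) - \sum_{x} p(x) \log q(x)$$

When p is fixed, the first term is constant, so minimizing the relative entropy over q means maximizing $\sum_{x} p(x) \log q(x)$. Taking p to be the empirical distribution of the samples $x_1, \dots, x_n$ turns this sum into $\frac{1}{n}\sum_{i=1}^{n} \log q(x_i)$, and finding its maximum is exactly maximum likelihood estimation.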
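The link between minimizing relative entropy and maximum likelihood can be illustrated with a coin-flip example. This is a sketch under stated assumptions: the samples are made up, the model q is a Bernoulli distribution with parameter `theta`, and the search is a coarse grid rather than a real optimizer.

```python
import math

def kl_divergence(p, q):
    """Relative entropy D(p || q) in nats for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical coin flips (1 = heads): 7 heads out of 10
samples = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]
n = len(samples)
p_hat = sum(samples) / n
empirical = [1 - p_hat, p_hat]  # empirical distribution over (tails, heads)

def avg_log_likelihood(theta):
    """Average log-likelihood of the samples under Bernoulli(theta)."""
    return sum(math.log(theta if s == 1 else 1 - theta) for s in samples) / n

grid = [i / 100 for i in range(1, 100)]
theta_kl = min(grid, key=lambda t: kl_divergence(empirical, [1 - t, t]))
theta_mle = max(grid, key=avg_log_likelihood)
print(theta_kl, theta_mle)

# Relative entropy is not symmetric
print(kl_divergence([0.5, 0.5], [0.9, 0.1]), kl_divergence([0.9, 0.1], [0.5, 0.5]))
```

On this grid both criteria select the same parameter, the sample frequency of heads, and the last line shows that swapping the two arguments changes the value.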

1.5 Mutual information

The mutual information of two random variables X and Y is defined as the relative entropy between their joint distribution p(x,y) and the product of their marginal distributions p(x)p(y).

It can also be viewed as the difference between H(Y) and H(Y|X).

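Expression:

$$I(X,Y) = D\big(p(x,y)\,\|\,p(x)p(y)\big) = \sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}$$

or:

$$I(X,Y) = H(Y) - H(Y|X)$$

or:

$$I(X,Y) = H(X) + H(Y) - H(X,Y)$$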

Mutual information measures the "distance" between the joint distribution and the product of the marginal distributions; if X and Y are independent, the mutual information is 0.

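Derivation of the second form, $I(X,Y) = H(Y) - H(Y|X)$:

$$
\begin{aligned}
I(X,Y) &= \sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)} \\
&= \sum_{x,y} p(x,y) \log \frac{p(y|x)}{p(y)} \\
&= -\sum_{x,y} p(x,y) \log p(y) + \sum_{x,y} p(x,y) \log p(y|x) \\
&= -\sum_{y} p(y) \log p(y) - H(Y|X) \\
&= H(Y) - H(Y|X)
\end{aligned}
$$

Derivation of the third form, $I(X,Y) = H(X) + H(Y) - H(X,Y)$, by substituting $H(Y|X) = H(X,Y) - H(X)$:

$$I(X,Y) = H(Y) - \big(H(X,Y) - H(X)\big) = H(X) + H(Y) - H(X,Y)$$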
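The three expressions for mutual information can be compared numerically. The following sketch uses a made-up joint distribution over two binary variables; the function names are illustrative assumptions.

```python
import math
from collections import defaultdict

def H(probs):
    """Entropy in bits of an iterable of probabilities."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def marginals(joint):
    """Return the marginal distributions p(x) and p(y) of a joint table."""
    p_x, p_y = defaultdict(float), defaultdict(float)
    for (x, y), p in joint.items():
        p_x[x] += p
        p_y[y] += p
    return p_x, p_y

def mutual_information(joint):
    """I(X,Y) as the relative entropy between p(x,y) and p(x)p(y)."""
    p_x, p_y = marginals(joint)
    return sum(p * math.log2(p / (p_x[x] * p_y[y]))
               for (x, y), p in joint.items() if p > 0)

# Hypothetical dependent joint distribution
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
p_x, p_y = marginals(joint)
h_x, h_y, h_xy = H(p_x.values()), H(p_y.values()), H(joint.values())

mi_kl = mutual_information(joint)   # definition via relative entropy
mi_cond = h_y - (h_xy - h_x)        # H(Y) - H(Y|X)
mi_sum = h_x + h_y - h_xy           # H(X) + H(Y) - H(X,Y)

# Independent case: joint equals the product of the marginals
independent = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}
mi_independent = mutual_information(independent)

print(mi_kl, mi_cond, mi_sum, mi_independent)
```

The first three values agree up to rounding, and the independent table gives a mutual information of 0.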

1.6 Venn diagram

2 Decision tree learning algorithm

2.1 Information gain

When the probabilities in the entropy and the conditional entropy are estimated from data (in particular by maximum likelihood estimation), the resulting quantities are called the empirical entropy and the empirical conditional entropy.

The information gain measures how much knowing feature A reduces the uncertainty about the class X.

Definition: the information gain g(D, A) of feature A on the training data set D is the difference between the empirical entropy H(D) of D and the empirical conditional entropy H(D|A) of D given A, namely

$$g(D,A) = H(D) - H(D|A)$$

Clearly, this is the mutual information between the training data set D and the feature A.

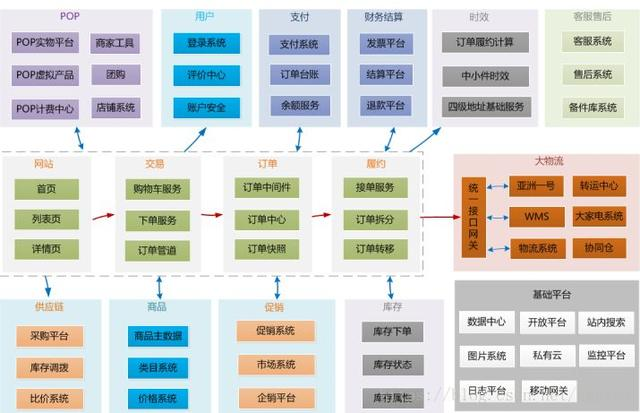
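As a small worked example of the definition g(D, A) = H(D) - H(D|A), the sketch below computes the information gain of two features on a tiny made-up data set. The feature names, values, and function names are hypothetical, and probabilities are estimated by relative frequencies.

```python
import math
from collections import Counter, defaultdict

def empirical_entropy(labels):
    """H(D): entropy in bits of the class frequencies in `labels`."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(feature_values, labels):
    """g(D, A) = H(D) - H(D|A) for one feature column."""
    n = len(labels)
    groups = defaultdict(list)
    for a, y in zip(feature_values, labels):
        groups[a].append(y)
    # H(D|A): entropy of each subset, weighted by the subset's share of D
    h_cond = sum(len(g) / n * empirical_entropy(g) for g in groups.values())
    return empirical_entropy(labels) - h_cond

# Hypothetical training data: two features and a class label
outlook = ["sunny", "sunny", "rainy", "rainy", "sunny", "rainy"]
windy = ["yes", "no", "yes", "no", "yes", "no"]
play = ["yes", "yes", "no", "no", "yes", "no"]

gain_outlook = information_gain(outlook, play)
gain_windy = information_gain(windy, play)
print(gain_outlook, gain_windy)
```

Here `outlook` separates the classes perfectly, so its gain equals H(D), while `windy` reduces the uncertainty only slightly.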

2.2 ID3, C4.5, CART

ID3: uses the decrease in information entropy as the criterion for selecting the test attribute. At each node, the attribute with the highest information gain among those not yet used for splitting is chosen as the splitting criterion, and the process is repeated until the generated decision tree classifies the training samples perfectly.

C4.5: an extension of the ID3 algorithm that uses the information gain ratio as the splitting criterion.

CART: builds the tree with the same top-down procedure, but uses the Gini coefficient as the splitting criterion.
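The ID3 procedure described above can be sketched in a few lines of Python. This is a minimal illustration under several assumptions: features are categorical, samples are dictionaries, the data set is made up, and there is no pruning or handling of feature values unseen during training. All function names are hypothetical.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    """Empirical entropy in bits of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def split_by(rows, labels, attr):
    """Group samples and labels by the value each sample has for `attr`."""
    parts = defaultdict(lambda: ([], []))
    for row, y in zip(rows, labels):
        parts[row[attr]][0].append(row)
        parts[row[attr]][1].append(y)
    return parts

def info_gain(rows, labels, attr):
    n = len(labels)
    cond = sum(len(ys) / n * entropy(ys)
               for _rows, ys in split_by(rows, labels, attr).values())
    return entropy(labels) - cond

def id3(rows, labels, attrs):
    """Return a nested dict {attr: {value: subtree}} or a class label (leaf)."""
    if len(set(labels)) == 1:          # node is pure: stop
        return labels[0]
    if not attrs:                      # no attribute left: majority vote
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))
    remaining = [a for a in attrs if a != best]
    return {best: {value: id3(sub_rows, sub_labels, remaining)
                   for value, (sub_rows, sub_labels)
                   in split_by(rows, labels, best).items()}}

def predict(tree, row):
    """Walk the tree; raises KeyError for a value not seen in training."""
    while isinstance(tree, dict):
        attr = next(iter(tree))
        tree = tree[attr][row[attr]]
    return tree

# Hypothetical training set
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "overcast", "windy": "yes"},
]
labels = ["yes", "no", "no", "no", "yes", "yes"]

tree = id3(rows, labels, ["outlook", "windy"])
print(tree)
```

On this data the root splits on `outlook`, which has the higher information gain, and only the "sunny" branch needs a further split on `windy`.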

3 Information gain ratio and Gini coefficient

3.1 Definition

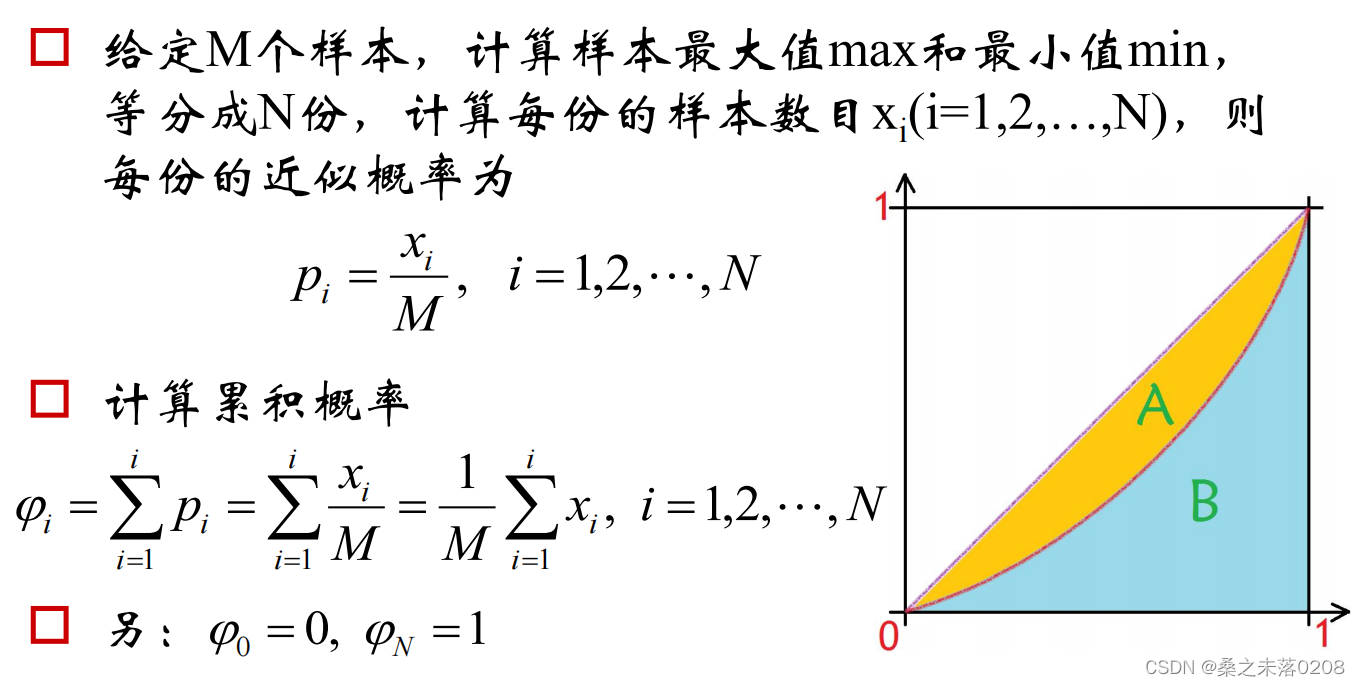
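The sketch below assumes the usual textbook definitions, which may differ in notation from the figure: the information gain ratio is the information gain divided by the entropy of the feature's own value distribution, and the Gini coefficient of a class distribution is 1 minus the sum of squared class probabilities. The data and function names are made up for illustration.

```python
import math
from collections import Counter, defaultdict

def entropy(values):
    """Empirical entropy in bits of any list of discrete values."""
    n = len(values)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(values).values())

def group_labels(feature_values, labels):
    groups = defaultdict(list)
    for a, y in zip(feature_values, labels):
        groups[a].append(y)
    return groups

def information_gain(feature_values, labels):
    n = len(labels)
    cond = sum(len(g) / n * entropy(g)
               for g in group_labels(feature_values, labels).values())
    return entropy(labels) - cond

def gain_ratio(feature_values, labels):
    """Assumed definition: gain divided by the entropy of the feature itself."""
    split_info = entropy(feature_values)
    return information_gain(feature_values, labels) / split_info if split_info > 0 else 0.0

def gini(labels):
    """Assumed definition: 1 - sum_k p_k^2 over the class frequencies."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def gini_after_split(feature_values, labels):
    """Weighted Gini coefficient of the subsets produced by a feature."""
    n = len(labels)
    return sum(len(g) / n * gini(g)
               for g in group_labels(feature_values, labels).values())

# Hypothetical data: an ID-like feature and a genuinely useful feature
labels = ["yes", "yes", "yes", "no", "no", "no"]
sample_id = [1, 2, 3, 4, 5, 6]
outlook = ["sunny", "sunny", "sunny", "rainy", "rainy", "rainy"]

print(information_gain(sample_id, labels), information_gain(outlook, labels))
print(gain_ratio(sample_id, labels), gain_ratio(outlook, labels))
print(gini(labels), gini_after_split(outlook, labels))
```

Both features have the same information gain here, but the gain ratio penalizes the ID-like feature for having many distinct values, which is the motivation for using the ratio in C4.5.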

3.2 Discussion of the Gini coefficient

First definition

The second definition
